Recap of SuperComputing 2023

Acceleration everywhere: HPC, AI, I/O and, more generally, next-gen workloads

By Philippe Nicolas | November 21, 2023 at 2:03 pm

SuperComputing 23, the international conference for HPC, networking, storage, and analysis, took place last week in Denver, CO, for its 35th edition and, once again, it was a big success. The numbers speak for themselves: 425+ exhibitors from 30 countries and 14,000+ attendees, a new record that beats the previous one set in 2019, according to the organizers.

The show floor featured a few very large booths, such as those of AWS, HPE, Microsoft, Penguin and Weka, followed by ArcCompute, Dell, Eviden, Google, Intel, Lenovo, NextSilicon, Pure Storage, Supermicro and Vast Data. This clearly marks a return to the trend visible before Covid.

The theme of the year was acceleration in all its forms and at every stage, for HPC, AI, storage, I/O and more, to support all kinds of demanding workloads.

On the storage side, as we have mentioned a few times already, AI means two things: AI storage, i.e., storage designed and optimized for AI, and AI for or in storage, with the specific addition and integration of AI components into software and hardware solutions. The floor was full of both kinds of solutions.

We saw and met many vendors. This is clearly the place to be, and we were even surprised by the presence of some of them.

Our first impression was the omnipresence of Nvidia, which had no booth of its own but whose cards, machines and systems were visible at plenty of booths. The company took advantage of the show to unveil its HGX H200 platform, based on the Nvidia Hopper architecture and the Nvidia H200 Tensor Core GPU. The company couples the H200 with HBM3e memory, which is 50% faster than current HBM3 and delivers a total of 10TB/sec of combined bandwidth. The H200 can use up to 141GB of memory at 4.8TB/s, nearly double the capacity and 2.4x more bandwidth compared with the A100. This new processor will be available in HGX H200 server boards in 4- and 8-way configurations, compatible with both the hardware and software of HGX H100 systems. It is also available in the Nvidia GH200 Grace Hopper Superchip with HBM3e, announced last August. According to the spec, "an 8-way HGX H200 provides over 32 petaflops of FP8 deep learning compute and 1.1TB of aggregate high-bandwidth memory for the highest performance in generative AI and HPC applications".

In parallel, at Microsoft Ignite, Nvidia announced a Generative AI Foundry Service on Microsoft Azure for enterprises and startups worldwide. It combines a collection of Nvidia AI Foundation Models, the Nvidia NeMo framework and tools, and Nvidia DGX™ Cloud AI supercomputing services. The company also promoted several other partnerships during SC.

But Nvidia was not alone: several new processor and system vendors were present, such as Groq, NextSilicon, SambaNova and Cerebras, and some of them announced new processors dedicated to AI or HPC as well.

GPU or AI cloud providers were also present, with Lambda, CoreWeave and Vultr in addition to AWS, Google, Microsoft and Oracle, among others. They confirmed once again that parallel file systems are widely adopted for AI storage, even if we also saw a few NFS flavors. As expected at an HPC conference, parallel file storage was highly visible, with BeeGFS from ThinkParQ, DDN, IBM, Lustre, Panasas, Weka, and pNFS promoted by Hammerspace, which unveiled a new AI reference architecture powered by the industry-standard pNFS. IBM displayed its latest Storage Scale System 6000, which delivers impressive performance numbers. This overview wouldn't be complete without mentioning Hammerspace, Pure Storage, Quantum, Qumulo, Tuxera and Vast Data on the NAS side. Tuxera continues to penetrate the market with its high-performance SMB stack supporting RDMA (SMB Direct), used by players such as IBM, StorONE, Croit and Weka, to name a few.

And of course, Nvidia GPUDirect is now integrated by almost all players. Pure Storage confirmed that the upcoming Purity operating software release, due in January, will offer this integration, which is not yet available with AIRI. We also have to mention DAOS, as the Linux Foundation officially launched a dedicated industry body to sustain this initiative in partnership with Intel, Argonne National Labs, Enakta Labs, Google and HPE. The IO500 list published at the same time shows several DAOS-powered sites, with Argonne the big winner, along with some interesting confirmations from BeeGFS, DDN, Huawei, IBM and Weka. As mentioned, HPE had a large booth, as it acquired Cray to sustain its HPC ambitions.

DDN put strong emphasis on Infinia, its new high-performance, high-end object storage solution, announced just a few days before the conference. We should also mention Scaleway's AI launch the same week in Paris, during its AI-pulse event, built on an Nvidia SuperPOD coupled with DDN Exascaler, as recommended by Nvidia. It confirms once again that AI is the new HPC.

Closely associated with HPC and AI, cooling systems had a real place at the show, as these computing environments push classic temperature control approaches to their limits.

Quantum computing had its own dedicated zone.

Covering fast I/O as well as memory expansion, pooling and fabric modes, CXL had a dedicated pavilion with several players such as Astera Labs, Intel, IntelliProp, Microchip, Siemens, UnifabriX and Zeropoint. CXL was also visible elsewhere, for instance at the Panmnesia, Lightelligence and SK Hynix booths. A few weeks ago, we revealed that Broadcom had acquired Elastics.cloud last September, so we should expect a solution from the connectivity giant soon. We should also mention Enfabrica, a young player in I/O acceleration that plans to release its solution in H1 2024.

Liqid took advantage of the show to promote its full disaggregation story, riding the CXL wave with XConn, and of course a new solution around the Nvidia L40S offered by Dell and Fujitsu.

Graid was also highly visible at various booths, the result of a clear market strategy and a confirmation of its solution's value. It demonstrated strong performance results and partner adoption, and more news from the company should come soon.

Also promoting a GPU-based erasure coding storage controller, the alternative storage vendor Nyriad had a significant booth this year to present its recent STaaS offering and key deployments in compute-intensive environments with parallel FS and object storage software partners.

We had a real surprise, and a positive one, with the presence of Nimbus Data and its significant booth. Good for them.

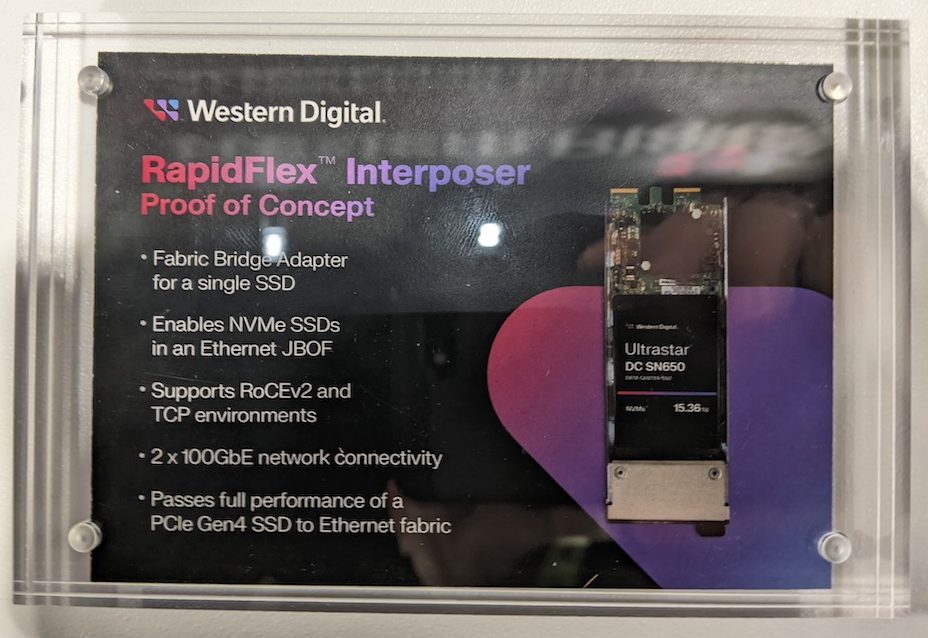

WDC and Seagate were there as well, with smaller booths displaying their capacity and performance product lines. NVMe was obviously a topic widely integrated and accepted by players across all their solutions. WDC showed a RapidFlex Interposer proof of concept to connect NVMe SSDs to an Ethernet JBOF, supporting RoCEv2 and TCP.

Several data management players were also present, such as Arcitecta, Atempo, Bacula, CunoFS, DeepSpace Storage Systems, Grau Data, Hammerspace, iRODS, PoINT (at the IBM booth), QStar, Spectra Logic and Starfish. S3-to-tape is a key topic: PoINT works with IBM on Diamondback, while QStar and Grau Data work to extend Hammerspace's data center footprint. Spectra Logic had a reasonable presence, sharing information about its large-scale tape libraries and data management solutions. On the tape side, beyond IBM, Quantum and Spectra Logic, Fujifilm, as a worldwide leader in tape manufacturing, holds a strategic position.

The next SuperComputing will take place in Atlanta, GA, from November 17 to 22, 2024.
