VergeIO Introduces VergeIQ On-Premises AI for Every Enterprise

Enabling enterprises and research institutions to securely, efficiently, and easily deploy and manage private AI environments

This is a Press Release edited by StorageNewsletter.com on June 4, 2025 at 2:02 pm.

VergeIO, Inc. announced VergeIQ, an integrated enterprise AI infrastructure solution enabling enterprises and research institutions to securely, efficiently, and easily deploy and manage private AI environments.

VergeIQ is not a bolt-on or standalone AI stack. It is seamlessly integrated as a core component of VergeOS, enabling enterprises to rapidly deploy AI infrastructure and capabilities within their existing data centers in minutes, rather than months.

“With VergeIQ, we’re removing the complexity and hurdles enterprises face when adopting AI,” said Yan Ness, CEO, VergeIO. “Organizations want to leverage the power of AI for competitive advantage without losing control of their most sensitive data. VergeIQ provides exactly that – enterprise-ready AI fully integrated within VergeOS, entirely under your control.”

Scott Sinclair, practice director, infrastructure, cloud, and DevOps segment, Enterprise Strategy Group (ESG), added: “AI has quickly become a strategic priority across every industry, but organizations encounter significant challenges around infrastructure complexity and data governance. VergeIQ directly addresses these pain points by making private, secure AI deployment achievable for enterprises of all sizes. This innovation will help drive the next wave of enterprise AI adoption.”


Integrated Enterprise AI Infrastructure

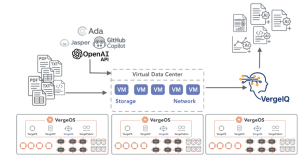

VergeIQ is designed for enterprises needing secure AI capabilities, data privacy, and near-bare-metal performance. Customers can select and deploy various Large Language Models (LLMs) – such as Llama, Mistral, and Falcon – and immediately begin using them on their own data within VergeOS's secure, tenant-aware infrastructure.

Unlike traditional AI stacks that rely on complex third-party infrastructure or GPU virtualization tools such as NVIDIA vGPU, VergeIQ provides native GPU pooling and clustering, eliminating external licensing complexity. This native integration ensures dynamic, efficient GPU resource utilization across virtual data centers.
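VergeIO does not describe how its GPU pooling decides where a model runs. As a hypothetical illustration of the general idea of pooling GPUs across nodes (not VergeIQ's actual scheduler), the sketch below treats every GPU in a cluster as one shared pool and places each model on the GPU whose free memory fits it most tightly (best-fit), falling back to CPU when no GPU has room. The `Gpu` class, the `place_models` function, the node and model names, and all memory figures are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Gpu:
    node: str        # host the card is installed in (hypothetical)
    name: str        # card identifier on that host (hypothetical)
    free_gb: float   # unallocated memory, in GB


def place_models(models: dict[str, float], pool: list[Gpu]) -> dict[str, str]:
    """Hypothetical best-fit placement of models onto a cluster-wide GPU pool.

    `models` maps a model name to its memory need in GB. Larger models are
    placed first; each goes to the GPU with the least free memory that still
    fits it. Models that fit on no GPU fall back to "cpu". Note: this mutates
    `free_gb` on the GPUs in `pool` to reserve memory.
    """
    placement: dict[str, str] = {}
    for model, need in sorted(models.items(), key=lambda kv: kv[1], reverse=True):
        candidates = [g for g in pool if g.free_gb >= need]
        if not candidates:
            placement[model] = "cpu"  # no GPU on any node has room
            continue
        best = min(candidates, key=lambda g: g.free_gb)  # tightest fit
        best.free_gb -= need  # reserve memory on the chosen card
        placement[model] = f"{best.node}/{best.name}"
    return placement


if __name__ == "__main__":
    pool = [Gpu("node-a", "gpu0", 24.0), Gpu("node-b", "gpu0", 48.0)]
    print(place_models({"model-a": 40.0, "model-b": 15.0, "model-c": 15.0}, pool))
```

Because the pool spans both nodes, the large model lands on whichever card can hold it regardless of host; a real scheduler would also weigh factors this sketch ignores, such as interconnect bandwidth, tenant quotas, and model eviction.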

Key Highlights of VergeIQ:

- Private, Secure Deployment: Fully on-premises, air-gapped, or disconnected deployments ensuring total data sovereignty, compliance, and security.

- Rapid LLM Deployment: Instantly deploy popular pre-trained LLMs, including Llama, Falcon, OpenAI, Claude, and Mistral, without complex setup or custom training.

- OpenAI API Routing: A built-in, OpenAI-compatible API router simplifies integrating and interacting with diverse LLMs within your workflows.

- Vendor-Agnostic GPU Support: Utilize any standard GPU hardware, avoiding vendor lock-in and enhancing flexibility in infrastructure decisions.

- Dynamic GPU/CPU Orchestration: Automatically manage and optimize the loading and utilization of AI models across available GPU and CPU resources, maximizing infrastructure efficiency and scalability.

- GPU Sharing and Clustering: Dynamic, intelligent pooling and sharing of GPU resources across clusters to ensure optimal usage, performance, and cost-efficiency.

- Infrastructure Intelligence: Query IT infrastructure directly to extract actionable insights and speed up operational decision-making.
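The release does not document VergeIQ's endpoint paths or model identifiers. As a hedged illustration, assuming the router follows the common OpenAI Chat Completions convention (a POST to `/chat/completions` under a `/v1` base URL, with a JSON body containing `model` and `messages`), a client could build a request using only the Python standard library. The base URL, API key, model name, and the `build_chat_request` helper are hypothetical placeholders, not published VergeIQ values.

```python
import json
import urllib.request

# Hypothetical values: VergeIO has not published these; substitute your deployment's.
BASE_URL = "http://vergeiq.example.internal/v1"
API_KEY = "replace-me"


def build_chat_request(model: str, prompt: str,
                       base_url: str = BASE_URL,
                       api_key: str = API_KEY) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style Chat Completions request."""
    payload = {
        "model": model,  # assumed: the router picks the backend LLM by this name
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request("example-model", "Summarize last night's backup logs.")
    print(req.get_method(), req.full_url)
    # To send it against a live OpenAI-compatible endpoint:
    # with urllib.request.urlopen(req) as resp:
    #     print(json.load(resp)["choices"][0]["message"]["content"])
```

The appeal of an OpenAI-compatible router is that switching to a different locally hosted model would, under this assumption, only change the `model` string, while existing client code and SDKs that speak the OpenAI format keep working.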

VergeIQ will be available as an upgrade to VergeOS for all existing customers, providing full enterprise AI capabilities immediately after upgrading.

Resources:

Upcoming webinar on June 12th at 1:00pm ET.

Blog: Introducing VergeIQ
