Migrate from Multiple On-Premises Data Sources to AWS with Komprise

Komprise’s approach to unstructured data simplifies the planning and movement of data, whether it’s a complete NAS/object migration or continuous movement of data to AWS cloud storage services.

This is a Press Release edited by StorageNewsletter.com on September 27, 2023 at 2:01 pm

By Steve Moore, information architect, Komprise, Inc., and Vivienne O’Keeffe, partner solutions architect, AWS

Do you have multiple data sources like NAS, file, and object stores at your on-premises data center? Over time, customers can accumulate different technologies as their needs change and grow. This increases the cost and complexity of managing data, and it inhibits the ability to consolidate or migrate data for savings and performance.

With on-premises data center space and costs at a premium, many customers are looking to the cloud for savings, scalability, and efficiencies.

To start, you need to identify which datasets to move and the appropriate destination for each. This requires a thorough analysis and understanding of your data. Other considerations include the time it takes to move data and having a proven, tested network and the proper security settings to prevent errors, delays, and exposure. Data migrations must also minimize the impact on end users and businesses alike.

The Komprise approach to unstructured data simplifies the planning and the movement of data – whether it’s a complete NAS/object migration or a continuous movement of data to Amazon Web Services (AWS) cloud storage services.

In this post, we’ll review a set of tools to help you analyze and understand your data. With this knowledge, you can determine which AWS storage services best match each dataset’s performance and cost needs, and build a transition plan to migrate data to where it should be: near the resources it requires. This gives you confidence that the right data is in the right place the first time.

Komprise is an AWS Migration and Modernization Competency Partner and AWS Marketplace Seller that’s a leader in analytics-driven data management software, working across both NAS and Amazon Simple Storage Service (Amazon S3) storage.

Right data, right place with file analysis

Komprise supports moving data to AWS file system services such as Amazon Elastic File System (Amazon EFS) and Amazon FSx. Additional savings are possible by consolidating different NAS/object data systems or by automatically tiering inactive NAS data to Amazon S3. All of this functionality is available in one interface.

It may be tempting to just lift and shift everything to the cloud. But will you be getting the right data to the right services with the appropriate performance and price points?

With Komprise, you can:

- Analyze your data immediately across Network File System (NFS), SMB, object, and NAS storage.

- Migrate and/or transparently tier data to the AWS storage service and location best suited for each dataset.

- Use intelligent lifecycle management so that, as data needs change, Komprise can move your data again and you’re never overpaying for storage or under-delivering on performance and user experience.

Understanding your data is the first step in selecting the appropriate AWS storage service. Komprise gives insight into data type, volume, file count, owners, access patterns, and more. This analysis helps determine whether the data should go to Amazon EFS, Amazon FSx for Windows File Server, or Amazon FSx for NetApp ONTAP, or be copied or tiered to Amazon S3 for long-term storage, which can deliver significant storage savings.
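Komprise builds this picture from its own index. For a rough, do-it-yourself view of a single share, a minimal Python sketch like the one below walks a mounted path and totals file count and bytes per extension and per owner (numeric UID). The function name `profile_tree` and the mount path in the usage section are hypothetical; this is not a Komprise API, and `st_uid` is only meaningful on POSIX systems.

```python
import os
from collections import Counter, defaultdict
from pathlib import Path


def profile_tree(root):
    """Return ({extension: (file_count, bytes)}, {owner_uid: bytes}) for a tree."""
    counts = Counter()
    ext_bytes = defaultdict(int)
    owner_bytes = defaultdict(int)
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                st = os.lstat(path)  # do not follow symlinks
            except OSError:
                continue  # unreadable entries are skipped in this sketch
            ext = Path(name).suffix.lower() or "<none>"
            counts[ext] += 1
            ext_bytes[ext] += st.st_size
            owner_bytes[st.st_uid] += st.st_size  # always 0 on Windows
    by_ext = {ext: (counts[ext], ext_bytes[ext]) for ext in counts}
    return by_ext, dict(owner_bytes)


if __name__ == "__main__":
    by_ext, by_owner = profile_tree("/mnt/nas/share01")  # hypothetical mount point
    for ext, (n, size) in sorted(by_ext.items(), key=lambda kv: -kv[1][1]):
        print(f"{ext:10} {n:>10} files {size / 1e9:>10.2f} GB")
```

Sorting by bytes rather than file count surfaces the extensions that dominate capacity, which is usually what drives the choice of destination service.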

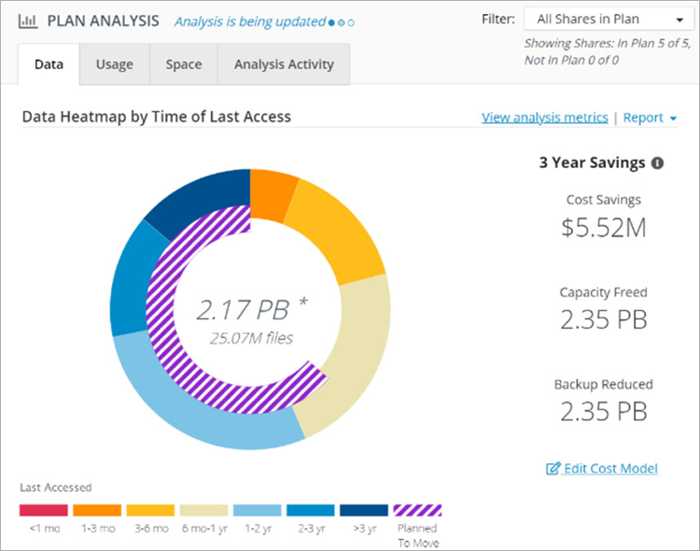

Figure 1 – Komprise data donut heatmap

Komprise also offers FinOps capabilities to help you understand storage costs and compare plans for adding new AWS storage services to determine the best path forward.
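The arithmetic behind such a comparison is simple: multiply the capacity planned for each tier by that tier’s unit price and sum. The sketch below is not Komprise’s FinOps model, and every price in it is a hypothetical placeholder rather than a real AWS rate; it also ignores request, retrieval, and data transfer fees, which a real comparison must include.

```python
# All prices below are HYPOTHETICAL placeholders (cost per GB-month) for
# illustration only; substitute your own NAS costs and current AWS pricing.
PRICE_PER_GB_MONTH = {
    "on_prem_nas": 0.10,
    "cloud_file": 0.08,
    "cloud_archive": 0.004,
}


def monthly_cost(plan):
    """plan maps a tier name to GB stored there; returns the monthly total."""
    return sum(PRICE_PER_GB_MONTH[tier] * gb for tier, gb in plan.items())


def compare(current, proposed):
    """Return (current_cost, proposed_cost, fractional_savings)."""
    now, later = monthly_cost(current), monthly_cost(proposed)
    return now, later, (now - later) / now


if __name__ == "__main__":
    total_gb = 100_000  # 100 TB, hypothetical
    current = {"on_prem_nas": total_gb}
    # Assume 20% of the data is active and 80% is cold enough to tier.
    proposed = {"cloud_file": 0.2 * total_gb, "cloud_archive": 0.8 * total_gb}
    now, later, savings = compare(current, proposed)
    print(f"current {now:,.0f}  proposed {later:,.0f}  savings {savings:.0%}")
```

With these made-up inputs the tiered plan costs roughly a fifth of the all-NAS plan; the point is the shape of the calculation, not the numbers.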

Figure 2 – See potential cost savings with built-in FinOps

Analyze to prevent migration issues

You can leverage Komprise to identify potential issues up front, before moving data. For example, identify files and/or directories with restricted access or resolution issues. Are the datasets too large for the destination storage service in file count or capacity? Do you have an exceedingly large number of tiny files or an unusually large number of empty directories? The last place to learn about these issues is in the middle of a migration while everyone is watching.
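As a rough illustration of the checks described above, the following Python sketch flags directories that cannot be listed, files that cannot be read, tiny files, empty directories, and overly long paths. It is not how Komprise performs its analysis; the name `scan_for_issues` is hypothetical, and the `tiny_bytes` and `max_path_len` thresholds are assumptions to tune for your actual destination.

```python
import os


def scan_for_issues(root, tiny_bytes=4096, max_path_len=255):
    """Collect pre-migration red flags under root.

    tiny_bytes and max_path_len are illustrative thresholds, not limits of
    any particular AWS service; set them for your actual destination.
    """
    report = {"files": 0, "tiny_files": 0, "empty_dirs": [],
              "unreadable": [], "long_paths": []}

    def on_error(err):
        # os.walk calls this for directories it cannot list (e.g. EACCES).
        report["unreadable"].append(err.filename)

    for dirpath, dirnames, filenames in os.walk(root, onerror=on_error):
        if not dirnames and not filenames:
            report["empty_dirs"].append(dirpath)
        for name in filenames:
            path = os.path.join(dirpath, name)
            if len(path) > max_path_len:
                report["long_paths"].append(path)
            try:
                size = os.lstat(path).st_size
            except OSError:
                report["unreadable"].append(path)
                continue
            report["files"] += 1
            if size < tiny_bytes:
                report["tiny_files"] += 1
    return report
```

A high ratio of `tiny_files` to `files`, or a long `empty_dirs` list, is an early sign that a share belongs in a more complex migration wave.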

Comprehensive data visibility and analysis also help you make nuanced decisions about data use and placement instead of taking a one-size-fits-all approach. Rather than shifting 2 PB of unstructured data directly to another platform, consider the value and ownership costs of that data. It’s often advantageous to tier inactive or cold data first to archival storage such as Amazon S3 Glacier.
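To gauge how much data could be tiered first, you can select files that have not been read or modified within a cutoff period. The Python sketch below is a hypothetical helper (`tiering_candidates` is not a Komprise function). It uses the later of access time and modification time because many NAS volumes disable or relax access-time updates, which makes access time alone unreliable. The 365-day default is an arbitrary example.

```python
import os
import time


def tiering_candidates(root, days=365, now=None):
    """Return (cold_paths, cold_bytes, total_bytes) for files idle > `days`.

    "Idle" means neither accessed nor modified since the cutoff; atime is
    combined with mtime because atime updates are often disabled on NAS.
    """
    now = time.time() if now is None else now
    cutoff = now - days * 86400
    cold, cold_bytes, total_bytes = [], 0, 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                st = os.stat(path)
            except OSError:
                continue  # broken links and permission errors are skipped
            total_bytes += st.st_size
            if max(st.st_atime, st.st_mtime) < cutoff:
                cold.append(path)
                cold_bytes += st.st_size
    return cold, cold_bytes, total_bytes
```

Dividing `cold_bytes` by `total_bytes` gives the fraction of capacity that could move to archival storage before the main migration starts.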

Build a migration plan

Creating a data management plan before migrating helps avoid errors, delays, and cost overruns while meeting overall unstructured data management goals and migration objectives.

A data management migration plan should address questions such as:

- What are common data types and workloads?

- What are topology requirements?

- Which storage service to choose?

- How to effectively move data?

Komprise Intelligent Data Management creates a central index of all your data for holistic visibility. Unified visibility helps IT collaborate with other departments and with stakeholders from security, legal, and compliance to make better decisions, tune performance, and save money.

Setting up a plan starts with identifying the easy datasets to move and then building to the more complex assets. This process builds experience and confidence and confirms the cutover processes as you progress to harder migrations.

Review your data and organize your migration plan around these types of data, building from easy to more complex:

- Tiering type: This is typically inactive data that no one is accessing, which can move to Amazon S3 for long-term storage. Komprise’s patented Transparent Move Technology (TMT) leaves a symbolic link in place, so end users never have to hunt down their data; from their view, the location never changes.

- Easy type: These are fairly static shares with few users that you can migrate quickly in one or two iterations with short cutovers.

- Moderate type: These shares are moderately active with average file sizes of ~1MB. They require minimal migration time but may require scheduling a specific cutover window.

- Active type: Active data changes daily and can have a significant impact on data verification, operations, costs, and final cutover time. These types of migrations may require multiple iterations and longer final cutover times.

- Complex type: Datasets with dependencies across multiple shares that must migrate in unison, shares containing many small files, or shares with many empty directories add significant overhead and risk. IT needs advanced coordination, and possibly several iterations and longer cutover windows, to move these datasets.
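The categories above can be turned into a first-pass ordering of shares. The Python sketch below is one hypothetical way to encode them; the `ShareStats` fields and every numeric threshold are assumptions for illustration, not Komprise’s classification rules. It checks for inactivity first, because inactive shares are tiered rather than migrated.

```python
from dataclasses import dataclass

# Order in which the share types above are tackled, easiest first.
MIGRATION_ORDER = ["tiering", "easy", "moderate", "active", "complex"]


@dataclass
class ShareStats:
    name: str
    days_since_last_access: int
    daily_change_fraction: float   # fraction of bytes changed per day
    avg_file_size_bytes: int
    empty_dir_fraction: float      # empty directories / all directories
    has_dependencies: bool         # must move in unison with other shares


def classify(s: ShareStats) -> str:
    """Bucket a share; every threshold here is a hypothetical example."""
    if s.days_since_last_access > 365:
        return "tiering"            # inactive: tier rather than migrate
    if (s.has_dependencies or s.avg_file_size_bytes < 64 * 1024
            or s.empty_dir_fraction > 0.25):
        return "complex"
    if s.daily_change_fraction >= 0.01:
        return "active"
    if s.daily_change_fraction >= 0.001:
        return "moderate"
    return "easy"


def plan(shares):
    """Sort shares so easier types come first (stable within a type)."""
    return sorted(shares, key=lambda s: MIGRATION_ORDER.index(classify(s)))
```

In practice the inputs would come from a data analysis pass, and the thresholds would be adjusted after the first few easy migrations show how your environment behaves.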

Figure 3 – Migrate PBs of data (SMB, NFS, Dual)


Know your topology

Network and security configurations can have enormous consequences for migrations. Will data move only from on-premises to the cloud, between sites or regions, or from other clouds to AWS? It’s critical to know the data path, or network topology, to understand the obstacles contributing to round-trip latency and bandwidth issues.
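Latency matters because a single TCP stream can carry at most one window of data per round trip, so its throughput is bounded by window size divided by round-trip time (RTT). The Python sketch below applies that bound to estimate transfer time. The 10 Gbps link, 40 ms RTT, 4 MiB window, and 0.8 efficiency factor are all assumptions, and the function names are hypothetical.

```python
def stream_limit_bps(window_bytes, rtt_s):
    """Max throughput of one TCP stream: window size / round-trip time."""
    return window_bytes * 8 / rtt_s


def transfer_days(data_bytes, link_bps, rtt_s, window_bytes, streams,
                  efficiency=0.8):
    """Rough wall-clock days to move data_bytes over a WAN path.

    efficiency is an assumed allowance for protocol overhead, retries and
    competing traffic; measure your own path rather than trusting 0.8.
    """
    aggregate = min(link_bps, streams * stream_limit_bps(window_bytes, rtt_s))
    return data_bytes * 8 / (aggregate * efficiency) / 86_400


if __name__ == "__main__":
    two_pb = 2e15
    window = 4 * 1024 * 1024   # assumed 4 MiB TCP window
    for streams in (1, 16):
        days = transfer_days(two_pb, 10e9, 0.040, window, streams)
        print(f"{streams:>2} stream(s): {days:,.0f} days")
```

Under these assumptions, 2 PB over one stream would take about 276 days, while 16 parallel streams saturate the 10 Gbps link and finish in about 23 days. This is why parallelism and a clean network path matter as much as raw bandwidth.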

Security technologies like firewalls, load balancers, and particularly antivirus and IDS/IPS can negatively impact migrations when not configured to compensate for the increased workloads.

Just because your storage is fast and your network is fast doesn’t mean migrations will be fast. Many issues hide in plain sight, and a thorough stress test can bring them to light. The purpose of understanding network topology is to find, ahead of time, the bottlenecks that could slow down or stop a migration altogether.

The Komprise Assessment of Customer Environment (ACE) simulates a series of data movement scenarios between your actual on-premises source systems and destination storage services across the wide-area network (WAN), such as Amazon EFS, Amazon FSx for Windows File Server, and Amazon FSx for NetApp ONTAP.

ACE performs an independent set of migration simulations and collects overall performance numbers to provide a baseline; it then surfaces potential performance losses to investigate. The results can improve the chances of a successful data migration.
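ACE is a Komprise service. To get a feel for the kind of measurement a stress test produces, the Python sketch below copies many small files and a few large ones to a destination directory and reports files per second and MB per second. Comparing the two usually exposes per-file overhead such as metadata round trips and antivirus scanning. The `DEST` environment variable and the file counts and sizes are assumptions; by default the script only exercises the local temp directory.

```python
import os
import shutil
import tempfile
import time


def make_files(directory, count, size):
    """Create `count` files of `size` random bytes in directory."""
    os.makedirs(directory, exist_ok=True)
    payload = os.urandom(size)
    for i in range(count):
        with open(os.path.join(directory, f"f{i:06d}.bin"), "wb") as f:
            f.write(payload)


def copy_throughput(src_dir, dst_dir):
    """Copy every file in src_dir to dst_dir; return (files_per_s, mb_per_s)."""
    os.makedirs(dst_dir, exist_ok=True)
    names = sorted(os.listdir(src_dir))
    total = 0
    start = time.perf_counter()
    for name in names:
        src = os.path.join(src_dir, name)
        shutil.copy2(src, os.path.join(dst_dir, name))
        total += os.path.getsize(src)
    elapsed = max(time.perf_counter() - start, 1e-9)  # avoid divide-by-zero
    return len(names) / elapsed, total / elapsed / 1e6


if __name__ == "__main__":
    # DEST is a hypothetical mount of the target file system (for example an
    # EFS or FSx share); it defaults to the local temp directory.
    dest = os.environ.get("DEST", tempfile.gettempdir())
    with tempfile.TemporaryDirectory() as src:
        make_files(os.path.join(src, "small"), 500, 4 * 1024)
        make_files(os.path.join(src, "large"), 2, 8 * 1024 * 1024)
        for kind in ("small", "large"):
            out = os.path.join(dest, f"stress-{kind}")
            fps, mbps = copy_throughput(os.path.join(src, kind), out)
            print(f"{kind:5}: {fps:,.0f} files/s, {mbps:,.1f} MB/s")
            shutil.rmtree(out)
```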

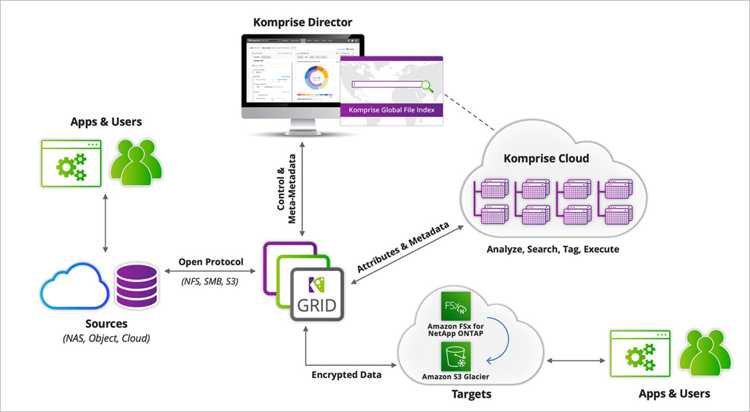

Figure 4 – Komprise distributed, scale-out architecture

Choose a frictionless data migration tool

Common free tools, such as Robocopy (built into Windows) and the open-source rsync, cost nothing but lack the performance, security, ease-of-use, and risk mitigation features of enterprise migration software. Is it worth taking a chance on your organization’s most prized assets?

For large-scale enterprise data migrations to the cloud, you need a solution that can:

- Efficiently run, monitor, and manage hundreds of data migrations across hybrid cloud storage.

- Identify the right files to migrate to maximize efficiency and reduce spend.

- Minimize network usage.

- Automatically retry if network or storage issues occur.

- Migrate with, or without, all file permissions and access controls intact.

- Maintain data integrity by conducting MD5 checksums on all files.
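Checksum verification compares a digest of every source file with a digest of its copy. The Python sketch below shows the idea with `hashlib.md5`, reading files in chunks so large files do not need to fit in memory. The function names are hypothetical, and this is not Komprise’s implementation. MD5 is used here only to detect accidental corruption, not as a security control.

```python
import hashlib
import os


def md5_of(path, chunk_size=1 << 20):
    """Hex MD5 digest of a file, read in 1 MiB chunks."""
    h = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def verify_tree(src_root, dst_root):
    """Return sorted relative paths whose destination copy is missing or differs."""
    mismatches = []
    for dirpath, _dirnames, filenames in os.walk(src_root):
        for name in filenames:
            src = os.path.join(dirpath, name)
            rel = os.path.relpath(src, src_root)
            dst = os.path.join(dst_root, rel)
            if not os.path.isfile(dst) or md5_of(src) != md5_of(dst):
                mismatches.append(rel)
    return sorted(mismatches)
```

An empty result means every source file has a byte-identical copy at the destination. Note that this check covers file contents only, not permissions or other metadata.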

Komprise Elastic Data Migration and Hypertransfer technologies maximize performance and throughput by automatically scaling according to the parallelism of your data across shares, directories, and files. Komprise can manage multiple migration activities running in parallel, and users can start, monitor, and administer active migrations from a single Komprise console.
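The general principle of exploiting file-level parallelism and retrying transient failures can be sketched with a thread pool. This is a generic illustration, not how Elastic Data Migration or Hypertransfer work internally. The function names, worker count, and backoff values are assumptions. `shutil.copy2` copies data, timestamps, and mode bits but not ownership or Windows ACLs. The sketch also retries every `OSError`, including non-transient ones such as a missing source file.

```python
import os
import shutil
import time
from concurrent.futures import ThreadPoolExecutor


def copy_with_retry(src, dst, attempts=3, base_delay=0.5):
    """Copy one file, retrying OSErrors with exponential backoff."""
    for attempt in range(1, attempts + 1):
        try:
            os.makedirs(os.path.dirname(dst), exist_ok=True)
            shutil.copy2(src, dst)  # data plus timestamps and mode bits
            return dst
        except OSError:
            if attempt == attempts:
                raise  # give up and surface the error to the caller
            time.sleep(base_delay * 2 ** (attempt - 1))


def parallel_copy(src_root, dst_root, workers=8, **retry_kwargs):
    """Copy a tree file by file using a thread pool; returns copied paths."""
    jobs = []
    for dirpath, _dirnames, filenames in os.walk(src_root):
        for name in filenames:
            src = os.path.join(dirpath, name)
            rel = os.path.relpath(src, src_root)
            jobs.append((src, os.path.join(dst_root, rel)))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(copy_with_retry, s, d, **retry_kwargs)
                   for s, d in jobs]
        return [f.result() for f in futures]
```

Threads suit this workload because file copies spend most of their time waiting on I/O. Empty directories are not recreated by this sketch.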

Key features of Komprise Elastic Data Migration include:

- Policy-based automation to move directories, files, and/or links from a source to a destination.

- At each step, Komprise checks data integrity and maintains all attributes, permissions, and access controls from the source.

- In an object migration, Komprise migrates the prefixes and metadata of each object.

- IT can perform a migration without restricting access to data so end users can keep working.

- Komprise uses an iterative process: it migrates everything once, then keeps up with and moves ongoing changes.

- Each iteration is like a trial of the final run, showing performance, number of files, and data transferred, along with time to complete, full data validation, and any errors that may need addressing.

- In the case of unexpected events, Komprise retries the iteration automatically, delivering a resilient migration process.

- Finally, it delivers full audit logs for compliance and regulatory reviews.
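The iterative approach relies on each pass moving only what changed since the previous one, so successive passes shrink until the final cutover pass is short. The Python sketch below is a simplified, hypothetical version of one pass. It treats a file as changed when its size or whole-second modification time differs. It does not propagate deletions, and it is not Komprise’s change-detection logic.

```python
import os
import shutil


def sync_iteration(src_root, dst_root):
    """One pass: copy files that are new or whose size/mtime differ.

    Returns the number of files copied. Deletions on the source are not
    propagated in this sketch.
    """
    copied = 0
    for dirpath, _dirnames, filenames in os.walk(src_root):
        for name in filenames:
            src = os.path.join(dirpath, name)
            dst = os.path.join(dst_root, os.path.relpath(src, src_root))
            s = os.stat(src)
            try:
                d = os.stat(dst)
                unchanged = (d.st_size == s.st_size
                             and int(d.st_mtime) == int(s.st_mtime))
            except FileNotFoundError:
                unchanged = False
            if not unchanged:
                os.makedirs(os.path.dirname(dst), exist_ok=True)
                shutil.copy2(src, dst)  # copy2 preserves mtime for the next pass
                copied += 1
    return copied
```

Running passes while users keep working, and then one last pass after they disconnect, mirrors the final-iteration cutover described below.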

A final iteration is required to ensure that all remaining changes have reached the destination. This requires disconnecting users and applications from the source so that all changes are pushed, migrated, and verified.

Once the final iteration completes, end users and applications can transition to the new destination. If done right, this may be as simple as changing the domain name system (DNS) and distributed file system (DFS) server configurations to point to the new destination.

Once migrations are complete, you can continue to use Komprise to analyze your environment and develop a robust, full data lifecycle management plan.

Conclusion

Cloud migrations are a team sport. While tools and metrics are important, don’t forget the value of bringing teams together across IT and business lines for shared data management accountability and risk management.

To get started with Komprise or schedule a demo, visit the Komprise website. You can also learn more about Komprise in AWS Marketplace.
