NetApp Insight 2025: NetApp Introduces Comprehensive Enterprise-Grade Data Platform for AI

Exabyte-scale AFX systems with disaggregated storage and AI Data Engine built with Nvidia deliver on AI vision

This is a Press Release edited by StorageNewsletter.com on October 14, 2025 at 2:02 pm

NetApp Inc., the intelligent data infrastructure company, unveiled visionary new products, strengthening its enterprise-grade data platform for AI innovation. As the era of AI shifts from initial pilots to mission-critical agentic applications, enterprises need AI-ready data on modern enterprise-grade data infrastructure to deliver the results needed for AI-driven businesses.

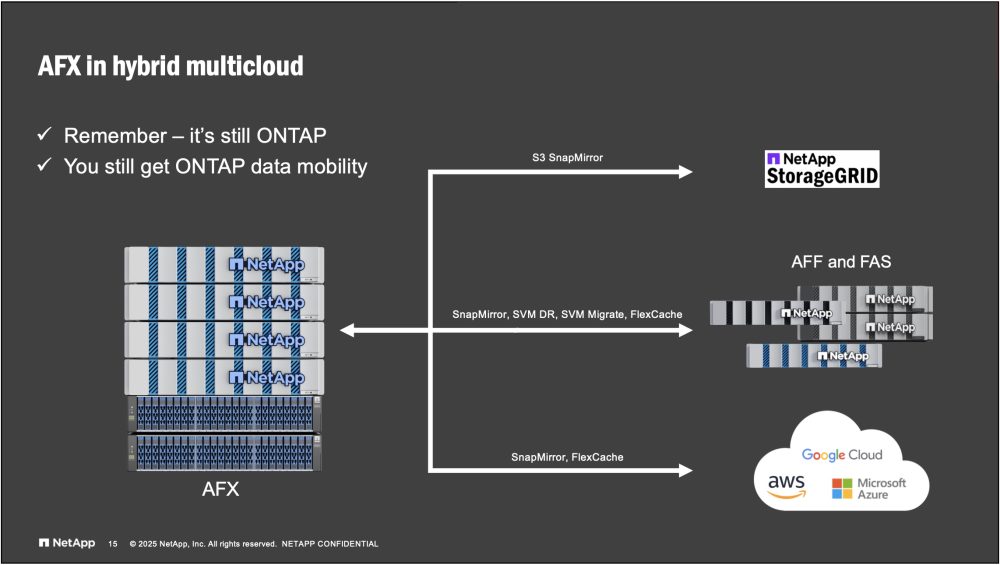

The new NetApp AFX decouples performance and capacity with a disaggregated NetApp ONTAP that runs on the new NetApp AFX 1K storage system. NetApp AI Data Engine is a secure, unified extension of ONTAP integrated with the Nvidia AI Data Platform reference design that helps organizations simplify and secure the entire AI data pipeline, all managed via a single, unified control plane. Together, these capabilities unify high-performance storage and intelligent data services into a single, secure, and scalable offering that accelerates enterprise AI retrieval-augmented generation (RAG) and inference across hybrid and multicloud environments. Customers will be able to access these products through direct purchase or through a subscription to NetApp Keystone STaaS. With NetApp AFX and the NetApp AI Data Engine, the NetApp data platform is able to ensure that all applicable data is immediately ready for AI.

“With the new NetApp AFX systems, customers now have a trusted, proven choice in on-premises enterprise storage built on a comprehensive data platform to rapidly propel AI innovation forward,” said Syam Nair, CPO, NetApp. “NetApp AI Data Engine enables customers to seamlessly connect their entire data estate across hybrid multicloud environments to build a unified data foundation. Enterprises can then dramatically accelerate their AI data pipelines by collapsing multiple data preparation and management steps into the integrated NetApp AI Data Engine, built with Nvidia accelerated computing and Nvidia AI Enterprise software complete with semantic search, data vectorization and data guardrails. The combination of NetApp AFX with AI Data Engine provides the enterprise resilience and performance built and proven over decades by NetApp ONTAP, now in a disaggregated storage architecture, and all still built on the most secure storage on the planet.”

To accelerate modern AI workloads, NetApp introduced new capabilities including:

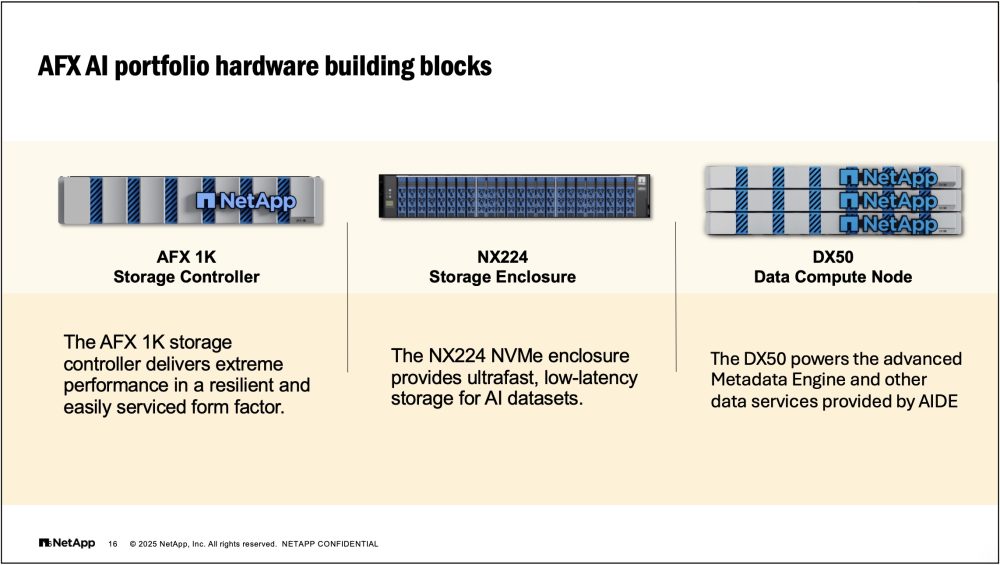

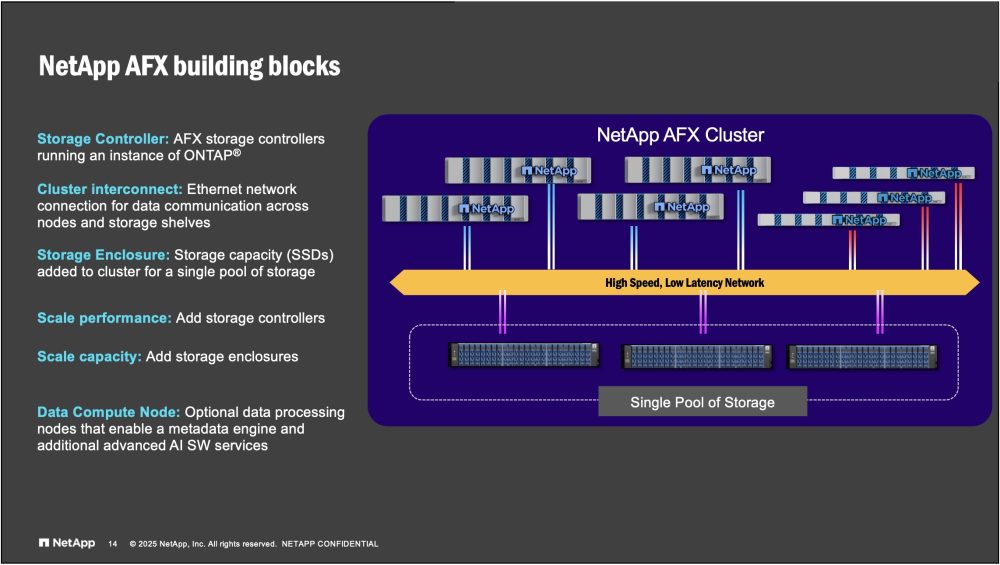

- NetApp AFX: NetApp AFX is an enterprise-grade disaggregated all-flash storage system built for demanding AI workloads. NetApp AFX is a powerhouse data foundation for AI factories. It is certified storage for Nvidia DGX SuperPOD supercomputing and powered by NetApp ONTAP, the industry-leading storage operating system trusted by tens of thousands of enterprise customers across industries to manage exabytes of data. AFX delivers the same robust data management and built-in cyber resilience that NetApp is known for, along with secure multi-tenancy and seamless integration across on-premises and cloud environments. AFX is designed for linear performance scaling up to 128 nodes with terabytes per second of bandwidth, exabyte-scale capacity, and independent scaling of performance and capacity. Optional DX50 data control nodes enable a global metadata engine for a real-time catalog of enterprise data and leverage Nvidia accelerated computing.

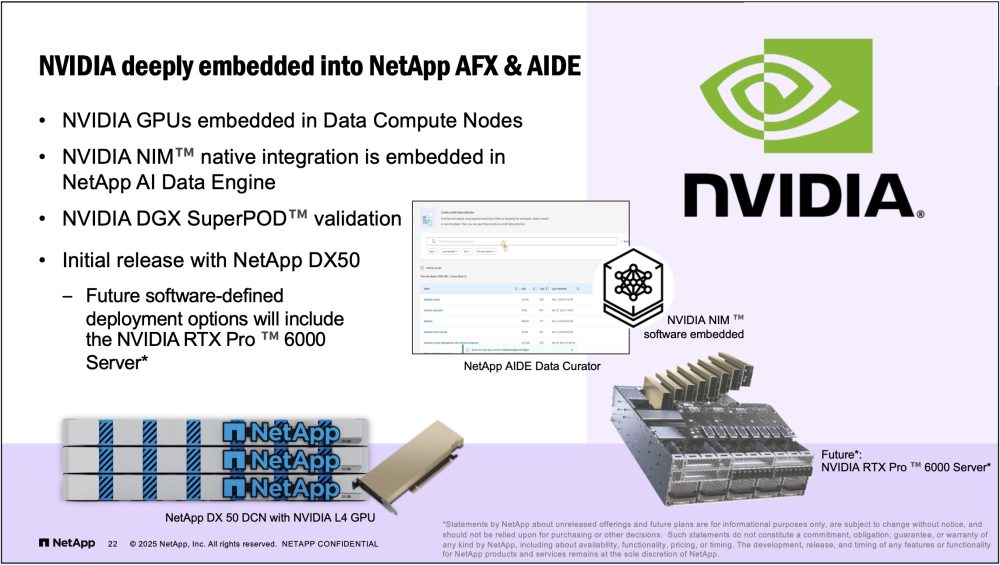

- NetApp AI Data Engine (AIDE): NetApp AIDE is a comprehensive AI data service designed to make AI simple, affordable, and secure. From data ingestion and preparation to serving GenAI applications, AIDE offers a global, up-to-date view of a customer’s entire NetApp data estate for fast searching and curation while seamlessly connecting their data to any model or tool across on-premises and public cloud. It automates data change detection and data synchronization, eliminating redundant copies and ensuring data is always current. Built-in guardrails follow data throughout its AI lifecycle, ensuring security and privacy. AIDE leverages the Nvidia AI Data Platform reference design (featuring Nvidia accelerated computing and Nvidia AI Enterprise software including Nvidia NIM microservices) for vectorization and retrieval, which joins advanced compression, fast semantic discovery, and secure, policy-driven workflows. By bringing AI to data in an integrated system, AIDE provides the efficiency, data clarity, and governance needed for enterprises to confidently adopt AI. NetApp AIDE will run natively within the AFX cluster on top of the optional DX50 data control nodes. Future ecosystem support includes the integration of Nvidia RTX PRO Servers featuring RTX PRO 6000 Blackwell Server Edition GPUs. NetApp AIDE accelerates customers’ AI journeys with simplicity, security, and efficiency.

- Object API for Seamless Access to Azure Data & AI Services: Customers can now access their Azure NetApp Files data through an Object REST API, available in public preview. This new capability means customers no longer need to move or copy file data into a separate object store to use it with Azure services. Instead, NFS and SMB datasets can be connected directly to Microsoft Fabric, Azure OpenAI, Azure Databricks, Azure Synapse, Azure AI Search, Azure Machine Learning, and more. Customers can analyze data, train AI models, enable intelligent search, and build modern applications on their existing ANF datasets, while continuing to rely on the enterprise performance and reliability of Azure NetApp Files.

- Enhanced Unified Global Namespace in Microsoft Azure: Enterprises can now seamlessly unify their global data estate across cloud and on-premises into Microsoft Azure with new FlexCache capabilities in Azure NetApp Files. The same capability can also extend their on-premises workloads such as Electronic Design Automation. This allows for data stored in other ONTAP-based storage in customer data centers or across multiple clouds to be instantly made visible and writeable in an ANF environment, but with data transferred granularly only when requested. This allows for a customer’s entire hybrid cloud data estate to be seamlessly accessed in Microsoft Azure. Additionally, enterprises can migrate data and snapshots effortlessly between environments using SnapMirror, supporting hybrid use cases such as continuous backup, automated disaster recovery, and workload balancing across environments.

“Enterprises are looking for a trusted, high-performance data foundation to turn massive volumes of information into real intelligence that powers their AI journey,” said Justin Boitano, VP, enterprise AI Products, Nvidia. “NetApp’s data platform has transformed into an AI-native storage platform by integrating Nvidia accelerated computing and software, including leading AI models. With this new platform, organizations can index and search vast amounts of unstructured data across their enterprise to drive innovation and deliver real business impact.”

“Today’s new solutions from NetApp demonstrate the speedy fulfillment of an ambitious vision on how to manage data for AI,” said Michael Leone, practice director and principal analyst, Omdia. “The way NetApp solutions bring intelligence to enterprise data shows a deep understanding of customers’ real challenges and how to address them. Adding independent scaling of performance and capacity management to the robust data management capabilities in ONTAP, which has long defined NetApp’s reputation, will enable enterprises to confidently invest in AI projects that deliver value quickly to the business.”

At NetApp INSIGHT 2025 in Las Vegas, October 14-16, NetApp will present sessions and demos, showcasing how it is driving transformation across industries. Tune in to the keynote sessions at: https://www.netapp.com/insight/.

Comments

Clearly, this product announcement is a major one for NetApp, as it couples multiple initiatives and developments.

Sandeep Singh, SVP and GM, Enterprise Storage

At the same Insight conference last year, the company pre-announced a key move in its roadmap and design toward a disaggregated storage architecture, and this year it delivers an iteration of it with the AFX product. At the time, it was a really big decision for the company, and one long awaited by the user community, especially by those running high-end configurations.

As a reader, you probably remember the image we produced last year in the comments part of our conference recap, which illustrates the any-to-any storage model the company adopts, with every NVMe device reachable from any front-end node. Obviously, the network here relies entirely on Ethernet with RDMA support. By disaggregation, we mean a multi-level instantiation of the concept, at both the block and file levels. And for users, nothing changes, as this new product iteration with its new design is offered via ONTAP.

The engineering team has iterated on its storage controller approach with the 1U AFX 1K model connected to NX224 storage enclosures, each offering 24 NVMe SSDs in 2U at up to 60TB per SSD, so about 1.4PB per 2U chassis. It confirms that, in our modern storage world, the value comes from software. Up to 128 storage controllers can be configured in one cluster, coupled with up to 52 storage enclosures and 10 DX50 compute nodes, a new element in the configuration. For access methods, we mentioned pNFS, but of course the system also exposes NFS, SMB, S3 and NFS over RDMA. The DX50 uses an AMD Genoa 9554P CPU with 64 cores and 1TB of memory, 2 x 15TB NVMe SSDs, and includes 1 Nvidia L4 card. In total, an AFX cluster can therefore group 10 Nvidia L4 cards, as it glues together 10 DX50s.
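The sizing figures quoted above can be checked with a few lines of arithmetic. The sketch below is only a back-of-the-envelope calculation, not a NetApp sizing tool: it assumes decimal units (1PB = 1,000TB), the largest 60TB SSD in every slot, and raw capacity before any data protection, spares or data reduction. All constant and function names are ours.

```python
# Back-of-the-envelope sizing from the figures quoted in the text.
# Assumptions (ours): decimal units, 60TB SSDs everywhere, raw capacity only.

SSDS_PER_ENCLOSURE = 24   # NX224: 24 NVMe SSDs per 2U enclosure
MAX_SSD_TB = 60           # "up to 60TB per SSD"
MAX_ENCLOSURES = 52       # enclosures per cluster, as quoted
MAX_DX50_NODES = 10       # DX50 compute nodes per cluster, as quoted
L4_CARDS_PER_DX50 = 1     # one Nvidia L4 card per DX50
TB_PER_PB = 1000          # decimal petabyte


def enclosure_raw_tb(ssds: int = SSDS_PER_ENCLOSURE, ssd_tb: int = MAX_SSD_TB) -> int:
    """Raw capacity of one enclosure, in TB."""
    return ssds * ssd_tb


def cluster_raw_pb(enclosures: int = MAX_ENCLOSURES) -> float:
    """Raw capacity of a cluster with the given number of enclosures, in PB."""
    return enclosures * enclosure_raw_tb() / TB_PER_PB


def cluster_l4_cards(dx50_nodes: int = MAX_DX50_NODES) -> int:
    """Total Nvidia L4 cards available across the DX50 nodes."""
    return dx50_nodes * L4_CARDS_PER_DX50


if __name__ == "__main__":
    print(f"Per enclosure: {enclosure_raw_tb()} TB raw")
    print(f"Max cluster:   {cluster_raw_pb():.2f} PB raw")
    print(f"L4 cards:      {cluster_l4_cards()}")
```

With these assumptions, one enclosure comes to 1,440TB (the 1.4PB mentioned above), and 52 fully populated enclosures come to roughly 74.9PB raw.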

For NetApp, a historical champion of NFS, it appears natural to offer pNFS and leverage its parallelization for AFX, again fully available with ONTAP.

To put things in perspective, NetApp arrives late, though not too late, in this domain, like general-purpose storage vendors such as Pure Storage and IT vendors such as Dell and HPE, which usually target the enterprise. By targeting this large segment, NetApp didn't really take HPC seriously for years, considering it too narrow, and finally tried to address these needs with partners' solutions such as ThinkParQ BeeGFS, Weka, Vdura/Panasas or Lustre-based solutions coupled with E-Series. It was a tactical choice, one that ultimately exposed the gap when AI landed on the planet as the new HPC wave, crystallizing very demanding storage requirements. Since AI arrived, these vendors have battled to fill the gap with solutions dedicated to AI. AI represents such a gigantic business opportunity, building new empires, killing businesses and forcing others to adapt extremely fast, that it pushes NetApp and a few other players to radically change their portfolios. In other words, if these vendors didn't have a native AI storage story, life would become tough... and again, the good thing is that users now receive a modern storage architecture to support and run modern workloads.

When we speak about AI, we implicitly mean high I/O performance, i.e., low latency, high bandwidth and high IOPS to sustainably feed GPUs. The key words here are RDMA, Ethernet, NVMe, flash and SSD, the any-to-any model, disaggregation, access methods such as pNFS and S3, plus advanced modes such as Nvidia GPUDirect Storage or Scada.

AFX confirms the wave around pNFS, with several players adopting this iteration of the NFS standard, launched with NFS v4.1 in 2010 in RFC 5661 and solidified with NFS v4.2. We, the market, waited 15 years, and needed the pressure of AI, to finally see market adoption and available solutions. This is the case with Pure Storage FlashBlade//EXA, Peak:AIO, Hammerspace, Red Hat of course, as a Linux provider offering both pNFS client and server, and now NetApp... And as AFX relies on ONTAP, hybrid configurations are possible with other NetApp solutions.

This new NetApp AI platform includes compute nodes equipped with an Nvidia L4 board. The product is validated for Nvidia DGX SuperPOD, which is mandatory today. With one Nvidia L4 card installed over PCIe in each compute server, NetApp targets enterprise AI challenges but also natively and transparently enriches metadata within the storage cluster through this side processing island. We expect other iterations coupling various Nvidia cards and accelerators, but the important point here is that NetApp is entering the AI storage and exascale high-performance file storage game.
