Kove Announces Benchmarks Showing 5x Larger AI Inference Workloads on Redis and Valkey with Lower Latency than Local Memory

This is a press release edited by StorageNewsletter.com on September 12, 2025 at 2:02 pm.

Kove, creator of the software-defined memory product Kove:SDM, announced benchmark results showing that Redis and Valkey, two of the most widely used engines in AI inference, can run up to 5x larger workloads faster than local DRAM in a majority of cases when powered by remote memory via Kove:SDM, which Kove describes as the world’s first and only commercially available software-defined memory solution.

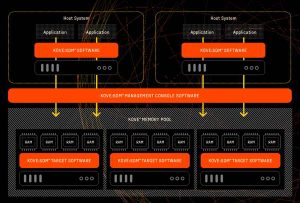

Kove:SDM pools memory across servers and runs on any hardware supported by Linux, allowing technologists to right-size memory dynamically according to need. Kove says this enables better inference-time processing, faster time to solution, greater memory resilience, and substantial energy savings. The announcement, made during CEO John Overton’s keynote at AI Infra Summit 2025, identifies memory, not compute, as the next bottleneck for scaling AI inference.


While GPUs and CPUs continue to scale, traditional DRAM remains fixed, fragmented, and underutilized. This stalls inference workloads, forces redundant GPU recomputation, and drives up costs through inefficient GPU utilization. Tiering to NVMe storage can reduce GPU recomputation, but it is far slower than system memory. Kove:SDM instead virtualizes memory across servers, creating much larger elastic memory pools that behave and perform like local DRAM, breaking through the ‘memory wall’ that continues to constrain scale-out AI inference. With Kove:SDM, the KV cache can remain in memory without the performance degradation of tiering across HBM, system memory, and storage. What happens in memory, stays in memory.

Redis and Valkey Benchmarks: Proof for AI Inference

According to Kove, Kove:SDM improves both capacity and latency compared with local server memory. Independent benchmarks ran on the same server on Oracle Cloud Infrastructure, first without Kove:SDM and then with it. With 5x more memory provided by Kove:SDM, latency relative to local memory at the 50th percentile (median) and the 100th percentile (maximum observed latency) was:

Redis Benchmark (v7.2.4)

- 50th Percentile: SET 11% faster, GET 42% faster

- 100th Percentile: SET 16% slower, GET 14% faster

Valkey Benchmark (v8.0.4)

- 50th Percentile: SET 10% faster, GET 1% faster

- 100th Percentile: SET 6% faster, GET 25% faster
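The release does not state how its "faster" and "slower" percentages were derived. The sketch below shows one plausible reading, assuming "X% faster" means the relative reduction in latency versus the local-DRAM baseline at a given percentile, and assuming nearest-rank percentiles. The latency samples and function names are hypothetical and illustrative only; they are not Kove's benchmark data. Because the 100th percentile is the single slowest request, one outlier can flip the result even when the median improves.

```python
import math


def percentile(samples, p):
    """Nearest-rank percentile for p in (0, 100]; p=100 returns the maximum."""
    ordered = sorted(samples)
    rank = math.ceil(p / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def percent_faster(baseline_ms, candidate_ms):
    """Assumed definition: positive means the candidate's latency is lower
    than the baseline's by that percentage; negative means it is slower."""
    return (baseline_ms - candidate_ms) / baseline_ms * 100


# Hypothetical GET latencies in milliseconds (illustrative, not Kove's data)
local_dram = [0.40, 0.42, 0.45, 0.50, 0.90]
with_sdm = [0.30, 0.31, 0.33, 0.36, 0.95]

for p in (50, 100):
    change = percent_faster(percentile(local_dram, p), percentile(with_sdm, p))
    label = "faster" if change >= 0 else "slower"
    print(f"p{p}: {abs(change):.0f}% {label}")
# p50: 27% faster
# p100: 6% slower
```

With these made-up samples the median improves while the worst-case request regresses, the same pattern as the Redis SET row above.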

“These results show that software-defined memory can directly accelerate AI inference by eliminating KV cache evictions and redundant GPU computation,” said John Overton, CEO, Kove. “Every GET that doesn’t trigger a recompute saves GPU cycles. For large-scale inference environments, that translates into millions of dollars saved annually.”

Redis has long powered caching workloads across industries. Valkey, a fork of Redis, is now widely integrated with vLLM, making it central to modern inference pipelines. By expanding KV cache capacity and improving performance, Kove:SDM directly addresses one of AI’s most urgent challenges.

Structural Business Impact

Enterprises deploying AI at scale stand to benefit from significant financial savings:

- $30–40M+ annual savings typical for large-scale deployments.

- 20–30% lower hardware spend by deferring costly high-memory server refreshes.

- 25–54% lower power and cooling costs from improved memory efficiency.

- Millions in avoided downtime by eliminating memory bottlenecks that cause failures.

“Kove has created a new category, software-defined memory, that makes AI infrastructure both performant and economically sustainable,” said Beth Rothwell, director of GTM strategy, Kove. “It’s the missing layer of the AI stack. Without it, AI hits a wall. With it, AI inference scales, GPUs stay busy doing the right work, and enterprises save tens of millions.”

Why It Matters Now

AI demand is doubling every 6–12 months, while DRAM budgets cannot keep pace. Existing approaches such as tiered KV caching or storage offload reduce efficiency or add latency. By contrast, Kove:SDM pools DRAM across servers while delivering local-memory performance. According to Kove, tiering the KV cache to storage can be 100–1000x slower than Kove:SDM.

Kove:SDM is available, deploys without application or code changes, and runs on any x86 hardware supported by Linux.
