Huawei Technologies Pushes AI Computing Limits with CloudMatrix 384

Delivering new levels of performance at the system level

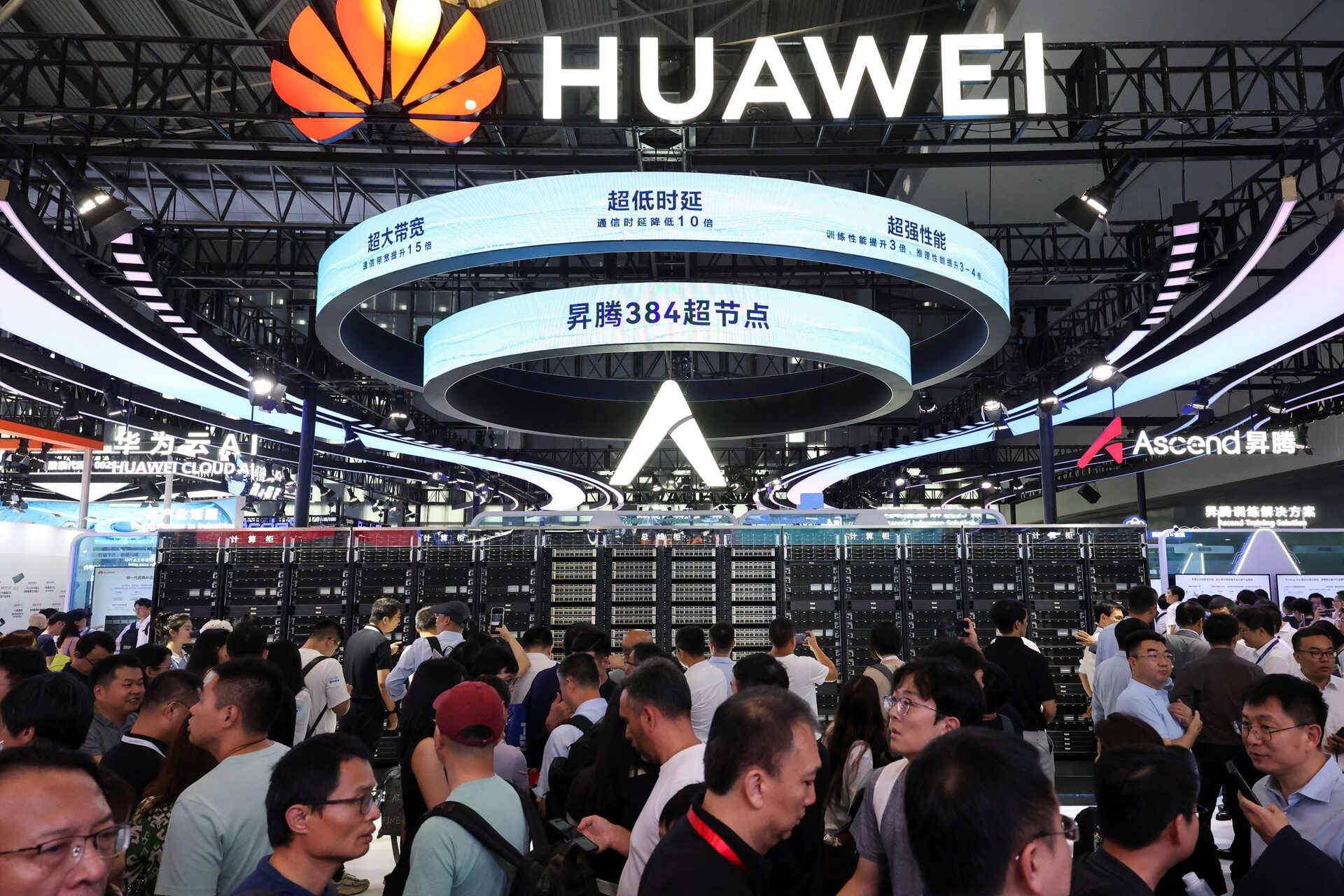

By Philippe Nicolas | August 27, 2025 at 2:02 pm

A few weeks ago, during the World Artificial Intelligence Conference (WAIC) in Shanghai, Huawei Technologies Co., Ltd. unveiled CloudMatrix 384, a monster of technology and probably one of the fastest AI computing systems available today. It was initially announced last April.

The CloudMatrix 384 combines 384 Huawei Ascend 910C NPUs, far more accelerators than Nvidia puts in a single system. As a reminder, the Californian company connects 36 Grace CPUs and 72 Blackwell GPUs via NVLink in its GB200 NVL72 platform.

The idea is to demonstrate China's full capacity and autonomy to develop and deliver leading AI computing systems for highly demanding AI workloads. It is a serious race, even a battle, among the leading technology countries.

On the performance side, the system delivers 300 petaflops in BF16 precision, roughly doubling the throughput of the comparable Nvidia system, with 3 times the memory capacity and double the aggregate memory bandwidth. A single Nvidia B200 GPU delivers 2,500 teraflops, which makes it far more powerful chip for chip than the Ascend 910C, but at the system level the picture is reversed. The same holds for memory: per-device HBM bandwidth favors Nvidia, with 8TB/s per GPU, around 2.5 times what the Chinese company offers. At the system level, again, Huawei is ahead.
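The per-chip versus system-level contrast above can be checked with simple arithmetic. The sketch below uses only the figures quoted in this article; it assumes that the 2,500 teraflops B200 number is comparable to the 300 petaflops BF16 system number (the article does not state the precision for the Nvidia figure) and that throughput scales linearly with the number of chips, which real clusters only approximate. The function and constant names are illustrative.

```python
# Back-of-the-envelope comparison using only figures quoted in the article.
# Assumptions: both vendors' numbers are comparable (same precision), and
# system throughput is the plain sum of per-chip throughput.

HUAWEI_SYSTEM_PFLOPS_BF16 = 300.0  # CloudMatrix 384, system level
HUAWEI_NPU_COUNT = 384             # Ascend 910C NPUs per system
NVIDIA_GPU_TFLOPS = 2500.0         # per B200 GPU, as quoted
NVIDIA_GPU_COUNT = 72              # Blackwell GPUs in a GB200 NVL72

TFLOPS_PER_PFLOPS = 1000.0


def per_chip_tflops(total_pflops: float, chip_count: int) -> float:
    """Average throughput per chip, in teraflops."""
    return total_pflops * TFLOPS_PER_PFLOPS / chip_count


def aggregate_pflops(chip_tflops: float, chip_count: int) -> float:
    """Summed throughput of all chips, in petaflops."""
    return chip_tflops * chip_count / TFLOPS_PER_PFLOPS


ascend_tflops = per_chip_tflops(HUAWEI_SYSTEM_PFLOPS_BF16, HUAWEI_NPU_COUNT)
nvl72_pflops = aggregate_pflops(NVIDIA_GPU_TFLOPS, NVIDIA_GPU_COUNT)

chip_ratio = NVIDIA_GPU_TFLOPS / ascend_tflops               # Nvidia advantage per chip
system_ratio = HUAWEI_SYSTEM_PFLOPS_BF16 / nvl72_pflops      # Huawei advantage per system

print(f"Ascend 910C, implied per chip: {ascend_tflops:.2f} TFLOPS")
print(f"GB200 NVL72, implied system:   {nvl72_pflops:.1f} PFLOPS")
print(f"Per-chip ratio (Nvidia/Huawei):    {chip_ratio:.2f}x")
print(f"Per-system ratio (Huawei/Nvidia):  {system_ratio:.2f}x")
```

Under these assumptions, each Ascend 910C works out to about 781 teraflops, roughly a third of a B200, while the 384-chip system comes out about 1.7 times ahead of a 72-GPU NVL72. Huawei's lead comes from chip count rather than from per-chip performance.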

In fact, when things scale up to supersize dimensions, Huawei is able to demonstrate impressive numbers.

Physically, the cluster spans 16 racks, 12 dedicated to compute and 4 to networking, based on an optical mesh topology with 6192 x 800Gbps connectors. The system consumes 559kW.
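To put the power and rack figures in perspective, here is a minimal sketch that derives density and efficiency numbers from the values quoted in this article (559kW, 16 racks of which 12 are compute, 384 NPUs, 300 petaflops in BF16). It assumes the 559kW covers the whole system including networking, and that NPUs are spread evenly across the compute racks; neither point is confirmed here, and the per-rack average hides the likely difference between compute and networking racks.

```python
# Density and efficiency figures derived from numbers quoted in the article.
# Assumptions: 559 kW is the all-in system power, and the NPUs are spread
# evenly across the 12 compute racks.

SYSTEM_POWER_KW = 559.0
SYSTEM_PFLOPS_BF16 = 300.0
NPU_COUNT = 384
TOTAL_RACKS = 16
COMPUTE_RACKS = 12

npus_per_compute_rack = NPU_COUNT // COMPUTE_RACKS       # even split assumed
kw_per_npu = SYSTEM_POWER_KW / NPU_COUNT                 # includes networking overhead
avg_kw_per_rack = SYSTEM_POWER_KW / TOTAL_RACKS          # average over all 16 racks
tflops_per_kw = SYSTEM_PFLOPS_BF16 * 1000.0 / SYSTEM_POWER_KW

print(f"NPUs per compute rack:  {npus_per_compute_rack}")
print(f"Power per NPU (all-in): {kw_per_npu:.2f} kW")
print(f"Average power per rack: {avg_kw_per_rack:.1f} kW")
print(f"Efficiency:             {tflops_per_kw:.0f} TFLOPS per kW")
```

With these inputs, the system works out to 32 NPUs per compute rack, about 1.46kW per NPU all-in, and roughly 537 teraflops per kilowatt.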

The MSRP seems to be around $8 million, and the firm confirms that several systems have been deployed for the domestic Chinese market, for partners and for its own use.

More broadly, Huawei obviously develops many elements internally, drawing on a large pool of in-house talent. This can also create compatibility gaps, for instance with Nvidia libraries, tools and software stacks such as CUDA, which are already well adopted outside China. As open source is very active, we should also see some interesting alternative software approaches that add a layer of abstraction to make code "portable". Initiatives like ONNX (Open Neural Network Exchange), with 58 members, among them Huawei, are a perfect illustration of that.

We anticipate more information on this product at the upcoming Huawei Connect 2025 conference in Shanghai, China, on September 18-20, 2025.

