Microsoft Research Use Case: WekaIO and Nvidia GPUDirect Storage Results With Nvidia DGX-2 Servers

Second test configuration showed that DGX-2 with single mount point to WekaFS system could achieve 113GB/s of throughput.

This is a Press Release edited by StorageNewsletter.com on October 19, 2020 at 2:22 pm

WekaIO, Inc., in partnership with Microsoft Research, produced among the highest aggregate Nvidia Corp. GPUDirect Storage (GDS) throughput numbers of all storage solutions that have been tested to date.

Using a single Nvidia DGX-2 server connected to a WekaFS cluster over a Mellanox IB switch, the testers achieved 97.9GB/s of throughput to the 16 Tesla V100 GPUs using GPUDirect Storage. This result was verified by running the Nvidia GDSIO utility for more than 10 minutes, with throughput sustained over that duration.

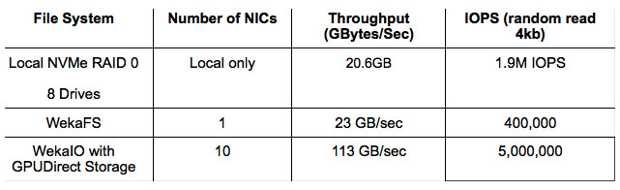

The engineers observed throughout testing that the WekaFS system was not fully loaded. To maximize performance, they ran additional GDSIO processes on the same client server, this time utilizing all 10 NICs and all 20 ports. Note that the standard single-port NICs that ship with the DGX-2 were replaced with dual-port NICs in order to fully utilize the available PCIe bandwidth in the system. This put a full load on the DGX-2 GPUs and also leveraged the CPUs. This second test configuration showed that the DGX-2 server with a single mount point to the WekaFS system could achieve 113.13GB/s of throughput.
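To put the two results side by side, the short Python sketch below divides the reported aggregate throughput across the 16 GPUs and the 20 NIC ports and computes the gain of the second configuration over the first. It assumes the load was spread evenly across GPUs and ports and that the second run also targeted all 16 GPUs; the release does not state either, so the per-unit figures are averages only. The function and variable names are illustrative.

```python
# Figures reported in the press release.
GPUS = 16                  # Tesla V100 GPUs in one DGX-2
PORTS_SECOND_TEST = 20     # ten dual-port NICs in the second configuration
FIRST_TEST_GBPS = 97.9     # GB/s, first configuration
SECOND_TEST_GBPS = 113.13  # GB/s, second configuration


def per_unit(total_gbps: float, units: int) -> float:
    """Average throughput per unit, ASSUMING an even split across units."""
    if units <= 0:
        raise ValueError("units must be positive")
    return total_gbps / units


def relative_gain(before: float, after: float) -> float:
    """Fractional improvement of `after` over `before`."""
    return (after - before) / before


per_gpu_first = per_unit(FIRST_TEST_GBPS, GPUS)
per_gpu_second = per_unit(SECOND_TEST_GBPS, GPUS)  # assumes all 16 GPUs were used
per_port_second = per_unit(SECOND_TEST_GBPS, PORTS_SECOND_TEST)
gain = relative_gain(FIRST_TEST_GBPS, SECOND_TEST_GBPS)

print(f"First test:  {per_gpu_first:.2f} GB/s per GPU")
print(f"Second test: {per_gpu_second:.2f} GB/s per GPU, "
      f"{per_port_second:.2f} GB/s per port")
print(f"Gain of second over first configuration: {gain:.1%}")
```

Under those assumptions, the averages come to about 6.12GB/s per GPU in the first test, about 7.07GB/s per GPU and 5.66GB/s per port in the second, and a gain of roughly 15.6% between the two configurations.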

Both tests were run by Microsoft, which has the WekaIO file system (WekaFS) deployed in conjunction with multiple DGX-2 servers in a staging environment. The team agreed to run performance measurements in that environment to determine what performance levels it could achieve with the combination of the new WekaFS code version 3.8 and the GPUDirect Storage feature within its current DGX-2 setup. Microsoft Research was impressed with the test outcome and plans to upgrade its production environment to the newest version of the WekaIO file system, which is available and supports Nvidia Magnum IO, which includes GPUDirect Storage. (See GPUDirect Storage: A Direct Path Between Storage and GPU Memory)

What is GPUDirect Storage?

GPUDirect Storage is a technology from Nvidia that allows storage partners like WekaIO to develop solutions that offer two significant benefits.

The first is CPU bypass. Traditionally, the CPU loads data from storage to GPUs for processing, which can cause a bottleneck to application performance because the CPU is limited in the number of concurrent tasks it can run. GPUDirect Storage creates a direct path from storage to the GPU memory, bypassing the CPU complex and freeing the sometimes-overburdened CPU resources on GPU servers to be used for compute and not for storage, thereby potentially eliminating bottlenecks and improving real-time performance.

The second benefit is an increased availability of aggregate bandwidth for storage. Using GPUDirect Storage allows storage vendors to effectively deliver considerably more throughput. As witnessed with WekaFS, using GPUDirect Storage allowed testers to achieve the highest throughput of any solution that has been tested to date.

How GPUDirect Storage and WekaFS impact AI performance

Generally, when designing AI/ML environments, the most relevant consideration is the overall pipeline time. This pipeline time can include the initial extract, transform, and load (ETL) phase as well as the time it takes to copy the data to the local GPU server, or possibly only the time it takes to train the model on the data. Storage performance helps improve the overall pipeline time by accelerating some of these steps or removing the need for them entirely. In these tests, Microsoft was able to see that the GPUDirect Storage and WekaFS solution enables the GPUs on a server to ingest data at the speed they require. Moreover, the GPU server now had additional CPU cores available for its CPU workloads, whereas before these CPU cores would have been busy performing storage I/O.
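As a rough illustration of the pipeline-time argument, the Python sketch below models a pipeline as the sum of its ETL, copy, and training phases and shows the effect of dropping the copy phase when GPUs read straight from shared storage. The phase durations and the 10,000GB dataset size are hypothetical values chosen only for illustration; the one number taken from the release is the 97.9GB/s throughput, and the read-time estimate is an idealized lower bound that ignores everything except sustained bandwidth.

```python
from dataclasses import dataclass

MEASURED_GBPS = 97.9  # single-DGX-2 GDS throughput reported in the release


@dataclass
class Pipeline:
    etl_s: float    # extract, transform, and load phase, in seconds
    copy_s: float   # staging data onto the local GPU server, in seconds
    train_s: float  # model training, in seconds

    def total_s(self) -> float:
        """Overall pipeline time as the simple sum of its phases."""
        return self.etl_s + self.copy_s + self.train_s


def read_time_s(dataset_gb: float, throughput_gbps: float) -> float:
    """Ideal time to stream a dataset once at a sustained throughput."""
    if throughput_gbps <= 0:
        raise ValueError("throughput must be positive")
    return dataset_gb / throughput_gbps


# HYPOTHETICAL phase durations, not measurements from the release.
staged = Pipeline(etl_s=3600, copy_s=1800, train_s=7200)
direct = Pipeline(etl_s=3600, copy_s=0, train_s=7200)  # copy step removed

saved_s = staged.total_s() - direct.total_s()
one_pass_s = read_time_s(10_000, MEASURED_GBPS)  # hypothetical 10,000GB dataset

print(f"Pipeline time saved by skipping the copy step: {saved_s:.0f} s")
print(f"Ideal single pass over 10,000GB at {MEASURED_GBPS} GB/s: {one_pass_s:.1f} s")
```

The simple sum treats the phases as strictly sequential; a pipeline that overlaps phases would need a different model.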

The value for organizations has become clear. The combination of WekaFS and GPUDirect Storage allows customers to use their current GPU environments to their maximum potential, as well as to accelerate the performance of their future AI/ML or other GPU workloads. Data scientists and engineers can derive the full benefit from their GPU infrastructure and can concentrate on improving their models and applications without being held back by storage performance or idle GPUs.

Resources:

Weka AI and NVIDIA accelerate AI data pipelines

10 Reasons a Modern Parallel File System Can Solve Big Storage Problems

Blog: How GPUDirect Storage Accelerates Big Data Analytics
