CES 2026: Nvidia BlueField-4 Powers New Class of AI-Native Storage Infrastructure

A new iteration for the next frontier of AI

This is a Press Release edited by StorageNewsletter.com on January 6, 2026 at 2:02 pm

Summary:

- Nvidia BlueField-4 powers Nvidia Inference Context Memory Storage Platform, a new kind of AI-native storage infrastructure designed for gigascale inference, to accelerate and scale agentic AI

- The new storage processor platform is built for long-context-processing agentic AI systems with lightning-fast long- and short-term memory

- Inference Context Memory Storage Platform extends AI agents’ long-term memory and enables high-bandwidth sharing of context across clusters of rack-scale AI systems – boosting tokens per second and power efficiency by up to 5x

- Enabled by Nvidia Spectrum-X Ethernet, extended context memory for multi-turn AI agents improves responsiveness, increases throughput per GPU and supports efficient scaling of agentic inference

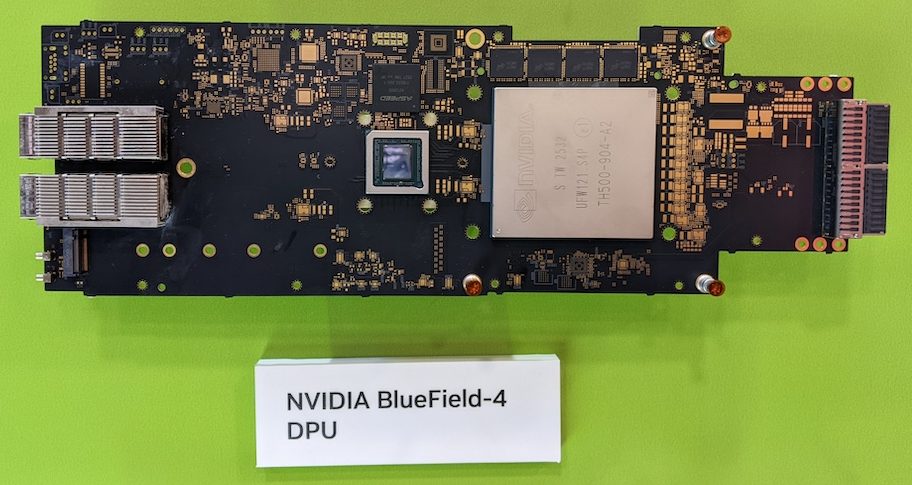

Nvidia Corp. announced that the Nvidia BlueField-4 data processor, part of the full-stack Nvidia BlueField platform, powers Nvidia Inference Context Memory Storage Platform, a new class of AI-native storage infrastructure for the next frontier of AI.

As AI models scale to trillions of parameters and multistep reasoning, they generate vast amounts of context data – represented by a KV cache, critical for accuracy, user experience and continuity.

A KV cache cannot be stored on GPUs long term, as this would create a bottleneck for real-time inference in multi-agent systems. AI-native applications require a new kind of scalable infrastructure to store and share this data.
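To give a sense of scale, the size of a KV cache can be estimated from a model's shape. The sketch below uses the usual per-token accounting for a transformer (one key and one value vector per layer and per KV head). The function name and the model dimensions are hypothetical, chosen for illustration only, and do not describe any specific Nvidia model or product.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len,
                   bytes_per_elem=2, batch=1):
    """Estimate KV cache size in bytes.

    The leading factor of 2 accounts for storing both keys and values.
    bytes_per_elem=2 assumes 16-bit elements (an assumption, not a given).
    """
    return (2 * num_layers * num_kv_heads * head_dim
            * seq_len * bytes_per_elem * batch)


# Hypothetical model shape: 80 layers, 8 KV heads, head dimension 128.
per_token = kv_cache_bytes(80, 8, 128, seq_len=1)
long_context = kv_cache_bytes(80, 8, 128, seq_len=128 * 1024)

print(per_token // 1024, "KiB per token")                   # 320 KiB per token
print(long_context // 1024**3, "GiB for one 128K context")  # 40 GiB
```

Under these assumed dimensions, a single 128K-token session already occupies tens of gigabytes, which illustrates why keeping many such sessions resident in GPU memory does not scale.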

Nvidia Inference Context Memory Storage Platform provides the infrastructure for context memory by extending GPU memory capacity, enabling high-speed sharing across nodes, boosting tokens per second by up to 5x and delivering up to 5x greater power efficiency compared with traditional storage.

“AI is revolutionizing the entire computing stack – and now, storage,” said Jensen Huang, founder and CEO of Nvidia. “AI is no longer about one-shot chatbots but intelligent collaborators that understand the physical world, reason over long horizons, stay grounded in facts, use tools to do real work, and retain both short- and long-term memory. With BlueField-4, Nvidia and our software and hardware partners are reinventing the storage stack for the next frontier of AI.”

Nvidia Inference Context Memory Storage Platform boosts KV cache capacity and accelerates the sharing of context across clusters of rack-scale AI systems, while persistent context for multi-turn AI agents improves responsiveness, increases AI factory throughput and supports efficient scaling of long-context, multi-agent inference.
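The general idea of persistent context across turns can be illustrated with a toy two-tier cache: a small fast tier standing in for GPU memory, backed by a larger tier standing in for external context storage. This is a conceptual sketch only. The class `TieredKVCache` and its methods are hypothetical, and it does not represent the Nvidia platform, Dynamo, NIXL or any real API.

```python
from collections import OrderedDict


class TieredKVCache:
    """Toy two-tier cache keyed by session ID (illustrative only)."""

    def __init__(self, fast_capacity):
        self.fast_capacity = fast_capacity
        self.fast = OrderedDict()  # small tier, kept in least-recently-used order
        self.slow = {}             # large tier that receives evicted entries

    def put(self, session_id, kv):
        # Insert or refresh an entry in the fast tier.
        self.slow.pop(session_id, None)
        self.fast[session_id] = kv
        self.fast.move_to_end(session_id)
        # Spill least-recently-used entries instead of discarding them.
        while len(self.fast) > self.fast_capacity:
            old_id, old_kv = self.fast.popitem(last=False)
            self.slow[old_id] = old_kv

    def get(self, session_id):
        # Return (kv, tier), where tier is "fast", "slow" or "miss".
        if session_id in self.fast:
            self.fast.move_to_end(session_id)
            return self.fast[session_id], "fast"
        if session_id in self.slow:
            kv = self.slow.pop(session_id)
            self.put(session_id, kv)  # promote back to the fast tier
            return kv, "slow"
        return None, "miss"           # context would have to be recomputed


cache = TieredKVCache(fast_capacity=2)
for sid in ("a", "b", "c"):
    cache.put(sid, f"kv-{sid}")

first = cache.get("a")   # spilled earlier, so it is served from the slow tier
second = cache.get("a")  # promoted by the previous call, now in the fast tier
```

In this sketch a returning session whose context was spilled is reloaded rather than recomputed from scratch, which is the behavior the persistent-context claim relies on.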

Key capabilities of the Nvidia BlueField-4-powered platform include:

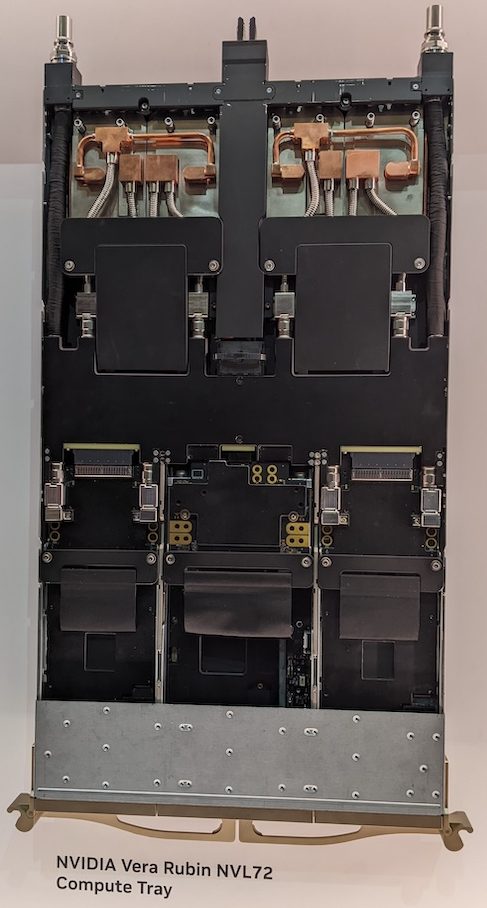

- Nvidia Rubin cluster-level KV cache capacity, delivering the scale and efficiency required for long-context, multi-turn agentic inference

- Up to 5x greater power efficiency than traditional storage

- Smart, accelerated sharing of KV cache across AI nodes, enabled by the Nvidia DOCA framework and tightly integrated with the Nvidia NIXL library and Nvidia Dynamo software to maximize tokens per second, reduce time to first token and improve multi-turn responsiveness

- Hardware-accelerated KV cache placement managed by Nvidia BlueField-4 eliminates metadata overhead, reduces data movement and ensures secure, isolated access from the GPU nodes

- Efficient data sharing and retrieval enabled by Nvidia Spectrum-X Ethernet, which serves as the high-performance network fabric for RDMA-based access to AI-native KV cache

Storage innovators including AIC, Cloudian, DDN, Dell Technologies, HPE, Hitachi Vantara, IBM, Nutanix, Pure Storage, Supermicro, Vast Data and WEKA are among the first building next-generation AI storage platforms with BlueField-4, which will be available in the second half of 2026.

Comments

Nvidia's presence feels ubiquitous; this is often the first impression when a new announcement emerges from the company. Making a major impact at CES 2026 fits that pattern, as the event offers unparalleled global visibility. Nvidia still maintains a strong consumer footprint through its historic graphics business, which remains relevant, but that business is now clearly overshadowed by the company's dominant focus on AI, data centers, and large-scale infrastructure solutions.

At the same time, Nvidia must capitalize on every possible lever for growth. Whether through major trade shows, partnerships with technology or business players, government-backed initiatives, evolving regulations, or breakthrough technological advances, the company consistently seeks to expand its influence, reinforce its market control, and defend its leadership positions.

One area where Nvidia excels is in selecting complementary technology companies to broaden its portfolio. Rather than relying solely on traditional acquisitions, the company has increasingly favored licensing-based approaches that shorten integration timelines. This strategy has been visible in collaborations with firms such as Enfabrica and, more recently, Groq. Beyond these targeted moves, Nvidia's broader investment strategy fuels a virtuous cycle involving hyperscalers, neocloud providers, AI specialists, and processor or accelerator vendors. This tightly connected "AI ecosystem" clearly sustains momentum, even as it also raises questions about potential market overheating.

Conventional acquisitions still play a critical role, however, and one in particular has profoundly reshaped Nvidia’s market position: the purchase of Mellanox, finalized in early 2020 for around $7 billion. The impact on networking revenues has been remarkable. In Q3 FY26 alone, this segment generated $8.3 billion, up from $7.3 billion in the previous quarter.

As infrastructure demands intensify, networking has become a central pressure point. Achieving an end-to-end, ultra-fast, low-latency network is now essential. Nvidia's continued investment in DPUs reflects this reality, even though the market has seen many competing initiatives fade away, leaving only a handful of viable platforms, BlueField among them. DPUs have become critical for reducing data access latency, simplifying architectures, and accelerating data movement through offloaded processing. Nvidia's architectural choices demonstrate the strength of this model, and the results validate the approach. The most recent iteration, BlueField-4, was unveiled at GTC Washington in late October 2025.

Yesterday, Nvidia introduced its Inference Context Memory Storage Platform, announced by CEO Jensen Huang at the Fontainebleau. Built on BlueField-4, the platform aims to dramatically extend memory capacity and to enable ultra-high-speed sharing of context data across nodes.

Storage vendors have already begun adapting, with several porting or optimizing their software stacks for BlueField. Examples include WEKA with NeuralMesh, DDN with Infinia, and VAST Data. This trend reinforces the importance of software-defined storage and advanced software development as the fastest path to integration and compatibility with Nvidia's ecosystem. It also highlights an increasingly competitive arena dominated by high-performance storage specialists, particularly those with deep roots in HPC that remain active there, effectively forming an exclusive and influential club.
