Recap of the 65th IT Press Tour in Athens, Greece

With 6 companies: 9LivesData, Enakta Labs, Ewigbyte, HyperBunker, Plakar and Severalnines

By Philippe Nicolas | December 18, 2025 at 2:00 pm

The 65th edition of The IT Press Tour took place in Athens, Greece. Recognized as a reference press event, it invites media to meet and hold in-depth discussions with enterprises and organizations on IT infrastructure, cloud computing, networking, cybersecurity, data management and storage, big data and analytics, and the broader integration of AI across these domains. Six companies joined this edition, listed here in alphabetical order: 9LivesData, Enakta Labs, Ewigbyte, HyperBunker, Plakar and Severalnines.

9LivesData

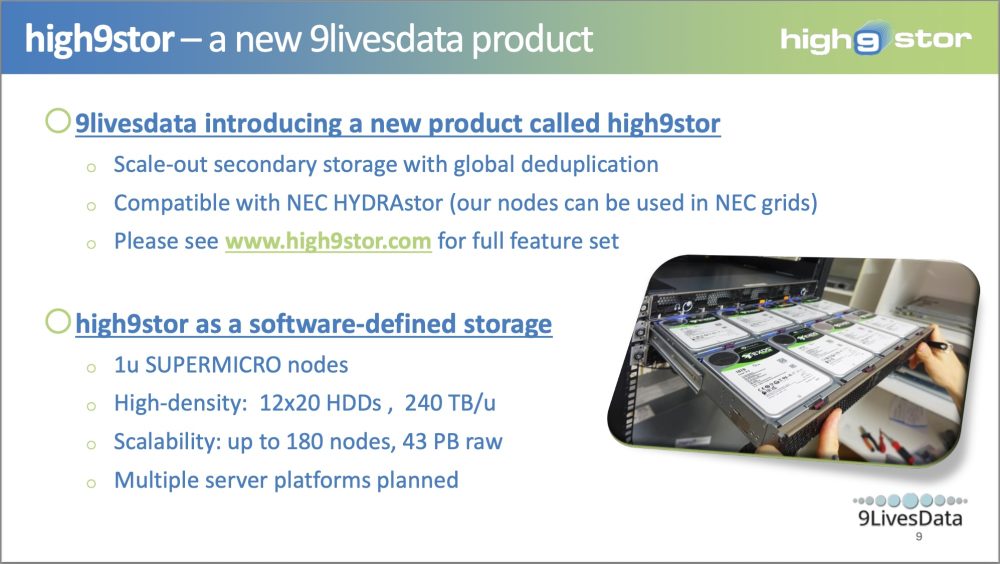

At a time when enterprises are struggling to rein in the spiraling costs of backup infrastructure, Polish storage specialist 9LivesData is positioning itself as a challenger with deep roots in large-scale, mission-critical systems. During its Athens presentation, the company made a clear case: exponential data growth has turned traditional backup storage into a financial and operational liability, and only a radical rethink of secondary storage architecture can restore balance.

Founded as a spin-off from NEC Labs America in Princeton, 9LivesData brings more than 16 years of experience in large-scale backup systems. Its founding team played a central role in the development of NEC HYDRAstor, one of the first scale-out backup storage platforms with global deduplication, which has been deployed on thousands of nodes worldwide and, according to the company, has stored exabytes of data without loss. That legacy now underpins the company’s latest product, high9stor.

high9stor is positioned squarely in the enterprise secondary storage market, targeting backup and archive use cases where cost efficiency, scalability, and availability matter more than raw performance. The company’s core promise is aggressive total cost of ownership (TCO) reduction – 20% today, with a roadmap toward 30% – achieved through a software-defined, scale-out architecture built on commodity hardware.

Rather than relying on monolithic controllers or proprietary appliances, high9stor distributes both capacity and performance evenly across nodes. Each additional node increases throughput and storage simultaneously, avoiding the bottlenecks that plague scale-up systems. The platform uses inline, global, variable-block deduplication and compression, allowing all data – regardless of source or backup application – to benefit from space savings. According to the company, this not only reduces capacity requirements but also accelerates backup operations, enabling ingest rates of up to 72 TB per hour per node.
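
The presentation did not describe how high9stor finds chunk boundaries, so the sketch below only illustrates the general technique behind variable-block deduplication: content-defined chunking with a rolling hash, plus a global index keyed by chunk fingerprint. The window size, boundary mask, chunk-size limits and the use of SHA-256 fingerprints are assumptions chosen for readability, not the vendor's parameters.

```python
import hashlib
import random

# Illustrative parameters (assumptions, not high9stor settings).
WINDOW = 48                       # bytes covered by the rolling hash
MASK = (1 << 12) - 1              # cut when the low 12 bits are zero: ~4 KiB average
MIN_CHUNK, MAX_CHUNK = 1024, 16384
BASE, MOD = 257, (1 << 61) - 1    # Rabin-Karp style polynomial hash


def chunk(data: bytes) -> list[bytes]:
    """Split data at boundaries chosen by its content, not by fixed offsets."""
    drop = pow(BASE, WINDOW, MOD)  # weight of the byte sliding out of the window
    chunks, start, h = [], 0, 0
    for i, byte in enumerate(data):
        h = (h * BASE + byte) % MOD
        if i >= WINDOW:
            h = (h - data[i - WINDOW] * drop) % MOD
        length = i - start + 1
        if length >= MAX_CHUNK or (length >= MIN_CHUNK and (h & MASK) == 0):
            chunks.append(data[start:i + 1])
            start = i + 1
    if start < len(data):
        chunks.append(data[start:])
    return chunks


class DedupStore:
    """Global index: each unique chunk is stored once, whatever stream it came from."""

    def __init__(self) -> None:
        self.index: dict[str, bytes] = {}

    def put(self, data: bytes) -> list[str]:
        recipe = []
        for c in chunk(data):
            fp = hashlib.sha256(c).hexdigest()
            self.index.setdefault(fp, c)  # inline: duplicates are dropped before storage
            recipe.append(fp)
        return recipe

    def get(self, recipe: list[str]) -> bytes:
        return b"".join(self.index[fp] for fp in recipe)

    def stored_bytes(self) -> int:
        return sum(len(c) for c in self.index.values())


if __name__ == "__main__":
    base = random.Random(0).randbytes(200_000)
    store = DedupStore()
    store.put(base)
    store.put(b"new header" + base)  # same data shifted by 10 bytes
    print(store.stored_bytes(), "bytes stored for", 2 * len(base) + 10, "bytes ingested")
```

Because each boundary depends only on the bytes under the hash window, inserting data at the start of a stream typically changes only the first chunk or two; later boundaries, and therefore later fingerprints, line up again. Fixed-size blocks would instead misalign every block after the insertion. For scale, the quoted ingest rate of 72 TB per hour works out to 72 × 10^12 bytes ÷ 3,600 s = 20 GB/s per node in decimal units.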

Resilience is another cornerstone of the design. Instead of replication-heavy approaches that consume vast amounts of storage, high9stor relies on erasure coding, tolerating up to six disk or node failures per protection group while using only 25% of raw capacity for redundancy. This makes high availability economically viable at scale, particularly for long retention periods.
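
The two figures quoted for high9stor are enough to infer a plausible protection-group layout. Assuming a maximum-distance-separable erasure code (Reed-Solomon style), where any m lost fragments out of k + m can be rebuilt, tolerating six failures while spending 25% of raw capacity on redundancy implies 24 fragments per group: 18 data and 6 parity. This layout is an inference, not something 9LivesData stated. The sketch also shows what plain replication would cost for the same fault tolerance.

```python
from fractions import Fraction


def data_fragments(parity: int, overhead: Fraction) -> int:
    """Return k such that parity / (k + parity) == overhead, for an MDS erasure code."""
    total = parity / overhead  # n = k + m fragments per protection group
    if total.denominator != 1:
        raise ValueError("no whole-fragment layout matches this overhead")
    return int(total) - parity


def replication_overhead(failures: int) -> Fraction:
    """Share of raw capacity spent on redundancy when keeping failures + 1 full copies."""
    copies = failures + 1
    return Fraction(copies - 1, copies)


k = data_fragments(6, Fraction(1, 4))
print(f"erasure coding: {k}+6 layout, {float(Fraction(6, k + 6)):.1%} redundancy")
print(f"replication:    {6 + 1} copies, {float(replication_overhead(6)):.1%} redundancy")
```

Surviving six failures by replication alone would mean keeping seven full copies, so roughly 86% of raw capacity would go to redundancy rather than 25%.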

Security and ransomware protection are tightly integrated rather than bolted on. The system supports WORM (write-once, read-many) enforcement through direct integration with major backup platforms such as Cohesity NetBackup, Veeam, and Commvault, alongside encrypted, deduplication-aware replication for disaster recovery. Crucially, the platform is designed for non-stop operation, allowing nodes to be added, removed, or replaced online – supporting up to three generations of hardware in a single cluster and eliminating forklift upgrades.
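
WORM rules are simple to state, even though a real product enforces them jointly in the storage layer and the backup application. The following is a minimal in-memory sketch of those retention rules, assuming a per-object "retain until" timestamp; the class and method names are hypothetical and do not correspond to high9stor's or any backup vendor's API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


class WormViolation(Exception):
    """Raised when a write or delete would break the retention lock."""


@dataclass(frozen=True)
class LockedObject:
    data: bytes
    retain_until: datetime


class WormStore:
    def __init__(self) -> None:
        self._objects: dict[str, LockedObject] = {}

    def put(self, key: str, data: bytes, retention: timedelta, now: datetime) -> None:
        # Write once: an existing key can never be overwritten.
        if key in self._objects:
            raise WormViolation(f"{key!r} already written; overwrites are refused")
        self._objects[key] = LockedObject(bytes(data), now + retention)

    def get(self, key: str) -> bytes:
        return self._objects[key].data  # read many: reads are always allowed

    def delete(self, key: str, now: datetime) -> None:
        # Deletion is only possible once the retention period has elapsed.
        locked_until = self._objects[key].retain_until
        if now < locked_until:
            raise WormViolation(f"{key!r} is locked until {locked_until}")
        del self._objects[key]


if __name__ == "__main__":
    t0 = datetime(2025, 12, 18, tzinfo=timezone.utc)
    store = WormStore()
    store.put("backup-001", b"nightly image", timedelta(days=30), now=t0)
    try:
        store.delete("backup-001", now=t0 + timedelta(days=1))
    except WormViolation as err:
        print("refused:", err)
    store.delete("backup-001", now=t0 + timedelta(days=31))  # retention expired
```

The clock is passed in explicitly to keep the sketch testable; a real system must take time from a source an attacker cannot move forward, or retention could be bypassed.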

From a business standpoint, 9LivesData is targeting enterprises in finance, healthcare, government, telecoms, energy, and media, with a particular focus on EMEA and Central Asia. The company sells through integrators and partners, while retaining responsibility for advanced support – a model shaped by years of operating at the sharp end of critical infrastructure deployments.

Looking ahead, the roadmap includes major efficiency gains, archive-specific configurations, deeper application integration, and a next-generation high9stor platform based on QLC SSDs, aiming to combine higher performance with comparable TCO and improved environmental sustainability. For 9LivesData, the message is clear: backup storage does not need to be bloated, fragile, or prohibitively expensive – and the company believes it has the architectural proof to show it.

Enakta Labs

Enakta Labs is positioning itself as a specialist vendor determined to bring extreme-performance storage out of the supercomputing niche and into broader enterprise use. Founded in 2023 by Denis Nuja and Denis Barakhtanov, the company builds on years of hands-on experience with DAOS (Distributed Asynchronous Object Storage), an open-source technology originally developed for the world’s fastest supercomputers. Enakta’s pitch is straightforward: take what is widely regarded as one of the fastest storage engines on the planet and make it usable, supportable, and commercially viable outside elite HPC circles.

At the core of the company’s offering is the Enakta Labs Platform, now shipping in version 1.3 and based on DAOS 2.6.4. Rather than reinventing the storage engine, Enakta focuses on lifecycle management, deployment, and integration. The platform is designed for bare-metal environments and provides a highly available management framework, containerized services, and PXE-based deployment that can stand up hundreds of nodes in hours – assuming hardware and networking are ready. The company emphasizes simplicity: an immutable, in-memory OS image, containerized data services, and no reliance on legacy PXE components like TFTP servers.

Performance is the main selling point, and Enakta does not shy away from bold claims. The platform has been validated in demanding environments, including Core42’s 10,000-GPU Maximus-01 cluster, where it appeared in the IO500 benchmark. Using RDMA over 400-Gbit Ethernet and NVMe storage, Enakta demonstrated extremely high throughput and low latency, approaching hardware limits in real-world testing. The company argues that its scale-out design allows customers to adopt new generations of NVMe drives and faster networks without rewriting code, ensuring performance growth tracks hardware innovation rather than software bottlenecks.

Beyond raw speed, Enakta Labs positions itself as an antidote to what it sees as common enterprise storage frustrations. Its presentation highlights familiar pain points: GPUs sitting idle while waiting for data, lengthy filesystem checks, overprovisioned systems built to hit peak performance, and the complexity of deploying and operating DAOS without specialist expertise. Enakta’s answer is a managed, opinionated platform that abstracts DAOS complexity while preserving its advantages. Support for multiple access methods, including a high-performance SMB interface and a limited S3 interface in preview, is intended to broaden appeal beyond traditional HPC workloads into AI/ML, fintech, and media and entertainment.

Culturally, Enakta Labs is keen to differentiate itself from larger infrastructure vendors. Its stated mission rejects long contracts, opaque roadmaps, and costly professional services add-ons. The company emphasizes direct access to engineers and 24/7 support, presenting itself as customer-driven rather than sales-driven. Go-to-market plans reflect this stance: a partner-first, single-tier channel model, hardware neutrality, and simple node-based licensing, with both perpetual and subscription options depending on customer type.

Looking ahead, Enakta Labs plans to expand protocol support with S3 and NFS, deepen hardware validation, and continue contributing to the DAOS ecosystem. While the company is open to external funding, it signals reluctance to pursue hypergrowth at the expense of product quality or customer satisfaction. In an industry often dominated by scale and marketing muscle, Enakta Labs is betting that deep technical credibility, extreme performance, and a stripped-down commercial model can carve out a durable niche in the data-intensive era.

Ewigbyte

As artificial intelligence, data growth, and energy constraints collide, ewigbyte is positioning itself as a radical alternative to how the world stores cold data. The European startup argues that the storage industry is approaching a structural breaking point – one that incremental improvements to hard drives, SSDs, or tape can no longer fix.

The company’s central thesis is stark: global data volumes are expanding faster than storage production capacity. By 2030, ewigbyte projects a shortfall of roughly 15 zettabytes, equivalent to a 50% supply gap between enterprise-grade storage demand and what traditional technologies can deliver. At the same time, the era of cheap storage is ending. HDD and SSD prices are rising sharply year over year, while energy consumption, electronic waste, and carbon emissions continue to climb.

Ewigbyte frames this crisis within three converging megatrends: AI, energy scarcity, and data growth. Without a fundamental shift, the company warns, Europe’s data will increasingly be co-located with power plants and hyperscale AI facilities, undermining sustainability and digital sovereignty. Continued reliance on decades-old technologies such as HDDs, SSDs, and magnetic tape, all of which are vulnerable to failure, ransomware and environmental hazards and have limited lifespans, only deepens the problem.

The company’s response is to rethink cold storage from first principles. Rather than optimizing for speed or density, ewigbyte focuses on durability, immutability, and zero operational energy use. Its solution uses photonic laser technology to permanently write data onto ultra-thin glass media. Data blocks are “burned” into glass using ultra-short pulse UV lasers and spatial light modulators, without chemical coatings. Once written, the data is immutable, immune to electromagnetic pulses, radiation, humidity, heat, and cyberattacks, and designed to last more than 10,000 years.

Crucially, ewigbyte emphasizes that glass ablation is not experimental science. It is a proven industrial process already used in precision manufacturing. The company’s innovation lies in adapting and refining the optical system, integrating robotics, and building a scalable operational model around what it calls physical data warehousing. Instead of conventional data centers, glass-based storage “cubes” are catalogued and stored securely, consuming no power once written and accessed only when needed.

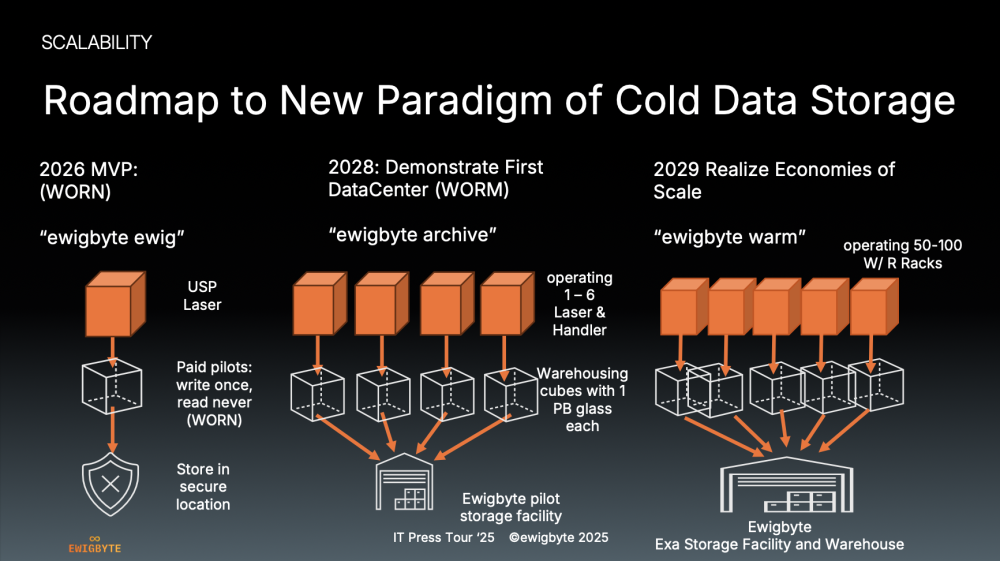

From a market standpoint, ewigbyte sees HDDs and tape steadily losing relevance as SSDs dominate hot data tiers and optical technologies take over cold archives. The company’s go-to-market strategy reflects this transition. Initial deployments target WORN (write once, read never) use cases, such as compliance archives and long-term backups. This evolves toward WORM (write once, read many) archival data centers by 2028, with broader cold and warm data services following as scale and economics improve.

The roadmap outlines an MVP by 2026, a first operational archival data center by 2028, and large-scale facilities by 2029. Backed by a founding team with deep technical, legal, and industry experience, ewigbyte is not positioning itself as another storage vendor – but as a foundational infrastructure company seeking to redefine how humanity preserves data for centuries.

HyperBunker

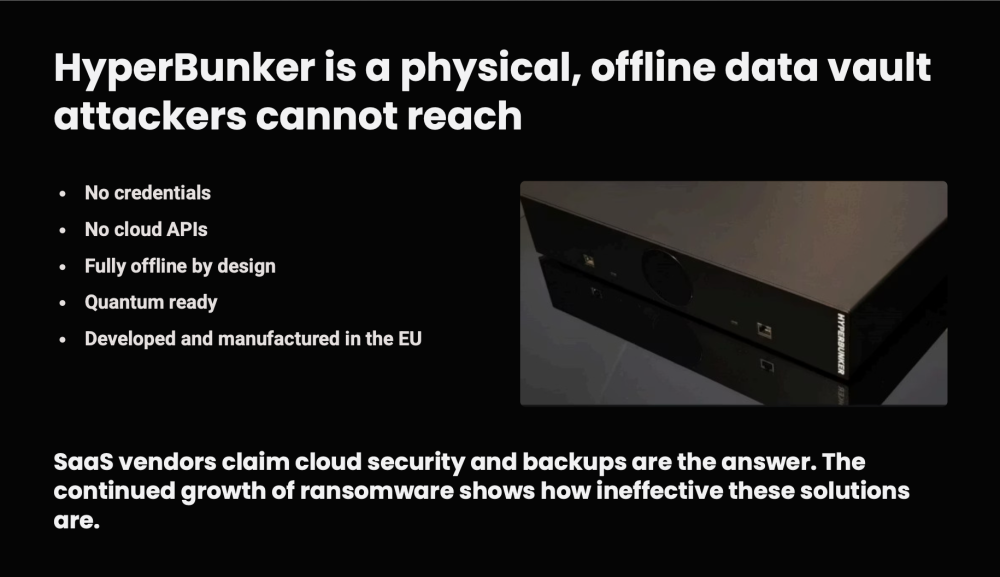

HyperBunker positions itself as a radical rethink of cyber-resilience at a time when ransomware is outpacing traditional defenses. The company argues that the industry’s prevailing reliance on cloud-connected backups and credential-based security has created a dangerous illusion of safety – one that repeatedly collapses under real-world attacks.

The company’s origins lie in hard lessons learned from more than 50,000 real data-recovery cases accumulated over 25 years across sectors such as finance, healthcare, manufacturing, energy and transport. According to HyperBunker, these incidents revealed a consistent pattern: attackers move faster than defenders, credentials are inevitably stolen, and backups – especially those connected to networks or cloud APIs – are frequently compromised. The most devastating moment, the company notes, is not the attack itself, but the discovery that recovery is impossible.

HyperBunker’s response is deliberately uncompromising. Rather than adding another layer of software or policy controls, it delivers a physical, hardware-based data vault that is fully offline by design. There are no credentials to steal, no cloud interfaces to exploit and no persistent network connections. Data is transferred through a patented “butlering” unit that acts as a controlled airlock, enforcing a double physical air gap before information reaches the vault. Once inside, immutable copies are stored completely offline, with the last four versions of critical data always preserved and beyond an attacker’s reach.

The target is not general-purpose backup, but the narrow slice of information that keeps an organization alive: identity data, financial records, operational configurations, regulatory and legal documentation, and trust-critical customer or partner data. HyperBunker frames this as a business continuity issue rather than an IT convenience. In essential industries – critical infrastructure, healthcare, energy, government or manufacturing – downtime quickly becomes a compliance failure, a safety risk or a governance crisis.

A central claim of the presentation is that “the worst place to protect against ransomware is the cloud.” HyperBunker contrasts its approach with software-defined, credential-based systems that are marketed as “air-gapped” yet remain reachable by attackers. By stripping connectivity out of the equation entirely, the company says its vaults sit beyond the attack surface. If attackers cannot see the data, they cannot encrypt or destroy it.

Commercially, HyperBunker is offered as hardware-as-a-service through a simple subscription model that includes the hardware, SLA-backed support and regular restore checks. The company argues this predictable monthly cost is trivial compared to the multi-million-euro losses often triggered by ransomware incidents. Early validation includes more than 80 technical demonstrations with no rejections, an endorsement from a major U.S. cyber-insurer after technical review, and early discussions with defense innovation groups, which underscores interest in offline recovery for mission-critical and even dual-use environments.

Financially backed by Fil Rouge Capital and Sunfish, HyperBunker has raised around €800,000, delivered its first 20 units to customers and reached serial production readiness. Its roadmap points toward deeper integration with storage providers, expanded insurance partnerships, higher capacities and military-grade variants. The broader ambition is clear: to make offline resilience a standard governance layer, not an optional add-on. In a threat landscape shaped by automation and AI-driven attacks, HyperBunker is betting that the only truly safe data is the data attackers can never reach.

Plakar

Plakar arrived in Athens with an ambitious pitch: the data protection industry, after three decades of incremental innovation, is stuck in a structural deadlock. Ransomware, cloud sprawl, SaaS proliferation and now AI-driven attacks have pushed data loss incidents to record levels, while most organizations still rely on fragmented backup tools, proprietary formats and an illusion of readiness that collapses during a real incident.

Founded by Julien Mangeard, former CPTO at Veepee, and Gilles Chehade, founder of OpenSMTPD and long-time OpenBSD core committer, Plakar positions itself as both an open-source project and a venture-backed company. Headquartered in France, the company raised €3 million in March 2025, led by Seedcamp and backed by well-known figures from Datadog, Docker and Sqreen. Its stated mission is straightforward but far-reaching: establish a new, open standard for data resilience that makes secure backup and recovery simpler, verifiable and vendor-neutral.

The company’s diagnosis is blunt. Modern IT environments span on-premises systems, multiple clouds and dozens of SaaS applications, while legacy backup architectures were never designed for petabyte-scale data, zero-trust security or strict regulatory requirements such as GDPR, NIS2 and DORA. According to figures cited in the presentation, recovery failure rates after cyberattacks remain alarmingly high, and a majority of IT leaders overestimate their organization’s actual readiness. Backup, Plakar argues, has become the “last line of defense” in an increasingly indefensible landscape.

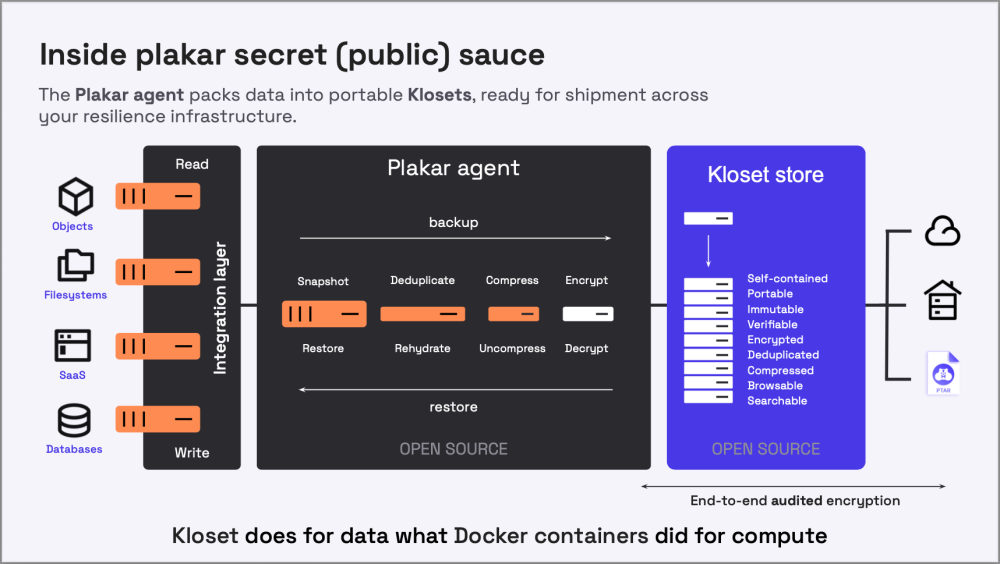

Plakar’s answer is what it calls the “Open Resilience Standard.” At the core is an open-source agent that packages data from virtually any source – filesystems, objects, databases or SaaS – into portable, immutable containers called “Klosets.” These containers are encrypted end-to-end, deduplicated, compressed, verifiable and browsable, and can be stored anywhere: cloud object storage, low-cost NAS, tape or on-premises infrastructure. The key point is separation of trust and service. Data is encrypted before it leaves the customer’s environment, and encryption keys never leave their control. Storage providers, MSPs or cloud platforms can manage retention, replication and SLAs without ever seeing the data itself.

This design is meant to break two long-standing industry traps. The first is the “smart infrastructure” model, in which the storage layer performs deduplication, so encrypting data on the client undermines deduplication and drives up storage costs. The second is the “walled garden” approach of tightly integrated appliances, which lock customers into proprietary formats and perpetual licenses just to access their own backups. Plakar claims its architecture resolves what it calls the “impossible equation”: combining zero-trust security, cost efficiency, interoperability and fast, granular restores in a single system.
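
Plakar did not detail its cryptography during the presentation, so the sketch below only illustrates one common way to reconcile client-side encryption with deduplication, which is an assumption here: the client derives each chunk's identifier with a secret-keyed HMAC and encrypts the chunk with a random nonce before upload. The storage side sees only opaque identifiers and ciphertext, yet identical chunks still collapse to a single stored object. The HMAC-based keystream is a toy stand-in for a real authenticated cipher and must not be used to protect real data; all class and function names are hypothetical.

```python
import hashlib
import hmac
import os


def keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Toy keystream: HMAC-SHA256 in counter mode (illustration only, not a vetted cipher)."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hmac.new(key, nonce + counter.to_bytes(8, "big"), hashlib.sha256).digest()
        counter += 1
    return bytes(out[:length])


class Client:
    """Holds the keys; they never leave this object."""

    def __init__(self) -> None:
        self.mac_key = os.urandom(32)  # keys the chunk identifiers
        self.enc_key = os.urandom(32)  # keys the (toy) encryption

    def chunk_id(self, chunk: bytes) -> str:
        # Same plaintext + same key -> same ID, so dedup still works on the storage side.
        return hmac.new(self.mac_key, chunk, hashlib.sha256).hexdigest()

    def encrypt(self, chunk: bytes) -> bytes:
        nonce = os.urandom(16)  # fresh nonce: identical chunks give different ciphertext
        ks = keystream(self.enc_key, nonce, len(chunk))
        return nonce + bytes(a ^ b for a, b in zip(chunk, ks))

    def decrypt(self, blob: bytes) -> bytes:
        nonce, body = blob[:16], blob[16:]
        ks = keystream(self.enc_key, nonce, len(body))
        return bytes(a ^ b for a, b in zip(body, ks))


class UntrustedStore:
    """Provider side: sees only opaque IDs and ciphertext, never keys or plaintext."""

    def __init__(self) -> None:
        self.blobs: dict[str, bytes] = {}

    def put(self, chunk_id: str, blob: bytes) -> None:
        self.blobs.setdefault(chunk_id, blob)  # dedup on the keyed ID


if __name__ == "__main__":
    client, store = Client(), UntrustedStore()
    chunk = b"quarterly-report " * 256
    blobs = [client.encrypt(chunk) for _ in range(2)]
    for blob in blobs:
        store.put(client.chunk_id(chunk), blob)
    naive = {hashlib.sha256(b).hexdigest() for b in blobs}  # dedup on ciphertext instead
    print("keyed IDs:", len(store.blobs), "object(s); ciphertext hashes:", len(naive))
```

Because the identifiers are keyed, two customers holding different keys get different identifiers for the same chunk, so deduplication happens within one key domain rather than across tenants; that is the price of keeping the provider blind to content. A production design would also authenticate every ciphertext, which this toy omits.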

During the tour, Plakar also announced a preview of Plakar Enterprise, a commercial offering that builds on the open-source core. Delivered as a virtual appliance, it adds a unified control plane with role-based access, secret management, compliance tooling, SLA management and centralized “backup posture management” across on-premises, cloud and SaaS environments. Notably, Plakar stresses a “no vendor lock-in” guarantee: backups created with the enterprise version can always be restored for free, even without an active license.

Looking ahead, Plakar envisions an ecosystem where “resilience-as-a-service” becomes a standard offering. Cloud providers and MSPs can deliver compliant backup services on top of the Plakar standard, while customers retain full sovereignty over their data. In a market dominated by proprietary platforms and growing cyber risk, Plakar is betting that openness, cryptographic trust and standardization are no longer ideological choices – but operational necessities.

Severalnines

Severalnines is positioning itself at the intersection of open-source databases, cloud economics, and digital sovereignty, arguing that the future of Database-as-a-Service (DBaaS) will not be owned exclusively by hyperscalers. Instead, the company promotes what it calls “Sovereign DBaaS”: a model that allows organizations to build and operate their own DBaaS platforms while retaining full control over data, infrastructure, costs, and compliance.

Founded in 2011, Severalnines has spent more than a decade helping enterprises automate and manage databases at scale, supporting over 200 enterprise customers. Drawing on this experience, the presentation frames today’s DBaaS market as the product of three evolutionary “acts.” The first act saw hyperscalers define DBaaS through convenience, speed, and low entry costs, but at the price of cloud lock-in, rising costs at scale, and limited hybrid or multi-cloud flexibility. The second act brought database vendors into the DBaaS arena, reducing reliance on hyperscalers but introducing a new form of vendor lock-in and continued infrastructure constraints.

Severalnines argues that a third act is now emerging. In this phase, intelligent automation platforms make it possible for organizations to “roll their own” DBaaS using open-source databases, running them wherever it makes sense: on-premises, in private clouds, or across multiple public clouds. This shift is driven by both economics and regulation. Slides comparing hyperscaler infrastructure spending with European cloud providers highlight a widening investment gap, while data sovereignty, regulatory compliance, and cost predictability are becoming board-level concerns rather than purely technical ones.

At the heart of the company’s proposition is Sovereign DBaaS, defined as a vendor-neutral, portable DBaaS model that avoids lock-in and gives organizations direct control over their data plane and control plane. According to Severalnines, this approach enables enterprises to achieve cloud-like automation and self-service without surrendering ownership of infrastructure, encryption keys, or deployment choices. It also allows organizations to run comparable workloads at significantly lower cost than hyperscaler-managed DBaaS, particularly at scale.

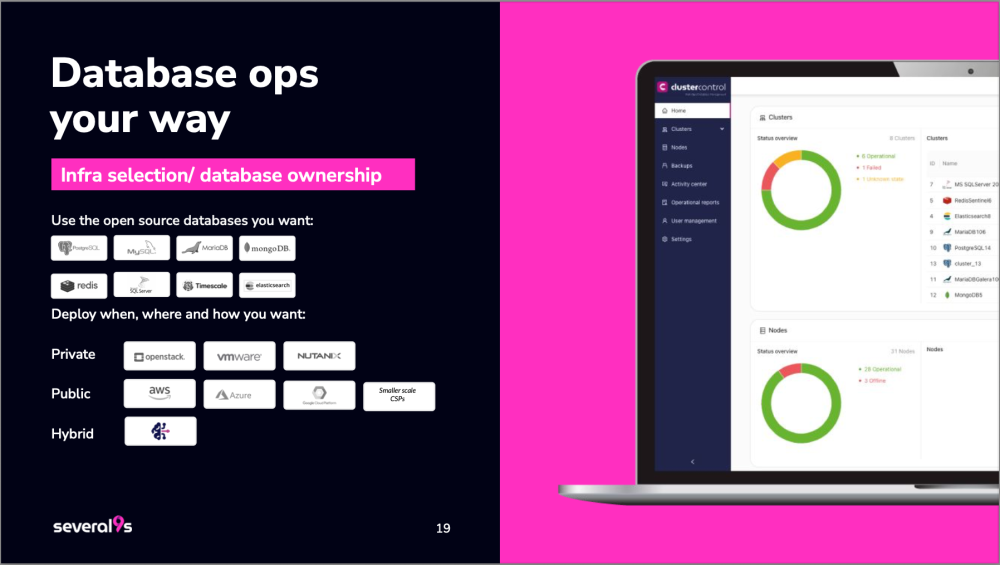

The technical foundation of this vision is ClusterControl, Severalnines’ automation platform, which delivers what the company describes as “12 operations, one platform.” These include provisioning, scaling, backup management, failover, monitoring, security, upgrades, compliance, and integration with DevOps tooling. The platform supports a wide range of open-source databases – including MySQL, PostgreSQL, MariaDB, Redis, and others – and is designed to manage polyglot database environments across hybrid and multi-cloud infrastructures.

Governance and compliance are presented as core design principles rather than add-ons. Sovereign DBaaS emphasizes data residency, auditability, encryption (including bring-your-own-key models), and alignment with regulatory frameworks. Severalnines argues that this model better reflects enterprise reality, where organizations increasingly operate across multiple clouds and on-premises environments while facing growing regulatory pressure.

Customer case studies, including large-scale hybrid deployments such as ABSA (formerly Barclays Africa Group), are used to illustrate how enterprises are adopting open-source automation to enable self-service database provisioning without sacrificing control or requiring constant DBA intervention. For Severalnines, these examples underscore a broader trend: DBaaS is no longer synonymous with outsourcing databases to hyperscalers, but with giving organizations the tools to operate databases as a service on their own terms.

In conclusion, Severalnines positions Sovereign DBaaS as both a technical and strategic response to cloud concentration, rising costs, and sovereignty concerns. Rather than rejecting cloud outright, the company advocates a pragmatic middle ground – one where automation delivers the benefits of DBaaS, while ownership, portability, and control remain firmly in the hands of the customer.
