SC25: DDN Launches DDN CORE Unified Data Engine Powering AI Factory Era

Setting a new performance benchmark for HPC and AI with extreme scale, efficiency, and intelligence

This is a Press Release edited by StorageNewsletter.com on November 19, 2025 at 2:03 pm

At SC25, DDN, the global leader in AI and data intelligence solutions, unveiled DDN CORE, a breakthrough unified data engine built to sustain the world’s most data-intensive AI and HPC environments.

For decades, DDN has powered the fastest AI and HPC systems and the most advanced research institutions on Earth. With DDN CORE, that same precision-engineered foundation now becomes the backbone of the AI Factory Era, unifying HPC and AI data workflows under one intelligent, high-performance system that keeps GPUs at full throttle and infrastructure fully productive.

“The bottleneck in AI isn’t compute anymore; it’s data,” said Alex Bouzari, CEO and co-founder, DDN. “DDN CORE gives organizations a single data foundation where HPC and AI operate together at full speed and scale. It’s how we turn infrastructure cost into intelligence ROI.”

“DDN CORE was engineered to eliminate idle GPUs,” added Sven Oehme, CTO, DDN. “By combining our expertise in parallel data systems with new intelligence-driven automation, CORE removes I/O latency, streamlines orchestration, and keeps every GPU working – not waiting.”

Problem: Billions in Compute, Stalled by Data

Enterprises and research centers are pouring more than $180 billion into AI infrastructure each year. However, many admit that their environments are too complex to manage, burdened by bottlenecks between training, inference, and data preparation that leave vast amounts of compute power idle.

At the same time, global data-center power use is on track to double to 1,000 TWh, roughly the annual electricity consumption of Japan. Inefficient I/O and fragmented data architectures are wasting both power and progress.

DDN CORE changes this equation. It replaces the patchwork of separate HPC and AI systems with one unified data engine designed to move information at the speed of computation – converting every watt of power and every GPU cycle into meaningful work.

Breakthrough: One Engine, Every Workload

At its core, DDN CORE unites DDN’s proven EXAScaler and Infinia technologies into a single high-performance data fabric that feeds, manages, and optimizes the entire AI lifecycle – from simulation to training, inference, and retrieval-augmented generation (RAG).

CORE isn’t a storage refresh; it’s a new class of intelligent software built for performance without compromise.

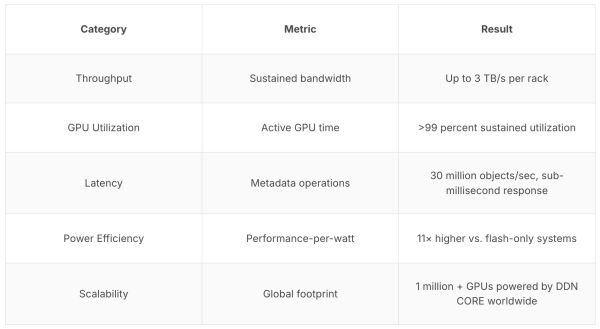

Performance Highlights:

- Unified Data Plane: HPC-grade consistency and parallel throughput across hybrid, on-premises, and sovereign deployments

- Training Acceleration: Up to 15× faster checkpointing and 4× faster model loading, driving >99% GPU utilization in production AI environments

- Inference and RAG Optimization: Integrated caching and token reuse deliver 25× faster response times and 60% lower cost per query

- Extreme Density and Efficiency: Up to 11× higher performance-per-watt and 40% lower power consumption with next-gen system designs

- Autonomous Operations: Built-in telemetry, observability, and self-tuning through DDN Insight, ensuring continuous optimization – no idle cycles, no manual tuning

Software-Defined Foundation of the AI Factory

DDN CORE is a software-defined data engine: the intelligence layer that unifies performance, observability, and orchestration across diverse compute and storage architectures. CORE runs natively on DDN’s AI400X3 and Infinia platforms, as well as certified systems from partners such as Supermicro and leading cloud providers, ensuring consistent AI data performance everywhere.

At SC25, DDN is showcasing the next generation of systems purpose-built to harness CORE’s capabilities for customers:

- AI400X3 Family: New AI400X3i, SE-2, and SE-4 deliver up to 140 GB/s read, 110 GB/s write, and 4 million IOPS in just 2U, combining raw performance with exceptional power density

- AI2200 (Infinia): Purpose-built for inference and RAG, doubling throughput and tokens-per-watt for hyperscale AI factories

- DDN CORE Deployment Flexibility: Customers can run DDN CORE on-premises or in the cloud, with consistent AI data performance across any environment.

Validated Across the World’s Leading AI Ecosystems

DDN CORE is optimized for the Nvidia AI Data Platform and Nvidia AI Factory architectures and validated on Nvidia GB200 NVL72, Nvidia Spectrum-X Ethernet, and Nvidia BlueField DPUs – guaranteeing peak throughput, consistency, and scalability.

Ecosystem Integrations:

- Google Cloud Managed Lustre (First-Party): Up to 70% faster training throughput and 15× faster checkpointing, powered by DDN.

- Oracle Cloud Infrastructure: DDN Infinia delivers low-latency inference with high-density caching acceleration.

- Powered by DDN Cloud Program: Expands deployment through CoreWeave, Nebius, and Scaleway, enabling on-demand AI Factory capacity with consistent, production-grade performance.

“AI-ready storage is no longer optional; it’s foundational to running at scale with data that moves at the speed of compute. Leveraging the Nvidia AI Data Platform reference design, DDN powers AI factories with the performance, throughput, and scale needed to turn data into intelligence in real time,” said Justin Boitano, VP, enterprise AI products, Nvidia.

“By combining the scale of GCP with the performance of DDN CORE, we’re unlocking new levels of throughput for customers training models in days, not weeks,” said Sameet Agarwal, VP Engineering, Google Cloud.

“DDN’s GPU-optimized storage technology, combined with the scalability and security of OCI, gives customers a cloud-native platform purpose-built for AI,” said Sachin Menon, VP, Cloud Engineering, Oracle. “Together, we are enabling enterprises to run complex AI and analytics workloads at scale, with predictable low latency and high throughput.”

Proof at Scale

Across more than one million GPUs and the world’s most demanding data environments, DDN continues to set the standard for performance.

- Yotta Shakti Cloud, India: 8,000 Nvidia Blackwell B200 GPUs, 99% GPU utilization, 40% lower power: the largest sovereign AI deployment in Asia

- CINECA and Helmholtz Munich: 15× faster checkpointing, unified HPC + AI pipelines for faster time-to-insight

- Guardant Health: 70% faster data processing, 40% lower compute cost across AI-driven medical workloads

- SK Telecom Petasus Cloud: Production GPUaaS platform built on DDN Infinia for real-time inference and RAG

Recognized Performance Leadership

- 7 of the Top 10 IO500 systems worldwide run on DDN

- #1 for Enterprise AI Storage in Gartner Critical Capabilities 2025

- Named Fast Company’s “Next Big Things in Tech” 2025 for breakthroughs in AI and data intelligence

See It Live at SC25: Visit DDN Booth #1527.