Charting an Open Future for AI Data Storage

Open standards, open source and a reference architecture to sustain the pressure of highly demanding AI storage infrastructure

By Philippe Nicolas | August 20, 2025 at 2:02 pm

On July 15th, 2025 the Open Flash Platform (OFP) initiative was introduced. Inaugural members of the initiative are: Hammerspace, the Linux community, Los Alamos National Laboratory, ScaleFlux, SK hynix, and Xsight Labs. This initiative addresses many of the fundamental requirements emerging from the next wave of data storage for AI. The convergence of data creation associated with emerging AI applications coupled with limitations around power availability, hot data centers, and data center space constraints means we need to take a blank-slate approach to building AI data infrastructure.

The OFP initiative addresses the data storage component of these challenges.

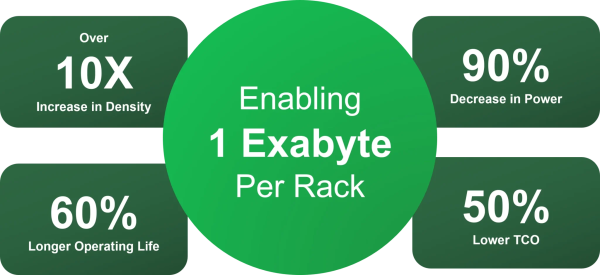

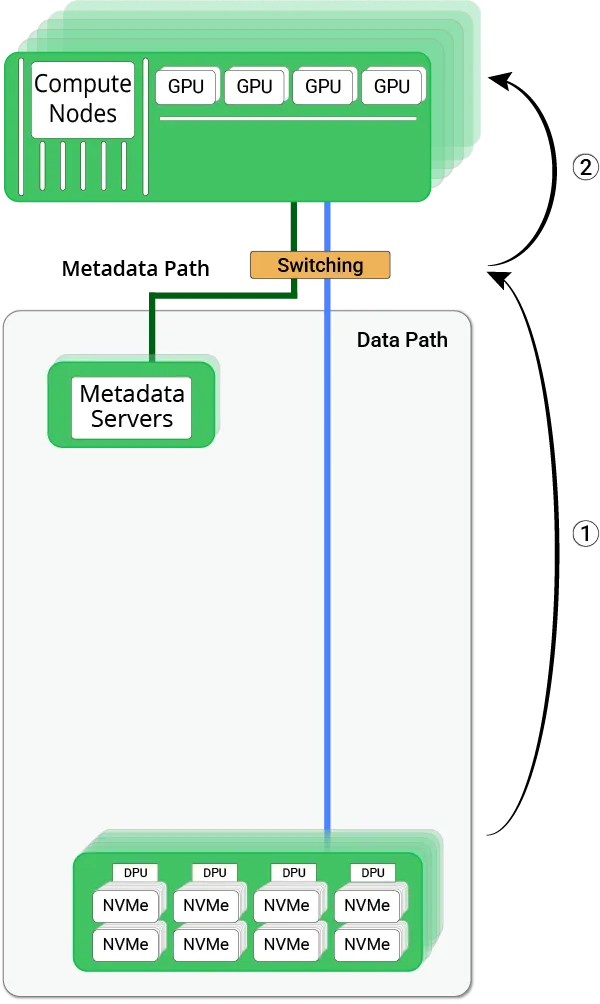

A decade ago, NVMe unleashed flash as the performance tier by disintermediating legacy storage busses and controllers. Now, OFP unlocks flash as the capacity tier by disintermediating storage servers and proprietary software stacks. OFP leverages open standards and open source – specifically parallel NFS and standard Linux – to place flash directly on the storage network. Open, standards-based solutions inevitably prevail. By delivering an order of magnitude greater capacity density, substantial power savings and much lower TCO, OFP accelerates that inevitability.

Current solutions are inherently tied to a storage server model that demands excessive resources to drive performance and capability. Designs from all current all-flash vendors are not optimized for flash density, and they tie solutions to the operating life of a processor (typically five years) rather than the operating life of flash (typically eight years). These storage servers also introduce proprietary data structures that fragment data environments by creating new silos, which leads to a proliferation of data copies and adds licensing costs to every node.
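The lifetime mismatch above can be made concrete with a little arithmetic. The sketch below uses only the two typical figures quoted in the text (five years for a processor, eight for flash). The function names and the 40-year horizon are illustrative assumptions, and the calculation ignores cost, wear and capacity growth.

```python
# Typical lifetimes quoted in the article.
PROCESSOR_LIFE_YEARS = 5
FLASH_LIFE_YEARS = 8


def flash_life_used(processor_years: float, flash_years: float) -> float:
    """Fraction of flash life consumed if flash is retired with its server."""
    return min(processor_years, flash_years) / flash_years


def purchases_over(horizon_years: int, life_years: int) -> int:
    """Number of purchases needed to cover the horizon (ceiling division)."""
    return -(-horizon_years // life_years)


used = flash_life_used(PROCESSOR_LIFE_YEARS, FLASH_LIFE_YEARS)
print(f"Flash life used when retired with the server: {used:.1%}")

# Hypothetical horizon, picked only because both lifetimes divide it evenly.
HORIZON_YEARS = 40
coupled = purchases_over(HORIZON_YEARS, PROCESSOR_LIFE_YEARS)
decoupled = purchases_over(HORIZON_YEARS, FLASH_LIFE_YEARS)
print(f"Flash purchases over {HORIZON_YEARS} years: "
      f"{coupled} when tied to the server, {decoupled} when decoupled")
```

Under these assumptions, flash retired on the processor's schedule is replaced with a share of its typical life unused, which is the inefficiency the paragraph describes.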

We are advocating for an open, standards-based approach which includes several key elements:

- Flash devices – conceived around, but not limited to, QLC flash for its density. Flash sourcing should be flexible to enable customers to purchase NAND from various fabs, potentially through controller partners or direct module design, avoiding single-vendor lock-in.

- IPUs/DPUs – have matured to the point that they can replace much more resource-intensive processors for serving data. Their lower cost and lower power requirements make them a much more efficient component for data services.

- OFP cartridge – a cartridge contains all of the essential hardware to store and serve data in a form factor that is optimized for low power consumption and flash density.

- OFP trays – An OFP tray fits a number of OFP cartridges and supplies power distribution and fitment for various data center rack designs.

- Linux Operating System – OFP utilizes standard Linux running stock NFS to supply data services from each cartridge.
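The cartridge and tray elements listed above form a simple building-block hierarchy, which can be sketched as a small data model. This is only an illustration: the class names, fields and all numeric values are hypothetical and are not taken from any OFP specification.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Cartridge:
    """One cartridge: flash plus the hardware needed to serve it."""
    capacity_tb: float  # hypothetical field
    power_w: float      # hypothetical field


@dataclass
class Tray:
    """A tray holds several cartridges and distributes power to them."""
    cartridges: list[Cartridge] = field(default_factory=list)

    def capacity_tb(self) -> float:
        """Total capacity of all cartridges in the tray."""
        return sum(c.capacity_tb for c in self.cartridges)

    def power_w(self) -> float:
        """Total power drawn by all cartridges in the tray."""
        return sum(c.power_w for c in self.cartridges)


# Made-up example values, chosen only to exercise the model.
tray = Tray([Cartridge(capacity_tb=1000.0, power_w=50.0) for _ in range(6)])
print(f"Tray capacity: {tray.capacity_tb()} TB, tray power: {tray.power_w()} W")
```

Because each cartridge is self-contained, capacity and power simply add up per tray, which is what makes the building blocks interchangeable in this model.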

Our goals are not modest and there is a lot of work in store, but by leveraging open designs and industry standard components as a community, this initiative will result in enormous improvements in data storage efficiency.

These benefits, relative to current architectures, include:

Selected quotes from OFP Initiative members:

- “Power efficiency isn’t optional; it’s the only way to scale AI. Period. The Open Flash Platform removes the shackles of legacy storage, making it possible to store exabytes using less than 50 kilowatts, vs yesterday’s megawatts. That’s not incremental, it’s radical,” said Hao Zhong, CEO and co-founder, ScaleFlux.

- “Agility is everything for AI – and only open, standards-based storage keeps you free to adapt fast, control costs, and lower power use,” added Gary Grider, director of HPC, Los Alamos National Lab (LANL).

- “Flash will be the next driving force for the AI era. To unleash its full potential, storage systems must evolve. We believe that open and standards-based architectures like OFP can maximize the full potential of flash-based storage systems by significantly improving power efficiency and removing barriers to scale,” confirmed Hoshik Kim, SVP, head of memory systems research, SK hynix.

- “Compute, storage, and networking must work as one. Next-gen DPUs deliver the freedom, speed, and power savings open AI infrastructure needs and the E1 from Xsight Labs delivers all this plus unprecedented performance and flexibility,” illustrated Eric Vallone, VP business development, Xsight Labs.

- “pNFS inside a DPU makes the promise real: fully Open Flash Platform, Linux-native file storage that scales without adding power-hungry servers or complex network fabric,” said Trond Myklebust, NFS Client Kernel Maintainer and CTO, Hammerspace.

- “Open, standards-based solutions inevitably prevail. By delivering 10x greater capacity density and a 50% lower TCO, OFP accelerates that inevitability,” concluded David Flynn, founder and CEO, Hammerspace.

Want to join and contribute to the initiative? Click here to get more information and help OFP shape the open data storage future.

Comments

This idea has clearly been on the minds of a few people, especially David Flynn, CEO of Hammerspace and previously of Primary Data, who has promoted this approach for quite some time as a true believer in the model. By model I mean open standards, open source, Linux and pNFS; he has been a real ambassador of these topics, models and technology directions for several years.

The domain name was registered at the end of June. More than that, the .com, .net, .io and .org domains were all secured on the same date, and all redirect to the .org web page.

OFP is interesting for the layered model it promotes, with key players in place at launch for each service or role in the AI storage infrastructure picture.

With a goal of reaching 1EB/rack, the idea is pretty simple in a fast-moving and complex IT world with growing AI demands: modern workloads require a modern approach.
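To get a feel for what 1EB/rack implies, here is a back-of-the-envelope sketch. It pairs the 1EB/rack goal with the "less than 50 kilowatts" figure from the ScaleFlux quote, assuming (this is an assumption, not a stated specification) that the power figure applies to a single exabyte. The cartridge capacity used in the example is purely hypothetical.

```python
import math

EB_IN_TB = 1_000_000  # decimal units: 1 EB = 1,000,000 TB

rack_capacity_tb = 1 * EB_IN_TB  # the 1EB/rack goal
power_budget_w = 50_000          # "less than 50 kilowatts", assumed per exabyte

# Upper bound on power per terabyte under the assumption above.
watts_per_tb = power_budget_w / rack_capacity_tb
print(f"Power budget: at most {watts_per_tb} W per TB")


def cartridges_needed(target_tb: float, cartridge_tb: float) -> int:
    """Cartridges required to reach a target capacity (rounded up)."""
    return math.ceil(target_tb / cartridge_tb)


# Hypothetical 1 PB cartridge, not an OFP specification.
count = cartridges_needed(rack_capacity_tb, cartridge_tb=1000)
print(f"Cartridges needed at 1 PB each: {count}")
```

The point of the exercise is the order of magnitude: under these assumptions the power budget works out to a small fraction of a watt per terabyte.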

In the initiative, every word matters: Open, Standard, Platform, SDS and COTS, with the coupling of SDS and COTS leveraging the others and embodying the platform role.

The concept of the OFP cartridge and tray brings an innovative, building-block approach and makes things interchangeable as more players join the band. Each member plays a specific role: Hammerspace for the storage software layer and service with pNFS; Linux for the OS, of course, providing both a ready-to-use pNFS server and client stack; SK hynix and ScaleFlux for the SSDs and controllers; Xsight Labs for the network part and the DPU; and finally LANL for recommendations, validation, support and early adoption. It now goes further with pNFS embedded in a DPU, as the logic needs to be in the network, closer to the SSDs, to offload processing and make things independent and pretty portable. In the model, performance comes from the distribution, the network aspect and the number of participating entities, as it provides open scalability in multiple dimensions.

We expect the group to grow in the coming months as it represents a real opportunity for many players, particularly on the Linux and pNFS side, as several vendors have finally realized the maturity of pNFS and its capabilities. A new iteration could be shared around the SC25 conference in St. Louis, MO, in November.
