Recap of 62nd IT Press Tour in California

With 9 organizations: Cohesity, DDN, Graid Technology, Hunch, Lucidity, Phison, PuppyGraph, Tabsdata and the Ultra Accelerator Link Consortium

By Philippe Nicolas | July 31, 2025 at 2:00 pm

The 62nd edition of The IT Press Tour was held in Silicon Valley and San Francisco, CA in early June. The event provided a platform for the press group and participating organizations to engage in meaningful discussions on a wide range of topics, including IT infrastructure, cloud computing, networking, security, data management and storage, big data, analytics, and the pervasive role of AI across these domains. Nine organizations participated in the tour, listed here in alphabetical order: Cohesity, DDN, Graid Technology, Hunch, Lucidity, Phison, PuppyGraph, Tabsdata and the Ultra Accelerator Link Consortium.

Cohesity

Over the past several years, data protection has steadily evolved, integrating advanced security-oriented features to better meet the broader needs of enterprises. Today, Cohesity stands as the leading provider of enterprise backup and recovery software. In a recent session with CEO Sanjay Poonen and his executive team, we gained valuable insight into the company’s business strategy, product vision, and long-term direction.

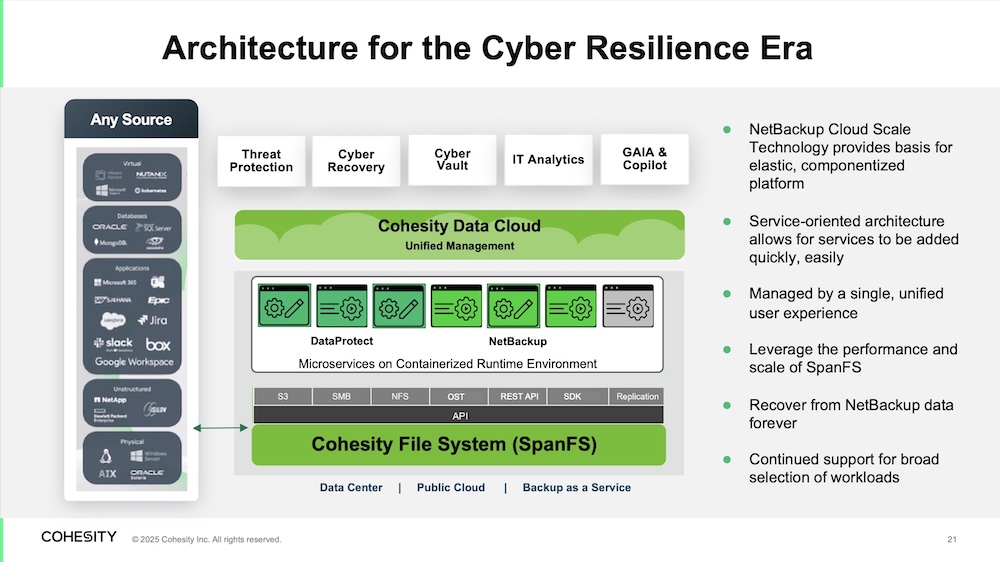

The product roadmap reflects a clear strategy built on multiple key pillars. The first is the management console and its convergence toward Helios, Cohesity’s centralized management platform. A second area of focus is the persistent data layer, which stores backup images. Here, the company is advancing toward the Cohesity Data Platform, powered by its proprietary SpanFS file system, positioning it as the successor to NetBackup’s Flex Appliance. Cohesity’s attention to NetBackup is also driven by its renowned connectivity and integration capabilities – supporting a wide range of devices, clouds, and applications – an advantage Cohesity aims to replicate and surpass.

A notable innovation is Clean Application Recovery, designed to provide secure, rapid, and reliable application-level recovery, reinforcing Cohesity’s emphasis on business continuity.

The broader product vision centers on the Cohesity Platform, which unifies data protection, security, and actionable insights. A key enabler of this vision is GAIA, Cohesity’s generative AI engine, built to interpret and interact with enterprise data across all sources – not limited to secondary storage. GAIA already delivers capabilities such as ransomware and anomaly detection, threat intelligence, data classification, entropy analysis, capacity planning and forecasting, operational insights, and retrieval-augmented generation (RAG) AI for advanced data interaction.

With annual recurring revenue approaching $2 billion, Cohesity is clearly at scale. This growth trajectory strongly suggests that the company is preparing for its next milestone – an IPO, potentially within the next 18 months.


DDN

A recognized leader in HPC storage for many years and, more recently, a key player in AI storage, DDN delivered an impressive presentation led by Paul Bloch, President and Co‑founder, and Sven Oehme, CTO. The session stood out not only for its depth of content and strategic vision but also for the company’s remarkable trajectory. Today, DDN serves over 11,000 customers worldwide, employs more than 1,000 people, and is rapidly approaching $1 billion in revenue. A recent highlight in its corporate growth was a $300 million investment from Blackstone, valuing the company at $5 billion.

Leveraging the natural synergy between HPC and AI workloads, DDN was quick to expand into AI infrastructure, developing dedicated extensions such as Nvidia GPUDirect Storage (GDS), now widely supported across the industry. This early commitment positioned DDN at the forefront of AI data management and accelerated computing solutions.

The company’s portfolio focuses on two flagship solutions: ExaScaler and Infinia, both aligned with DDN’s broader data intelligence platform vision. ExaScaler, built on the open-source Lustre file system, is a well-established HPC storage solution recognized on the IO500 list for its performance leadership. Recent updates include support for Nvidia Spectrum‑X and BlueField, as well as a reference architecture optimized for Nvidia’s Blackwell GPU platform, delivering tangible performance gains. Its enhanced multi-tenancy capabilities make it a strong foundation for complex, high-demand workloads.

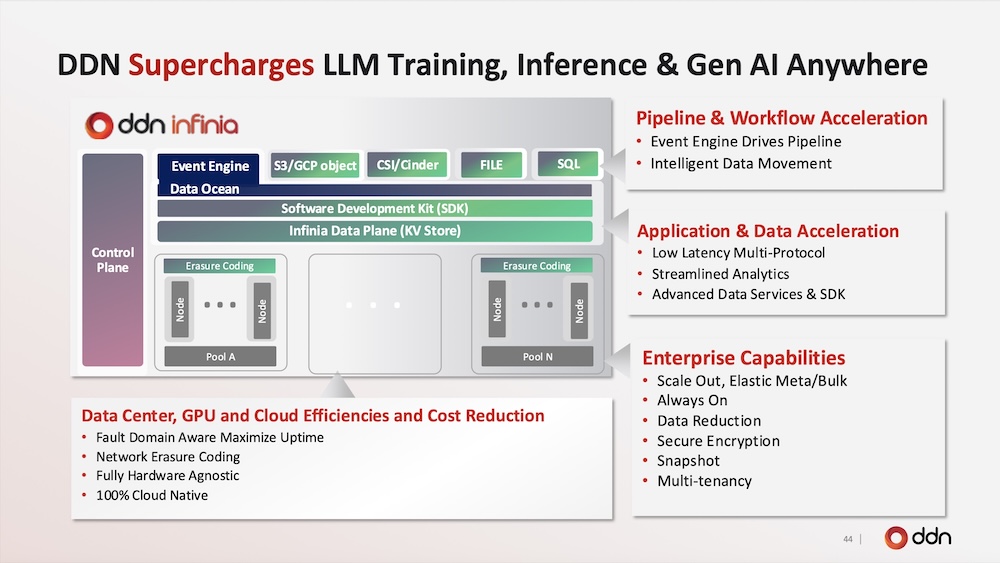

Infinia, DDN’s newest innovation, is a high-performance object storage platform designed and developed entirely in-house. Offered as an appliance, Infinia embodies a next-generation, software-defined storage approach optimized for Flash and SSD media. Its innovative key-value store delivers exceptional performance for both small and large objects, supported by advanced erasure coding for data protection and simultaneous multi-protocol access. Tailored for large-scale configurations, Infinia also introduces a robust multi-tenancy model, making it a versatile and powerful solution for modern data-intensive environments.


Graid Technology

Offloading RAID processing to a dedicated controller is not a new concept, but combining it with a GPU to accelerate encoding and decoding operations is far less common. Graid Technology pioneered this innovative approach several years ago and continues to refine it, delivering optimized solutions for a wide range of configurations and demanding environments. This method is specifically designed for NVMe SSD-based infrastructures, where performance is a critical factor, making Graid’s solution particularly compelling for high‑throughput workloads.
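To make "encoding and decoding" concrete, here is a minimal Python sketch of single-parity (RAID 5 style) XOR encoding and reconstruction. It is illustrative only: it runs on the CPU, all function names are hypothetical, and it does not represent Graid's implementation or its GPU kernels.

```python
from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """XOR equal-length blocks together, byte by byte."""
    if not blocks:
        raise ValueError("need at least one block")
    length = len(blocks[0])
    if any(len(b) != length for b in blocks):
        raise ValueError("all blocks must have the same length")
    return bytes(reduce(lambda x, y: x ^ y, column) for column in zip(*blocks))


def encode_parity(data_blocks: list[bytes]) -> bytes:
    """Encoding: the parity block is the XOR of all data blocks in a stripe."""
    return xor_blocks(data_blocks)


def rebuild_missing(surviving_blocks: list[bytes], parity: bytes) -> bytes:
    """Decoding: XOR of the surviving blocks and the parity yields the lost block."""
    return xor_blocks(surviving_blocks + [parity])


# Hypothetical three-drive stripe; the second block is treated as lost.
stripe = [b"NVMe", b"SSD0", b"SSD1"]
parity = encode_parity(stripe)
recovered = rebuild_missing([stripe[0], stripe[2]], parity)
```

Every byte of a stripe is processed independently, which is why this kind of work parallelizes well on hardware with many cores.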

Tom Paquette, Senior Vice President and General Manager, Americas and EMEA, and Leander Yu, Founder and CEO, delivered a dense company and product update.

Graid has invested significant effort over the years in building a strong partner ecosystem that includes distributors, resellers, OEMs, and server vendors. This strategic outreach has paid off, earning the attention of Nvidia, which recognized Graid as one of its top 50 key partners following IT Press Tour coverage. To accelerate its market expansion, the company recently raised an additional $30 million in venture funding, bringing its total to approximately $50 million.

The maturity of the technology and its adoption across diverse customer environments have enabled Graid to develop a comprehensive product line. Initially centered around the SupremeRAID SR‑1000, SR‑1001, and SR‑1010, the lineup now includes new models designed for specific use cases: the SE (Simple Edition), AE (AI Edition), and HE (HPC Edition), as well as support for Ampere-based CPUs.

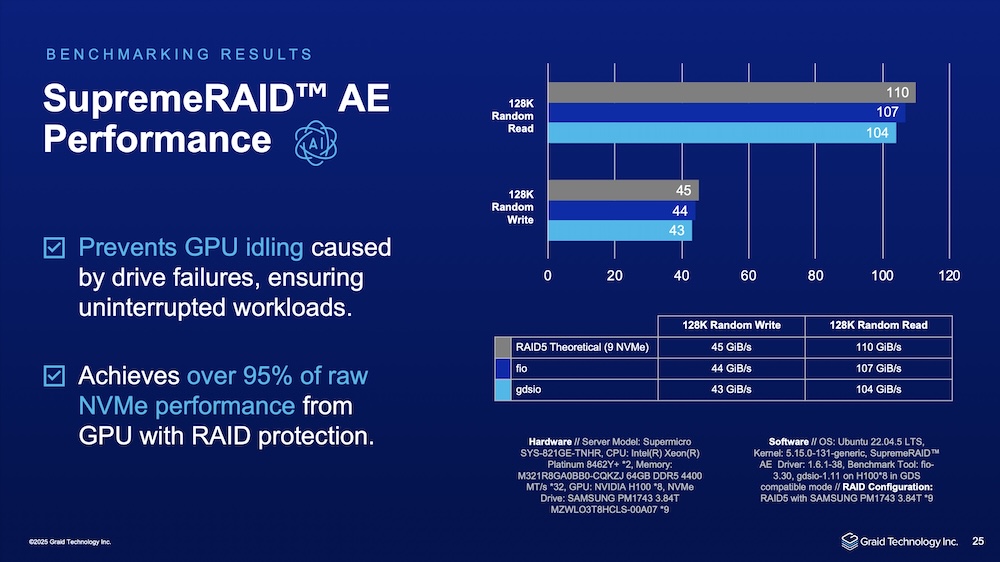

The AE model is optimized for AI workloads, leveraging GPUs to reduce latency while delivering up to 95% of raw NVMe performance. When paired with Nvidia’s H100 GPU, the card consumes only six streaming multiprocessors out of the 144 available, minimizing resource overhead. It has been validated with popular storage systems such as BeeGFS, Ceph, and Lustre, providing a seamless upgrade path for existing deployments without requiring major infrastructure changes.

Looking ahead, Graid plans to introduce next-generation SupremeRAID cards capable of supporting more drives per card, with a strong focus on HPC and AI markets through additional partner validations and performance benchmarks. The company aims to achieve $15 million in revenue in 2025, building on 5× growth between 2022 and 2024 and more than 5,000 cards sold in 2024 alone.


Hunch

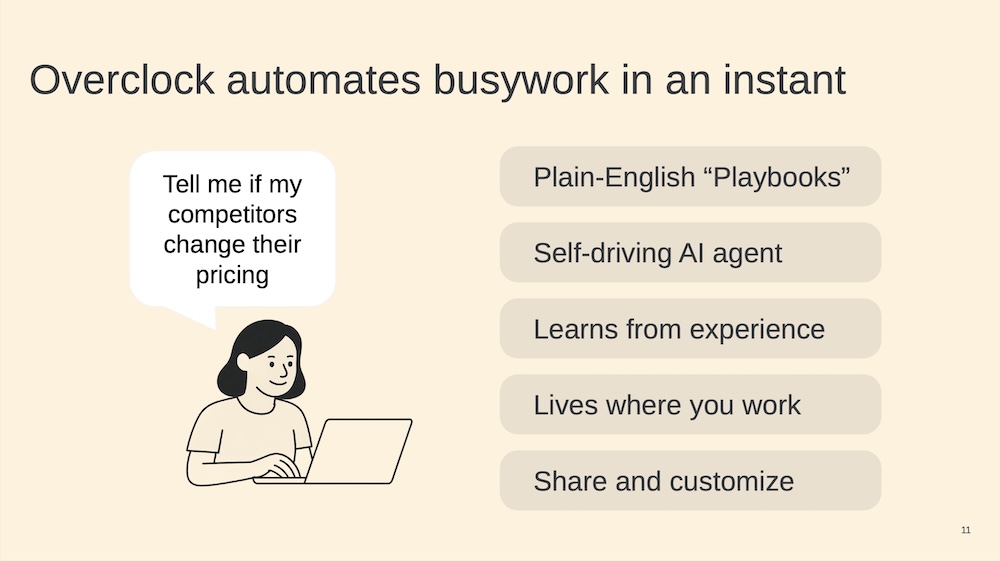

With AI dominating the agenda, we had the opportunity to meet Hunch. The firm presented Overclock, an AI-powered platform built to automate the repetitive “busywork” tasks that drain time, mental energy, and potential from teams. Existing solutions such as no-code automation tools, isolated ChatGPT scripts, or early AI agents often fail because they are cumbersome to build, brittle, hard to maintain, and lack scalability or adaptive learning. Overclock addresses these limitations by allowing users to describe their tasks once in plain-English “Playbooks” that generate self-driving AI agents. These agents learn from experience, live inside existing workflows, and can be shared and customized across teams.

The initial focus is on automating marketing operations: monitoring competitor pricing, scanning emerging topics, distilling and sharing insights from customer calls, qualifying new leads and routing them to sales, transforming white papers into social posts, triaging negative reviews, and tracking rising influencers. By eliminating repetitive tasks, Overclock enables teams to focus on strategy and creativity rather than manual busywork.

The company was founded in 2023 by David Wilson (CEO), Ross Douglas and Alex Leibhanner, who all previously worked at Cape Networks, a South African network AI company acquired by HPE Aruba in 2018. The team created Overclock to automate routine, repetitive daily tasks and avoid what Wilson described as the “busy work tax”. They are also known for LinkedIn Rewind, which circulated widely in early 2025 as a way to promote individual LinkedIn activity.

Overclock already shows early momentum, attracting over 300,000 users in two weeks and powering more than 10 million LLM calls. Positioned as a workflow platform for power users, Overclock aims to “max out” AI models and deliver personalized content, daily marketing ideas, and social posts automatically. The solution is currently available in a pre-release early alpha at overclock.work.


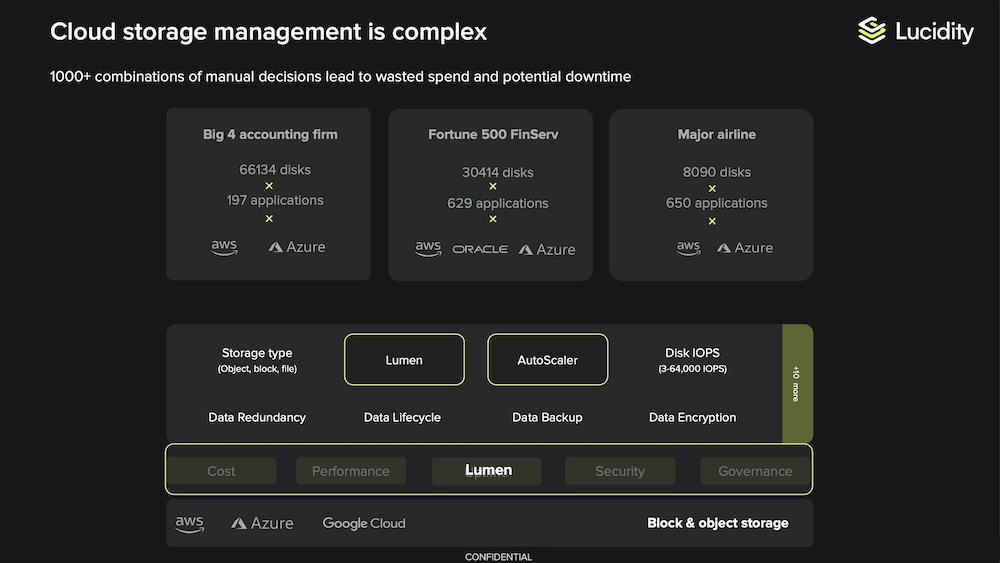

Lucidity

Founded in 2021 by Nitin Bhadauria and Vatsal Rastogi, and backed by $31 million in funding, Lucidity specializes in automated storage optimization for cloud environments. With a team of 100+ employees and a growing roster of Fortune 500 customers, Lucidity addresses the complexity of modern cloud storage management, where manual disk sizing, tiering, and provisioning often result in 50–70% wasted capacity, degraded application performance, increased downtime risks, and hundreds of wasted engineering hours each year.

Its flagship product, Lucidity AutoScaler, is the industry’s first multi-cloud storage management platform supporting AWS, Azure, and GCP. AutoScaler dynamically shrinks and expands storage volumes in real time without downtime, boosting IOPS by up to 2x and improving disk utilization to 75–80% from day one. It seamlessly integrates with databases, application servers, Kubernetes clusters, and more, delivering a true NoOps experience with minimal human intervention.
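The sizing arithmetic behind a 75–80% utilization target can be sketched in a few lines of Python. This is a hypothetical policy written for illustration, not Lucidity's algorithm: the function name, the choice of aiming for the middle of the band, and the whole-GiB rounding are all assumptions.

```python
import math


def recommend_size_gib(used_gib: float, provisioned_gib: int,
                       low: float = 0.75, high: float = 0.80) -> int:
    """Suggest a provisioned size that aims for the middle of the [low, high] band."""
    if used_gib < 0 or provisioned_gib <= 0:
        raise ValueError("used_gib must be >= 0 and provisioned_gib > 0")
    utilization = used_gib / provisioned_gib
    if low <= utilization <= high:
        return provisioned_gib  # already inside the target band, leave it alone
    midpoint = (low + high) / 2
    # Round up to a whole GiB so utilization ends at or below the midpoint.
    return max(1, math.ceil(used_gib / midpoint))


# Hypothetical volumes: one heavily over-provisioned, one close to full.
shrink_to = recommend_size_gib(used_gib=300, provisioned_gib=1000)
grow_to = recommend_size_gib(used_gib=950, provisioned_gib=1000)
```

In this sketch a 1,000 GiB volume holding 300 GiB would be shrunk and one holding 950 GiB would be expanded, while a volume already at 78% utilization is left untouched.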

The platform has already demonstrated significant business impact: a leading U.S. airline saved $88K per month with a 77% ROI, a Big 4 accounting firm automated 51,000 provisioning tasks saving $470K, and an IT management company reduced storage costs by 46%, equating to $1.5M in savings across 1PB of data.

Lucidity is now extending its automation to disk tiering with its new product Lumen, launched during the session by Vatsal Rastogi. Disk tiering is a frequent source of inefficiency, with 50% of disks often placed on the wrong tier, wasting up to 25% of spend. Lumen provides unified visibility, AI-driven tiering recommendations, and one-click execution without downtime, enabling enterprises to further optimize costs, enhance performance, and improve reliability.

Following a channel-first go-to-market strategy, Lucidity enables partners to bundle AutoScaler and Lumen for greater customer value and revenue expansion. With multi-cloud support, zero-downtime operations, and proven ROI, Lucidity is emerging as a key player in cloud cost optimization and autonomous storage management.


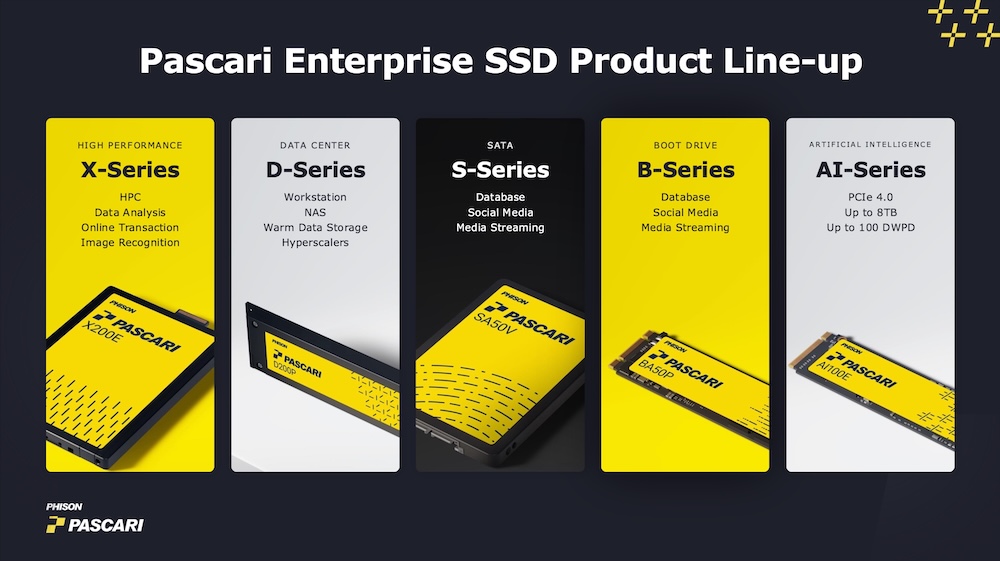

Phison

The company is a global leader in NAND storage solutions, generating $1.8 billion in revenue in 2024 and holding more than 20% of the worldwide SSD market. With over 4,500 employees, 75% in R&D, Phison invests heavily in innovation and vertical integration, controlling its controller, firmware, hardware, and manufacturing. This end-to-end expertise allows the company to deliver customized SSD solutions optimized for specific performance, endurance, and security requirements across industrial, client, enterprise, and AI markets.

Phison has a long track record of industry firsts, including the first PCIe Gen4 SSD, the fastest PCIe Gen4 and Gen5 drives, and the highest-capacity 2.5-inch and M.2 SSDs. The company also holds 2,200+ worldwide patents covering NAND performance optimization, error handling, high-speed interfaces, and mobile security. Its solutions have been validated in extreme environments, including space applications, and are now supporting missions such as lunar data storage.

To meet fast-evolving enterprise and AI needs, Phison launched its IMAGIN+ design service, enabling co-development of tailored SSDs and accelerated time-to-market. Its Pascari Enterprise SSD portfolio spans multiple use cases:

- X-Series for high-performance computing, hyperscale, and AI training/inference, led by the flagship X200 SSD offering PCIe Gen5 performance, dual-port design, and capacities up to 30.72TB

- X200Z, designed for ultra-fast caching, delivering the endurance once associated with Intel Optane but at Gen5 speeds

- D-Series, providing up to 122.88TB QLC capacity for dense, cost-efficient storage, plus specialized models (D200E, D200P) for AI inference and training

- AI-Series, purpose-built for AI pipeline workloads

Phison supports a wide range of form factors – U.2, E3.S/L, E1.S, M.2, and SATA – ensuring compatibility from legacy systems to next-generation hyperscale infrastructures. With AI adoption driving data center growth and the enterprise SSD market forecast to reach $71 billion by 2030, Phison is positioned as a trusted innovation partner, delivering high-performance, customizable storage solutions for data-intensive and AI-driven workloads – on Earth and even beyond, as proven in lunar missions.


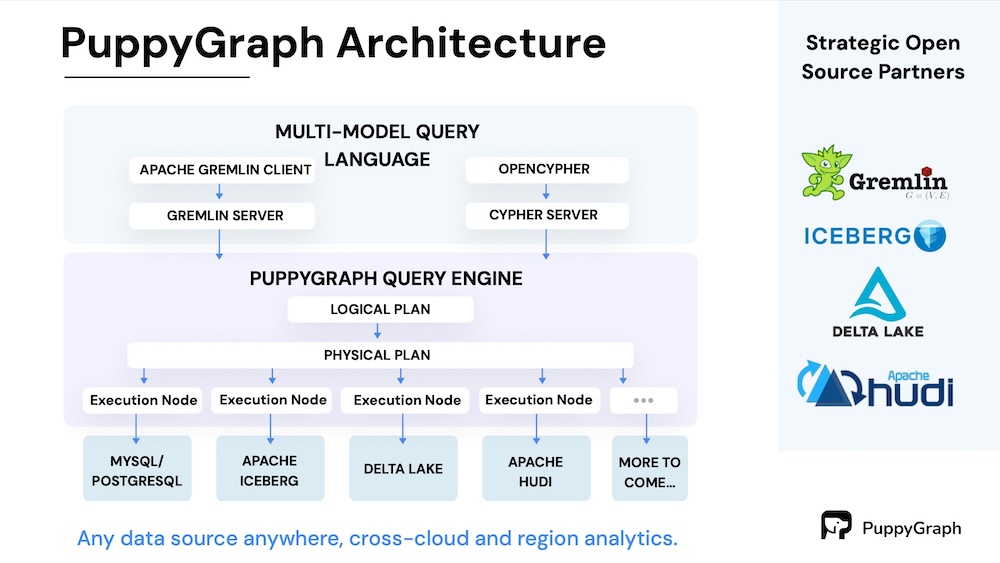

PuppyGraph

Founded in 2023 by Weimo Liu (CEO) and Danfen Xu (CTO), PuppyGraph focuses on simplifying analytics with an innovative graph database engine. The company has already secured $5 million in two funding rounds to accelerate development. Its core idea is to eliminate the need for complex ETL pipelines traditionally required to integrate diverse data sources, query languages, and client libraries. Instead, PuppyGraph connects directly to widely adopted enterprise systems and development tools, effectively bypassing the data lake layer. This allows users to query data as a graph, directly from existing data warehouses and lakes, without heavy data movement or transformation.
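The idea of querying existing tables as a graph, without first copying them into a separate graph store, can be illustrated with a toy Python sketch. The tables, column names, and functions below are hypothetical and do not reflect PuppyGraph's engine or query interface; the point is only that edges are read from the relational rows at query time.

```python
from collections import deque

# Two ordinary relational-style tables, left exactly where they are.
accounts = [
    {"id": "a1", "owner": "alice"},
    {"id": "a2", "owner": "bob"},
    {"id": "a3", "owner": "carol"},
    {"id": "a4", "owner": "dave"},
]
transfers = [
    {"src": "a1", "dst": "a2", "amount": 500},
    {"src": "a2", "dst": "a3", "amount": 450},
    {"src": "a4", "dst": "a1", "amount": 20},
]


def neighbors(node_id: str):
    """Read outgoing edges straight from the transfers table at query time."""
    return (row["dst"] for row in transfers if row["src"] == node_id)


def reachable_within(start: str, max_hops: int) -> dict[str, int]:
    """Breadth-first traversal returning each reachable node and its hop count."""
    hops = {start: 0}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        if hops[current] == max_hops:
            continue  # do not expand beyond the hop limit
        for nxt in neighbors(current):
            if nxt not in hops:
                hops[nxt] = hops[current] + 1
                queue.append(nxt)
    del hops[start]
    return hops


# Join the traversal result back to the accounts table by id.
owner_of = {row["id"]: row["owner"] for row in accounts}
downstream = {owner_of[n]: h for n, h in reachable_within("a1", 2).items()}
```

A multi-hop question such as "who receives money within two transfers of this account" is a short traversal here, whereas in SQL it would need a self-join per hop.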

Unlike traditional graph databases, which rely on proprietary row-based or key-value storage, PuppyGraph leverages columnar storage architectures for high-performance query execution. It incorporates massively parallel processing (MPP) to optimize graph queries and uses a dynamic distributed model to sustain demanding workloads at scale. This design has already attracted high-profile customers such as eBay, Palo Alto Networks, and Coinbase, with use cases spanning GraphRAG for AI, fraud detection, and cybersecurity.

The platform’s performance advantages are highlighted through benchmarks, demonstrating significant gains compared to established solutions like Neo4j. PuppyGraph can be deployed flexibly, whether as a Docker container on-premises or in AWS, GCP, and Azure, with Kubernetes for orchestration and Datadog for cluster monitoring. A recent milestone is its integration with Databricks Unity Catalog, making it the first graph compute engine partner in the Databricks ecosystem.

By participating in major industry events and collaborating with strategic partners, PuppyGraph has positioned itself as a rising star in analytics infrastructure. Given its momentum and unique value proposition, it would not be surprising to see PuppyGraph become an acquisition target for a leading analytics provider or cloud platform vendor in the near future.


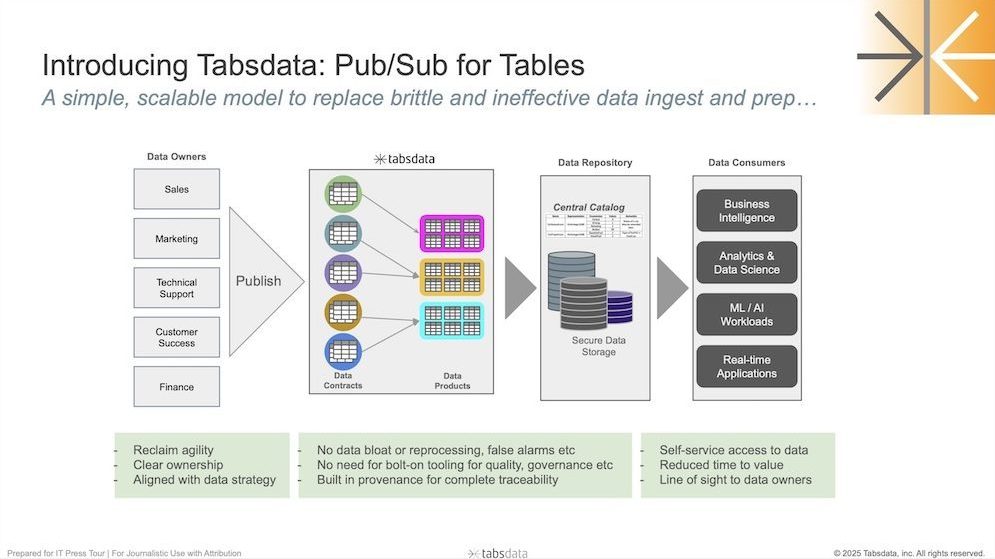

Tabsdata

The company is redefining how enterprises handle data ingestion and preparation by introducing a Pub/Sub model for tables. Founded in 2024 by Arvind Prabhakar (CEO) and Alejandro Abdelnur (CTO) – both veterans from StreamSets and Cloudera – the company aims to eliminate the complexity and brittleness of traditional data pipelines.

Traditional ETL-driven pipelines often fail due to evolving sources, unclear data ownership, and expensive reprocessing needs, resulting in low trust and delayed insights. Tabsdata’s approach focuses on product-centric data with built-in ownership, quality, and governance rather than bolted-on tooling. Through its Pub/Sub architecture, data tables are published and subscribed to directly, enabling self-service access, faster delivery, built-in provenance, and reduced operational overhead.
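A toy Python sketch can show what publishing and subscribing to whole tables, rather than individual messages, might look like. The `TableBus` class and its methods are hypothetical names invented for this illustration and are not Tabsdata's API; the version number returned on publish stands in for a very basic form of provenance.

```python
from collections import defaultdict
from typing import Callable

Table = list[dict]  # a table represented as a list of row dictionaries


class TableBus:
    """Toy in-memory registry where whole tables are published and subscribed to."""

    def __init__(self) -> None:
        self._versions: dict[str, list[Table]] = defaultdict(list)
        self._subscribers: dict[str, list[Callable[[Table, int], None]]] = defaultdict(list)

    def subscribe(self, name: str, callback: Callable[[Table, int], None]) -> None:
        """Register a consumer that is called with each new version of a table."""
        self._subscribers[name].append(callback)

    def publish(self, name: str, table: Table) -> int:
        """Store a copied snapshot as a new version and notify every subscriber."""
        snapshot = [dict(row) for row in table]
        self._versions[name].append(snapshot)
        version = len(self._versions[name])
        for callback in self._subscribers[name]:
            callback(snapshot, version)
        return version


bus = TableBus()
received: list[tuple[int, int]] = []  # (version, row count) seen by the consumer
bus.subscribe("customers", lambda table, version: received.append((version, len(table))))
first = bus.publish("customers", [{"id": 1, "name": "Ada"}])
second = bus.publish("customers", [{"id": 1, "name": "Ada"}, {"id": 2, "name": "Lin"}])
```

The producer owns the table and its versions, and consumers receive complete, consistent snapshots instead of wiring their own extraction jobs against the source.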

Tabsdata provides an out-of-the-box alternative to manually combining databases, workflow orchestrators, message brokers, and data lakes to achieve similar functionality. Its platform supports Change Data Capture (CDC), automated data engineering, integration, quality controls, data contracts, and governance – streamlining the process of building trusted data products for AI, analytics, and real-time applications.

The solution is offered under an open-core model with enterprise extensions, available as a self-managed Python-based package (distributed via PyPI) and free for developers. Tabsdata targets Python data engineers and provides flexible deployment on any infrastructure.

The company has quickly gained traction since emerging from stealth in early 2025, filing patents and preparing for a 1.0 public release. With its clear vision – making Pub/Sub the standard for enterprise data propagation – Tabsdata positions itself as a faster, simpler alternative to brittle pipelines, turning every dataset into a trusted, ready-to-use asset.


The Ultra Accelerator Link Consortium

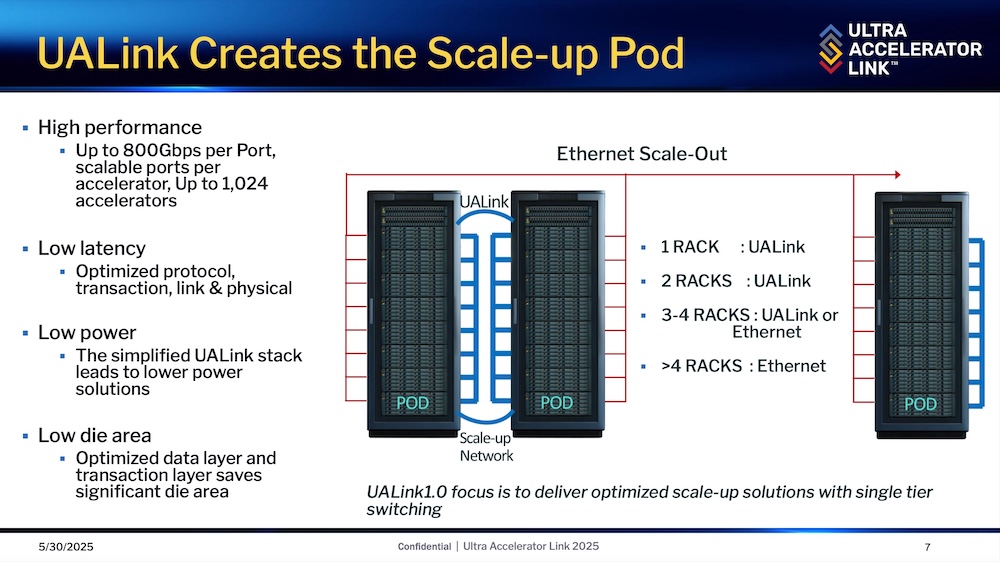

The Ultra Accelerator Link Consortium, aka UALink, aims to meet the growing infrastructure demands of large-scale AI training and inference, where models require massive compute and memory resources across tens to thousands of accelerators. Founded by industry leaders, the consortium promotes an open, standardized interconnect for efficient accelerator-to-accelerator communication within data center pods, initially supporting up to 1,024 accelerators per pod.

The recently released UALink 200G 1.0 Specification delivers up to 800 Gbps per port, combining the raw speed of Ethernet with latency comparable to PCIe switches, while optimizing power efficiency and silicon footprint. This design enables cost-effective scaling for AI workloads through simplified switching, memory sharing, direct load/store and atomic operations, and software-managed coherency.

UALink leverages standard Ethernet infrastructure – cables, connectors, retimers, and management software – while introducing a lightweight protocol stack that simplifies routing, transaction management, and data integrity. It supports flexible scaling from single racks to multi-rack deployments and integrates features such as virtual pod isolation for fault containment.

Key use cases include high-bandwidth GPU communication and advanced memory pooling for AI clusters. The roadmap includes upcoming 128G DL/PL specifications and in-network collective operations, reinforcing UALink’s focus on enabling future AI infrastructure.

With 100+ members, growing adoption, and support from major industry players, UALink is positioned as a critical enabler for AI scale-up architectures, helping organizations train and deploy next-generation models more efficiently. The specification is available at ualinkconsortium.org.

