Brookhaven’s Scientific Data and Computing Center Reaches 300PB of Data on Tape

Largest compilation of nuclear and particle physics data in the USA, all easily accessible, with plans for much more.

This is a Press Release edited by StorageNewsletter.com on October 14, 2024 at 2:02 pm. From Brookhaven National Laboratory

The Scientific Data and Computing Center (SDCC) at the U.S. Department of Energy’s (DOE) Brookhaven National Laboratory has reached a major milestone: It now stores more than 300PB of data. That’s far more data than would be needed to store everything written by humankind since the dawn of history – or, if you prefer your media in video format, all the movies ever created.

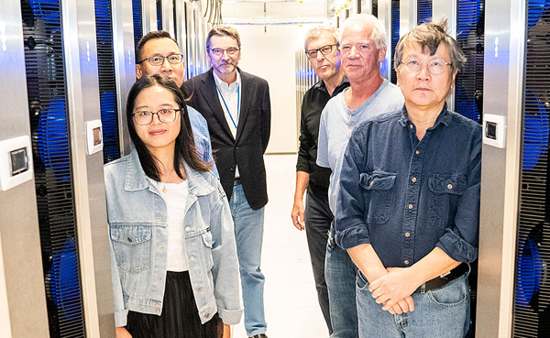

Part of the team that manages the tape storage library at the Scientific Data and Computing Center of the U.S. Department of Energy’s Brookhaven National Laboratory, left to right: Qiulan Huang, Tim Chou, Alexei Klimentov, Ognian Novakov, Joe Frith, Shigeki Misawa.

(Source: David Rahner/Brookhaven National Laboratory)

“This is the largest tape archive in the U.S. for data from nuclear and particle physics (NPP) experiments, and third in terms of scientific data overall (*),” said Alexei Klimentov, physicist, Brookhaven Lab, who manages SDCC.

“Our 300PB would equal 6 or 7 million movies,” said Tim Chou, engineer and data specialist, Brookhaven Lab. “Since the first movie was made in 1888, humans have generated some 500,000 movies. So, all the feature films ever created would fill only a small percentage of our storage.”

Written history, starting from Sanskrit to today, would fill just 50PB.

“We have 6x more data,” Klimentov said.
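The comparisons above can be checked with a little arithmetic. The sketch below uses only the figures quoted in the article and assumes decimal units (1PB = 10^15 bytes); it shows the average movie size that the "6 or 7 million movies" estimate implies and the share of the archive that all films ever made would occupy.

```python
# Back-of-the-envelope check of the storage comparisons quoted in the article.
# Assumption: decimal units, 1 PB = 10**15 bytes and 1 GB = 10**9 bytes.
PB = 10**15
GB = 10**9

archive_bytes = 300 * PB          # SDCC tape archive
written_history_bytes = 50 * PB   # quoted estimate for all written history
movies_ever_made = 500_000        # feature films made since 1888

def implied_movie_size_gb(movies_in_archive: int) -> float:
    """Average size per movie (GB) implied by '300PB would equal N movies'."""
    return archive_bytes / movies_in_archive / GB

def films_share(movies_in_archive: int) -> float:
    """Fraction of the archive that every film ever made would occupy."""
    return movies_ever_made / movies_in_archive

for n in (6_000_000, 7_000_000):
    print(f"{n:,} movies: ~{implied_movie_size_gb(n):.0f} GB each; "
          f"all films ever made would fill {films_share(n):.1%}")

print(f"archive vs. written history: {archive_bytes / written_history_bytes:.0f}x")
```

With these inputs the estimate corresponds to roughly 43 to 50GB per movie, and all 500,000 films would fill about 7 to 8% of the archive, consistent with Chou's "small percentage."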

The current SDCC cache comes from experiments at the Relativistic Heavy Ion Collider (RHIC), a DOE Office of Science user facility for nuclear physics research that’s been operating at Brookhaven Lab since 2000, and the ATLAS experiment at the Large Hadron Collider (LHC), located at CERN, the European Organization for Nuclear Research. These 2 colliders each smash protons and/or atomic nuclei together at nearly the speed of light, thousands of times/second, to explore questions about the nature of matter and fundamental forces. Detectors collect countless characteristics of the particles that stream from each collision and the conditions under which the data were recorded.

“And amazingly, every single byte of this data is online. It’s not in bolted storage that is not available,” Chou said. “Collaborators around the world can access it, and we will mount it and send it back to them.”

Accessible storage: Left: Just a sample of the tape storage racks holding data from Brookhaven Lab’s Relativistic Heavy Ion Collider (RHIC), dating back to 1999, and the ATLAS experiment at Europe’s Large Hadron Collider.

Right: The newest tape racks occupy a state-of-the-art facility with room for future expansion.

(Source: Roger Stoutenburgh/Brookhaven National Laboratory)

Seamless access on demand

By mounting it, he means pulling the relevant information out of a state-of-the-art robotic tape storage library. When RHIC and ATLAS collaborators want a particular dataset, or multiple sets simultaneously, an SDCC robot grabs the appropriate tape(s) and mounts the desired data to disk within seconds. Scientists can tap into that data as if it were on their own desktop, even from halfway around the world.

“We have data available on demand,” Klimentov said. “It’s stored on tape and then staged on disk for physicists to access when they need it, and this is done automatically. It’s really a ‘carousel of data,’ depending on what RHIC or ATLAS physicists want to analyze.”
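The tape-to-disk "carousel" can be pictured as a disk cache sitting in front of the tape library. The following Python sketch is a toy model only: the class name `StagingCarousel`, the dataset names, and the least-recently-used (LRU) eviction policy are hypothetical choices for illustration, not a description of how HPSS or SDCC actually schedule staging.

```python
from collections import OrderedDict

class StagingCarousel:
    """Toy model of a tape-to-disk 'carousel' (hypothetical, for illustration).

    Every dataset lives permanently on tape. A request is served from the
    disk cache if the dataset is already staged; otherwise a tape is mounted
    and the dataset is copied to disk. When the cache is full, the least
    recently used staged copy is deleted from disk; the tape copy remains.
    """

    def __init__(self, tape_catalog: dict, disk_capacity_tb: int):
        self.tape = dict(tape_catalog)        # dataset name -> size in TB
        self.capacity = disk_capacity_tb
        self.disk = OrderedDict()             # staged datasets, least recently used first
        self.tape_mounts = 0

    def used_tb(self) -> int:
        return sum(self.disk.values())

    def request(self, name: str) -> str:
        """Serve a dataset and report whether it came from 'disk' or 'tape'."""
        if name in self.disk:
            self.disk.move_to_end(name)       # mark as most recently used
            return "disk"
        size = self.tape[name]                # KeyError if the dataset is not archived
        if size > self.capacity:
            raise ValueError(f"{name} does not fit in the disk cache")
        while self.used_tb() + size > self.capacity:
            self.disk.popitem(last=False)     # evict least recently used disk copy
        self.tape_mounts += 1                 # robot mounts the cartridge...
        self.disk[name] = size                # ...and the data is staged to disk
        return "tape"

# Example: three archived datasets, an 8 TB disk cache.
carousel = StagingCarousel({"run_a": 4, "run_b": 3, "run_c": 5}, disk_capacity_tb=8)
requests = ["run_a", "run_b", "run_a", "run_c", "run_b"]
served = [carousel.request(ds) for ds in requests]
for ds, source in zip(requests, served):
    print(f"{ds}: served from {source}")
print(f"tape mounts: {carousel.tape_mounts}, staged now: {list(carousel.disk)}")
```

In this run, the second request for `run_a` is served straight from disk, while staging `run_c` forces both older datasets off the disk cache; their tape copies remain, so a later request simply triggers another mount. LRU is one common eviction choice; a real system would also weigh request priorities and tape-drive availability.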

Yingzi (Iris) Wu, engineer, SDCC, noted that the system requires very good monitoring, some redundancy of data and control paths, and good support of the equipment.

Improved capacity: Ognian Novakov holds one of the original storage tapes from the beginning of the data center in 1999 (left) and the latest rendition in use today (right). It would take 900 of the original tapes to hold the data stored on the newer variety.

(Source: David Rahner/Brookhaven National Laboratory)

“We have developed our own software and website to generate plots that let us know what is going on with the data transfers,” she said. “And we added a lot of capabilities for monitoring how the data goes into and out of the High Performance Storage System (HPSS).”

HPSS is a data-management system designed by a consortium of DOE labs and IBM to ensure that components of complex data storage systems – tapes, databases, disks, and other technologies – can ‘talk’ to one another. The consortium developed the software physicists use to access SDCC’s data.

“We install and configure the software and let users use it,” Wu said. “But we always need to improve the way we talk to those systems to get data in and out. Our log system has alerts. If anything is not performing well, it will send out alerts to the team.”

AI and ML algorithms can help detect such anomalies and reduce the operational burden on the computing professionals and engineers who provide support for users of HPSS and SDCC’s storage systems, Klimentov noted. “This is something SDCC staff plan to develop more over the next few years to meet future data demands.”
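Wu's threshold alerts and Klimentov's point about automated anomaly detection can be illustrated with a simple statistical rule. The sketch below is a stand-in, not the AI/ML approach SDCC plans: the function `find_anomalies`, the transfer-rate samples, the 100 MB/s floor, the five-sample window, and the 3-sigma limit are all hypothetical.

```python
from statistics import mean, stdev

def find_anomalies(rates_mb_s, window=5, z_limit=3.0, floor_mb_s=100.0):
    """Return (index, rate, reason) for transfer-rate samples that look abnormal.

    Two illustrative rules (hypothetical, not SDCC's actual alert logic):
      * hard floor: any rate below floor_mb_s is flagged;
      * rolling z-score: a rate more than z_limit standard deviations away
        from the mean of the previous `window` samples is flagged.
    """
    alerts = []
    for i, rate in enumerate(rates_mb_s):
        if rate < floor_mb_s:
            alerts.append((i, rate, "below floor"))
            continue
        history = rates_mb_s[max(0, i - window):i]
        if len(history) >= 2:              # stdev needs at least two samples
            mu, sigma = mean(history), stdev(history)
            if sigma > 0 and abs(rate - mu) / sigma > z_limit:
                alerts.append((i, rate, "z-score"))
    return alerts

# Hypothetical throughput samples (MB/s) from a tape-to-disk transfer monitor.
rates = [400, 410, 395, 405, 402, 398, 250, 401, 40, 399]
for idx, rate, reason in find_anomalies(rates):
    print(f"ALERT sample {idx}: {rate} MB/s ({reason})")
```

Here sample 6 (250 MB/s) is flagged by the z-score rule and sample 8 (40 MB/s) by the hard floor. One limitation of this naive version is that flagged samples stay in the rolling window and inflate the spread for later checks; learned models are one way to handle such effects more robustly.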

Energy and cost savings

Why use such a complex 2-tiered tape-to-disk system? The answer is simple: cost.

“When you consider the cost per terabyte of storage, tape is 4 or 5x less expensive than disk,” said Ognian Novakov, engineer, SDCC.

In addition, for data to be available on disk, the disks have to spin in computers, eating up energy and emitting heat – which further increases the energy needed to keep the computers cool. Tape, which is relatively static when not in use, has lower power demands.
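The cost argument can be made concrete with normalized prices. Assuming tape media costs 1 unit per terabyte and disk 4 or 5 units (the ratio Novakov quotes), the sketch below compares keeping everything on disk with keeping everything on tape plus a disk cache for a fraction of the data. The cache fractions are hypothetical values, and the model counts media only, ignoring the power and cooling savings described above.

```python
def relative_cost(disk_to_tape_ratio: float, disk_fraction: float) -> float:
    """Media cost of 'all data on tape + a disk cache' relative to 'all data on disk'.

    Tape is normalized to 1 cost unit per TB and disk to `disk_to_tape_ratio`
    units per TB. `disk_fraction` is the share of the archive held on the disk
    cache at any time (a hypothetical parameter). Power, cooling, robots,
    drives, and staff are ignored.
    """
    if disk_to_tape_ratio <= 0 or not 0 <= disk_fraction <= 1:
        raise ValueError("ratio must be positive and fraction in [0, 1]")
    all_disk = disk_to_tape_ratio                          # every TB on disk
    two_tier = 1.0 + disk_fraction * disk_to_tape_ratio    # every TB on tape, some also on disk
    return two_tier / all_disk

for ratio in (4, 5):                 # "tape is 4 or 5x less expensive than disk"
    for fraction in (0.1, 0.2):      # hypothetical disk-cache sizes
        print(f"ratio {ratio}x, cache {fraction:.0%}: "
              f"{relative_cost(ratio, fraction):.0%} of all-disk cost")
```

Under these assumptions, a 10% disk cache puts the two-tier setup at roughly 30 to 35% of the cost of an all-disk system; a larger disk cache narrows the gap.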

“Tape storage is generally designed for deep storage, deep archives. You write the data and almost never read it, unless you need it for recovery or to meet compliance requirements,” Novakov said.

Robotic access: A view inside a storage rack showing a robot that retrieves tapes so data can be mounted and shared with collaborators around the world.

(Source: Roger Stoutenburgh/Brookhaven National Laboratory)

“But in our case, it’s a very dynamic archive,” he said. “The robots frequently access the tape archive to move/stage requested data to disk, then the staged data gets deleted from disk so there’s space for the next request. HPSS plus our tape libraries provide the functionality of an infinite file system.”

Klimentov noted that the more efficient storage has allowed SDCC to reduce the data volume on disk ‘by a factor of 2.’

“Cutting down on disks has another benefit, since they have an average lifetime of just 5 years, compared to tape with a shelf life of about 30 years,” Chou said.

And tape capacity keeps improving.

“The storage capacity on tape generally doubles every four to five years,” Novakov said. “We started 26 years ago with 20GB tape cartridges; now we are at 18TB on one cartridge – and it’s even smaller in physical size. By periodically rewriting data from older media to new, we are freeing a lot of slots in the library.”
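Novakov's figures can be turned into a growth rate. The sketch takes the 20GB and 18TB cartridge capacities and the 26-year span from the quote (decimal units assumed), confirms the 900x ratio mentioned in the photo caption, and projects cartridge capacity ten years ahead under the four- and five-year doubling rule of thumb. The function `project_capacity_tb` is a hypothetical helper that assumes steady exponential growth.

```python
import math

# Figures from Novakov's quote (decimal units assumed: 1 TB = 1,000 GB).
start_gb, now_gb, years = 20, 18_000, 26

growth = now_gb / start_gb                 # 900x, matching the "900 original tapes" caption
doublings = math.log2(growth)
print(f"{growth:.0f}x growth = {doublings:.1f} doublings, "
      f"about one every {years / doublings:.1f} years on average")

def project_capacity_tb(now_tb: float, years_ahead: float, doubling_years: float) -> float:
    """Cartridge capacity after `years_ahead` years, assuming steady doubling (hypothetical helper)."""
    return now_tb * 2 ** (years_ahead / doubling_years)

for d in (4, 5):                           # "doubles every four to five years"
    print(f"doubling every {d} years: ~{project_capacity_tb(18, 10, d):.0f} TB "
          f"per cartridge in 10 years")
```

The two endpoints imply an average of about 2.6 years per doubling over that period, faster than the four-to-five-year rule of thumb; if the slower rule holds, the projection gives roughly 72 to 102TB per cartridge in a decade.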

Meeting ever-increasing data demands

Most of the SDCC’s tape libraries are now located in a facility with power and cooling efficiencies designed specifically for data systems. And there should be enough room for expansion to meet the ever-increasing demand of current experiments as well as those planned for the future.

“RHIC’s newest detector, sPHENIX, with a readout rate of 15,000 events/second, is projected to more than double the data we have now,” said Chou.

After RHIC has completed its science mission toward the end of 2025, it will be transformed into an Electron-Ion Collider (EIC). This new nuclear physics facility is currently in the design stage at Brookhaven and is expected to become operational in the 2030s. Around the same time, a ‘high-luminosity’ upgrade to increase collision rates at the LHC is expected to ramp up the ATLAS experiment’s data output by about ten times!

Plus, SDCC handles smaller data loads for a few other experiments, including the Belle II experiment in Japan, the Deep Underground Neutrino Experiment based at DOE’s Fermi National Accelerator Laboratory, and some experiments at the National Synchrotron Light Source II and Center for Functional Nanomaterials, 2 other DOE Office of Science user facilities at Brookhaven.

“Space wise, we probably have to grow our physical capacity to one-and-a-half or two times our current size [by adding more racks to the existing facility], while in data capacity, we are growing by a factor of 10 or more,” Novakov said.

Chou has spec’d it out: “From our calculations, our existing tape room can probably hold 1.5 or 1.6EB of data with existing old technology. One exabyte is 1,000 petabytes – a billion billion bytes,” he said. “But we know the capacity of tape technology will grow exponentially. We think with technology upgrades, we can hold 3EB without major upgrades to our facility.”
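The capacity figures can be checked against today's holdings. The sketch assumes decimal SI units (1EB = 1,000PB = 10^18 bytes, the "billion billion bytes" Chou mentions) and uses only the estimates quoted above.

```python
# Headroom implied by the capacity estimates above (decimal SI units assumed).
PB = 10**15
EB = 1_000 * PB                  # 1 EB = 1,000 PB = 10**18 bytes, "a billion billion bytes"

current_bytes = 300 * PB
capacity_estimates = {
    "existing technology, low estimate": 1.5 * EB,
    "existing technology, high estimate": 1.6 * EB,
    "with tape technology upgrades": 3.0 * EB,
}

for label, capacity in capacity_estimates.items():
    print(f"{label}: {capacity / current_bytes:.1f}x today's holdings, "
          f"{(capacity - current_bytes) / PB:,.0f} PB of headroom")
```

The 3EB estimate works out to ten times today's 300PB, in line with Novakov's expectation that data capacity will grow "by a factor of 10 or more."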

The tape data archive of Brookhaven Lab’s Scientific Data and Computing Center is very dynamic. When physicists want access to a particular dataset, or multiple sets simultaneously, a robot grabs the appropriate tape(s) and mounts the desired data to disk within seconds. Onsite processors can perform local data analyses, but even scientists located halfway around the world can tap into the data as if it were on their own desktop.

(Source: David Rahner/Brookhaven National Laboratory)

AI-enabled analysis — in quasi-real-time

Adding to the challenge is that physicists are now inclined to record more of the data collected by experiments.

“Before, much of the data was filtered by triggers that decided based on certain criteria which collision events to store and which to discard — because we couldn’t keep it all,” Klimentov noted.

But now, sPHENIX and the future EIC experiment(s) plan to stream all their data to SDCC and use AI/ML algorithms for data noise suppression. Keeping raw data will ensure that future analyses can access characteristics that selective triggers might have discarded. Scientists could also deploy AI algorithms to mine through unfiltered data to detect patterns, potentially making unforeseen discoveries.

“At the same time, as computing is getting faster and faster,” Klimentov said, “we have managed, at least for LHC, to analyze data in quasi-real time, with a delay of just 5-6 hours, which was impossible 20 years ago. The reliability of our system (and the people who operate it, who are very important) makes that possible.”

“That means we can detect anomalies in practically real time, including anomalies in accelerator and detector performance, while the accelerator and experiments are operating,” he said.

Such real-time AI-enabled analysis of data could guide corrective actions to minimize accelerator and detector downtime or to change the way those systems operate. It could also alert physicists to something in the data that’s worth a closer look for possible discoveries.

EIC’s dual data centers

Handling data for the EIC will present other challenges. Since this new facility is being built in partnership with DOE’s Thomas Jefferson National Accelerator Facility (Jefferson Lab) in Newport News, Virginia, both Jefferson Lab and Brookhaven plan to keep a full record of all the facility’s data.

“For the EIC, we are expecting to collect about 220 petabytes of data per year at nominal collision rates,” Klimentov said. “We are working with Jefferson Lab on how we will organize this data among the two Labs.”

“When you increase your archive and anomaly detection and analysis functioning, you need to also increase monitoring and alarming systems,” he said.

Because Brookhaven will be the site of the collider, he expects some data handling, such as ‘noise suppression’ and pre-filtering, to be done on site before data is shipped and archived. Some of this processing may even take place in the ‘counting house’ computing systems immediately adjacent to the EIC detector, before making its way to SDCC and Jefferson Lab.
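To put the EIC's projected 220PB per year in perspective, the sketch below converts it to an average ingest rate and totals the volume archived across both labs. The duty-cycle parameter is an assumption: real collider running is not continuous, so rates during data taking would exceed the full-year average.

```python
# Average ingest rate implied by the EIC projection (decimal units assumed).
PB = 10**15
SECONDS_PER_YEAR = 365.25 * 24 * 3600    # one calendar year

eic_bytes_per_year = 220 * PB            # quoted: nominal collision rates
full_copies = 2                          # full record kept at Brookhaven and Jefferson Lab

def average_rate_gb_s(bytes_per_year: float, duty_cycle: float = 1.0) -> float:
    """Average rate (GB/s) if a year's data arrives during a `duty_cycle`
    fraction of the calendar year (the duty cycle is an assumption)."""
    if not 0 < duty_cycle <= 1:
        raise ValueError("duty_cycle must be in (0, 1]")
    return bytes_per_year / (SECONDS_PER_YEAR * duty_cycle) / 10**9

print(f"per site, spread over a full year: {average_rate_gb_s(eic_bytes_per_year):.1f} GB/s")
print(f"per site, collected in half the year: {average_rate_gb_s(eic_bytes_per_year, 0.5):.1f} GB/s")
print(f"archived across both labs: {full_copies * eic_bytes_per_year // PB} PB per year")
```

Spread over a full calendar year, 220PB is about 7GB/s per site; if the same volume were collected in half the year, the average doubles to about 14GB/s.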

“Fortunately, we have junior-generation physicists who have started to learn about these challenges using ATLAS and RHIC data,” Klimentov said.

sPHENIX, with an expected output of 565PB of data and 2 separate data streams – one going to tape and one going to a disk cache for immediate processing to ensure all detectors are working – is giving them lots of practice.

“I see sPHENIX as a nice steppingstone for a streaming model so that these young physicists, computing professionals, and engineers can learn,” Klimentov said, “and they will eventually work for the EIC.”

SDCC operations are funded by the DOE Office of Science.

(*) The top spots for U.S. stores of scientific data go to the National Energy Research Scientific Computing Center (NERSC) and the National Oceanic and Atmospheric Administration (NOAA), each with 355PB.
