AI SSD Procurement Capacity Estimated to Exceed 45EB in 2024

NAND flash suppliers accelerate process upgrades.

This is a Press Release edited by StorageNewsletter.com on August 15, 2024 at 2:02 pm. Published on August 12, 2024, this market report was written by TrendForce Corp.

This report on enterprise SSDs finds that surging AI demand has led AI server customers to significantly increase their enterprise SSD orders over the past two quarters. To meet the growing demand for SSDs in AI applications, upstream suppliers have been accelerating process upgrades and planning 2YY-layer products slated to enter mass production in 2025.

Increased orders for enterprise SSDs from AI server customers have pushed contract prices for this category up by over 80% from 4Q23 to 3Q24. SSDs play a crucial role in AI development. In AI model training, SSDs primarily store model parameters, including the evolving weights and biases.

Another key application of SSDs is checkpointing: periodically saving AI model training progress so that training can resume from a specific point after an interruption. Because these functions depend heavily on fast data transfer and high write endurance, customers typically opt for 4TB/8TB TLC SSDs to meet the demanding requirements of the training process.
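To make the checkpointing role concrete, here is a minimal Python sketch of periodic checkpointing with resume. It is an illustration under assumptions, not how any particular training framework or SSD vendor implements it: the JSON file format, the function names (`save_checkpoint`, `load_checkpoint`, `train`), and the toy `weight`/`bias` parameters are all hypothetical. Each save goes to a temporary file that is flushed, fsync'd, and then renamed over the previous checkpoint, so an interruption mid-write leaves the last good checkpoint intact. This write-heavy, durability-sensitive pattern is the kind of workload the paragraph above attributes to training SSDs.

```python
import json
import os
import tempfile


def save_checkpoint(path: str, step: int, params: dict) -> None:
    """Hypothetical helper: write training state atomically (temp file, fsync, rename)."""
    directory = os.path.dirname(os.path.abspath(path))
    # Create the temp file in the same directory so os.replace stays on one filesystem.
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump({"step": step, "params": params}, f)
        f.flush()
        os.fsync(f.fileno())  # force the data to stable storage before the rename
    os.replace(tmp_path, path)  # swap in the new checkpoint in one step


def load_checkpoint(path: str):
    """Return (step, params) from the last checkpoint, or a fresh start if none exists."""
    if not os.path.exists(path):
        return 0, {"weight": 0.0, "bias": 0.0}
    with open(path) as f:
        state = json.load(f)
    return state["step"], state["params"]


def train(path: str, total_steps: int, checkpoint_every: int, fail_at=None):
    """Toy training loop that resumes from the last checkpoint.

    fail_at simulates an interruption (e.g. a node failure) at a given step.
    """
    step, params = load_checkpoint(path)
    while step < total_steps:
        if fail_at is not None and step == fail_at:
            raise RuntimeError(f"simulated interruption at step {step}")
        # Toy update standing in for a real optimizer step.
        params["weight"] += 0.1
        params["bias"] += 0.01
        step += 1
        if step % checkpoint_every == 0:
            save_checkpoint(path, step, params)
    return step, params
```

For example, a run with `checkpoint_every=5` that is interrupted at step 7 resumes from step 5 on the next call to `train`, losing only two steps of work. Checkpointing more often shortens the lost work but increases the volume written to the SSD.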

TrendForce points out that SSDs in AI inference servers help adjust and optimize AI models during the inference process; notably, SSDs can update data in real time to fine-tune inference outcomes. AI inference primarily serves retrieval-augmented generation (RAG) and large language model (LLM) services, and SSDs store the reference documents and knowledge bases these services draw on to generate more informative responses. Additionally, as more generated content takes the form of videos or images, the required storage capacity grows, making high-capacity SSDs, such as TLC/QLC drives of 16TB or larger, the preferred choice for AI inference applications.

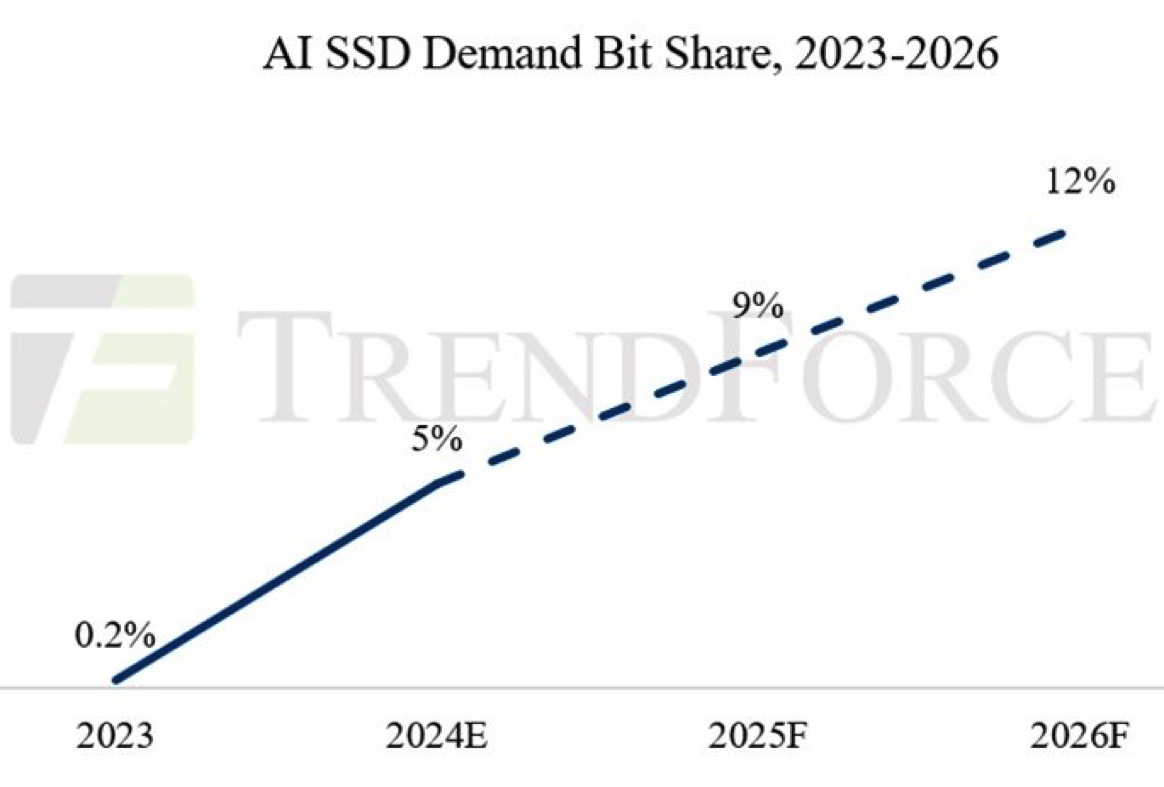

Growth rate of AI SSD demand exceeds 60% as suppliers accelerate development of high-capacity products

In the AI server SSD market, demand for products larger than 16TB has risen significantly since 2Q24. With the arrival of NVIDIA's H100, H20, and H200 series products, customers have begun to further boost their orders for 4TB and 8TB TLC enterprise SSDs. TrendForce estimates that AI-related SSD procurement capacity will exceed 45EB in 2024. Over the next few years, AI servers are expected to drive average annual growth of over 60% in SSD demand, with AI SSD demand potentially rising from 5% of total NAND flash consumption in 2024 to 9% in 2025.
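As a rough illustration of what sustained growth at this rate implies, the Python sketch below compounds a base value at a constant annual rate. It treats the 45EB estimate for 2024 as a baseline and applies exactly 60% per year; both are simplifying assumptions (the report gives "over 60%" as an average over several years), so the output is plain arithmetic, not a TrendForce forecast. The function name `project` is hypothetical.

```python
def project(base: float, annual_growth: float, years: int) -> list[float]:
    """Compound a base value forward at a constant annual growth rate.

    Returns [base, year 1, ..., year N]; annual_growth=0.60 means +60% per year.
    """
    values = [base]
    for _ in range(years):
        values.append(values[-1] * (1 + annual_growth))
    return values


# Illustrative only: 45EB baseline, a flat 60% rate, three years out.
for year_offset, eb in enumerate(project(45.0, 0.60, 3)):
    print(f"year +{year_offset}: {eb:.1f} EB")
```

Under these assumptions the values are roughly 45, 72, 115.2, and 184.3 EB, about a 4.1x increase over three years. Separately, AI's share of NAND flash consumption rising from 5% to 9% is a 1.8x increase in its relative weight.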

AI inference servers will continue to adopt high-capacity SSD products. Suppliers have already begun accelerating process upgrades, aiming for mass production of 2YY/3XX-layer products starting in 1Q25 and, eventually, 120TB enterprise SSD products.
