Lenovo to Deliver Enterprise AI Compute for NetApp AIPod through Collaboration with NetApp and Nvidia

Solution that helps customers operationalize their enterprise AI workloads to transform their business

This is a Press Release edited by StorageNewsletter.com on May 27, 2024 at 2:01 pm By Kamran Amini, VP and GM, server, storage and software defined infrastructure, Lenovo ISG.

Enabling AI infrastructure

AI has moved beyond being a technological buzzword to become a key driver in turning valuable data into actionable insights, delivering real business value and competitive advantage for customers.

With the advent of ChatGPT in late 2022 and the ensuing development of large language models (LLMs), AI has gained traction within enterprises, enabling new use cases, applications, and workloads across many industries. AI has moved beyond just training LLMs in the cloud to become more hybrid in nature, driving the need for running private AI use cases leveraging on-prem AI infrastructure for enterprise customers. These private AI use cases require new converged infrastructure solutions targeted at enterprise customers to simplify and accelerate AI infrastructure implementations at scale for on-premises solutions.

However, enterprise customers face the following types of challenges, which can limit on-prem AI deployments:

- A lack of data scientists who can build, deploy, and manage LLMs.
- Datacenter power and cooling limitations. Enterprise datacenters are typically limited to 8 to 10 kW per physical rack of IT equipment, while a single GPU server running typical LLM/generative AI (GenAI) workloads can consume over 10 kW. This limits the utilization of datacenter real estate.
- Difficulty configuring the right AI solution for customers who lack the skilled resources to determine how many GPU accelerators and how much storage and networking they need to support training, retraining, and inference on their data.
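The power constraint above comes down to simple arithmetic, sketched below in Python. The function names and the example figures (a 10.5 kW server, a 4 kW server, an 8-server deployment) are hypothetical and chosen only for illustration; they are not measured values for any Lenovo, NetApp, or NVIDIA product.

```python
import math


def servers_per_rack(rack_budget_kw: float, server_draw_kw: float) -> int:
    """Return how many whole servers fit within one rack's power budget."""
    if rack_budget_kw < 0 or server_draw_kw <= 0:
        raise ValueError("rack budget must be >= 0 and server draw must be > 0")
    return math.floor(rack_budget_kw / server_draw_kw)


def racks_needed(num_servers: int, rack_budget_kw: float, server_draw_kw: float) -> int:
    """Return the number of racks required to power num_servers."""
    per_rack = servers_per_rack(rack_budget_kw, server_draw_kw)
    if per_rack == 0:
        # A single server already exceeds what the rack can supply.
        raise ValueError("a single server exceeds the rack power budget")
    return math.ceil(num_servers / per_rack)


if __name__ == "__main__":
    # Hypothetical: a 10 kW rack cannot host even one server drawing 10.5 kW.
    print(servers_per_rack(10.0, 10.5))
    # Hypothetical: servers drawing 4 kW each fit two per 10 kW rack,
    # so an 8-server deployment occupies four racks.
    print(servers_per_rack(10.0, 4.0), racks_needed(8, 10.0, 4.0))
```

Under these assumed numbers, most of each rack's physical space stays empty because power, not space, is the binding limit.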

Lenovo collaboration with NetApp and NVIDIA

Lenovo is focused on simplifying its customers’ AI journey and providing comprehensive solutions that unleash the power of AI to drive intelligent transformation in every aspect of our lives and every industry – delivering AI for All. It does this by delivering customer-valued innovation and partnering with industry leaders – such as NetApp, Inc. and NVIDIA Corp. – to bring you the right set of solutions for your enterprise AI deployments.

Lenovo is further expanding its strategic partnership with NetApp by delivering reliable and secure enterprise AI compute in the NetApp AIPod solution. AIPod is an integrated solution designed to simplify the planning, sizing, deployment, and management of tuning and inferencing enterprise AI models.

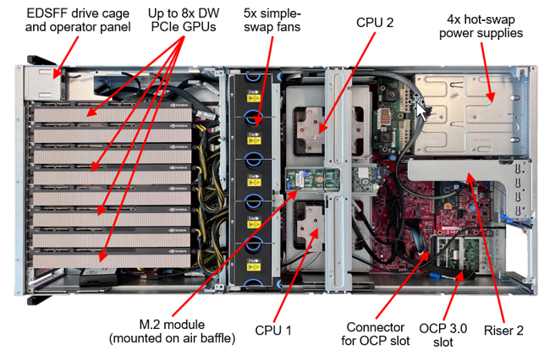

As announced on May 14, 2024, the Lenovo ThinkSystem SR675 V3 servers are foundational to this solution and deliver the powerful computing resources needed for accelerating the complex GenAI computations used in today’s enterprise AI models.

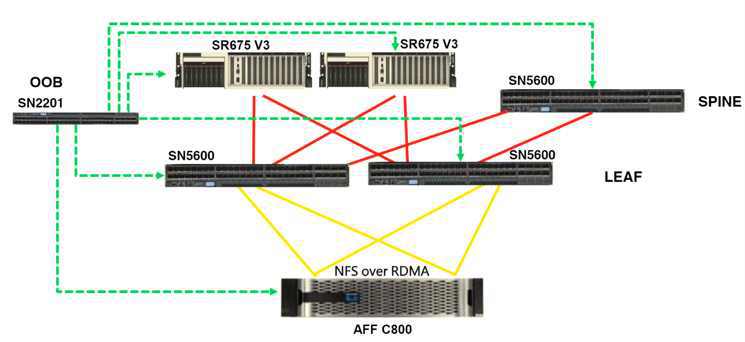

Figure 1. NetApp AIPod solution featuring ThinkSystem SR675 V3 servers

AI has emerged as one of the most transformative technologies in the industry, with the potential to unlock faster and better data insights to help customers transform their business operations. In the past few years, the industry has been focused on developing and honing AI models in the cloud, leveraging massive investments in technology infrastructure. As these AI models mature in the cloud, the logical evolution is to bring them into private AI deployments so enterprise customers can turn their own business data into valuable business insights and actions.

Given the continued growth in data and the large datasets common in most enterprise deployments, data gravity and data privacy trends have driven a higher demand for an on-premises and hybrid approach to AI. This hybrid AI approach enables customers to use their own data when running AI models to deliver the optimal, unique, and tailored results for their business operations. The same Lenovo infrastructure and technology that helped mature and hone these AI models in the cloud is the foundation for our private AI enterprise infrastructure deployments. With our AI portfolio and services, such as AI Discover Center of Excellence and AI Innovators, Lenovo has the proven AI expertise, solutions, services, and support to turn your organization’s data into actionable insights.

Customer data is the most valuable part of an organization’s AI strategy, and our partner NetApp is the storage platform for most enterprise customers’ unstructured data. The Lenovo strategy to bring AI to customers’ data makes NetApp the partner for AI solutions. We are expanding on our 5-year partnership to deliver new innovation and efficiency for enterprise customers’ AI deployment.

Lenovo integrated AIPod solution

The AIPod solution is a new validated, integrated solution composed of ThinkSystem SR675 V3 servers, NetApp C800 high performance storage, NVIDIA L40S GPUs, the NVIDIA Spectrum-X networking platform, and the NVIDIA AI Enterprise software platform. This is a solution to host AI workloads, including the targeted workloads of training and inferencing for AIPod. Lenovo works closely with NVIDIA to deploy NVIDIA GPUs and DPUs and NVIDIA AI Enterprise, which includes NVIDIA NIM and other microservices, throughout the Lenovo AI infrastructure portfolio.

The collaboration with NetApp and NVIDIA enables Lenovo to deliver the first integrated AI solution in the market with retrieval augmented generation (RAG) to support chatbot, knowledge management, and object recognition use cases. Its critical benefits include:

- Data management simplicity: The AIPod with Lenovo addresses the complexities of data management by providing tools and features that simplify infrastructure management, enhance automation, and ensure scalability and data protection.
- High performance: Combining the raw power of ThinkSystem servers with advanced NVIDIA L40S GPUs and NVIDIA Spectrum-X networking, the AIPod with Lenovo for NVIDIA OVX delivers the computational strength required for the most intensive AI tasks, supported by the unparalleled speed and efficiency of NetApp storage systems.
- Integrated solution: The AIPod with Lenovo for NVIDIA OVX integrates NVIDIA AI Enterprise to streamline the deployment and scaling of AI workloads, while NVIDIA NeMo allows organizations to customize, build, and deploy AI models with ease, leveraging pre-trained models for quicker deployment and NVIDIA TensorRT software for accelerating and optimizing inference performance.
- Trusted secure data: Security is a foundational element of the AIPod with Lenovo, ensuring that data is not only rapid and accessible but also rigorously protected.

Figure 2. Lenovo ThinkSystem SR675 V3 server

The company collaborates with NetApp and NVIDIA to deliver a simplified, efficient, and robust solution that helps customers operationalize their enterprise AI workloads to transform their business. The reference architecture with a detailed BoM for this solution will be published later in June 2024 and will be available from the network of channel partners for Lenovo, NetApp, and NVIDIA.

Resources:

Artificial Intelligence

ThinkSystem SR675 V3 Server
