Buyer’s Guide to AI/ML Data Infrastructure

Choosing right storage solution for data-driven enterprise

This is a Press Release edited by StorageNewsletter.com on December 27, 2023 at 2:01 pm

This buyer’s guide was written by WekaIO, Inc.

There are several opinions on the topic of what makes a storage solution modern. Here we look at the intersections of these opinions.

Anatomy of modern solution

One of the cornerstones of scientific discovery is HPC, and while the systems making up a high-performance computing infrastructure have evolved radically due to technical innovation, the storage solutions that feed them data have experienced little change over the last 25-35 years.

The legacy, old-world storage platforms were designed for Ph.D.s, by Ph.D.s, and their complexities, along with a myopic performance model, have proven difficult at best to manage. Today, the modern HPC platform, driven by powerful GPUs, is becoming mainstream, having been deployed across broad and diverse industries such as M&E, fintech, life sciences, and high-performance data analytics. However, some organizations are still using these legacy storage solutions from the previous century to serve up data to the platform. Achieving results and outcomes should be the primary purpose of using an HPC platform, and that is why a modern storage solution for HPC is required. If you are tasked with identifying a modern solution for your environment, it is important to take into consideration not only your environment today, which will soon be in your immediate past, but also what your environment will consist of in the future. In this section, we provide a list of key characteristics that make up the anatomy of the modern storage solution. Use this list to weigh key decision factors when choosing the right solution today for tomorrow’s workloads, ensuring you have truly selected a modern storage solution.

Top 10 key characteristics of modern storage solution

1. Cloud 1st Enabled; Software-Defined

Modern solutions are software-defined and equally at home in the cloud, on-premises, or in a hybrid model without limiting features or capabilities.

2. Intelligent Software

A modern solution will minimize the impact on administration and configuration, while intelligently managing the placement of data across storage tiers.

3. Flexible Choices in Cloud and On-Premises

A modern solution offers customers freedom to choose the hardware and components for an on-premises environment. Similarly, when investing in cloud, customers should have the choice of hyperscalers.

4. Unified Global Namespace

Modern solutions have overcome the limits of old hierarchical storage by creating a single namespace to access all data, irrespective of the tier in which it is located. This enhances the user experience and eliminates costly cycles required for data recall or data copies.

5. Deployment Independence

Modern solutions allow customers to take full advantage of the cloud and/or new technologies gaining mainstream adoption, without being locked in to either old technology that has reached end of life or an unproven technology.

6. High Performance and Low Latency: Speed

Today’s GPU-driven applications, such as deep learning and AI/ML, require a considerable data pipeline to take full advantage of these resources. A modern solution will deliver the highest throughput at the lowest latency without having to be reconfigured based on the dataset variety or characteristics.

7. Built-in Data Protection

Many storage solutions offer snapshots as an option to enhance data protection. Modern solutions provide this feature and integrate to cloud resources for rapid recovery.

8. User Experience: Simplicity

Maximizing your employee resources is one key to your success. Your experience with a modern storage solution should be consistent and repeatable no matter where the solution exists, on-premises, the cloud, or hybrid.

9. Exabyte Capacities: Scale

With data growth continuing to skyrocket, your storage solution should scale with it, based on your unique and specific needs. Modern solutions manage trillions of files and directories and multiple exabytes of storage.

10. Multi-protocol and cross-protocol support

Multi-protocol support has been around for years, but modern solutions offer not only multiple protocols for access but also cross-protocol support for simultaneous access to data via any protocol.
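One way to weigh the ten characteristics above against each other is a simple weighted scoring matrix. The sketch below is illustrative only: the weights, the candidate names, and the 0-5 ratings are hypothetical placeholders rather than figures from this guide, and the function names `weighted_score` and `rank` are invented for the example.

```python
"""Weighted scoring sketch for comparing storage candidates.

All weights, candidate names, and ratings below are hypothetical placeholders.
"""

# Importance of each of the ten characteristics (1 = minor, 5 = critical).
# These weights are assumptions; set them to reflect your own priorities.
WEIGHTS = {
    "cloud_first_software_defined": 4,
    "intelligent_software": 3,
    "flexible_choices": 3,
    "unified_global_namespace": 4,
    "deployment_independence": 3,
    "performance_low_latency": 5,
    "built_in_data_protection": 4,
    "simplicity": 4,
    "exabyte_scale": 3,
    "multi_and_cross_protocol": 2,
}


def weighted_score(ratings: dict[str, int], weights: dict[str, int] = WEIGHTS) -> float:
    """Return the weighted average of 0-5 ratings across all characteristics."""
    missing = sorted(set(weights) - set(ratings))
    if missing:
        raise ValueError(f"missing ratings for: {', '.join(missing)}")
    total_weight = sum(weights.values())
    return sum(weights[name] * ratings[name] for name in weights) / total_weight


def rank(candidates: dict[str, dict[str, int]]) -> list[tuple[str, float]]:
    """Sort candidates from highest to lowest weighted score."""
    scored = [(name, weighted_score(r)) for name, r in candidates.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical ratings from an internal evaluation (0 = absent, 5 = excellent).
    candidates = {
        "candidate_a": {name: 4 for name in WEIGHTS},
        "candidate_b": {**{name: 3 for name in WEIGHTS}, "performance_low_latency": 5},
    }
    for name, score in rank(candidates):
        print(f"{name}: {score:.2f}")
```

Keeping the weights separate from the ratings lets different teams (for example, infrastructure and data science) apply their own priorities to the same evaluation data.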

Data-Driven Enterprise

According to Accenture, “the amount of data generated by modern businesses is scarcely conceivable. And that amount will only grow to increasingly monumental proportions in the near future.”

They go on to project that as a global society, we will be producing data at a rate of over 450EB per day by 2025. This is why Accenture urges organizations to capitalize on the rise of the data-driven enterprise.

However, over the years, legacy vendors have built architectures to deal with each workload requirement separately. One for SAN, another for NAS, and yet another for object storage.

To top it off, it is not uncommon to find separate architectures built for capacity and performance. These days most enterprises have a hybrid or multi-cloud strategy, which can introduce yet another layer of complexities, leading to complicated management due to incompatible tools and processes from several different architectures.

Additionally, as data grows, it becomes increasingly difficult to manage across these multiple siloed architectures and to provide meaningful and timely access to your users without having to maintain multiple copies of your data. In the end, there is a trade-off between simplicity, speed, scale, and sustainability, and the legacy architectures are quite simply ill-equipped to serve the data-driven enterprise.

NAS is simple to use and manage, but not performant enough for modern applications. SAN or parallel file systems provide the performance but are complex and expensive. Object storage provides the scale but is not performant enough to be useful. Accenture says that “84% of businesses do not have the data platform they need” to truly elevate to the level of a data-driven enterprise.

This in many ways exacerbates a related problem: 81% of businesses report lacking a solid data strategy for using the full potential of their data.

There is a better way.

How to Spot a “Vintage Vendor” in Storage?

Let’s face it, if you had a Sony Walkman in the 80s playing your favorite cassette mix tape, you were considered one of the cool kids. Today, if you showed up with that on the street, folks might wonder what museum you are advertising. Cassettes were cool for their time, but they just don’t cut it for today’s needs.

So, to help you spot those “vintage vendors”, we have identified 6 aspects outlined below, all of which make a vendor ineffective for modern data storage requirements:

1. Selling Systems Built with Proprietary Hardware: If you are still using a storage solution that is available only in a customized proprietary hardware form factor from your storage vendor, it’s a clear indication you’re not using a solution based on current design principles, and you’re buying legacy storage.

2. Hybrid Cloud is Limited: If your current storage vendor does not support the same specifications for features, CLI, performance, and scaling both on-premises and in the cloud, these are clear indications that you’re using a legacy vendor.

3. Limited Scale and Support for Mixed Workloads: When considering your data center architecture decisions, if you must deploy more storage systems than functionally required because of the physical separation of resources, you’re using a legacy storage vendor. If you must make sizing decisions that lock you in for the life of that system without the ability to expand or shrink as needed, you’re also using a legacy storage vendor.

4. Limited Aggregate Performance & Single-Client Performance: If the storage system you’re using now has the same single-client performance limitations that existed about a decade ago, you’re using a legacy storage vendor. If the system you’re using now has an aggregate throughput or IO/s number that has not increased dramatically when compared with numbers a decade ago, you’re using a legacy storage vendor.

5. Data Backup and DR Are Performed by Others, or Are Afterthoughts: If your primary storage product, your backup product, and your cloud product are different entities, you are dealing with a legacy storage vendor. If your storage vendor forces you to treat backup and DR differently and store the data twice, you’re using a legacy vendor. If your storage vendor forces you to go to a third-party solution to get a sound backup or archive strategy, you’re also using a legacy vendor. If the performance of the storage system drops significantly during the rebuild, you’re using a legacy vendor. If you are still rebuilding blocks and not files, you are using a legacy vendor. If you don’t have end-to-end data integrity protection for the client (each block has a checksum that is calculated at the client and verified on each step of the way to ensure no bit-rot), you’re using a legacy storage vendor.

6. You Need to Make Tradeoffs: If your storage vendor has many products, each with slightly different tradeoffs, and you must use a different mix of them as solutions to different projects, you’re using a legacy vendor (for example, different utilities for taking snapshots, taking snapshots for backup, taking snapshots for bursting, etc.).
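The end-to-end data integrity idea mentioned in point 5 (a checksum calculated per block at the client and re-verified at each step) can be illustrated with a minimal sketch. It makes several assumptions that do not come from this guide: CRC32 from Python's standard `zlib` module stands in for whatever checksum a real product uses, the 4,096-byte block size is arbitrary, and the helper names `client_checksums`, `verify_hop`, and `flip_bit` are hypothetical.

```python
import zlib

BLOCK_SIZE = 4096  # assumed block size, for illustration only


def client_checksums(data: bytes, block_size: int = BLOCK_SIZE) -> list[tuple[bytes, int]]:
    """Split data into blocks and attach a CRC32 computed at the client."""
    return [
        (data[i:i + block_size], zlib.crc32(data[i:i + block_size]))
        for i in range(0, len(data), block_size)
    ]


def verify_hop(blocks: list[tuple[bytes, int]]) -> list[int]:
    """Return the indexes of blocks whose payload no longer matches its checksum.

    Each hop (network, storage node, device) would run this same check.
    """
    return [idx for idx, (payload, crc) in enumerate(blocks) if zlib.crc32(payload) != crc]


def flip_bit(block: bytes, bit: int) -> bytes:
    """Simulate bit-rot by flipping a single bit in a block."""
    corrupted = bytearray(block)
    corrupted[bit // 8] ^= 1 << (bit % 8)
    return bytes(corrupted)


if __name__ == "__main__":
    payload = bytes(range(256)) * 40            # 10,240 bytes, so three blocks
    blocks = client_checksums(payload)
    print("bad blocks before corruption:", verify_hop(blocks))

    block, crc = blocks[1]
    blocks[1] = (flip_bit(block, 5), crc)       # corrupt the payload, keep the old checksum
    print("bad blocks after corruption:", verify_hop(blocks))
```

Because the checksum travels with the block from the client onward, corruption introduced at any later step is caught by the next verification rather than being silently stored.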

What Are Modern Workloads?

Technology has changed dramatically in the last 20 years. High-speed connectivity has become a commodity, and computing now works at mind-blowing speeds. Just think about how quickly an autonomous vehicle processes and reacts to potential accident situations–it’s done in milliseconds. Data is being generated all around us: street cameras, shopping centers, office buildings, your car, your phone, your watch, your family’s home automation and security systems, and more. Just as our personal and consumer data speeds and quantities have changed, so has the enterprise workload changed – from client-server technologies and relational databases to machine and deep learning processing by mini-edge processors or supercomputers in the core. This leads to new workloads, use cases, and abilities to accomplish previously impossible outcomes.

Here are some examples of modern workloads and use cases:

Life Sciences

Ability to sequence genomes in record time, electron microscopy, and AI in image processing.

Research

Drug discovery and image processing in pharmaceutical development and research.

Financial Services

New trading algorithms, modeling, and simulations.

Automotive

Autonomous driving and training of models that crunch enormous amounts of data.

M&E

Large file processing, rendering large files, 8K streaming video post-production, etc.

Today’s workloads, whether traditional or containerized, demand a new class of storage that delivers the performance, manageability, and scalability required to obtain or sustain an organization’s competitive advantage.

Key Tenets of Modern Storage Platform

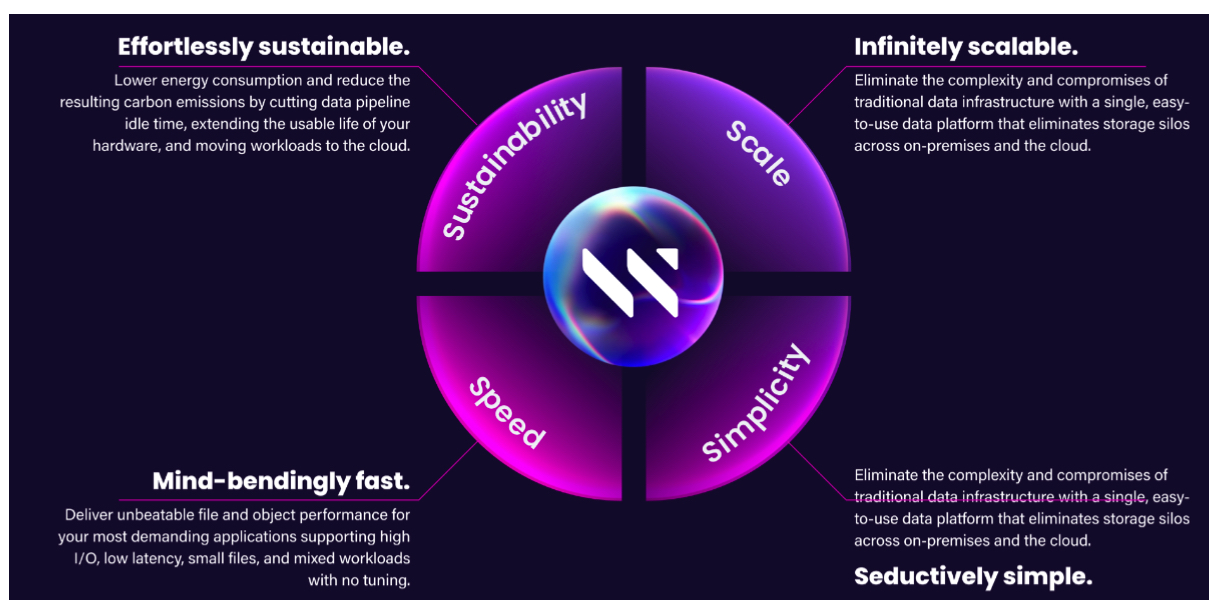

Simplicity

Consolidate all your workloads on a single platform irrespective of the data profile. For years, it was believed that to get ultra-high performance you needed to sacrifice simplicity. WEKA has cracked the code, and our modern file system, WekaFS, is different, combining top-notch performance with simple and reliable management. No special tuning or reconfiguring is required even for mixed file sizes and data profiles. WEKA makes it easy to deploy, configure, and manage storage on-premises or in the cloud.

Speed

Single client performance up to 162GB/s throughput and 2 million IO/s with proven cloud performance up to 2TB/s. Deliver the performance required to power your most demanding applications and workloads to dramatically accelerate time to insights. WEKA’s modern architecture is built from the ground up for flash and optimized for NVMe and cloud to power breakthrough innovation.

Scale

WEKA enables high-performance computing at a massive scale without breaking the bank. Modern workloads and today’s hybrid cloud environments have dramatically changed the needs of the data center. In addition to offering linear scaling with a scale-out file system, WEKA redefines scalability in the cloud era and allows customers to scale in every dimension possible.

Sustainability

Evidence suggests that today, data centers account for roughly 3% of global energy consumption; left unchecked, that could rise to 8% by 2030. WEKA delivers 10-50x increased GPU stack efficiency through its high-speed data architecture reducing annual GPU operating energy. It also can shrink data infrastructure footprints by 4-7x through data copy reduction and cloud elasticity. WEKA Data Platform saves over 260 tons of CO2e per petabyte over the typical 3-5 year life cycle compared to traditional data architectures.

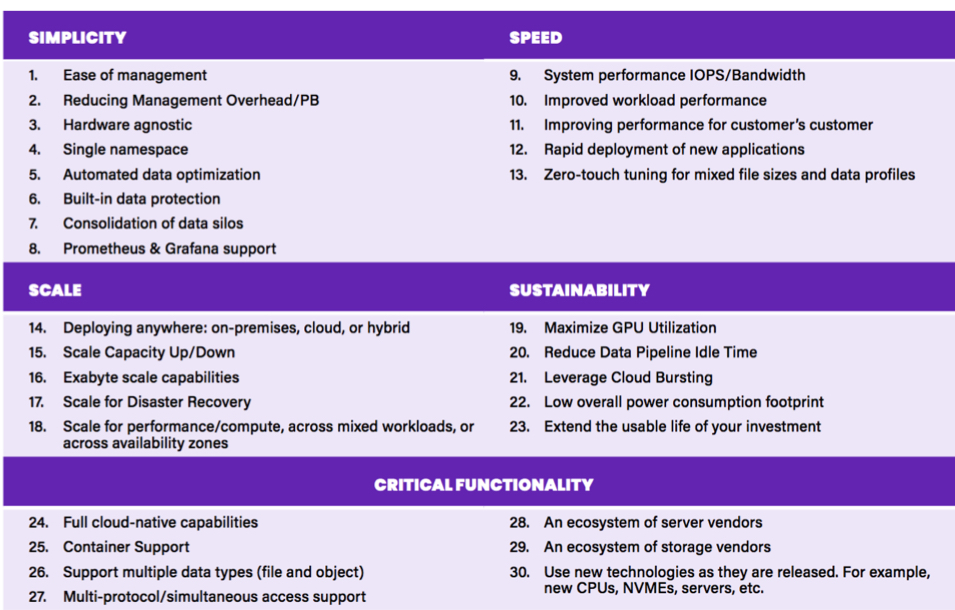

30 Points of Consideration When Choosing Modern Storage

Technical Capabilities Overview

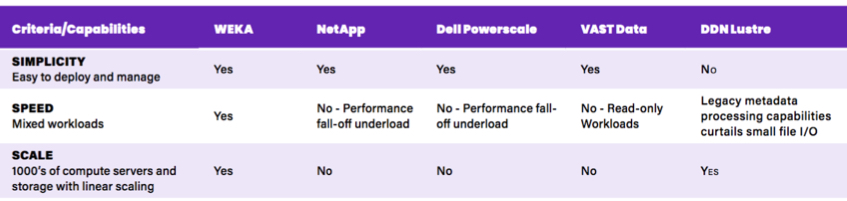

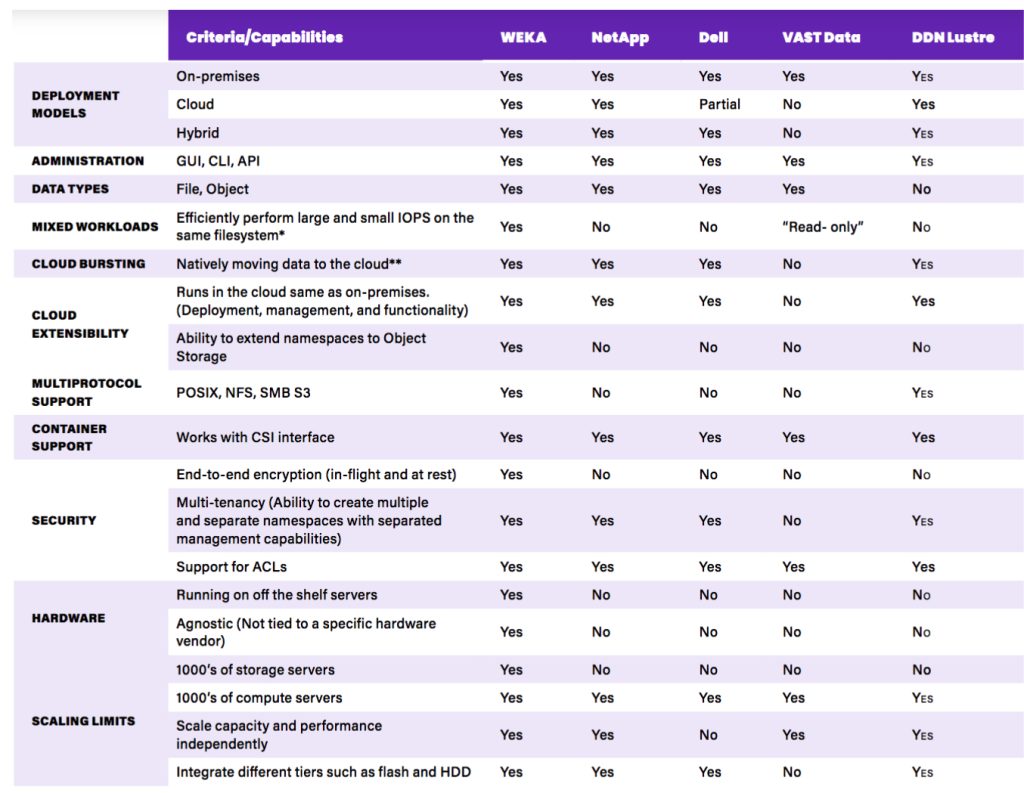

This section provides a detailed comparison of NetApp, Dell, VAST Data, DDN Lustre, and WEKA.

Comparison of Simplicity, Speed, and Scale

Comparison of Capabilities

* Same file system can accommodate maximum throughput and IOPS without a configuration requirement.

** Ability to move data between different on-premises and public cloud environments without the need for third-party data mover applications or backup applications.

Companies are not optimizing only for bandwidth, which is what Lustre and GPFS have historically done. They’re delivering on IO/s and metadata performance, the things that matter when you are doing anything that scales out that is not a single large-scale simulation.

WEKA – Modern Storage for Modern Workloads

Today’s workloads demand a new class of storage that delivers the performance, manageability, and scalability required to obtain or sustain an organization’s competitive advantage while at the same time aligning with your corporate strategy for sustainability and reducing carbon emissions. The WEKA Data Platform is designed and optimized for data-intensive modern workloads. It is ideally engineered to take storage performance and data availability to the next level as performance demands intensify in AI, ML, and deep learning. Its architecture and performance are designed to maximize your usage of GPUs across cloud, on-premises, or hybrid deployments, providing data management capabilities that can accelerate time to insight to EPOCH by as much as 80x.
