Peak:AIO Sails Past Leading Benchmarks, Outshining AI and HPC Storage Giants

Approach to AI storage demonstrates RDMA and GPUDirect performance from a single off-the-shelf, cost-effective server; system delivered outstanding 119GB/s, harnessing full potential of 16 installed drives

This is a Press Release edited by StorageNewsletter.com on December 22, 2023 at 2:00 pm

Peak:AIO announced a white paper detailing how a global tier-one vendor recently assessed a single Peak:AIO AI data server and found it to surpass the recently published benchmarks of AI GPUDirect storage vendors.

This result validates the company’s vision that AI is so fundamentally different that it needs not just new storage, but a new type of solution.

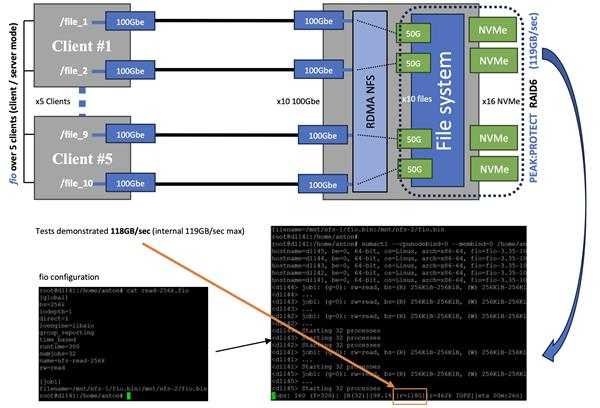

RDMA NFS tests highlighting 118GB/sec

The testing employed a single latest-generation server of a top-tier vendor, equipped with 16 NVMe gen 4 drives. The focus of the initial tests was on Peak:Protect, the firm’s parallelized RAID technology that serves as the backbone of the company’s AI Data Server. The tests validated the company’s claim that its implementation of RAID-6 could achieve read speeds comparable to RAID-0 and scale with each drive. The system delivered 119GB/s, harnessing the potential of the 16 installed drives. This established the reference point for further tests.

RAID performance in isolation only lays a foundation: it creates a pool of potential performance that must pass through various layers before becoming usable. Peak:AIO excels in refining these layers, ensuring that the full RAID performance translates to real-world user applications, without the need for any proprietary tools or drivers.

Having established the maximum potential performance (119GB/s), the test lab configured a typical 5-client solution using the company’s RDMA-based implementation of NFS. Collectively, the 5 clients sustained a total throughput of 118GB/s. This result marks the fastest single-node storage server to date and indicates how finely tuned the Peak:AIO layers are, adding so little friction between RAID and user.
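The arithmetic behind these figures can be sketched in a few lines. This is only an illustration that takes the quoted 119GB/s and 118GB/s at face value; the variable and function names are hypothetical, and the even split across drives and clients is an assumption, since the release reports totals only.

```python
# Figures quoted in the release, in GB/s (taken at face value).
RAID_READ_GBPS = 119.0  # local RAID-6 read throughput
NFS_RDMA_GBPS = 118.0   # aggregate throughput over NFS/RDMA
DRIVES = 16
CLIENTS = 5


def per_unit(total: float, count: int) -> float:
    """Average share of a total, assuming an even split (an assumption)."""
    return total / count


def retained_fraction(delivered: float, baseline: float) -> float:
    """Fraction of the baseline throughput that reaches the clients."""
    return delivered / baseline


per_drive = per_unit(RAID_READ_GBPS, DRIVES)
per_client = per_unit(NFS_RDMA_GBPS, CLIENTS)
retained = retained_fraction(NFS_RDMA_GBPS, RAID_READ_GBPS)

print(f"Average per drive:  {per_drive:.2f} GB/s")
print(f"Average per client: {per_client:.2f} GB/s")
print(f"RAID throughput retained over NFS/RDMA: {retained:.1%}")
```

Under these assumptions, roughly 99% of the local RAID throughput reaches the clients, which is the "so little friction" the release refers to.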

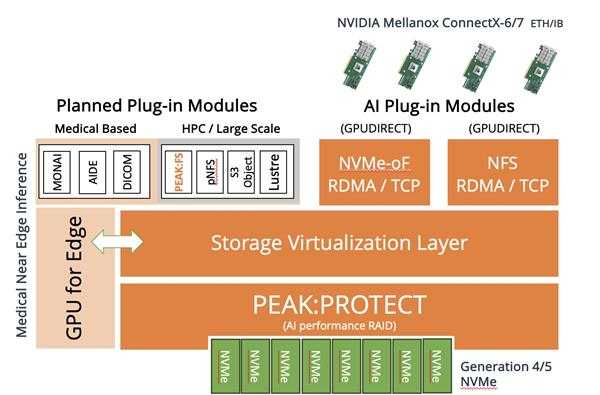

Peak:AIO Software Layers

GPUDirect

Connected to a single Nvidia DGX A100 via 5x200GbE/HDR ports, standard performance and GPUDirect tests were run, both showing the same 118GB/s. However, consecutive deep learning jobs run by Eyal Lemberger, CTO, showed GPUDirect provided a 20%-30% time improvement over non-GPUDirect workloads, demonstrating its real-life value as opposed to simple benchmark results.
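For context, the 118GB/s figure can be compared with the nominal capacity of the five 200Gb/s links. The sketch below is a back-of-the-envelope check, not a measurement: it assumes decimal units (1GB/s = 8Gb/s), ignores protocol overhead, and uses hypothetical names throughout.

```python
# Nominal link figures from the release.
PORTS = 5
GBIT_PER_PORT = 200          # 200GbE / HDR, in Gb/s
MEASURED_GBYTES = 118.0      # throughput quoted in the release, in GB/s


def line_rate_gbytes(ports: int, gbit_per_port: int) -> float:
    """Aggregate nominal line rate in GB/s (8 bits per byte, no overhead)."""
    return ports * gbit_per_port / 8


line_rate = line_rate_gbytes(PORTS, GBIT_PER_PORT)
utilisation = MEASURED_GBYTES / line_rate

print(f"Nominal line rate: {line_rate:.0f} GB/s")
print(f"Share of line rate used: {utilisation:.1%}")
```

On these assumptions the links top out at 125GB/s, so the reported throughput sits at about 94% of the nominal network capacity.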

“Until recently, the IT world has developed in incremental steps based on predictable roadmaps,” said Mark Klarzynski, CEO. “AI has thoroughly disrupted this traditional approach. After observing storage vendors attempting to adapt to the evolving AI market by merely rebranding existing solutions as AI-specific, akin to trying to fit a square peg in a round hole, I believed that AI required and deserved a reset, a fresh perspective and new thinking to match its entirely new use of technology. AI is changing futures; it deserves more than a force-fit square peg. Peak:AIO’s view is that AI has unique demands and requires an entirely new approach to storage.”

The company’s previous result of 80GB/s from a software-driven standard server was groundbreaking, until its performance engineer tested the parameters of the solution to see if it could achieve even greater performance. The load on the CPU proved not to be significant, leaving room for growth with gen 5 NVMe drives coupled with ConnectX-7 cards once they are more widely available.

The company has strategically streamlined its AI software to take advantage of the full power of modern servers and CPUs as they evolve. It transforms a standard NVMe server into a blisteringly fast AI Data Server, delivering the performance of a $1 million-plus HPC solution while maintaining the power efficiency of a single server and costing approximately $200/TB. The firm enables clients to direct their efforts and resources toward innovation instead of grappling with complex storage management.

The company was recently invited into the EMC3 consortium formed by Los Alamos National Laboratory to investigate ultra-scale computing architectures.
