Determining Full Cost and Sustainability Impact of Flash Vs. Spinning Disk

HDDs will effectively be dead by 2028, displaced by much denser flash device technology, not COTS SSDs.

By Philippe Nicolas | September 22, 2023 at 2:03 pm Blog post from Eric Burgener, director, technical strategy, Pure Storage, Inc.


Lately there’s been a lot of discussion in the industry purporting to compare the cost and “eco-friendliness” of HDDs and SSDs using metrics that frankly are not relevant for enterprises looking to deploy storage systems at scale. In this blog I’d like to point out how these discussions are doing a disservice to enterprises looking to deploy the most cost-effective and sustainable storage infrastructure and suggest the relevant metrics.

I’m all for using performance and storage density, energy and floorspace consumption, and TCO metrics to compare enterprise storage solutions, but you can’t get a relevant analysis for an enterprise by comparing a single HDD to a single SSD. As an IT manager building an enterprise-class storage system, you aren’t buying a single storage device – you’re buying a system that will have anywhere from tens to hundreds (or even thousands) of storage devices. The relevant comparison needs to be made at the system level. An enterprise should define its performance and storage goals, build up the systems necessary to meet those goals, and compare the size, energy and floorspace consumption, embodied carbon and TCO of those systems.

In doing this, you’ll need to understand how raw capacity maps to effective capacity in the systems you’re looking at and with the data types in your workloads. This means you will have to account for the impact of things like formatting, RAID or erasure coding, compression, de-dupe, thin provisioning, and effective capacity utilization at the device level (i.e. how full can I fill my drives and still meet my performance requirements) where they apply. Using raw capacity and its associated $/GB cost is irrelevant unless you already know how that converts to effective capacity with HDDs vs SSDs. All-HDD systems will generally need more devices and raw capacity than systems that use SSDs to meet the same performance and storage capacity goals.
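To make the raw-to-effective mapping concrete, here is a minimal Python sketch. The `CapacityModel` class, its field names and every number in it are illustrative assumptions, not figures from any vendor or from this post, and thin provisioning is left out for simplicity.

```python
from dataclasses import dataclass


@dataclass
class CapacityModel:
    """Hypothetical per-device capacity model; every factor is an assumed input."""
    raw_tb: float                 # raw capacity per device, in TB
    format_efficiency: float      # fraction of raw capacity left after formatting
    protection_efficiency: float  # fraction left after RAID or erasure coding
    max_fill: float               # how full the device can run and still meet performance
    data_reduction: float         # compression + de-dupe ratio (1.0 means none)

    def effective_tb(self) -> float:
        """Effective capacity per device after overheads and data reduction."""
        usable = self.raw_tb * self.format_efficiency * self.protection_efficiency
        return usable * self.max_fill * self.data_reduction

    def cost_per_effective_tb(self, price_per_raw_tb: float) -> float:
        """Convert a raw $/TB price into $ per effective TB."""
        return price_per_raw_tb * self.raw_tb / self.effective_tb()


# Illustrative inputs only (assumptions, not measurements or specs).
hdd = CapacityModel(raw_tb=12, format_efficiency=0.95, protection_efficiency=0.80,
                    max_fill=0.60, data_reduction=1.0)
ssd = CapacityModel(raw_tb=30, format_efficiency=0.95, protection_efficiency=0.80,
                    max_fill=0.80, data_reduction=2.0)

print(f"HDD effective TB per device: {hdd.effective_tb():.2f}")
print(f"SSD effective TB per device: {ssd.effective_tb():.2f}")
```

Swapping in the factors that actually apply to your systems and data types is the whole point: the raw $/GB figure only becomes meaningful once it has been passed through something like `cost_per_effective_tb`.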

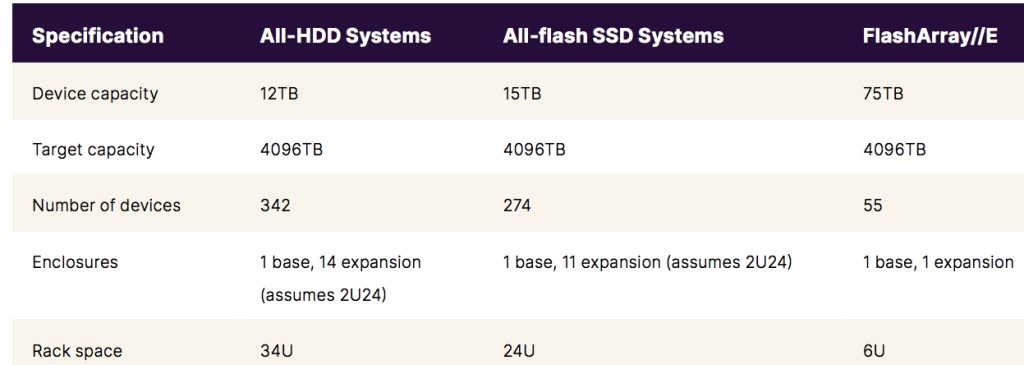

Pure Storage FlashArray//E has an 84% lower storage device count than a comparably configured all-HDD system and an 80% lower storage device count than a comparably configured all-flash array (AFA) built with commodity off-the-shelf SSDs.

Even given the imperative for a systems-level comparison, it’s ridiculous to compare energy consumption of HDDs and SSDs at the device level if you don’t normalize for performance. On average, an SSD offers 10x-20x the throughput of an HDD and more than 1,000x the IO/s on random writes. And to hit a given performance level an SSD will probably be about a 10th as busy as an HDD so it’s not appropriate to compare energy consumption under load from the technical specs for devices on an “apples to apples” basis. A workload that keeps an HDD 70% busy (and thus not operating at idle power levels) will likely have the SSD operating at 7% busy (which is pretty much an idle power level). Your workload may not need all the performance you get with all flash configurations, but it’s very likely you need more than you get with a single HDD (which again points to the need to do a system-level comparison).
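The normalization argument above can be sketched in a few lines of Python. The linear idle-to-active power model and all wattages below are placeholder assumptions chosen only to illustrate the mechanics; the 70% HDD utilization and the "about a 10th as busy" factor are the two values taken from the text.

```python
def power_at_utilization(idle_w: float, active_w: float, utilization: float) -> float:
    """Estimate power draw with a simple linear model between idle and active.

    The linear interpolation is an assumption; real devices may behave differently.
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return idle_w + utilization * (active_w - idle_w)


HDD_UTILIZATION = 0.70    # from the text: workload keeps the HDD 70% busy
SSD_BUSY_FRACTION = 0.10  # from the text: SSD is about a 10th as busy
ssd_utilization = HDD_UTILIZATION * SSD_BUSY_FRACTION  # roughly 7% busy

# Placeholder wattages (assumptions, not taken from any spec sheet).
hdd_watts = power_at_utilization(idle_w=5.0, active_w=9.0, utilization=HDD_UTILIZATION)
ssd_watts = power_at_utilization(idle_w=5.0, active_w=15.0, utilization=ssd_utilization)

print(f"HDD at {HDD_UTILIZATION:.0%} busy: {hdd_watts:.1f} W")
print(f"SSD at {ssd_utilization:.0%} busy: {ssd_watts:.1f} W")
```

With these made-up inputs the SSD has the higher spec-sheet active power yet draws less for the same workload, which is the reason a device-level comparison of "power under load" figures can mislead.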

I’ve also observed that many of the “HDD vs. SSD” comparisons pit the HDD vs. a high performance SSD. Given the performance requirements, it would be more appropriate to compare the HDD vs. much higher capacity but lower performance 15TB and 30TB SSDs which are more power efficient. So this would also affect any energy consumption comparisons to the advantage of the flash devices.

Identifying your combined performance and capacity requirements and configuring a system to meet those with both HDDs and SSDs brings out the key point that you need many more HDDs than SSDs. How many more? That will vary based on the capacity of the HDDs and SSDs you will configure into your system. For workloads with any level of performance-sensitivity, enterprises (but not necessarily hyperscalers) are not likely to buy very large capacity HDDs because of concerns about capacity that can’t be effectively used and disk rebuild times. Because of their much higher random read and write performance, SSDs tend to be able to use more of the capacity in a device before performance concerns kick in, and their much higher throughput also enables much faster rebuild times, so for most enterprises it’s more reasonable to use larger device sizes in AFA systems than in all-HDD systems.

I will note that enterprise storage systems vendors routinely recommend that their customers fill individual devices no more than 60-80% full due to performance concerns. As a result, extra raw capacity will need to be purchased in all system types (all-flash, hybrid or all-HDD), but the additional raw capacity needed will be larger in hybrid and especially all-HDD systems. So you need to know what size of device you will actually be configuring in your system before you can perform a meaningful comparison.

The actual effective capacity utilization at the device level is an extremely important variable in determining the number of devices of each type (SSD or HDD) that will be required. More devices drive higher manufacturing costs and CO2(1), higher shipping costs (note that an HDD weighs about 3x as much as an SSD and takes up more volume even though it may offer far less effective capacity), more supporting infrastructure (controllers, enclosures, fans, power supplies, switches, cables) in a system, higher energy and floor space consumption, higher maintenance costs and higher e-waste disposal costs. I think it’s reasonable to assume that for typical enterprise workloads, an AFA system will have no more than half as many devices as an all-HDD system configured to meet the same performance and effective capacity requirements. And that is not based on the SSDs necessarily being 2x as large as the HDDs – it’s based on a combination of the actual device capacities selected as well as other factors like the actual performance and capacity requirements, effective capacity utilization achievable at the device level and data reduction (if and where applicable).
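As a rough illustration of how device counts fall out of system-level requirements, the sketch below sizes a system by whichever constraint (capacity or performance) demands more devices. The function name, the targets and the per-device figures are all hypothetical assumptions, so the resulting ratio is an example and not a prediction.

```python
import math


def devices_needed(target_effective_tb: float, target_iops: float,
                   effective_tb_per_device: float, iops_per_device: float) -> int:
    """Return the device count required by the tighter of two constraints."""
    by_capacity = math.ceil(target_effective_tb / effective_tb_per_device)
    by_performance = math.ceil(target_iops / iops_per_device)
    return max(by_capacity, by_performance)


# Hypothetical system goals and per-device figures (assumptions only).
TARGET_TB = 1000
TARGET_IOPS = 50_000

hdd_count = devices_needed(TARGET_TB, TARGET_IOPS,
                           effective_tb_per_device=5.5, iops_per_device=200)
ssd_count = devices_needed(TARGET_TB, TARGET_IOPS,
                           effective_tb_per_device=18.0, iops_per_device=100_000)

print(f"HDDs needed: {hdd_count}, SSDs needed: {ssd_count}")
```

In this made-up case the HDD system is sized by performance and the SSD system by capacity, which shows why device capacity alone does not determine the ratio.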

If you run the “HDD vs. SSD” comparison assuming the 2:1 ratio of HDDs to SSDs, this doubles the impacts on manufacturing costs and CO2, shipping costs, supporting infrastructure, energy and floor space consumption, maintenance and e-waste. All manufacturing (whether it’s for HDDs or SSDs) is getting greener over time so we can expect that CO2 will in general be going down for both HDDs and SSDs, but the higher media density of flash will reduce the emissions per bit faster for SSDs than HDDs, reducing the CO2/TB for flash but maybe not the CO2 per device. All of this is swept under the rug using device-level comparisons.

The ratio of HDDs to SSDs in an enterprise system changes the landscape on both sustainability and costs in a pretty substantial way, but it’s still probably not enough to pose an existential threat to HDDs. Those who claim that SSDs will not replace HDDs for enterprise use by 2028 are looking at this comparison, and I must say that it’s one with which I agree. A 2:1 advantage won’t kill HDDs (but the cost and sustainability differences between SSDs and HDDs are far smaller than a device-level comparison would suggest).

But imagine if the actual ratio were 6:1 or, say, 10:1. Now we’re talking about an existential threat to HDDs. I only make this point because today, the vendor I work for (Pure Storage) has a 6:1 flash device capacity advantage over 12TB HDDs (we’re shipping a 75TB flash device today) and by 2026 will have a 10:1 advantage over 30TB HDDs (if the HDD vendors actually ship devices of that size as they claim they will and enterprises choose to use them). Pure will be shipping a single 300TB flash device (one of our DirectFlash Modules (DFMs), not a commodity off-the-shelf (COTS) SSD). And because we manage the flash media both directly and globally (at a system level) through our highly flash-optimized storage OS, we get 95%+ utilization of usable capacity and very fast rebuilds. Our assertion that HDDs will effectively be dead by 2028 is based on a comparison using our much denser flash device technology, not COTS SSDs.

Finally, let’s look at reliability and longevity. There’s a key long-term study that compares HDD vs. SSD reliability over the last decade or 2 published by Backblaze. This study is not based on forward-looking product specs – it’s based on failure rates in practice. What is evident from this study is that SSDs are more reliable than HDDs – the average annual failure rate (AFR) for HDDs in that study is 2.28% while the AFR for SSDs is 0.89% (2). [By the way, the AFR for Pure’s DFMs is 3x-5x lower than that of COTS SSDs.] There is also an inflection point towards worse reliability in HDDs as they get into years 4 and 5 of their life so they start to fail more often. HDD failure rates (and therefore support costs and data rebuild risks) increase with age much faster than with flash, and this is true even when the HDD vendor replaces a failed device within warranty at no charge. You can’t really apportion these costs with a device-level comparison so they also get conveniently left out.
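To see what those failure rates mean at the system level, the following sketch multiplies the AFRs quoted above by fleet size. The fleet sizes are hypothetical and simply reuse the 2:1 HDD-to-SSD assumption from earlier in this post; the quarterly AFRs themselves are volatile, as footnote 2 notes.

```python
def expected_annual_failures(device_count: int, afr: float) -> float:
    """Expected number of device failures per year for a fleet at a given AFR."""
    return device_count * afr


HDD_AFR = 0.0228  # 2.28%, the HDD figure quoted in the text
SSD_AFR = 0.0089  # 0.89%, the SSD figure quoted in the text

# Hypothetical fleet sizes reflecting an assumed 2:1 HDD-to-SSD device ratio.
hdd_fleet = 1000
ssd_fleet = 500

hdd_failures = expected_annual_failures(hdd_fleet, HDD_AFR)
ssd_failures = expected_annual_failures(ssd_fleet, SSD_AFR)

print(f"Expected HDD failures per year: {hdd_failures:.2f}")
print(f"Expected SSD failures per year: {ssd_failures:.2f}")
```

The device count and the per-device failure rate compound, so the gap in replacements and rebuilds at the system level is wider than the AFR gap alone.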

HDDs will last you 5 years, at which point you’ll need to “forklift” upgrade your system buying all new devices along with all that extra supporting infrastructure. SSDs can have up to a 10-year life so you have to replace them half as often. So the shorter life cycle incurs the costs and impacts of a forklift-driven technology refresh as well as imposing more e-waste (based on whatever multiplier you get by comparing device-level HDD and SSD effective capacities) twice as often. So again, advantage to flash-based systems.
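The life cycle effect can be sketched the same way. The 5-year and 10-year service lives come from the paragraph above; the 10-year planning horizon and the fleet sizes (again an assumed 2:1 device ratio) are hypothetical.

```python
import math


def purchases_over_horizon(horizon_years: int, service_life_years: int) -> int:
    """Number of full fleet purchases (initial buy plus refreshes) in the horizon."""
    return math.ceil(horizon_years / service_life_years)


def devices_bought(fleet_size: int, horizon_years: int, service_life_years: int) -> int:
    """Total devices bought, and eventually disposed of, over the horizon."""
    return fleet_size * purchases_over_horizon(horizon_years, service_life_years)


HORIZON_YEARS = 10  # hypothetical planning horizon

# Hypothetical fleet sizes with a 2:1 HDD-to-SSD ratio; service lives from the text.
hdd_total = devices_bought(fleet_size=1000, horizon_years=HORIZON_YEARS, service_life_years=5)
ssd_total = devices_bought(fleet_size=500, horizon_years=HORIZON_YEARS, service_life_years=10)

print(f"HDDs bought over {HORIZON_YEARS} years: {hdd_total}")
print(f"SSDs bought over {HORIZON_YEARS} years: {ssd_total}")
```

Under these assumptions the device ratio and the refresh frequency multiply, so a 2:1 device ratio becomes a 4:1 ratio in devices manufactured, shipped and disposed of over the decade.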

Bottom line, if we’re going to engage in a real-world comparison of the cost and sustainability of spinning disk vs. flash, we need to do it at a system, not a device level, and take embodied carbon manufacturing impacts, device weights, infrastructure density (performance and capacity), reliability and life cycle into account.

1 The carbon dioxide equivalent (CO2) is used to measure and compare emissions from greenhouse gases based on how severely they contribute to global warming. Metrics for CO2 would show how much a particular gas would contribute to global warming if it were carbon dioxide.

2 https://www.backblaze.com/blog/backblaze-drive-stats-for-q2-2023/; note that the quarterly AFRs are somewhat volatile – although the 2.28% number is the latest quarterly AFR, in the prior quarter the math worked out to a 1.54% AFR for HDDs in the study. The SSD AFR also comes from a Backblaze study although the sample size is far smaller than the HDD study.

For more information and detailed study, please refer to this white paper available here.

Comments

This new debate reminds us of what we saw in the past, when people compared tape and HDD as HDD came to be considered for secondary storage for backup many years ago. Approximately 20 years ago, Data Domain introduced the model, with several others jumping into the segment. I mention this because at that time the parties compared different things: tape advocates considered tape as an independent medium and compared it with a single HDD, while the de-dupe vendors insisted on comparing entire systems of similar capacity, things like 500TB, 1PB or more depending on what was being considered at that time. Volumes at that time were obviously smaller.

Here it is the same thing, with tape replaced by HDD and HDD replaced by SSD or flash-based entities. You immediately understand the shift the industry has made. A long time ago, we had HDD for primary and tape for secondary storage. HDD pushed tape to the archiving world when HDD appeared in the backup landscape. Now SSD/flash is pushing the HDD to secondary storage usage, and we have even seen some models without any HDD at all, relying on SSD/flash and tape, with potentially cloud somewhere in the equation.

Speaking of capacity roadmaps, SSDs today reach 61.44TB with the latest Solidigm SSD, and Pure supports a 48TB DFM, soon 75TB, and is preparing 300TB. The largest HDD capacity today is 22TB and the roadmap shows small capacity gains in the future.

Of course, there is the dimension of sustainability and green impact. We consider essentially 3 key parameters here: erasure coding for better data protection at scale without multiplying copies, data reduction to reduce consumed space and, finally, energy with advanced power models.

At the end of the day, with budget pressure and consolidation goals, end-users are invited to ask themselves why they need to keep HDDs when they can do the same things and even much more with SSDs.
