Balance Storage Performance, Resilience, and Efficiency

To drive digital business outcomes

This is a Press Release edited by StorageNewsletter.com on May 30, 2023 at 2:02 pm. This report, published in May 2023, was written by Dave Pearson, research VP, infrastructure systems, platforms, and technologies group, IDC Corp., and sponsored by Nyriad Inc.

Balance Storage Performance, Resilience, and Efficiency to Drive Digital Business Outcomes

Modern storage infrastructure needs to provide performance, scalability, reliability, and efficiency to maximize data value and minimize time to insights for organizations in the digital business era.

At a glance, what’s important?

Data protection requirements, as well as the capacity and performance demands of modern workloads, have pushed storage buyers to consider more modern architectures to support business objectives in the digital business era.

Evaluating storage platform capabilities is critical to providing applications with the infrastructure required to derive deep insights in a timely manner. Hardware acceleration, scalability, software capabilities, increased availability and resilience, and efficiency are all key criteria for vendor selection.

Introduction

Digital transformation (DX) has given way to the advent of digital business. Businesses have increasingly embraced digital tools, platforms, and strategies to optimize their operations, improve customer experiences, and drive growth. IDC research has shown that despite uncertain economic conditions, organizations intend to increase spending on datacenter technologies, including storage systems, converged and hyperconverged infrastructure (HCI), and storage software. Workloads continue to proliferate as new applications are developed and deployed at a staggering rate. Additionally, workloads such as high-performance computing (HPC) and AI are entering mainstream enterprise environments and are increasingly demanding in terms of both performance and capacity. IDC’s Global DataSphere tells us that enterprises generated nearly 60% of the over 100ZB of data created last year – a figure that will grow to over 260ZB by 2027. Unstructured data – such as data in active archives, ML environments, and media repositories – will account for 80% of that growth. This unstructured data comprises massive volumes of file and object data, and deriving maximum value from it requires greater scalability and efficiency.

As business processes become more digital and applications drive much of an organization’s competitive advantage, the value of data increases commensurately. Downtime is unacceptable; customers, employees, and partners depend on the availability of an organization’s data and the resilience of an organization’s infrastructure. Protecting that data from internal and external threats, user error, equipment failure, and other unplanned disruptions is critical to ensure the digital resiliency of an organization and a crucial factor in driving business outcomes from digital assets.

Definitions

- GPU-accelerated storage: Systems that offload computationally intensive tasks such as erasure coding, encryption, and other data processing from traditional CPUs and ASICs to GPUs in order to take advantage of the capabilities of massively multicore processing

- Erasure coding: A parity-based data protection technique in which data is broken into encoded fragments that can be distributed anywhere within an organization’s storage environment, providing robust data protection with rapid recovery but also with increased processing costs
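To make the erasure coding definition concrete, the following is a minimal sketch of its simplest form: a single XOR parity fragment protecting k data fragments. It is an illustration only, not the scheme used by any product discussed in this report; production systems typically use codes with multiple parity fragments (for example, Reed-Solomon). The function names `encode`, `reconstruct`, and `decode` are hypothetical.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length fragments."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int) -> List[bytes]:
    """Split data into k zero-padded fragments and append one XOR parity fragment."""
    frag_len = -(-len(data) // k)  # ceiling division
    padded = data.ljust(frag_len * k, b"\0")
    fragments = [padded[i * frag_len:(i + 1) * frag_len] for i in range(k)]
    parity = reduce(xor_bytes, fragments)
    return fragments + [parity]


def reconstruct(fragments: List[Optional[bytes]]) -> List[bytes]:
    """Rebuild at most one missing fragment (marked None) from the survivors."""
    missing = [i for i, frag in enumerate(fragments) if frag is None]
    if len(missing) > 1:
        raise ValueError("single parity can recover only one lost fragment")
    repaired = list(fragments)
    if missing:
        survivors = [frag for frag in fragments if frag is not None]
        # XOR of all surviving fragments equals the lost fragment.
        repaired[missing[0]] = reduce(xor_bytes, survivors)
    return repaired


def decode(fragments: List[bytes], original_len: int) -> bytes:
    """Concatenate the data fragments (all but the parity) and strip padding."""
    return b"".join(fragments[:-1])[:original_len]


if __name__ == "__main__":
    payload = b"storage resilience"
    stored = encode(payload, k=4)
    damaged = list(stored)
    damaged[2] = None  # simulate one failed drive
    print(decode(reconstruct(damaged), len(payload)))
```

The parity computation is the "increased processing cost" mentioned in the definition: every write must recompute parity, and every rebuild must read and combine the surviving fragments.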

GPU-accelerated architectures

Storage performance is consistently a top criterion for vendor selection in IDC buyer perception studies. Storage buyers are seeking even incremental performance gains to keep up with greater demands from current-gen and next-gen workloads in enterprise datacenters, increased consolidation, and greater application density and capacity requirements for storage systems. For certain computationally intensive tasks, GPUs offer performance that is an order of magnitude better than that of CPUs and at prices equivalent to those of current CPUs.

GPU-accelerated storage architectures can improve erasure coding performance, especially in petabyte-scale and block storage environments, where computational overhead makes erasure coding less performant and less attractive to buyers. GPU-accelerated storage can provide the capabilities required for the mixed block, file, and object environments of the next generation of enterprise workloads, including denser consolidation, the use of large capacity low-cost HDDs, lower power and cooling requirements, and higher levels of resiliency, availability, and data protection, while ameliorating some of the cost, complexity, and computing requirements of CPU-only erasure coding implementations.

Scalability

The explosion of data growth, the proliferation of new applications and new sources of data in the enterprise, and the need to control datacenter complexity, costs, and management overhead have made scalability a critical criterion for storage system selection among buyers. Both scale-up and scale-out capabilities are sought after for a variety of reasons.

Increasing performance through scale-up capabilities can help organizations increase consolidation, as well as efficiency and utilization, while reducing complexity and administration overhead in the datacenter. Scale-out systems can increase capacity, availability, and flexibility while providing cost-effective performance.

The key to effective scaling, however, is to avoid disruptions in operation. Adding capacity or replacing existing media with newer or higher-density drives or modules, as well as acquiring new capabilities through next-generation hardware, software, or OS patching or upgrades, must be managed non-disruptively. Scheduled downtime is still downtime, and operational efficiency depends on minimizing every instance of it.

Consolidation and simplicity

The number of new applications entering the enterprise and the performance attributes associated with each of them can lead organizations to seek best-of-breed solutions on a “per app” basis. This can lead to silos of data, communication, and innovation. Modern storage architectures are able to address more of the spectrum of workload requirements, allowing companies to provide denser consolidation of these applications on suitable storage platforms.

This has multiple positive effects: fewer technical and operational silos in the datacenter; easier management and reduced overhead for storage administrators; reduced complexity impacting security, compliance, and governance teams; and a smaller infrastructure footprint, which can improve efficiency and utilization while lowering space requirements and power and cooling costs. Care must be taken to avoid impacts on applications, from “noisy neighbor” problems to attribute mismatch (e.g., a policy required by one workload that, for lack of granular management, is also applied to other workloads on the platform and negatively affects their performance or capacity demands).

Rearchitecting for resiliency

As the events of the past few years drove home lessons in how digital businesses can thrive during economic and geopolitical upheaval and uncertainty, organizations came to realize that digital resilience was at least as important as innovation during this period. According to IDC surveys, preventing disruptions, as well as recovering quickly and gracefully from them when they do occur, has become a top business priority.

Resiliency needs to be a priority throughout the entire technology stack, and efficient, GPU-driven erasure coding can provide increased data integrity, availability, and resilience through a greater resistance to drive failures than older technologies. Maximizing the number of drives that can safely fail before data loss while minimizing the performance hit due to drive failure is a key benefit. Erasure coding improves data resiliency by creating redundancy that can withstand multiple disk failures or data corruption. This makes it ideal for large-scale storage systems, where the risk of data loss due to hardware failures is high.
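The trade-off between drives that can safely fail and usable capacity can be sketched with simple arithmetic. The example below assumes an idealized k+m layout in which any m fragment losses are survivable (true of maximum distance separable codes such as Reed-Solomon); the three schemes and their parameters are hypothetical illustrations, not figures from the IDC report or from any vendor.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ProtectionScheme:
    name: str
    data_fragments: int    # k: fragments holding user data
    parity_fragments: int  # m: redundant fragments (parity or extra copies)

    @property
    def tolerated_failures(self) -> int:
        """Fragments (drives) that can be lost before data loss, assuming an MDS code."""
        return self.parity_fragments

    @property
    def usable_fraction(self) -> float:
        """Share of raw capacity available for user data."""
        return self.data_fragments / (self.data_fragments + self.parity_fragments)


# Hypothetical layouts chosen only for comparison.
SCHEMES = [
    ProtectionScheme("3-way replication", 1, 2),
    ProtectionScheme("8+2 dual parity", 8, 2),
    ProtectionScheme("16+4 erasure coding", 16, 4),
]


def summarize(schemes: List[ProtectionScheme]) -> List[str]:
    """One line per scheme: failures survived and usable share of raw capacity."""
    return [
        f"{s.name}: survives {s.tolerated_failures} failures, "
        f"{s.usable_fraction:.0%} usable"
        for s in schemes
    ]


if __name__ == "__main__":
    for line in summarize(SCHEMES):
        print(line)
```

Under these assumptions, the 8+2 and 16+4 layouts expose the same share of raw capacity, but the wider layout survives twice as many simultaneous failures. The cost is more parity computation per write and rebuild, which is the overhead GPU acceleration is intended to absorb.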

Erasure coding can also be more cost effective and can provide faster data reconstruction times than traditional data protection methods. This is because only a subset of the surviving data and parity fragments needs to be read to reconstruct the lost or corrupted data. Operational resilience is improved at the same time, as the urgency of drive replacements is reduced, even in the event of multiple drive failures.

Pushing storage efficiency for ROI, ESG, and innovation

Limited IT budgets and economic uncertainty combined with high data growth rates have already made improving storage efficiency a key consideration as IT infrastructures evolve. Doing more with less is the mantra of nearly every IT shop, but there are even wider considerations today. Environmental concerns – especially with regard to the cost and availability of power, but extending through the entire life cycle of hardware deployments – are growing among organizations in many geographies.

Consolidating workloads, implementing highly efficient infrastructure to improve utilization rates, and using the latest large capacity storage devices, whether spinning disk or flash based, are all ways to lower the footprint of storage infrastructure in the datacenter as well as reduce power and cooling requirements. While these initiatives are tied to positive environmental, social, and governance (ESG) outcomes, they also increase operational efficiency by removing silos and reducing complexity in storage infrastructure. The increased efficiency compounds the return on these initiatives by reducing the skills and staff required, lowering administrative overhead, and allowing administrators and resources to return to value-added innovative activities for the business.

Considerations for storage buyers

The proliferation of storage vendors, technologies, and deployment methodologies is a double-edged sword for buyers today. Options exist for every workload, but choosing the appropriate technology during the planning process is key: upgrades and migrations can be complex and costly, and locking into the wrong deployment modality can be an expensive mistake for enterprises.

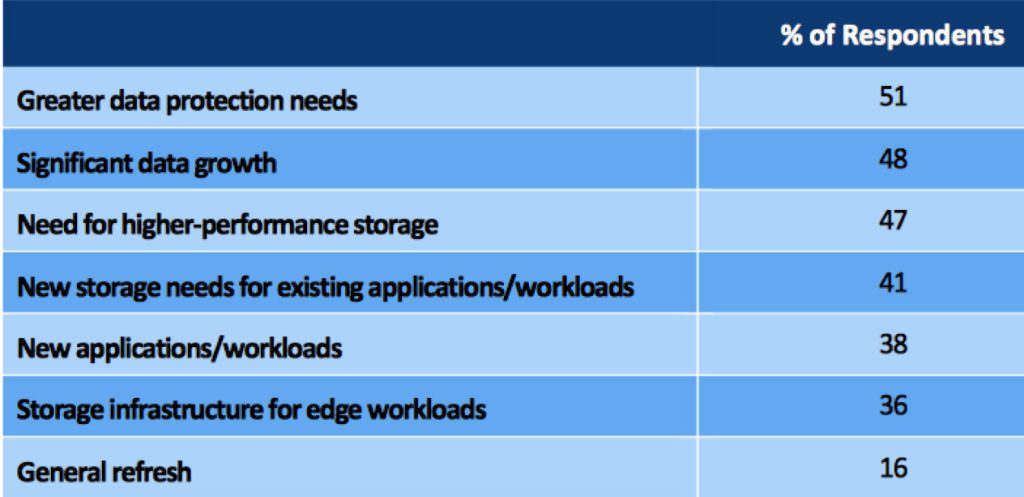

Examining total cost of ownership (TCO) for a new storage solution, from acquisition costs to operating costs to the availability of skills and resources, is key for buyers. Matching application requirements while removing complexity from the storage environment can pay dividends, as operational requirements, resourcing, and the migration of workloads to appropriate infrastructure are all risks to TCO for enterprises. Data protection, data growth, and higher-performance storage are the top budget drivers for this year and next (see Table 1).

Table 1: Top Budget Drivers for New Storage

n=600

Source: IDC’s 4Q22 Infrastructure (Storage) Worldwide Survey

Conclusion

Better decision making in the digital business era requires better access to data, the tools and applications required to extract insight from the data, and the infrastructure to enable those applications and speed time to insight. We continue to see the demand for storage grow along the workload spectrum, from the highest performance, lowest latency, and greatest throughput to the massive scale of unstructured file and object data. Satisfying the workload requirements and storage demands of the modern digital enterprise requires similarly modern infrastructure planning, procurement, and operations.
