Secure Sustainable Storage: A Global Imperative

This is a press release edited by StorageNewsletter.com on May 8, 2023 at 2:02 pm. This blog, published on May 2, 2023, was written by Ken Clipperton, lead analyst, DCIG LLC, and commissioned by Swiss Vault Global.

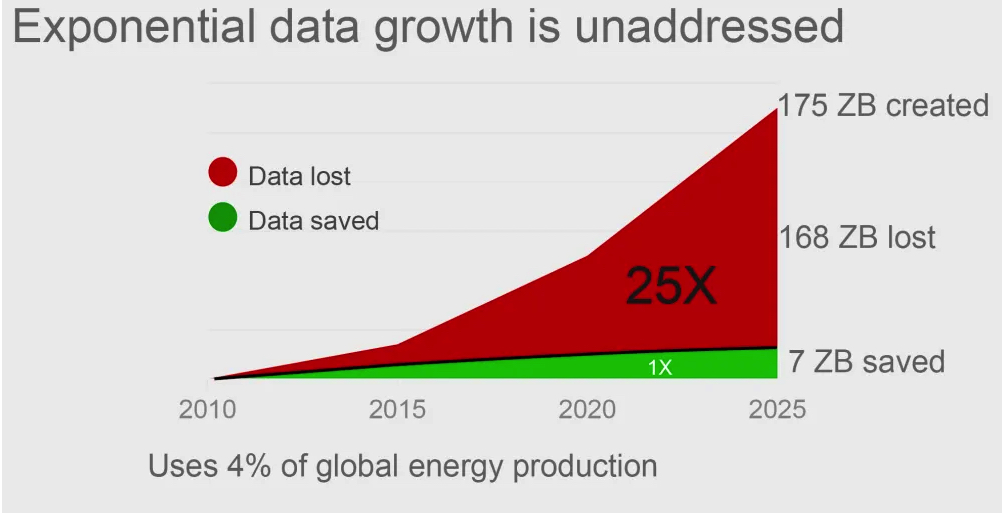

Storage consumes an estimated 4% of the global electrical energy supply, and the demand for storage will accelerate for the foreseeable future. We need innovations in storage software and hardware to enable economical, resilient, and environmentally sustainable data management.

Need for sustainable storage

Everybody has lost valuable data for one reason or another, and most of it can never be recreated, in whole or in part. In NASA’s case, valuable technical design data stored on 7-track magnetic tape was lost because no functioning tape drives were available to read it. The same will soon be true of data stored on floppy disks. (When was the last time you saw an 8-inch floppy disk reader?)

Another major storage problem is power demand. Data centers consume a large amount of electricity, and storage typically accounts for a significant portion of it. Overall, storage consumes an estimated 4% of the global electrical energy supply, and the demand for storage will accelerate for the foreseeable future with the coming data tsunami. Sustainability therefore matters when it comes to enterprise storage.

Data tsunami quantified

High-performance computing (HPC) requires vast data capacity and velocity. That data holds value for organizations and society.

One person I spoke with at the SC22 supercomputing conference manages technology for a commercial genomics company. The company processes 2PB of genomics data daily but keeps “just” 100TB of it. It would like to save more of the raw data for future research but has not found a practical way to do so.

Source: Swiss Vault, derived from Seagate/IDC Data Age 2025

Such a cost-effective solution would need to use commonly available, non-proprietary hardware and provide a seamless transition to new storage capacities and media types as technology evolves.

Raphael Griman, compute team lead for the European Molecular Biology Laboratory’s European Bioinformatics Institute (EMBL-EBI), said his organization currently stores more than 250PB of public science data and expects to cross the exabyte boundary within a year. That implies EMBL-EBI adds an average of roughly 2PB to its environment each day.
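The growth figures quoted in this section can be sanity-checked with a few lines of arithmetic. This sketch assumes decimal units (1PB = 1,000TB, 1EB = 1,000PB), a 365-day year, and that the genomics company's 100TB is retained per day out of its 2PB daily ingest; the function names are illustrative only.

```python
# Back-of-the-envelope check of the data-growth figures quoted above.
# Assumptions: decimal units (1 PB = 1,000 TB, 1 EB = 1,000 PB), 365-day year.
TB_PER_PB = 1_000
PB_PER_EB = 1_000
DAYS_PER_YEAR = 365


def retained_fraction(ingested_pb_per_day: float, kept_tb_per_day: float) -> float:
    """Fraction of the daily ingest that is kept."""
    return kept_tb_per_day / (ingested_pb_per_day * TB_PER_PB)


def avg_daily_growth_pb(current_pb: float, target_pb: float, days: int) -> float:
    """Average PB that must be added per day to grow from current_pb to target_pb."""
    return (target_pb - current_pb) / days


# Genomics company: ingests 2 PB/day, keeps 100 TB/day (assumed daily).
kept = retained_fraction(2, 100)
# EMBL-EBI: from about 250 PB to 1 EB within a year.
growth = avg_daily_growth_pb(250, 1 * PB_PER_EB, DAYS_PER_YEAR)

print(f"Genomics company keeps {kept:.0%} of its daily ingest")
print(f"EMBL-EBI must add about {growth:.2f} PB per day")
```

Because EMBL-EBI already stores *more* than 250PB, the roughly 2PB/day figure is an upper-end estimate of the average growth needed.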

Sustainability – Reducing data’s carbon footprint

Bhupinder Bhullar, Swiss Vault’s co-founder and CEO, also worked in genomics. He recognized the need to preserve and secure this sensitive data for a lifetime.

He concluded: “Every CIO should have a strategy for 100-year data retention.”

The co-founders started Swiss Vault to solve the problem of economically and reliably archiving huge volumes of data for decades. The firm’s separate software and hardware innovations enable economical, resilient, and environmentally sustainable data management.

Bhullar, Swiss Vault’s CEO, said: “Our big thing is flexibility.”

The company’s focus on sustainability led it to prioritize flexibility: the flexibility to fully utilize existing infrastructure, to incorporate diverse storage resources into a single namespace with no limits on filesystem size, and to adapt to the customer’s needs instead of expecting the customer to adapt to the technology provider’s restrictions.

Flexibility to double or triple the life of existing infrastructure

The vast majority of data center infrastructure enters the waste stream within a few short years. Therefore, extending the life of existing infrastructure is one way of reducing both Capex and data’s carbon footprint. Swiss Vault’s Vault File System (VFS) software can delay a data center refresh from the typical 3 to 4 years to double or perhaps triple that time.

Douglas Fortune, Swiss Vault’s CTO and co-founder, stated: “By making flexibility a core value in the design of the Vault File System, Swiss Vault is maximizing the useful hardware lifespan and minimizing the waste of electronic components.”
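The waste argument for longer hardware life can be made concrete with a simple steady-state model. Assuming, for illustration only, a fleet in which every component is retired exactly at the end of a fixed service life (ignoring failures, capacity growth, and energy use), about 1/life of the fleet is replaced each year. The function name is hypothetical.

```python
# Simplified steady-state model of hardware turnover.
# Assumption: each component is retired exactly at the end of a fixed
# service life, so roughly 1/life of the fleet is replaced every year.
def annual_replacement_fraction(service_life_years: float) -> float:
    """Share of the fleet that enters the waste stream each year."""
    if service_life_years <= 0:
        raise ValueError("service life must be positive")
    return 1.0 / service_life_years


# Lifetimes from the text: the typical 3-4 year refresh, and that span
# doubled (6-8 years) or tripled (9-12 years).
for life in (3, 4, 6, 8, 9, 12):
    share = annual_replacement_fraction(life)
    print(f"{life:>2}-year life -> {share:.1%} of fleet replaced per year")
```

Under these assumptions, doubling a 4-year service life to 8 years halves the share of hardware replaced each year, from 25% to 12.5%.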

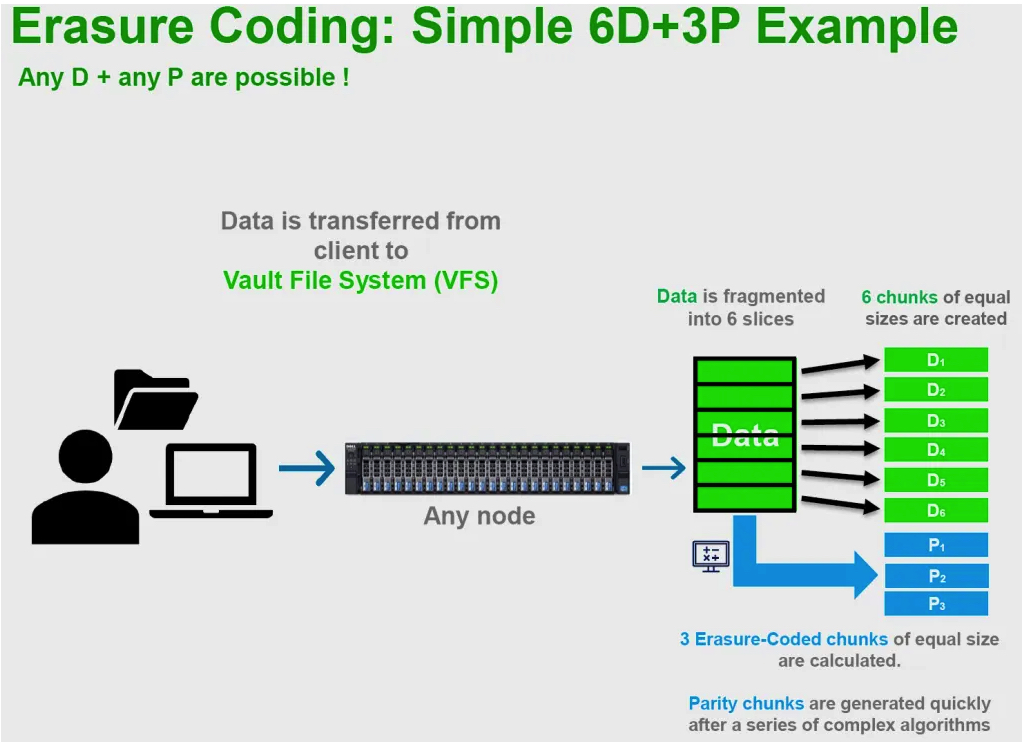

Erasure coding flexibility for sustainable storage

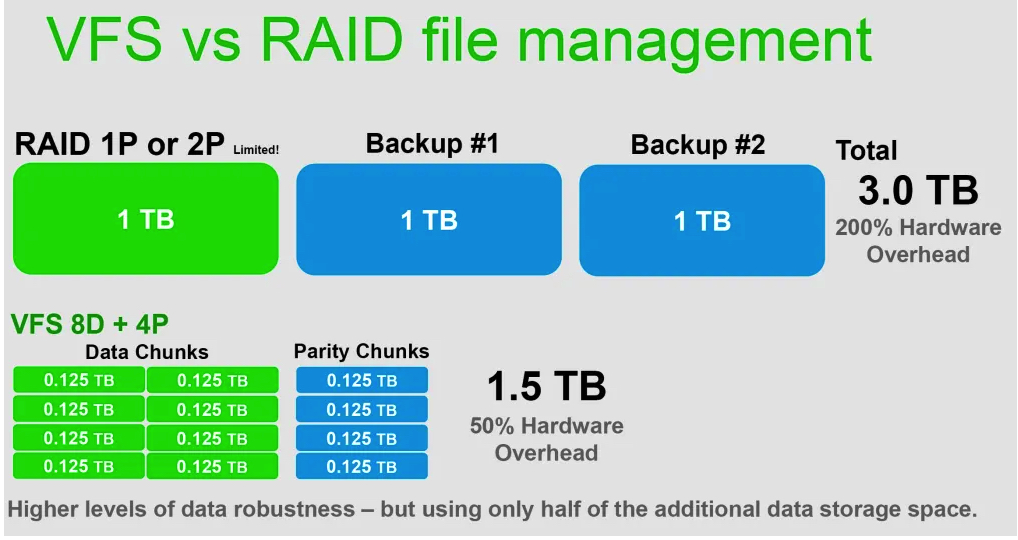

Erasure coding enables higher resilience and more efficient capacity utilization than traditional RAID schemes. VFS also distributes data across multiple servers, so the system can tolerate a limited number of node failures. The Vault File System’s erasure coding differs in that it is easy to implement and much more flexible than other solutions.
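To see how a lost chunk is rebuilt, here is a minimal sketch of the simplest erasure code: D data chunks plus a single XOR parity chunk (a D+1 layout, the same idea as RAID 5). Tolerating more than one loss (P > 1) requires a stronger code such as Reed-Solomon. This sketch is illustrative only, is not Swiss Vault’s implementation, and its function names are hypothetical.

```python
from __future__ import annotations

from functools import reduce
from typing import Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length chunks."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, d: int) -> list[bytes]:
    """Split data into d equal data chunks (zero-padded) plus one XOR parity chunk."""
    chunk_len = -(-len(data) // d)  # ceiling division
    padded = data.ljust(chunk_len * d, b"\x00")
    chunks = [padded[i * chunk_len:(i + 1) * chunk_len] for i in range(d)]
    parity = reduce(xor_bytes, chunks)
    return chunks + [parity]


def rebuild(chunks: list[Optional[bytes]]) -> list[bytes]:
    """Recover at most one missing chunk (data or parity) by XOR-ing the survivors."""
    missing = [i for i, c in enumerate(chunks) if c is None]
    if len(missing) > 1:
        raise ValueError("single parity tolerates only one lost chunk")
    repaired = list(chunks)
    if missing:
        survivors = [c for c in chunks if c is not None]
        repaired[missing[0]] = reduce(xor_bytes, survivors)
    return repaired


def decode(chunks: list[bytes], original_len: int) -> bytes:
    """Concatenate the data chunks (parity is last) and strip the padding."""
    return b"".join(chunks[:-1])[:original_len]


message = b"erasure coding demo"
stored = encode(message, d=4)  # 4 data chunks + 1 parity chunk
stored[2] = None               # simulate losing the drive holding chunk 2
print(decode(rebuild(stored), len(message)) == message)  # True
```

XOR works here because any chunk equals the XOR of all the others; codes with P > 1 generalize this so that any D of the D+P chunks suffice.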

Because Swiss Vault’s erasure coding can be set to any level of resilience, an organization can run existing HDDs to failure without risking downtime. It can also replace older, low-capacity drives with more energy-efficient, high-capacity drives on whatever schedule best balances capacity and energy efficiency for the organization.

Customers can configure and re-configure erasure coding using any combination of data plus parity (D+P) chunks. Most competitors only allow customers to choose from a few vendor-defined options. With Swiss Vault, customers at any time can assign a different D+P per directory, file, file type, or file class to match the customer’s current requirements. This flexibility applies across the life of the hardware infrastructure, regardless of media size, speed, or vintage.
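The capacity-versus-resilience trade-off behind a D+P choice is simple arithmetic. This sketch assumes a maximum-distance-separable (MDS) code such as Reed-Solomon, in which any D of the D+P chunks are enough to reconstruct a stripe, and that each chunk of a stripe sits on a different drive. The `Layout` class and the example layouts are hypothetical and are not Swiss Vault’s configuration interface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layout:
    data: int    # D: data chunks per stripe
    parity: int  # P: parity chunks per stripe

    @property
    def width(self) -> int:
        return self.data + self.parity

    @property
    def efficiency(self) -> float:
        """Usable fraction of raw capacity: D / (D + P)."""
        return self.data / self.width

    @property
    def failures_tolerated(self) -> int:
        """Assuming an MDS code, any P chunks of a stripe may be lost."""
        return self.parity

    def raw_needed(self, usable_tb: float) -> float:
        """Raw capacity required to store usable_tb of data."""
        return usable_tb * self.width / self.data


# 3-way replication behaves like a 1+2 layout.
for name, layout in [("3-way replication", Layout(1, 2)),
                     ("4+2", Layout(4, 2)),
                     ("8+2", Layout(8, 2)),
                     ("16+4", Layout(16, 4))]:
    print(f"{name:>17}: efficiency {layout.efficiency:.0%}, "
          f"tolerates {layout.failures_tolerated} lost chunks, "
          f"{layout.raw_needed(100):.0f} TB raw per 100 TB usable")
```

Under these assumptions, 8+2 and 16+4 both leave 80% of raw capacity usable, but 16+4 survives four simultaneous chunk losses per stripe at the cost of wider stripes, so more drives participate in each read and rebuild. Three-way replication also tolerates two losses but leaves only a third of raw capacity usable.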

Hardware flexibility: VFS vs. RAID

Swiss Vault’s customers can reuse existing standard networks, servers, and storage to extend the life of that infrastructure, while also taking advantage of recent hardware advances. By connecting existing and new systems in a single namespace, organizations can scale their storage.

Source: Swiss Vault

- Networking. VFS clusters can run on basic networking but use RDMA (RoCE or InfiniBand) for inter-server communication when it is available. VFS does not require expensive SmartNICs or GPUs for computational acceleration. The VFS client can also be installed on Linux workstations and instruments for parallel reads and writes (a great use case is research-intensive data capture from particle physics experiments and genomics).

- Servers. VFS is compiled for x86 and ARM, runs on most modern hardware (even Raspberry Pi+), and does not require GPUs or other hardware accelerators. VFS is developed and tested on Linux Mint and Ubuntu and should run on any Linux or Unix system.

- Storage. VFS can integrate existing JBOD-formatted storage and newer, higher-density storage into a single storage pool with unlimited filesystem size. Customers can put a VFS directory on an existing XFS or ext4 disk to coexist with other JBOD data; the VFS directory grows dynamically as data is migrated into VFS. VFS media can be moved from server to server and slot to slot without administrator reconfiguration. VFS automatically recognizes moved disks and the data they contain, even when disks move across CPU architectures or geographic boundaries.

- Incremental scaling. Some storage solutions require adding capacity in multi-disk packs or complete nodes. VFS enables incremental expansion by as little as a single drive of any capacity. This reduces waste by letting the organization acquire capacity as it is needed, instead of following the traditional practice of buying several years of capacity up front and then powering it for months or years before it is used.

- Take advantage of hardware innovations. In addition to extending the life of existing infrastructure, Swiss Vault customers can take advantage of the company’s low-power hardware innovations and the latest networking gear to achieve an optimal balance of capacity, cost, and sustainability based on their priorities.

- Tailored to big data workloads. Swiss Vault’s first customers include organizations in genomics, particle physics, remote sensing, and other scientific big data environments. These customers benefit from the firm’s specialized, file-type-specific data compression, which is often more efficient than general-purpose compression.
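As a textbook illustration of why format-aware compression can beat general-purpose compression, DNA sequences drawn from the four-letter alphabet A, C, G, T need only 2 bits per base instead of the 8 bits of ASCII text. This sketch assumes the input contains only those four uppercase letters (real FASTA/FASTQ files also carry N bases, headers, and quality scores) and is not Swiss Vault’s compression method; the function names are hypothetical.

```python
# Illustrative 2-bit packing of DNA bases (four bases per byte).
# Assumption: the sequence contains only uppercase A, C, G, T.
CODE = {"A": 0b00, "C": 0b01, "G": 0b10, "T": 0b11}
BASES = "ACGT"  # index matches the 2-bit code above


def pack(seq: str) -> bytes:
    """Pack four bases per byte, first base in the high-order bits."""
    out = bytearray()
    for i in range(0, len(seq), 4):
        group = seq[i:i + 4]
        byte = 0
        for base in group:
            byte = (byte << 2) | CODE[base]
        byte <<= 2 * (4 - len(group))  # left-align a short final group
        out.append(byte)
    return bytes(out)


def unpack(packed: bytes, length: int) -> str:
    """Inverse of pack(); length drops the padding in the last byte."""
    bases = []
    for byte in packed:
        for shift in (6, 4, 2, 0):
            bases.append(BASES[(byte >> shift) & 0b11])
    return "".join(bases[:length])


seq = "GATTACACGT"
packed = pack(seq)
assert unpack(packed, len(seq)) == seq
print(f"{len(seq)} bytes as ASCII -> {len(packed)} bytes packed")
```

This gives a fixed 4x reduction over one byte per base without any statistical modelling, because it exploits knowledge of the data format that a generic compressor must discover on its own.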

Swiss Vault’s power-efficient hardware innovations

Although this report focuses on the Vault File System (VFS), the company is also developing lower-carbon storage in the form of low-power storage systems. These use low-wattage AMD EPYC, ARM, and RISC-V architectures, among other mechanisms, to enable a 10x improvement in storage density and in power efficiency measured in watts per petabyte.

Swiss Vault best fit use cases

As noted above, Swiss Vault’s first customers are research- and science-oriented organizations generating big data. Organizations with similar workloads may therefore be especially interested in joining these early adopters.

Swiss Vault may be a good fit for you if one or more of the following applies to your organization:

- You have sub-petabyte or multi-petabyte storage requirements in support of scientific big data.

- You need to enhance the resilience of your big data environment.

- You are approaching a data center refresh cycle.

- You are implementing an active archive or are rapidly outgrowing your archive storage.

- You are considering a replacement for your tape-based data archive.

DCIG guidance

As with many innovators, Swiss Vault’s founders’ vision put them in touch with emerging requirements earlier than most. They recognized the need to store and securely access large amounts of sensitive data for a lifetime, and they embarked on a journey to meet that need.

Swiss Vault emerged from stealth in 2022. It has secured multiple start-up awards, including an Energy Globe Gold Award from the Energy Globe Foundation (Austria) for innovation in sustainable technologies. The Vault File System already delivers the core features needed for a longer-lasting storage infrastructure, and the company has a software and hardware roadmap to realize and expand on that vision.

The firm has now reached the commercialization stage and is offering incentives (such as industry-specific optimizations and customizations) to early adopters. The best candidates are organizations with IT staff who 1) understand their organization’s data requirements, 2) can communicate effectively with product developers, and 3) are willing to implement software updates.
