GigaOm Radar for Distributed Cloud File Storage

Leaders Nasuni, CTERA, Panzura and Outperformer Hammerspace

This is a Press Release edited by StorageNewsletter.com on December 6, 2022 at 2:02 pm. This report was published on November 16, 2022, and written by 2 GigaOm analysts:

Max Mortillaro, data, analytics, and AI, and

Arjan Timmerman, data, analytics, and AI.

GigaOm Radar for Distributed Cloud File Storage v3.0

An Assessment Based on the Key Criteria Report for Evaluating File-Based Cloud Storage Solutions

1. Summary

File storage is a critical component of every hybrid cloud strategy, especially for collaboration use cases, and distributed cloud file storage that supports collaboration has become increasingly popular. There are several reasons: these solutions are readily available and simple to deploy; they rely on the cloud as their backbone, making them geographically available nearly everywhere; and they are simple for end users to operate.

From a cost perspective, these solutions can also help organizations shift from a Capex cost model to an Opex model. They no longer need to purchase infrastructure upfront for multiple physical locations and can instead pay for the capacity they actually use without making massive investments.

The other major advantage of distributed cloud file storage is its ubiquity, which makes it perfect for remote work. Remote work was already on the rise before the global Covid-19 pandemic, but sudden, drastic measures taken to protect public health had a significant impact on everyday logistics for organizations and workers. Remote collaboration was suddenly no longer a matter of choice or policy, and organizations quickly needed to find ways to do it better. Although the worst stages of the pandemic seem to be over, its impact on work habits is likely to last, and with it the need for remote data access.

The classic hub-and-spoke architecture that remote workers use to connect to their corporate network and access files on NAS is no longer scalable. Data must now be available everywhere, instantly and securely, protected against threats such as ransomware. Organizations also need clear insights into their data: what data is generated, by whom, and how fast the footprint is increasing. They need clear visibility into the cost impact of data growth, ways to manage and clean up stale data, and the means to observe and take action in response to abnormal activities.

While distributed cloud file storage tenets are well-established and most solutions offer a solid distributed architecture, new challenges continue to arise. The most immediate of those are ransomware and other advanced threats, which can cause widespread chaos and irreparable damage to organizations. Already a hot topic last year, effective ransomware protection is becoming critical and requires not only foundational capabilities such as immutable snapshots from which organizations can recover data, but also proactive detection and mitigation of malicious activity.

Regulatory constraints also impact data governance. Consumer protection laws such as GDPR and CCPA give customers greater control over their data and impact how organizations manage that data.

Nation-states are also starting to understand that data is a strategic asset and are beginning to impose strict data sovereignty regulations that require data assets to be physically stored within the given country’s territory. Data needs to be tracked, classified, and treated appropriately in order to comply with the regulations of both governments and industry bodies.

This report highlights key distributed cloud file storage vendors and equips IT decision-makers with the information needed to select the best fit for their business and use-case requirements. In the corresponding GigaOm report Key Criteria for Evaluating File-Based Cloud Storage Solutions, we describe in more detail the key features and metrics that are used to evaluate vendors in this market.

2. Market Categories and Deployment Types

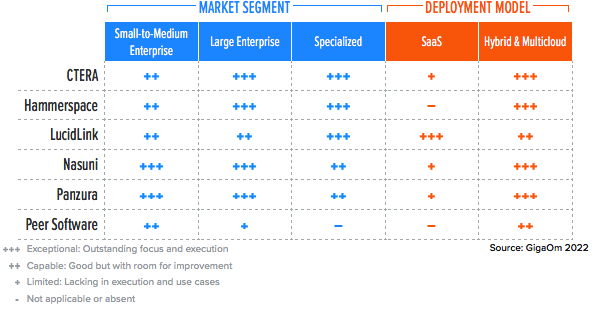

For a better understanding of the market and vendor positioning (Table 1), we assess how well solutions for distributed cloud file storage are positioned to serve specific market segments:

• Small-to-medium enterprise: In this category, we assess solutions on their ability to meet the needs of organizations ranging from small businesses to medium-sized companies. We also assess departmental use cases in large enterprises, where ease of use and deployment are more important than extensive management functionality, data mobility, and feature set.

• Large enterprise: These offerings are assessed on their ability to support large and business-critical projects. Optimal solutions in this category will have a strong focus on flexibility, performance, data services, and features to improve security and data protection. Scalability is another big differentiator, as is the ability to deploy the same service in different environments.

• Specialized: Optimal solutions are designed for specific workloads and use cases; for example, handling massively large files (for M&E, healthcare verticals, and so on). When a solution fits a specialized use case, this will be mentioned in the appropriate section.

We also recognize 2 deployment models for solutions in this report: cloud-only (SaaS), and hybrid and multicloud:

• SaaS: The solution is available in the cloud as a managed service. Often designed, deployed, and managed by the service provider or the storage vendor, it is available only from that specific provider. The big advantages of this type of solution are simplicity and integration with other services offered by the cloud service provider.

• Hybrid and multicloud solutions: These solutions are meant to be installed both on-premises and in the cloud, fitting in with hybrid or multicloud storage infrastructures. Compared with SaaS offerings, their integration with any single cloud provider may be more limited, and they can be more complex to deploy and manage. On the other hand, they are more flexible, and the user usually has more control over resource allocation and tuning throughout the entire stack.

Table 1. Vendor Positioning

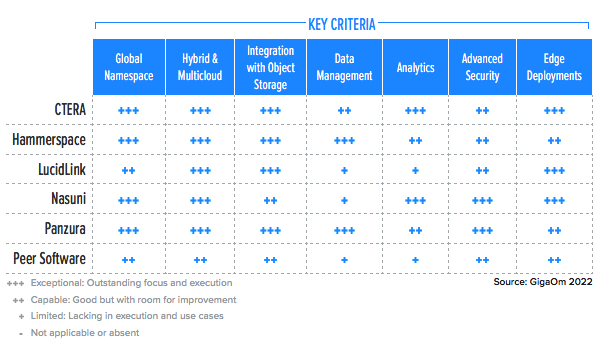

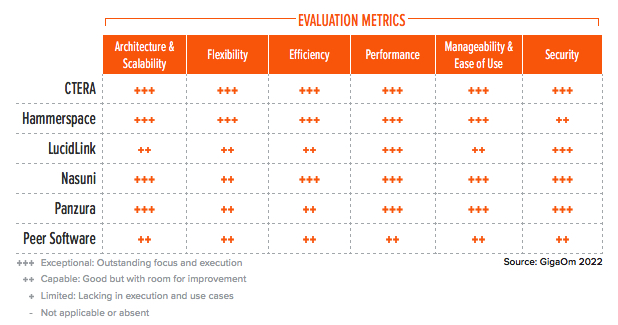

3. Key Criteria Comparison

Building on the findings from the GigaOm report, Key Criteria for Evaluating File-based Cloud Storage Solutions, Table 2 summarizes how each vendor included in this research performs in the areas we consider differentiating and critical in this sector. Table 3 follows this summary with insight into each product’s evaluation metrics – the top-line characteristics that define the impact each will have on the organization.

The objective is to give the reader a snapshot of the technical capabilities of available solutions, define the perimeter of the market landscape, and gauge the potential impact on the business.

Table 2. Key Criteria Comparison

Table 3. Evaluation Metrics Comparison

By combining the information provided in the tables above, the reader can develop a clear understanding of the technical solutions available in the market.

4. GigaOm Radar

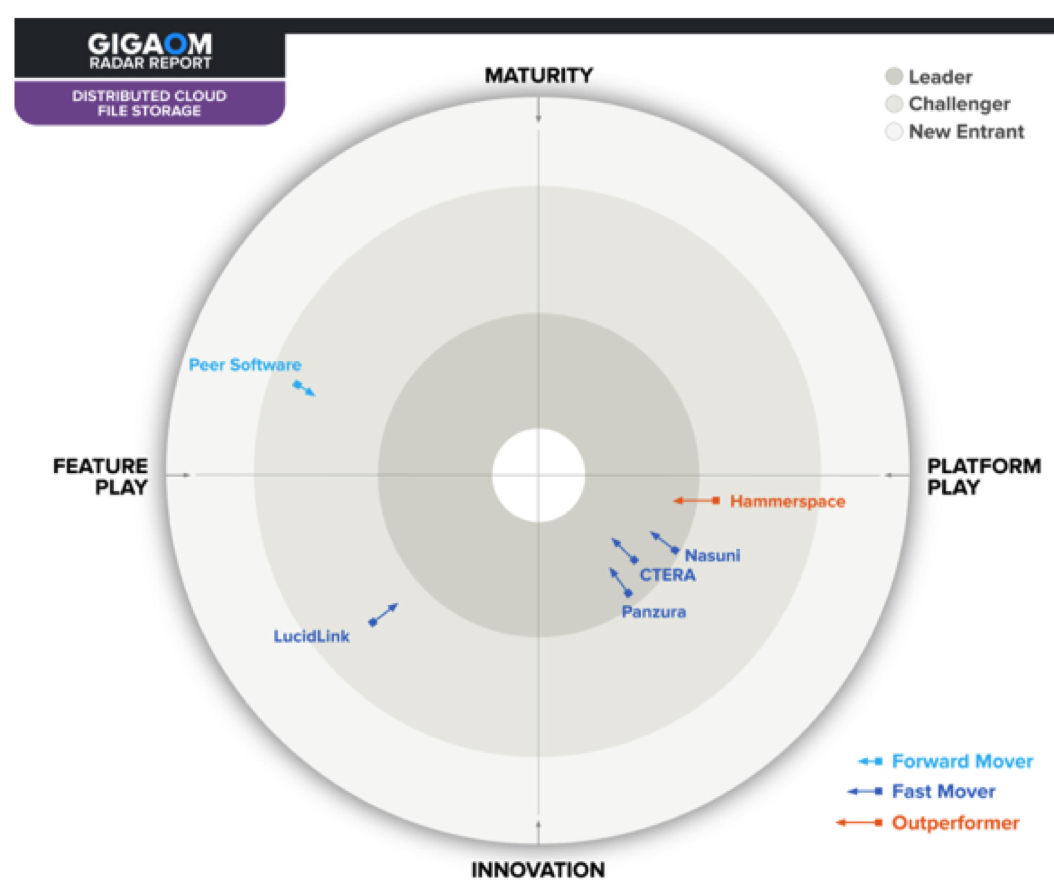

This report synthesizes the analysis of key criteria and their impact on evaluation metrics to inform the GigaOm Radar graphic in Figure 1. The resulting chart is a forward-looking perspective on all the vendors in this report, based on their products’ technical capabilities and feature sets.

The report plots vendor solutions across a series of concentric rings, with those set closer to the center judged to be of higher overall value. The chart characterizes each vendor on 2 axes – balancing Maturity vs. Innovation, and Feature Play vs. Platform Play – while providing an arrow that projects each solution’s evolution over the coming 12 to 18 months.

Figure 1. GigaOm Radar for Distributed Cloud File Storage

As you can see in Figure 1, there is just one vendor with Outperformer status this year. The technology and solutions are maturing, and even though the Leaders continue to innovate, the pace is not as aggressive as it used to be. Innovation areas primarily cover data management, data analytics, and advanced security (most notably ransomware protection).

The right half of the Radar holds 4 innovative, Platform-Play solutions, 3 of them Leaders: CTERA, Panzura, and Nasuni. The solutions may appear to be very similar, but each has unique differentiators.

Two of them, Panzura and Nasuni, have been redesigned, moving from a monolithic architecture to a pluggable one that better integrates with existing data services and can provide simpler integration with future extensions.

CTERA offers a massively scalable solution with an accelerated, low-latency transfer protocol, best-in-class data tiering capabilities, a zero-trust security architecture, outstanding edge access features, and a data services architecture that includes connectors for CTERA microservices and third-party services such as data classification, full-text indexing, and search.

Panzura differentiators include its Data Services, an advanced data management suite with a host of capabilities, and its Enhanced Ransomware Protection with proactive detection and alerting.

For its part, Nasuni runs fully in the cloud, with a multicloud back end, excellent ransomware protection, and edge deployment capabilities. Nasuni recently introduced a hybrid-work add-on designed to make file management and access from remote locations faster and more secure. Data management capabilities are currently missing but should be added in the near future thanks to a recent acquisition.

Hammerspace follows the Leaders closely with a cloud file system solution based on a distributed global namespace that can also address distributed cloud file storage requirements. The solution was designed for greater versatility and a broader range of use cases, particularly high-performance workloads. This explains its stronger focus on building a robust architectural foundation that excels in hybrid and multicloud capabilities, as well as integration with object storage.

LucidLink and Peer Software, as Feature-Play solutions, can be found in the left half of the Radar.

LucidLink, in the Innovation quadrant, focuses on globally, instantly accessible data with one particularity – data is streamed as it is read. Streaming makes the solution especially well-suited for industries and use cases that rely on remote access to massive, multiple-terabyte files, such as the media and entertainment industry. The solution also shows excellent integration with object storage and great support for hybrid and multicloud models. The solution relies on a zero-knowledge model that prevents LucidLink from accessing customer data. This security advantage unfortunately reduces the solution’s ability to deliver data management and data analytics outcomes.

Located in the Maturity quadrant, Peer Software offers a proven architecture that allows organizations to build abstracted distributed file services on top of existing storage infrastructure, while also supporting scalability in the cloud. Peer Software’s solution protects existing storage investments and extends their usage to distributed cloud file storage, while its PeerMED technology provides an astute approach to ransomware detection.

5. Vendor Insights

CTERA

It offers a distributed cloud file storage platform built around its massively scalable Global File System (GFS), which can reside in the cloud as well as in on-premises infrastructure. CTERA’s GFS presents file interfaces on the front end and leverages private or public S3 object storage on the back end.

Organizations can access GFS through 3 components:

• An Edge Filer gateway (SMB/NFS network filer), which can be deployed centrally or at edge locations, either as a virtual edge filer or as one of a selection of hardware edge filers with capacities ranging from compact 2TB devices to large devices of up to 256TB.

• The CTERA Drive (a desktop/VDI agent).

• The CTERA mobile application.

The solution relies on CTERA Direct, an accelerated, bidirectional edge-to-cloud sync protocol that builds on existing source-based deduplication and compression by increasing parallelism. The protocol delivers wire-speed cloud throughput even over relatively high network latencies (in the 200ms range) and supports continuous data sync to the cloud, making the solution suitable for data-intensive workloads. The solution is managed through the CTERA Portal, which also manages the GFS. A new instant DR Filer capability allows a CTERA filer to be deployed and become operational immediately while metadata synchronizes in the background. Administrators can also use policies to pin data that must always be downloaded first in any new filer deployment, and pre-seeding is also possible.
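Why parallelism helps over high-latency links can be shown with simple bandwidth-delay arithmetic: a single window-limited stream can move at most one window of data per round trip. The sketch below assumes a hypothetical 1 Gbit/s link and a 1 MiB window per stream, and estimates how many concurrent streams are needed at a 200 ms round-trip time. It illustrates the general principle only and is not a model of the CTERA Direct protocol, whose internals are not described here.

```python
import math

def per_stream_bps(window_bytes: int, rtt_s: float) -> float:
    """Upper bound for one window-limited stream: one window per round trip, in bits/s."""
    return window_bytes * 8 / rtt_s

def streams_needed(link_bps: float, window_bytes: int, rtt_s: float) -> int:
    """Parallel streams needed to fill the link, ignoring packet loss and protocol overhead."""
    return math.ceil(link_bps / per_stream_bps(window_bytes, rtt_s))

# Hypothetical figures: 1 Gbit/s link, 1 MiB window per stream, 200 ms round-trip time.
LINK_BPS = 1_000_000_000
WINDOW_BYTES = 1024 * 1024
RTT_S = 0.200

single = per_stream_bps(WINDOW_BYTES, RTT_S)
print(f"one stream: {single / 1e6:.1f} Mbit/s")
print(f"streams to fill the link: {streams_needed(LINK_BPS, WINDOW_BYTES, RTT_S)}")
```

With these assumed numbers, one stream tops out near 41.9 Mbit/s, so about 24 concurrent streams would be needed to saturate the link; halving the round-trip time halves that count.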

Since CTERA implements a global namespace, organizations can define zones dedicated to various departments or tenants. Administrators can define granular permissions around zones and authorized users. These zones also can segment data geographically for each tenant or enforce data sovereignty requirements in specific jurisdictions.

The global namespace is fully transparent to end users. Administrators can use CTERA Migrate – a built-in migration engine – to discover, assess, and automatically import file shares from NAS systems. Existing file systems are supported via native file synchronization and share capabilities on Windows, Linux, macOS, and mobile devices.

The solution comes with broad deployment options that cover the edge, core infrastructure, and the cloud. It concurrently supports multiple clouds, so users can transparently migrate data between clouds or to-and-from on-premises object stores.

When customers use AWS S3 as the back-end object storage for CTERA, the solution can use S3 Intelligent-Tiering, which moves data between the S3 Frequent Access and Infrequent Access tiers, helping organizations achieve further cost savings.
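The tiering behavior described above is performed by S3 itself, based on access patterns. The following is a simplified model of that rule for the two tiers mentioned, assuming the 30-consecutive-day no-access threshold that AWS documents for moving objects to Infrequent Access; the archive tiers are left out, and the object keys and dates are made up for illustration. Nothing here calls AWS.

```python
from datetime import date, timedelta

# Simplified model of the S3 Intelligent-Tiering rule for the Frequent and Infrequent
# Access tiers. S3 applies this automatically; this code only illustrates the logic.
INFREQUENT_AFTER_DAYS = 30  # documented no-access threshold for the Infrequent Access tier

def tier_for(last_access: date, today: date) -> str:
    """Return the tier an object would sit in, given its last access date."""
    idle_days = (today - last_access).days
    if idle_days >= INFREQUENT_AFTER_DAYS:
        return "INFREQUENT_ACCESS"
    return "FREQUENT_ACCESS"  # an access moves an object back to this tier

today = date(2022, 11, 16)
last_access = {  # hypothetical objects and access dates
    "projects/render-001.mov": today - timedelta(days=3),
    "archive/2021-q4-report.pdf": today - timedelta(days=45),
}
for key, accessed in last_access.items():
    print(key, tier_for(accessed, today))
```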

CTERA also can be deployed entirely on-premises in a fully private architecture that meets the stringent security requirements of several homeland security customers.

CTERA Insight provides data analytics capabilities. This data visualization service analyzes file assets by type, size, and usage trends, and presents the information through a well-organized, customizable user interface. Besides data insights, this interface also provides real-time usage, health, and monitoring capabilities that encompass not only central components but also edge appliances. CTERA’s SDK allows API integrations, as well as S3 connectors, to enable microservices to perform data management tasks (CTERA supports Kafka in production).

The solution is architected around a zero-trust foundation with military-grade security hardening: all filers are considered untrusted and never receive privileged credentials, while every individual request is reviewed and digitally signed by the CTERA Portal before access is granted. The solution uses WORM snapshots as a foundational, built-in ransomware protection layer. Advanced ransomware protection is available through a third-party integration with Varonis. The company also offers a Kafka-based log forwarder module, allowing any security information and event management (SIEM) solution to monitor and analyze logs, assist with data classification, and identify risky permission settings. The firm also provides true multitenancy with chargeback, isolation, reporting, and the ability to segment multiple independent customers or sub-units.
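The idea that untrusted filers never hold privileged credentials, while a central portal reviews and signs each request, can be sketched as follows. This is a hypothetical illustration, not CTERA’s actual scheme: the key, the ACL, and the function names are all assumptions, and HMAC-SHA256 is used only because Python’s standard library provides it.

```python
import hashlib
import hmac
import json
from typing import Optional

# Hypothetical sketch: only the portal holds PORTAL_KEY, so an untrusted filer
# can ask for a request to be approved but cannot forge an approval itself.
PORTAL_KEY = b"portal-only-secret"  # illustrative value, never distributed to filers
ACL = {("alice", "/finance/q3.xlsx"): {"read"}}  # hypothetical permissions

def _message(user: str, path: str, action: str) -> bytes:
    return json.dumps([user, path, action]).encode()

def portal_sign(user: str, path: str, action: str) -> Optional[str]:
    """Portal reviews each individual request against the ACL and signs it only if allowed."""
    if action not in ACL.get((user, path), set()):
        return None
    return hmac.new(PORTAL_KEY, _message(user, path, action), hashlib.sha256).hexdigest()

def verify(user: str, path: str, action: str, signature: str) -> bool:
    """Check that this exact request (user, path, action) was approved by the portal."""
    expected = hmac.new(PORTAL_KEY, _message(user, path, action), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, signature)

sig = portal_sign("alice", "/finance/q3.xlsx", "read")
print(sig is not None and verify("alice", "/finance/q3.xlsx", "read", sig))  # True
print(portal_sign("alice", "/finance/q3.xlsx", "write"))                     # None
```

Because HMAC is symmetric, whoever verifies must share the portal’s key; a production design would more likely use public-key signatures so that verifiers hold no signing secret.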

The solution’s architecture allows edge deployments for small branches or heavy-duty work-from-home (WFH) users through the remotely managed CTERA HC100 Edge appliance, a filer with a 2TB embedded NVMe cache, as well as its Drive Connect software for Windows and macOS. Mobile collaboration is also possible through its Drive mobile application for iOS and Android. Edge clients maintain a direct relationship with the global file system, bypassing the filers.

Strengths: Offers multiple methods to access its GFS, as well as solutions built for edge use cases. It has a great migration engine and multitenancy features, and the excellent high-throughput, low-latency capabilities of its CTERA Direct transfer protocol are also worth mentioning.

Challenges: Despite a very strong focus on security, the choice to offload advanced ransomware protection to third-party software may be off-putting to some organizations.

Hammerspace

Its parallel global file system helps organizations overcome the siloed nature of hybrid cloud file storage by providing a single file system regardless of a site’s geographic location, the storage vendor, or whether storage is on-premises or cloud-based. It achieves this by separating the control plane (metadata) from the data plane (where data actually resides). It is compliant with several versions of the NFS and Server Message Block (SMB) protocols and includes RDMA support for NFSv4.2. Although its primary use case is in the cloud file system space (particularly around high-performance workloads), the solution can also meet the needs of distributed cloud file storage, as well as the requirements of organizations that may need a single solution for both use cases.

The solution lets customers automate data handling through objective-based policies that use, access, store, protect, and move data around the world through a single global namespace; users do not need to know where resources are physically located. The product relies on the intelligent use of metadata across file system standards, including telemetry data (such as IO/s, throughput, and latency) as well as user-defined and analytics-harvested metadata, which allows users or integrated applications to rapidly view, filter, and search metadata in place instead of relying on file names. The company now also supports user-enriched metadata through its Metadata Plugin, a Windows-based application that lets users create custom metadata tags directly within the Windows GUI. Hammerspace interprets this custom metadata, which can be used not only for classification but also to create data placement, disaster recovery, or data protection policies.
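Tag-driven placement of this kind can be pictured as an ordered list of rules, each mapping required metadata tags to target locations. The sketch below is a hypothetical illustration, not Hammerspace’s policy syntax: the tag names, location names, and first-match semantics are all assumptions.

```python
from typing import Dict, List, Tuple

# Hypothetical objective-based policies: each maps required metadata tags to target locations.
# Order matters: the first policy whose tags all match wins, so specific rules come first.
Policy = Tuple[Dict[str, str], List[str]]
POLICIES: List[Policy] = [
    ({"project": "apollo", "classification": "confidential"}, ["onprem-boston"]),
    ({"project": "apollo"}, ["onprem-boston", "aws-us-east-1"]),
    ({"retention": "archive"}, ["azure-archive"]),
]

def placement_for(tags: Dict[str, str]) -> List[str]:
    """Return target locations for a file, given its user-defined metadata tags."""
    for required, targets in POLICIES:
        if all(tags.get(key) == value for key, value in required.items()):
            return targets
    return ["default-pool"]  # fallback when no policy matches

print(placement_for({"project": "apollo", "owner": "vfx-team"}))
print(placement_for({"project": "apollo", "classification": "confidential"}))
print(placement_for({}))
```

Because placement is computed from tags rather than paths, retagging a file is enough to change where it should live; the file name and namespace location stay the same.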

Hammerspace can be deployed on-premises or to the cloud, with support for AWS, Microsoft Azure, Google Cloud Platform (GCP), Seagate Lyve, and several other cloud platforms. It implements share-level snapshots as well as comprehensive replication capabilities, allowing files to be replicated automatically across different sites through the vendor’s Policy Engine. Manual replication activities are available on demand as well. These capabilities allow organizations to implement multisite, active-active disaster recovery with automated failover and failback. Scalability has been improved, with twice the number of sites supported in multisite deployments, and up to 100 object buckets.

Integration with object storage is also a core capability of the company because data can be not only replicated or saved to the cloud, but also automatically tiered on object storage, thus reducing the on-premises data footprint and leveraging cloud economics to keep storage spend under control.

One of Hammerspace’s key features is its “Autonomic Data Management” ML engine. It runs a continuous market economy simulation which, combined with telemetry data from a customer’s environment, helps users make real-time, cross-cloud data placement decisions based on performance and cost. Although the company categorizes this feature as data management, in the context of the GigaOm report, Key Criteria for Evaluating File-Based Cloud Storage Solutions, this capability relates more to the hybrid and multicloud and integration with object storage key criteria.
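A placement decision that trades off performance against cost can be reduced, in its simplest form, to “the cheapest location that still meets a latency objective.” The sketch below is only that greedy rule, with hypothetical locations and prices; it is not a market economy simulation and makes no claim about how Hammerspace’s ML engine works.

```python
from typing import List, NamedTuple, Optional

class Location(NamedTuple):
    name: str
    cost_per_gb_month: float  # hypothetical price
    latency_ms: float         # e.g. as observed through telemetry

def cheapest_meeting_slo(locations: List[Location], max_latency_ms: float) -> Optional[Location]:
    """Pick the lowest-cost location whose observed latency meets the objective."""
    candidates = [loc for loc in locations if loc.latency_ms <= max_latency_ms]
    return min(candidates, key=lambda loc: loc.cost_per_gb_month, default=None)

LOCATIONS = [  # hypothetical environment
    Location("onprem-nvme", 0.10, 1.0),
    Location("cloud-a-standard", 0.023, 25.0),
    Location("cloud-b-standard", 0.020, 40.0),
]

print(cheapest_meeting_slo(LOCATIONS, 30).name)  # cloud-a-standard
print(cheapest_meeting_slo(LOCATIONS, 5).name)   # onprem-nvme
```

Re-running the choice as telemetry updates the latency figures is what would make such a decision “real-time”; returning `None` signals that no location satisfies the objective.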

Ransomware protection is offered through immutable file shares with global snapshot capabilities as well as an undelete function and file versioning, allowing users to revert to a file version not affected by ransomware-related data corruption. Auditing has also been improved, with a now-global audit trail capability.

It’s also worth noting the solution’s availability in the Azure Marketplace as well as its integration with Snowflake, allowing Snowflake analytics to run directly on Hammerspace without having to move data to the Snowflake cloud. The firm is also partnering with Seagate on the release of a Corvault-based appliance capable of running Hammerspace at the edge.

Strengths: Its distributed global file system offers a very balanced set of capabilities, using the power of metadata to deliver replication as well as hybrid and multicloud capabilities.

Challenges: Ransomware detection capabilities are missing.

LucidLink

Its Filespace is a cloud-native, high-performance file system for distributed workloads, designed to address the limitations of the NFS and SMB protocols. Delivered as a SaaS solution, it stores files in an S3-compatible object storage back end and streams files on demand. When a file is stored in LucidLink, its metadata is separated from the actual data, and the file is split into multiple chunks, each stored as an individual S3 object in the back end.

Applications still see the file as a single entity: LucidLink streams the file from the back end, presenting data to the application as it is requested without affecting performance or bandwidth. This method of handling data lets organizations work seamlessly with multiple-terabyte files without waiting for full file replication or synchronization, which makes the solution particularly suited to organizations that manipulate huge files, such as those in the M&E industry.
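The chunk-and-stream approach can be sketched as follows: a file is split into fixed-size chunks stored as separate objects, and a read fetches only the chunks that overlap the requested byte range. The chunk size, key naming, and the dictionary standing in for an S3 bucket are assumptions for illustration; LucidLink’s actual chunk layout is not described in this report.

```python
from typing import Dict

CHUNK_SIZE = 4  # tiny for readability; real chunk sizes would be far larger

def chunk_key(file_id: str, n: int) -> str:
    return f"{file_id}/chunk-{n:08d}"  # hypothetical object key scheme

def split_into_objects(file_id: str, data: bytes) -> Dict[str, bytes]:
    """Split a file into fixed-size chunks, each stored as its own object."""
    return {chunk_key(file_id, i // CHUNK_SIZE): data[i:i + CHUNK_SIZE]
            for i in range(0, len(data), CHUNK_SIZE)}

def read_range(store: Dict[str, bytes], file_id: str, offset: int, length: int) -> bytes:
    """Fetch only the chunks overlapping [offset, offset + length); assumes the range is in the file."""
    if length <= 0:
        return b""
    first = offset // CHUNK_SIZE
    last = (offset + length - 1) // CHUNK_SIZE
    fetched = b"".join(store[chunk_key(file_id, n)] for n in range(first, last + 1))
    start = offset - first * CHUNK_SIZE
    return fetched[start:start + length]

store = split_into_objects("file-42", b"abcdefghijklmnop")
print(len(store), read_range(store, "file-42", 5, 6))  # 4 b'fghijk'
```

In this example, reading 6 bytes at offset 5 touches only 2 of the 4 chunk objects, which is why an application can start working on a large file without waiting for the whole file to download.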

From an architectural perspective, the solution consists of 2 components: the Client and the Service. The client runs at the OS level on servers and desktops (mobile platforms are on the roadmap), presenting files as if they were local and handling compression, prefetching, encryption, and caching.

The solution offers a shared global namespace and full file-system semantics. The firm’s service runs in the cloud and manages metadata coordination, garbage collection, file locking, snapshots, and other optimization features. The metadata is encrypted by the client, then synchronized across all connected clients to ensure the company has zero knowledge of either the data or metadata, and the cloud providers cannot view the data either. File data itself is stored in the object store.

The vendor relies primarily on public cloud object storage providers, allowing organizations to implement volumes in the petabyte range, with hundreds of millions of files on each volume. The solution supports any S3 object store, whether on-premises or in the cloud, as well as Microsoft Azure Blob Storage.

Streaming data from the cloud does have an impact on egress fees. LucidLink partners with Wasabi for general-purpose storage (leveraging free egress traffic) and IBM Cloud Object Storage for high-performance access (with attractive egress pricing). Customers can also provide their own cloud storage if they wish.

From a security perspective, the company implements a zero-trust access model, with full support for in-flight and at-rest encryption, as well as multitenancy.

Organizations can bring their own key management systems, and users create their own encryption keys when they initialize a filespace. Data is encrypted per tenant and protected at the file system level by immutable read-only snapshots. Dubbed a zero-knowledge security model, the design decision to fully encrypt data with customer-provided encryption keys is a double-edged sword: it provides security for the organization, but it also completely obfuscates data, reducing the scope of data management and data analytics capabilities because the firm has no access to customer data.

LucidLink’s management interface is well designed, but its analytics and monitoring capabilities are an area for potential improvement: advanced capabilities are still mostly on the roadmap and are constrained by the architectural limitations described above.

Strengths: Offers a compelling solution for organizations that require data to be stored globally and accessed instantly. Its architecture enables users to access huge files seamlessly through residential network connections, making collaboration and remote work completely transparent. The roadmap is interesting, with improvements planned in public API and automation support as well as data tiering.

Challenges: The solution’s security-centric architecture and strong focus on end-to-end, customer-controlled encryption significantly limit the implementation of advanced data management and data analytics capabilities.

Nasuni

It offers a SaaS solution for enterprise file data services, with an object-based global file system as its main engine and many familiar file interfaces, including SMB and NFS. It is integrated with all major cloud providers and works with on-premises S3-compatible object stores.

The company recently changed its platform to separate its non-core capabilities from the core and take a modular approach to data services. The solution now consists of one core platform with add-on services across multiple areas, including ransomware protection and hybrid work, with data management and content intelligence services planned. Many customers implement the solution to replace traditional NAS and Windows File Servers, and its characteristics enable users to replace several additional infrastructure components as well, such as backup, disaster recovery, data replication services, and archiving platforms.

The firm offers a global file system called UniFS, which provides a layer that separates files from storage resources, managing one master copy of data in public or private cloud object storage while distributing data access. The global file system manages all metadata – such as versioning, access control, audit records, and locking – and provides access to files via standard protocols such as SMB and NFS.

Files in active use are cached on Nasuni Edge Appliances, so users benefit from high-performance access through existing drive mappings and share points. All files, including those in use across multiple local caches, have their master copies stored in cloud object storage so they are globally accessible from any access point.

The Nasuni Management Console delivers centralized management of the global edge appliances, volumes, snapshots, recoveries, protocols, shares, and more. The web-based interface can be used for point-and-click configuration, but the vendor also offers a REST API method for automated monitoring, provisioning, and reporting across any number of sites. In addition, its Health Monitor reports to its Management Console on the health of the CPU, directory services, disk, file system, memory, network, services, NFS, SMB, and so on. The vendor also integrates with tools like Grafana and Splunk for further analytics. In contrast, data management capabilities are currently absent, but Nasuni’s purchase of data management company Storage Made Easy in June 2022 suggests notable improvements in this area in the coming months.

The company provides ransomware protection in its core platform through its Continuous File Versioning and Rapid Ransomware Recovery features. To further shorten recovery times, the company recently introduced Ransomware Protection, a paid add-on that augments immutable snapshots with proactive detection and automated mitigation capabilities. The service analyzes malicious extensions, ransom notes, and suspicious incoming files based on signature definitions pushed to Nasuni Edge Appliances, automatically stops attacks, and gives administrators a map of the most recent clean snapshot to restore from. A future iteration of the solution (on the roadmap) will implement AI/ML-based analysis on edge appliances.
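Signature-based detection followed by “restore from the most recent clean snapshot” can be sketched in a few lines. The extension list, ransom-note names, and event format below are hypothetical stand-ins for the signature definitions mentioned above, not Nasuni’s actual definitions or data model.

```python
from datetime import datetime
from typing import List, Optional, Tuple

# Hypothetical signature definitions of the kind pushed to edge appliances.
BAD_EXTENSIONS = (".locked", ".crypt")
RANSOM_NOTE_NAMES = {"readme_to_decrypt.txt", "how_to_recover_files.txt"}

def is_suspicious(path: str) -> bool:
    """Match a file name against extension and ransom-note signatures."""
    name = path.rsplit("/", 1)[-1].lower()
    return name in RANSOM_NOTE_NAMES or name.endswith(BAD_EXTENSIONS)

def last_clean_snapshot(events: List[Tuple[datetime, str]],
                        snapshots: List[datetime]) -> Optional[datetime]:
    """Most recent snapshot taken strictly before the first suspicious file event."""
    hits = [ts for ts, path in events if is_suspicious(path)]
    if not hits:
        return max(snapshots, default=None)
    first_hit = min(hits)
    return max((s for s in snapshots if s < first_hit), default=None)

events = [
    (datetime(2022, 11, 16, 9, 5), "/share/budget.xlsx"),
    (datetime(2022, 11, 16, 9, 42), "/share/budget.xlsx.locked"),
    (datetime(2022, 11, 16, 9, 43), "/share/README_TO_DECRYPT.txt"),
]
snapshots = [datetime(2022, 11, 16, 9, 0), datetime(2022, 11, 16, 9, 30),
             datetime(2022, 11, 16, 10, 0)]
print(last_clean_snapshot(events, snapshots))  # 2022-11-16 09:30:00
```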

Edge Appliances are lightweight VMs or hardware appliances that cache frequently accessed files and deliver high-performance SMB or NFS access to Windows, macOS, and Linux clients. They can be deployed on-premises or in the cloud to replace legacy file servers and NAS. They encrypt and deduplicate files, then snapshot them at frequent intervals to the cloud, where they are written to object storage in read-only format.
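Deduplication before upload means that repeated content is stored, and sent to the cloud, only once. A minimal sketch, assuming fixed-size chunks keyed by their SHA-256 digest (the report does not describe Nasuni’s actual deduplication granularity or hashing scheme):

```python
import hashlib
from typing import Dict, List

def dedupe(data: bytes, chunk_size: int, store: Dict[str, bytes]) -> List[str]:
    """Keep one copy of each unique chunk (keyed by SHA-256); return the file's chunk list."""
    recipe = []
    for i in range(0, len(data), chunk_size):
        chunk = data[i:i + chunk_size]
        digest = hashlib.sha256(chunk).hexdigest()
        store.setdefault(digest, chunk)  # a repeated chunk is not stored (or uploaded) again
        recipe.append(digest)
    return recipe

def rebuild(recipe: List[str], store: Dict[str, bytes]) -> bytes:
    """Reassemble a file from its ordered list of chunk digests."""
    return b"".join(store[d] for d in recipe)

store: Dict[str, bytes] = {}
recipe = dedupe(b"AAAABBBBAAAA", 4, store)
print(len(recipe), "chunks referenced,", len(store), "stored")  # 3 chunks referenced, 2 stored
```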

The company’s Access Anywhere add-on service provides local synchronization capabilities, secure and convenient file sharing (including sharing outside of the organization), and integration with Microsoft Teams. Finally, the edge appliances also provide search and file acceleration services.

Strengths: Offers a great and efficient distributed file system solution that is secure and scalable. The solution offers protection against ransomware at a very fine level, and with the edge appliances, customers can access their frequently used data in a fast and secure way.

Challenges: Data management services are currently absent, but this missing capability should be addressed relatively soon.

Panzura

It offers a distributed cloud file storage solution based on its CloudFS file system. The solution works across sites (public and private clouds) and provides a single data plane with local file operation performance, automated file locking, and immediate global data consistency. Recently, the solution was redesigned with a modular architecture that will gradually allow more data services to integrate seamlessly with Panzura’s core platform.

It implements a global namespace and tackles data integrity requirements through a global file-locking mechanism that provides real-time data consistency regardless of where a file is accessed from around the world. It also provides efficient snapshot management with version control, and allows administrators to configure retention policies as needed. Backup and DR capabilities are offered as well.
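Global file locking ensures that only one site can write to a given file at a time, whichever site the request comes from. The sketch below centralizes the lock table in a single in-process object for clarity; this is an assumption made for illustration, as Panzura’s actual locking is distributed across its filers and its protocol is not described here.

```python
import threading
from typing import Dict, Optional

class GlobalLockManager:
    """Hypothetical central lock table granting one writer per path across all sites."""

    def __init__(self) -> None:
        self._owners: Dict[str, str] = {}
        self._mutex = threading.Lock()  # serializes concurrent lock requests

    def acquire(self, path: str, site: str) -> bool:
        """Grant the lock if the path is free or already held by the same site."""
        with self._mutex:
            holder = self._owners.get(path)
            if holder is None or holder == site:
                self._owners[path] = site
                return True
            return False

    def release(self, path: str, site: str) -> None:
        """Release the lock only if this site currently holds it."""
        with self._mutex:
            if self._owners.get(path) == site:
                del self._owners[path]

    def owner(self, path: str) -> Optional[str]:
        with self._mutex:
            return self._owners.get(path)

mgr = GlobalLockManager()
print(mgr.acquire("/cad/bridge.dwg", "london"))  # True
print(mgr.acquire("/cad/bridge.dwg", "tokyo"))   # False: London holds the lock
```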

The firm relies on S3 object stores and supports a range of object storage solutions, whether in the public cloud or on-premises. A feature called Cloud Mirroring provides back-end redundancy by also writing data to a second cloud storage provider, so data remains available even if one of the providers suffers a failure. Tiering and archiving are also implemented in Panzura.
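Mirroring to a second provider can be pictured as “write to every back end, read from any back end that is reachable.” In the sketch below, in-memory objects stand in for cloud object stores, and the class and function names are hypothetical. For simplicity it refuses writes while any provider is offline; a real system would more likely queue the missed writes and resynchronize later.

```python
from typing import Dict, List

class ObjectStore:
    """In-memory stand-in for a cloud object store; `online` simulates an outage."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.online = True
        self.objects: Dict[str, bytes] = {}

def mirrored_put(stores: List[ObjectStore], key: str, data: bytes) -> None:
    """Write the same object to every provider so that each holds a full copy."""
    offline = [s.name for s in stores if not s.online]
    if offline:
        raise ConnectionError(f"cannot mirror write; offline: {offline}")
    for store in stores:
        store.objects[key] = data

def mirrored_get(stores: List[ObjectStore], key: str) -> bytes:
    """Read from the first provider that is reachable and holds the object."""
    for store in stores:
        if store.online and key in store.objects:
            return store.objects[key]
    raise KeyError(key)

primary, secondary = ObjectStore("cloud-a"), ObjectStore("cloud-b")
mirrored_put([primary, secondary], "vol1/block-0001", b"payload")
primary.online = False  # simulate an outage at the first provider
print(mirrored_get([primary, secondary], "vol1/block-0001"))  # b'payload'
```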

Analytics capabilities are offered through Panzura Data Services, a set of advanced features that provide global search, user auditing, one-click file restoration, and monitoring functions aimed at core metrics and storage consumption, showing, for example, frequency of access, active users, and the health of the environment. For data management, the company provides various API services that allow users to connect their data management tools to Panzura. Data Services also allows the detection of infrequently accessed data so that subsequent action can be taken.

Security capabilities include user auditing (through Data Services) and ransomware protection. Ransomware protection is handled with a combination of immutable data (a WORM S3 back end) and read-only snapshots taken every 60s at the global filer level, regularly moving data to the immutable object store, and allowing seamless data recovery in case of a ransomware attack. These are complemented with Enhanced Ransomware Protection (part of Data Services), a paid feature that currently supports proactive ransomware detection and alerting. In the future, it will also support end-user anomaly detection.

The solution also includes a secure erase feature that removes all versions of a deleted file and subsequently overwrites the deleted data with zeros, a feature available even with cloud-based object storage.

One of the new capabilities of the solution is Edge Access, which extends Panzura’s CloudFS directly to users’ local machines.

Strengths: Provides a cloud file system that offers local-access performance levels with global availability, data consistency, tiered storage, and multiple back-end capabilities. Panzura Data Services delivers advanced analytics and data management capabilities that help organizations better understand and manage their data footprint.

Challenges: The Edge Access service was introduced only recently, so there is not yet any customer confirmation or independent verification that it works adequately.

Peer Software

It takes an interesting approach to distributed cloud file storage with its Global File Service (GFS) solution. Whereas other vendors implement their own global distributed file system, Peer Software implements a distributed service on top of existing file systems across heterogeneous storage vendors. This solution's key attributes include global file locking, active-active data replication across sites, and centralized backup.

The PeerGFS architecture consists of 2 components: the PeerGFS Agent and the PeerGFS Hub. The PeerGFS Hub is in charge of configuration, management, and monitoring, and also acts as a message broker that communicates with other PeerGFS Hubs.

PeerGFS has several differentiating features. Instead of implementing a proprietary global namespace, it uses Microsoft DFS to create a namespace across hybrid and multicloud environments. Companies can deploy this solution on-premises or in the cloud. It also supports most hardware-based file systems that offer SMB, as well as Azure Blob and any S3-compatible public cloud storage. NFS support should be available by the end of 2022.

The solution can replicate data across data centers and clouds in an active-active model. It also supports multicloud implementations and replication across availability zones. Only delta changes are replicated to save bandwidth and speed up transfers, and global file locking prevents file version conflicts.
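The report does not say how PeerGFS computes its deltas. As a generic illustration of why replicating only changes saves bandwidth, the sketch below splits a file into fixed-size blocks, compares SHA-256 hashes of the old and new versions at each offset, ships only the blocks that differ, and rebuilds the new file on the target. The function names and the tiny block size are assumptions made for readability, not Peer Software's method.

```python
import hashlib

# Deliberately tiny block size so the example is easy to follow;
# real replication engines use blocks of kilobytes or more.
BLOCK_SIZE = 4


def split_blocks(data: bytes, block_size: int = BLOCK_SIZE) -> list[bytes]:
    """Cut data into fixed-size blocks (the last one may be shorter)."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def digest(block: bytes) -> str:
    return hashlib.sha256(block).hexdigest()


def compute_delta(old: bytes, new: bytes, block_size: int = BLOCK_SIZE) -> dict[int, bytes]:
    """Return only the blocks of `new` whose hash differs from `old` at the same index."""
    old_digests = [digest(b) for b in split_blocks(old, block_size)]
    delta: dict[int, bytes] = {}
    for idx, block in enumerate(split_blocks(new, block_size)):
        if idx >= len(old_digests) or digest(block) != old_digests[idx]:
            delta[idx] = block
    return delta


def apply_delta(old: bytes, delta: dict[int, bytes], new_length: int,
                block_size: int = BLOCK_SIZE) -> bytes:
    """Rebuild the new file on the target from its old copy plus the changed blocks."""
    old_blocks = split_blocks(old, block_size)
    n_blocks = (new_length + block_size - 1) // block_size
    # Any index missing from the delta was unchanged, so the old block is reused.
    return b"".join(delta[i] if i in delta else old_blocks[i] for i in range(n_blocks))


if __name__ == "__main__":
    old = b"hello world, hello sync"
    new = b"hello WORLD, hello sync!"
    delta = compute_delta(old, new)
    print(sorted(delta))  # [1, 2, 5]: only 3 of 6 blocks cross the network
    assert apply_delta(old, delta, len(new)) == new
```

Comparing blocks at fixed offsets is the simplest possible scheme. An insertion near the start of a file shifts every later block and defeats it, which is why tools such as rsync use rolling checksums and some systems use content-defined chunking instead.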

The solution also provides data migration capabilities. Data can be backed up to S3-compatible object stores and public cloud platforms. Backed-up data is stored as individual files, which enables file-level restores and allows immediate data reuse for use cases relying on file-based datasets.

The product delivers monitoring and other capabilities through the PeerGFS management interface. The ability to detect user or application activity on the distributed file service is built into PeerGFS through an analysis and detection engine named Peer Malicious Event Detection (MED), which analyzes the environment and bait files to detect malicious activity, including ransomware attacks. Pattern matching is another way to identify malicious activity by looking for certain types of behavior. A third measure is trap folders, a series of hidden, recursive folders created by Peer MED to target malware. Whenever one of these mechanisms detects suspicious activity, or a bait file or trap folder is touched, Peer MED's action engine is triggered and can respond in a variety of ways, from raising an alert to disabling an agent and stopping a job. Further ransomware protection measures, such as enabling immutable snapshots on the target S3 storage used for backups, have yet to be implemented.
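The report does not describe Peer MED's internals. The sketch below only illustrates the general decoy idea behind bait files and trap folders: any access to a planted decoy raises an alert, and a modification escalates to disabling the agent. The event fields, decoy paths, operation names, and actions are all hypothetical, and the behavioral pattern matching that Peer MED also performs is not modeled.

```python
from dataclasses import dataclass
from enum import Enum
from pathlib import PurePosixPath


class Action(Enum):
    ALERT = "alert"
    DISABLE_AGENT = "disable_agent"


@dataclass(frozen=True)
class FileEvent:
    agent: str      # agent that reported the event (hypothetical field)
    user: str
    path: str
    operation: str  # e.g. "read", "write", "rename", "delete"


# Hypothetical decoys planted by the detection engine.
BAIT_FILES = {PurePosixPath("/shares/finance/~budget_2022.xlsx")}
TRAP_ROOTS = {PurePosixPath("/shares/.trap")}
MODIFYING_OPS = {"write", "rename", "delete"}


def touches_decoy(path: PurePosixPath) -> bool:
    """True if the path is a bait file or lies anywhere under a trap folder."""
    if path in BAIT_FILES:
        return True
    return any(path == root or root in path.parents for root in TRAP_ROOTS)


def evaluate(event: FileEvent) -> list[Action]:
    """Map one file event to the responses an action engine might take."""
    if not touches_decoy(PurePosixPath(event.path)):
        return []
    actions = [Action.ALERT]
    # Changing a decoy is a much stronger ransomware signal than reading it.
    if event.operation in MODIFYING_OPS:
        actions.append(Action.DISABLE_AGENT)
    return actions


if __name__ == "__main__":
    print(evaluate(FileEvent("agent-1", "bob", "/shares/.trap/a/b/c.txt", "write")))
```

Decoys work because legitimate users have no reason to open them, so even a single touch is a high-confidence signal with few false positives.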

The firm is evaluating roadmap improvements to enhance Peer MED with AI/ML-based detection. On the analytics side, the solution may be improved in the future with additional storage trending analysis for archiving, capacity planning, and other efficiency and optimization outcomes.

Another of the vendor's products, PeerFSA, provides insights into an organization's environment and creates reports on time-based metrics/usage, directories, users, and file types. These reports are static and delivered in Excel format.

There is no edge-access model currently available (as defined in GigaOm’s report Key Criteria for Evaluating Cloud-Based File Storage Solutions) because the solution relies on its hub/agent architecture to operate. Users have to connect to the corporate network to access standard file resources monitored by PeerGFS.

Strengths: Lets organizations implement a distributed cloud file storage solution on top of existing infrastructure and make the best use of existing investments. The Peer Malicious Event Detection Engine takes a sophisticated approach to mitigating malware attacks.

Challenges: The design decision to act as a distributed file service layer on top of existing hardware makes the solution dependent on underlying hardware and software capabilities. Some features, such as file system analytics, are currently limited, but improvements are on the firm's roadmap.

6. Analysts’ Take

Distributed cloud file storage has proven to be a pivotal capability, particularly to ensure business continuity during the prolonged disruptions caused by the Covid-19 pandemic. These disruptions not only had a negative impact on the workforce and its access to data, they also impeded organizations’ ability to provision and deploy collaboration infrastructure. Distributed cloud file storage solutions were able to alleviate those concerns rapidly, thanks to their natively distributed and cloud-based architectures.

Across all vendors, we identified no gaps or shortcomings regarding the key criteria of global namespace, hybrid and multicloud deployments, and integration with object storage. Additionally, most vendors' implementations of these criteria were assessed as either outstanding or good.

The areas where we found the most significant variance were data management, analytics, advanced security, and edge deployments. All of these areas are important, but we can't stress enough the urgency of advanced security measures as a mitigation strategy against the elevated and persistent threat of ransomware. Well-designed and varied edge deployment options are also critical at a time when organizations must accommodate a massively distributed workforce.

While data management and analytics may appear to be secondary, these are also key business intelligence enablers, not only because of the ability to reuse data for improved outcomes, but also due to more efficient resource utilization and spend control.

Although the evaluated solutions provided excellent outcomes across the key criteria, none delivered features around the emerging technologies highlighted in the Key Criteria Report for Evaluating File-Based Cloud Storage: data classification, data compliance, and data sovereignty. The solutions may provide coverage in one way or another for components needed to implement such capabilities, for example, manual "segmentation" of storage locations for geo-sovereignty or the ability to tag files with metadata. Overall, however, orchestration is nonexistent.
