Recap of the 46th IT Press Tour

With the participation of AuriStor, Data Dynamics, GRAID, HYCU, N-able, Panzura, Pavilion Data, Protocol Labs, ScaleFlux and Smart IOPS

By Philippe Nicolas | November 7, 2022 at 2:01 pm

This report was written by Philippe Nicolas, organizer of The IT Press Tour.

The recent 46th edition of The IT Press Tour, organized in California around IT infrastructure, data management and storage, was an opportunity to meet 10 companies, or rather 9, as Pavilion Data collapsed during the week of the tour. The other 9 are AuriStor, Data Dynamics, GRAID Technology, HYCU, N-able, Panzura, Protocol Labs, ScaleFlux and Smart IOPS.

AuriStor

The company, based in New York City, has operated fully remotely since Covid, perfectly illustrating what it provides to enterprises: the ability to work in a distributed, let's say dispersed, way on the same central file-based documents. AuriStor develops AuriStorFS, a distributed file system that maintains full compatibility with OpenAFS and IBM AFS. In other words, if users already have OpenAFS cells deployed, AuriStorFS can include them in its global environment. As the company has significantly extended file system capabilities, the reverse is not true: users must continue to use AuriStorFS for new volumes created with the new characteristics. Today AuriStorFS is available for many Linux distributions, macOS and Windows, but also for Unix systems like AIX, Solaris and various BSD flavors. Red Hat and SUSE are two strong partners of AuriStor.

The solution is a data access product that offers multi-site access to central data via intelligent data propagation and caching techniques, providing a coherent view of file data. Less known than the usual suspects, the technology behind the scenes is seen as a market pioneer, with data existing for more than 30 years in a DFS, now AuriStorFS, cell. Even though the remote access model differs, users compare it, for different use cases, with more recent approaches such as CTERA Networks, Nasuni or Panzura, the cloud file system leaders by installed base. Users access data through its local representation, with local file system semantics. The solution has demonstrated large-scale deployments with thousands of nodes in multi-writer/multi-reader mode. At the same time, the team has improved security, performance and file system features.
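The AFS family, with which AuriStorFS stays compatible, keeps cached copies coherent with callbacks: when a client fetches a file, the file server promises to notify it if the file changes, and breaks that promise when another client stores a new version. The sketch below is a toy, single-process model of that idea only, assuming in-memory dictionaries and hypothetical class names; it is not AuriStor's implementation and ignores networking (RX), locking and failure handling.

```python
class FileServer:
    """Toy file server handing out AFS-style callback promises (hypothetical)."""

    def __init__(self):
        self.data = {}       # path -> (version, content)
        self.callbacks = {}  # path -> set of cache managers holding a promise

    def fetch(self, client, path):
        # Serving a read registers a callback: "I will tell you if this changes".
        self.callbacks.setdefault(path, set()).add(client)
        return self.data[path]

    def store(self, writer, path, content):
        version = self.data.get(path, (0, None))[0] + 1
        self.data[path] = (version, content)
        # Break the callback of every other client caching this file.
        for client in self.callbacks.pop(path, set()) - {writer}:
            client.invalidate(path)
        self.callbacks[path] = {writer}
        return version


class CacheManager:
    """Toy client-side cache manager at one site (hypothetical)."""

    def __init__(self, site, server):
        self.site = site
        self.server = server
        self.cache = {}  # path -> (version, content)

    def read(self, path):
        if path not in self.cache:  # miss: fetch from the server and get a callback
            self.cache[path] = self.server.fetch(self, path)
        return self.cache[path][1]  # hit: served locally, no server round trip

    def write(self, path, content):
        version = self.server.store(self, path, content)
        self.cache[path] = (version, content)

    def invalidate(self, path):
        # Callback break: drop the stale copy so the next read refetches it.
        self.cache.pop(path, None)
```

In this toy model a read that hits the local cache never contacts the server; the cost is that the server must track who holds callbacks and notify them on every write.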

The team is preparing a new release with a volume feature policy framework that offers per-volume control instead of the per-server control available today, significant enhancements to the RX RPC, including ACK control changes that boost transfers by 450% on links of 10Gb/s or faster, and a few other improvements around volume dump streams, volume name length and a new cache manager. As Red Hat is a key partner, it's no surprise the team is working on a container approach and integration with OpenShift.

The company continues to sign key sites like the University of Maryland and the Swiss National Supercomputing Centre, in addition to the Department of Energy's SLAC and Fermilab, the US Geological Survey (USGS) and some financial institutions.

Data Dynamics

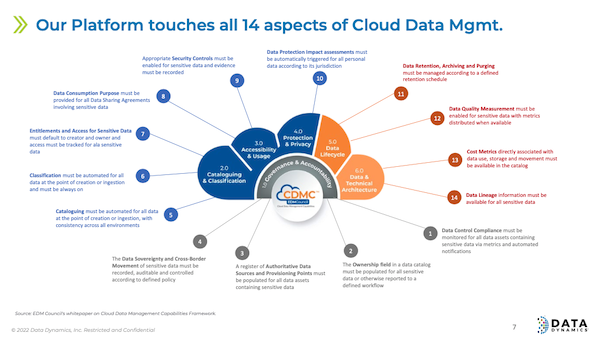

Recognized for 2 decades, StorageX belongs to the small group of key products in unstructured data management for enterprises. Data Dynamics, the company behind StorageX since 2012, has made progress, signing new customers and partners such as Dell and VAST Data in addition to Lenovo and NetApp. With 145 employees today, the team has signed 28 of the Fortune 100, reaching almost 500PB of data under management, with 80% of its installed base in the USA. The company positions its product as a unified unstructured data platform based on 4 pillars: analytics, mobility, security and compliance, which together we can call data governance.

Beyond StorageX, to deliver this platform approach, the company has extended its portfolio with Insight AnalytiX and ControlX. It all starts with understanding the data landscape: users don't really know what they store, as file data is so easy to generate. This file discovery phase is critical, as the next phases build on it. It provides full visibility, with deep information on access patterns and security settings among others. Following this classification and indexing phase, the discovered content can also feed compliance rules to increase redundancy and protection; this is done by the second module. Finally, automatic data movements can be triggered to optimize the environment: evacuate old, large or unaccessed data, or simply archive it to more cost-effective storage such as on-premises or cloud-based object storage. The ROI comes quickly with fine-grained file identification, duplicate removal and data migration to the right destination. It's even more visible for large enterprises with multiple sites and uncontrolled data proliferation.
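A first discovery pass of the kind described above can be approximated with a filesystem walk. The sketch below assumes hypothetical thresholds (files unused for a year are "stale", files of 1 GiB or more are "large") and finds duplicates by hashing only files of identical size; it illustrates the idea and is not how Insight AnalytiX or StorageX work internally.

```python
import hashlib
import os
import time
from collections import defaultdict
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in 1 MiB chunks so large files are never fully loaded in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def discover(root, stale_days=365, large_bytes=1 << 30, now=None):
    """Walk a tree and flag stale, large and duplicate files (hypothetical policy)."""
    now = time.time() if now is None else now
    report = {"stale": [], "large": [], "duplicates": []}
    by_size = defaultdict(list)
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = Path(dirpath) / name
            st = path.stat()
            # atime alone is unreliable on noatime/relatime mounts, so also consider mtime.
            last_use = max(st.st_atime, st.st_mtime)
            if now - last_use > stale_days * 86400:
                report["stale"].append(str(path))
            if st.st_size >= large_bytes:
                report["large"].append(str(path))
            by_size[st.st_size].append(path)
    # Only files sharing a size can be duplicates, so hash just those groups.
    for paths in by_size.values():
        if len(paths) < 2:
            continue
        by_hash = defaultdict(list)
        for p in paths:
            by_hash[sha256_of(p)].append(str(p))
        report["duplicates"] += [sorted(g) for g in by_hash.values() if len(g) > 1]
    return report
```

Grouping by size first avoids hashing most files, which matters on shares with millions of entries; the security settings mentioned above are not collected by this sketch.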

And getting data placement right really contributes to enterprises' sustainability objectives.

A solution of choice for users running NetApp and Windows-based environments, the platform has been extended to support Linux and other file servers/NAS systems.

Microsoft has launched a special Azure file migration program and selected Data Dynamics for it, implicitly confirming Data Dynamics' leadership position. Data can be migrated from on-premises to Azure Blob, Azure Files and Azure NetApp Files.

The next development phase will focus on ML for data classification and the addition of Hadoop-based environments, a good candidate with tons of files that are not always well identified and managed.

GRAID Technology

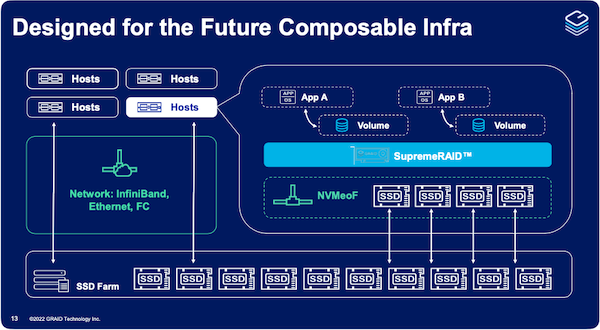

The company has brought to market a new product dedicated to the protection of production data. Named SupremeRAID, it is a GPU-based RAID card for PCIe Gen 3 or Gen 4 delivering high throughput and IO/s numbers.

The team recognized that RAID has become a bottleneck in many situations. Data volumes are exploding, and ensuring redundancy for production data is an obvious concern. We have seen dual parity for many years, and erasure coding also came to market to address these challenges. With NVMe-connected devices, RAID is now a real limiting factor. At the same time, the industry has developed several key technologies that, combined, can address these limitations: GPUs, NVMe and PCIe Gen 3 or 4. GRAID seized that opportunity and designs, develops and builds such a solution with its SupremeRAID card.

In terms of performance, the Gen 3 model, SR-1000, delivers 16 million IO/s and 110GB/s of throughput, and the Gen 4 model, SR-1010, 19 million IO/s with the same throughput. The team is of course preparing a Gen 5 model. Today each card supports up to 32 SSDs connected via NVMe and NVMe-oF, but also SAS and SATA. Pricing is based on the number of SSDs, with 4, 8, 16 or 32-drive configurations; MSRP is $3,995 for the SupremeRAID SR-1010 card with 32-SSD support. The offering is currently promoted as Enterprise Edition, and the team will introduce a Datacenter Edition in 2023 with Kubernetes and VMware support, followed later by SPDK support, erasure coding and of course Gen 5.

Even though the product is presented as a card, it relies on significant software development, with an agent installed on hosts. In write mode, the card handles parity computation and data placement, but in read mode, apart from location information, data is pulled directly from the SSDs without any transfer through the board itself.
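GRAID does not detail its parity layout here, so the sketch below assumes the simplest scheme, a single XOR parity strip per stripe as in RAID 5, to illustrate the asymmetry described above: parity is computed on the write path, while healthy reads return data directly and parity is only needed to rebuild a lost strip. Function names are hypothetical, and SupremeRAID offloads this kind of computation to a GPU rather than running it in Python.

```python
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equally sized strips."""
    if len(a) != len(b):
        raise ValueError("strips in a stripe must have the same size")
    return bytes(x ^ y for x, y in zip(a, b))


def write_stripe(data_strips):
    """Write path: single (RAID 5-style) parity is the XOR of all data strips."""
    return reduce(xor_bytes, data_strips)


def read_strip(strips, index, parity):
    """Read path: a healthy strip is returned as-is; parity only rebuilds a lost one."""
    if strips[index] is not None:
        return strips[index]
    survivors = [s for i, s in enumerate(strips) if i != index]
    if any(s is None for s in survivors):
        raise ValueError("single parity can rebuild only one missing strip")
    # XOR of the parity with every surviving strip yields the missing strip.
    return reduce(xor_bytes, survivors, parity)
```

For example, with strips `b"AAAA"`, `b"BBBB"` and `b"CCCC"`, losing the second strip and calling `read_strip([b"AAAA", None, b"CCCC"], 1, parity)` recovers `b"BBBB"`.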

The company, based in Santa Clara, CA, with an R&D center in Taiwan and a total of 45 employees, has raised $18 million so far and plans a new round very soon. As a true OEM product, it has been signed by several OEMs, the latest being Supermicro, and is validated by Kioxia, Seagate, Western Digital, Gigabyte, AIC… We'll see whether the company stays independent for long, as it represents a perfect prey for some vendors.

Listen to the CEO interview with Leander Yu for the French Storage Podcast.

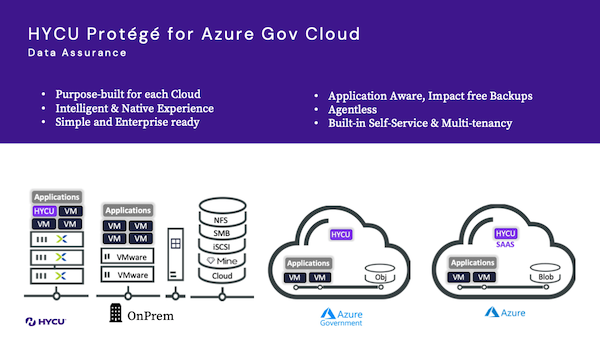

HYCU

An emerging leader in Backup-as-a-Service, HYCU has shown an impressive growth trajectory for a few years and today covers a real multi-cloud play. Starting as a Nutanix backup tool, then growing into the VMware ecosystem, the product has been significantly improved and extended to support on-premises and public clouds as well as some specific application workloads. The product's philosophy is to be agentless, avoiding any intrusive approach, and to provide more than backup, as the data mover engine can obviously be applied to migration and DR. This model helps HYCU become a leader in data protection as-a-service; we remember the launch of HYCU Protégé during the 31st edition of The IT Press Tour in June 2019. There has been a lot of progress since, illustrated by rapid market adoption with more than 3,600 customers fueled by 400+ partners in 75+ countries.

The recent $53 million series B VC round in June confirms the team's ambition, bringing the total to more than $140 million in just 2 rounds. In October, Okta joined the investor pool.

This last event is strategic for HYCU, as Okta and a few other IAM players connect users and applications to facilitate data exchange and usage. HYCU will attend the Oktane conference this week. With more than 15,000 SaaS vendors in the USA according to HYCU, a number that seems very high, this partnership makes sense to offer a universal data protection model. What is true is the number of SaaS applications used by the average company: several dozen. Again, the point is not to protect apps but to protect the data created and used by apps. The IT industry has created its own complexity, and protecting enterprise information is a real mission, especially in the current ransomware era. Imagine an easy, secure connection coupled with an agentless application discovery system and you get the idea.

The company has introduced a free, fully managed backup for AWS, running on EC2 and leveraging EBS snapshots, with VM, folder and file-level recovery granularity to protect all AWS workloads.

The other recent news is support for the Azure Government cloud and for WORM, dedupe appliances or tape storage via special software placed in front of them.

We heard that the company is cooking something up and will be ready to unveil it in a few weeks.

N-able

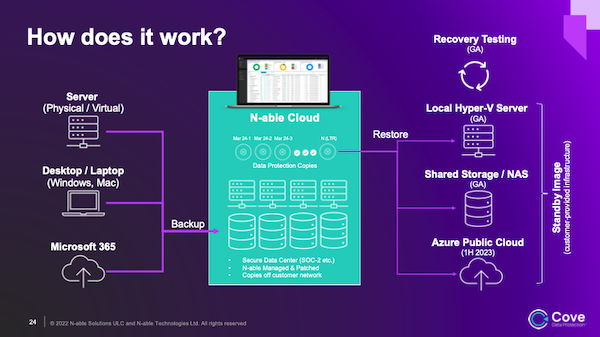

A reference in IT solutions for MSPs, N-able operates at a $360 million annual run rate, supporting around 25,000 MSPs worldwide. The entity was the MSP business unit of SolarWinds until mid-2021, when a spinoff established N-able as an independent public company. With more than 1,400 employees, the firm develops 3 solutions: remote monitoring and management, security, and data protection.

The latter is covered by Cove Data Protection (CDP), a pure multi-tenant software product that protects data on any server, physical or virtual, and on endpoints in a cloud-first model, and of course data in Microsoft 365. The company leverages 30 data centers worldwide and today manages 150PB and more than one million Microsoft 365 users.

CDP is well established on the market, having launched in 2008 under the IASO brand in the Netherlands. In detail, the local agent identifies and selects candidate files, then runs the deduplication process, named TrueDelta, at the source, encrypts the result and finally sends it to the remote secured data center. The data reduction engine works at the byte level and per system, so transfers are very small except for the very first backup. These signatures are not consolidated in a central database, or at least not compared across systems to deliver global reduction. Consider 100 Windows laptops: the first copy consumes network bandwidth and storage space and copies the OS 100 times. Subsequent transfers are reduced, but the first full copy for 99 of the machines could be avoided if the very first machine served as a reference. Those 99 copies occupy some space.
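The 100-laptop example can be made concrete with a toy model comparing a per-system index with a global one. The sketch assumes fixed 4 KiB chunks fingerprinted with SHA-256, a simplification of what the source describes as byte-level TrueDelta processing; function names, sizes and the synthetic fleet are hypothetical.

```python
import hashlib

CHUNK = 4096  # hypothetical fixed chunk size


def chunk_hashes(data: bytes):
    """Yield (fingerprint, size) for each fixed-size chunk of a machine image."""
    for i in range(0, len(data), CHUNK):
        chunk = data[i:i + CHUNK]
        yield hashlib.sha256(chunk).digest(), len(chunk)


def first_backup_bytes(machines, global_index: bool) -> int:
    """Bytes sent for the initial backup of every machine."""
    shared = set()
    sent = 0
    for image in machines:
        # Per-system dedupe starts each machine with an empty index;
        # global dedupe reuses fingerprints already seen on other machines.
        seen = shared if global_index else set()
        for digest, size in chunk_hashes(image):
            if digest not in seen:
                seen.add(digest)
                sent += size
    return sent


def toy_fleet(n_machines=100):
    """Hypothetical fleet: an identical 8-chunk 'OS' plus one unique chunk per machine."""
    os_image = b"".join(hashlib.sha256(b"os%d" % i).digest() * 128 for i in range(8))
    return [os_image + hashlib.sha256(b"user%d" % m).digest() * 128
            for m in range(n_machines)]
```

On this synthetic fleet, the per-system index sends 3,686,400 bytes for the initial backups, while a global index sends 442,368 bytes, because only the first machine uploads the shared OS chunks.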

To address RTO needs, CDP delivers a standby image capability, named Local Speed Vault, that keeps a bootable image locally, close to the protected machines. The team plans to support the Azure cloud for this standby image feature in H1 2023.

CDP can also be used for disaster recovery and for a certain form of archiving, in this case a long-term backup image.

Listen to the CEO interview with John Pagliuca for the French Storage Podcast.

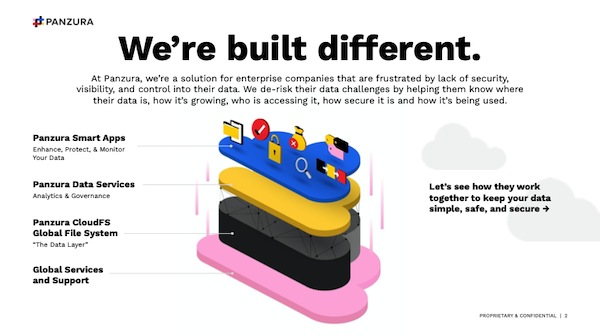

Panzura

Among the leaders in cloud file storage for several years, Panzura has evolved and now plays in the hybrid multi-cloud segment. Two and a half years ago the company was acquired, and it is now run by a new management team led by Jill Stelfox, CEO, and Dan Waldschmidt, CRO. Counting all of the company's lives, the current period is the 5th one. Since then, the company has grown very fast, with 485% AAR, an NPS of 87, 344 employees and more than 500 customers. It was listed in the Inc. 5000 at #1,343 in the America's fastest-growing private companies category and #76 in IT. Beyond all these changes, the firm has kept almost all customers signed in previous eras, illustrating the robustness of the solution and its alignment with market needs.

Positioned as a visibility, security and control solution for unstructured data, the firm's product relies, from bottom to top, on the fundamental CloudFS layer operating as the global file system service, a layer of data services, and finally Smart Apps. CloudFS is connected to on-premises or public cloud object storage to store data, as the firm is only a data access solution exposing central data close to consumers via NFS or SMB. CloudFS is the engine that propagates data between sites, i.e. Panzura instances. Data Services offers analytics and governance with visibility, audit and global search based on Elasticsearch. At the top, Smart Apps delivers edge access from various endpoints such as tablets, smartphones or web browsers, plus a ransomware protection feature. To deploy the product, users just need a connection to an object store and the Panzura VM, and the product can also run in the cloud.

The solution is sold via channel partners and is charged per capacity controlled by CloudFS and per Active Directory user for Panzura Data Services and Smart Apps. The number of nodes, migration and implementation are all included in the consumption price.

Listen to the CEO interview with Jill Stelfox for the French Storage Podcast.

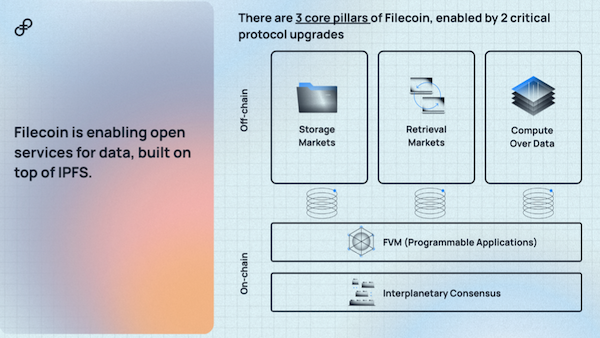

Protocol Labs

This session introduced new approaches claiming to build the next generation of the Internet with Web3. This is a debate in itself: is it Web3 or Web 3.0? The entity was founded in 2013 with the creation of IPFS and libp2p as fundamental pieces for decentralized storage. On top of these, the company built Filecoin, a crypto-powered storage network, with the mission to store all of the planet's information. The team promotes Filecoin as the Web3 storage layer, with the ambition of becoming the world's largest decentralized storage network. So far, around 4,500 storage providers have joined the project, representing more than 17EB, with North America the #1 growing region, followed by Hong Kong, Singapore and Korea. According to the vendor, it is the largest decentralized storage network by capacity and utilization. To boost the recruitment of contributors and then adopters, Filecoin has built a special program named ESPA, for Enterprise Storage Provider Accelerator, with established partners such as Seagate and AMD but also new dedicated ones like Piknik or ChainSafe. At the same time, for those who care about it, Filecoin is presented as green, giving some sort of assurance on sustainability. The other argument in favor of Filecoin is cost, at less than 0.1% of AWS S3.

In terms of perspective, the first phase is to support Dapps and enable new business models, and, over the next 3 years, to store Web2 data that is not stored today. An ecosystem of sorts has been launched, with applications such as Huddle01, a Zoom-like app, Audius, a streaming music platform, or Metaverse and game apps, to name a few. This approach already helps store very large datasets coming from research centers or public entities under the open data model. Brave, the browser, has added Filecoin support to its wallet. To complete the visibility and promotion effort, hackathons are organized, and so far 7,000 builders have participated with 2,800+ project submissions on Filecoin and IPFS. Almost 500 startups have joined the Filecoin, IPFS and Protocol Labs ecosystems. We understand that storage is only the start, as the next step is compute at large scale, addressing the data gravity challenge.

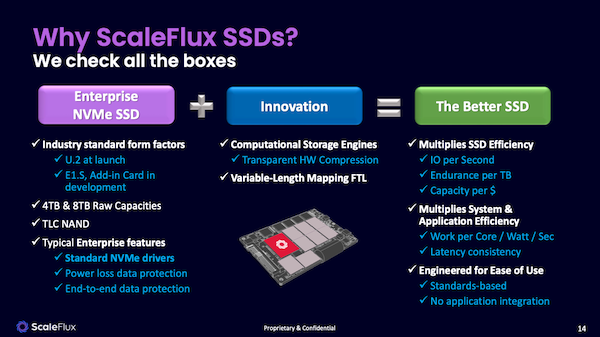

ScaleFlux

The firm is on a mission to deliver a “better” SSD with improved performance, endurance and capacity for enterprises and data centers. It is a pioneer in computational storage and promotes a data-centric model where compute runs where data resides, as data gravity is still a big challenge. The product embeds processing capability, built with an FPGA for the first generation and now with an ASIC for the CSD-3000 series. It first delivers encryption and compression, which translate into the company's main objectives, i.e. capacity through compression, reliability through optimized data placement and again data reduction, and performance.

In a nutshell, ScaleFlux couples enterprise NVMe SSDs with its storage engines. It is transparent for enterprises, as the device is recognized as a classic NVMe SSD, just a bigger, faster and more reliable one. This is a radical change, as many classic approaches only address one of the 3 challenges, which are mutually exclusive except with such a model.

The first spectacular application of the technology is visible with databases, classic ones like MySQL, PostgreSQL or MariaDB but also NoSQL ones like Aerospike and even RocksDB. Results are significant, with a 4:1 compression ratio for classic databases and even 5:1 for Aerospike. Adopting the CSD-3000 gives immediate results, as it is transparent for the engine, the applications on top and of course users. The second product, the NSD-3000, is almost the same but without compression, both being accelerated by the SFX 3000 storage processor SoC.
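The effect of a 4:1 ratio on capacity is simple arithmetic: the same flash holds four times more logical data. The sketch below estimates a ratio by compressing synthetic 16 KiB database-like pages one at a time with zlib, as a software stand-in for the drive's hardware engine, whose algorithm the source does not specify; the page format and figures are hypothetical, and real ratios depend entirely on the data.

```python
import zlib

PAGE_SIZE = 16 * 1024  # hypothetical page size (InnoDB's default is 16 KiB)


def sample_page(page_no: int) -> bytes:
    """Hypothetical row-oriented page: repetitive text rows padded with zeros."""
    rows = b"".join(
        b"%08d|customer|active|2022-11-07\n" % (page_no * 1000 + i)
        for i in range(PAGE_SIZE // 40)
    )
    return rows.ljust(PAGE_SIZE, b"\x00")


def compression_ratio(pages) -> float:
    """Logical bytes divided by stored bytes, compressing each page on its own."""
    logical = sum(len(p) for p in pages)
    stored = sum(len(zlib.compress(p, 1)) for p in pages)
    return logical / stored


def effective_capacity_tb(physical_tb: float, ratio: float) -> float:
    """Logical capacity exposed when data compresses by `ratio` on `physical_tb` of flash."""
    return physical_tb * ratio
```

Compressing each page independently reflects that a block device sees individual writes rather than whole files; at 4:1, `effective_capacity_tb(4.0, 4.0)` gives 16.0 TB of logical space.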

In terms of go-to-market, as a good OEM candidate, ScaleFlux partners with HPE, has a validation with Aerospike, and has added Scale Computing to the list for its edge HCI appliance.

The company plans to add PCIe Gen 5, obviously, along with new form factors and capacity options, and will also unveil some processor partnerships.

Listen to the CEO interview with Hao Zhong for the French Storage Podcast.

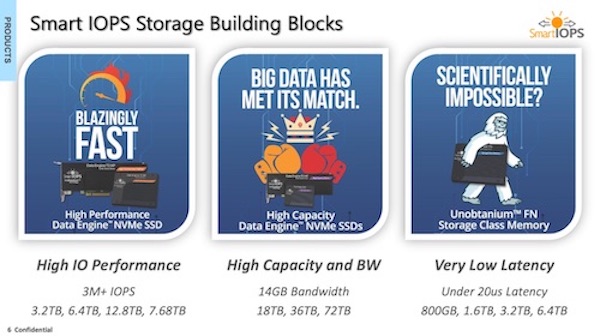

Smart IOPS

A rather discreet player until 2021 due to an intensive design and development phase, Smart IOPS started selling its products in 2021 and immediately landed a few big deals with China Telecom and The Trade Desk. The project was initiated in late 2013 and the company founded in early 2014; since then it has spent time developing its TruRandom technology, a full flash OS for its SSDs and PCIe cards. Among the investors is Pradeep Sindhu, founder of Juniper Networks and Fungible, one of the DPU and NVMe/TCP pioneers.

The team markets 3 products: high-performance NVMe SSDs or PCIe cards delivering 3 million+ IO/s with a capacity of 7.68TB; a high-capacity model with 36TB, and 72TB on demand, for 14GB/s of bandwidth; and a storage class memory product named Unobtanium, based on Kioxia XL-Flash, with a capacity of 6.4TB and latency below 20 microseconds. TruRandom makes it possible to reach the theoretical maximum IO/s for PCIe NVMe: for Gen 4 x8 this maximum is 3.48 million IO/s, and the card delivers 3.4 million, almost at that top number. The same goes for Gen 3 x8, with a theoretical maximum of 1.74 million IO/s, in line with the 1.7 million IO/s delivered for Gen 4 x4 or Gen 3 x8.

The company has also marketed a full NVMe/TCP 2U storage appliance named SwitchStor, still available on demand.

The company targets the high end of the market and has already published good benchmark numbers with Aerospike, Oracle, MySQL and Cassandra. Another good effect is energy efficiency, with a higher number of IO/s per watt in a 70% read/30% write mix.

A new product is in preparation, the Functional Storage Engine aka FSD, with processing capability on the card itself, approaching a computational storage model. This product will be a CXL Gen 5 x8 device.

The next phase sets ambitious goals in sales, partnerships and products.

Listen to the CEO interview with Ashutosh Das for the French Storage Podcast.
