Leaders of High-Performance Object Storage

DDN, Dell, IBM, MinIO, NetApp, Nutanix, Pure Storage, Red Hat, Scality, Vast Data, and Weka

This is a press release edited by StorageNewsletter.com on May 19, 2022 at 2:12 pm. The authors of this GigaOm report are Enrico Signoretti, Max Mortillaro, and Arjan Timmerman, and it was written on April 4, 2022.

GigaOm Radar for High-Performance Object Storage v3.0

1. Summary

For some time, users have asked for object storage solutions with better performance characteristics. Several factors lie behind these requests:

• Data consolidation: Combining and storing various types of data in a single place can help to minimize the number of storage systems, lower costs, and improve infrastructure efficiency.

• New workloads and applications: Thanks to the cloud and other technologies, developers have finally embraced object storage APIs, and both custom and commercial applications now support object storage. Moreover, there is a high demand for object storage for AI/ML and other advanced workflows in which rich metadata can play an important role.

• Better economics at scale: Object storage is typically much more cost-effective than file storage and easier to manage at the petabyte scale. Moreover, dollar/GB is just one aspect; the overall TCO of an object storage solution is generally better than that of file and block systems.

• Security: Some features of object stores, such as the object lock API, improve data safety and security by protecting data against errors and malicious attacks.

• Accessibility: Object stores are easier to access than file or block storage, making them the right target for IoT, AI, analytics, and any workflow that collects and shares large amounts of data or requires parallel and diversified data access.
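The following Python sketch is a simplified model of how an object lock protects data. It assumes the 2 retention modes defined by the Amazon S3 Object Lock API (GOVERNANCE and COMPLIANCE) plus a legal hold flag. The `LockedVersion` class and the `can_delete` function are hypothetical. Real object stores enforce these checks server-side, and vendor implementations may differ.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class LockedVersion:
    """Hypothetical record of the lock settings on one specific object version."""
    mode: Optional[str] = None              # "GOVERNANCE", "COMPLIANCE", or None
    retain_until: Optional[datetime] = None
    legal_hold: bool = False


def can_delete(version: LockedVersion, now: datetime,
               bypass_governance: bool = False) -> bool:
    """Return True if permanently deleting this version should be allowed."""
    # A legal hold blocks deletion regardless of any retention period.
    if version.legal_hold:
        return False
    # No retention configured, or the retention period has expired.
    if version.mode is None or version.retain_until is None or now >= version.retain_until:
        return True
    # Governance mode can be overridden by specially privileged users.
    if version.mode == "GOVERNANCE":
        return bypass_governance
    # Compliance mode cannot be overridden by anyone until it expires.
    return False


now = datetime(2022, 4, 4, tzinfo=timezone.utc)
locked = LockedVersion("COMPLIANCE", now + timedelta(days=30))
print(can_delete(locked, now, bypass_governance=True))  # → False
```

The distinction between the 2 modes is what makes the feature useful against both accidents and attacks. Governance mode stops ordinary mistakes but leaves an escape hatch for administrators. Compliance mode also stops a compromised administrator account.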

Many applications find object storage a natural repository for their data because of its scalability and ease of access. However, older object stores were not designed for flash memory, nor were they optimized to deal with very small files (512KB and less). Many vendors are redesigning the backend of their solution to respond to these new needs, but in the meantime, a new generation of fast object stores has become available for these workloads.

These new object stores usually offer only a subset of the features of traditional object stores, often with limited geo-replication or S3 API compatibility. However, they excel in other ways that are even more important for interactive and high-performance workloads, including strong consistency, small file optimization, file-object parity, and features aimed at simplified data ingestion and access with the lowest possible latency. Their design is based on the latest technology: flash memory, persistent memory, and high-speed networks are usually combined with the latest innovations in software optimization. Even though object stores will never match the performance of block or file storage, they are more secure and easier to manage at scale than block or file storage, offering a good balance among performance, scalability, and TCO.

Maintaining a consistent response time under multiple different workloads is also very important. On the one hand, there are the primary workloads for which these object stores are usually selected, but on the other, it is unusual to find fast object stores serving only a single workload over a long period. Users tend to consolidate additional data and workloads, and multitenancy quickly becomes another important requirement. These solutions typically offer good file storage capabilities, allowing data to be consolidated even further.

Presently, high-performance object stores do not overlap with traditional object stores except for a limited set of use cases. This distinction will change over time because both traditional and high-performance object stores will eventually add the features necessary for parity. The products with the most balanced architecture and the ability to optimize for the latest media will end up in the leading positions.

2. Market Categories and Deployment Types

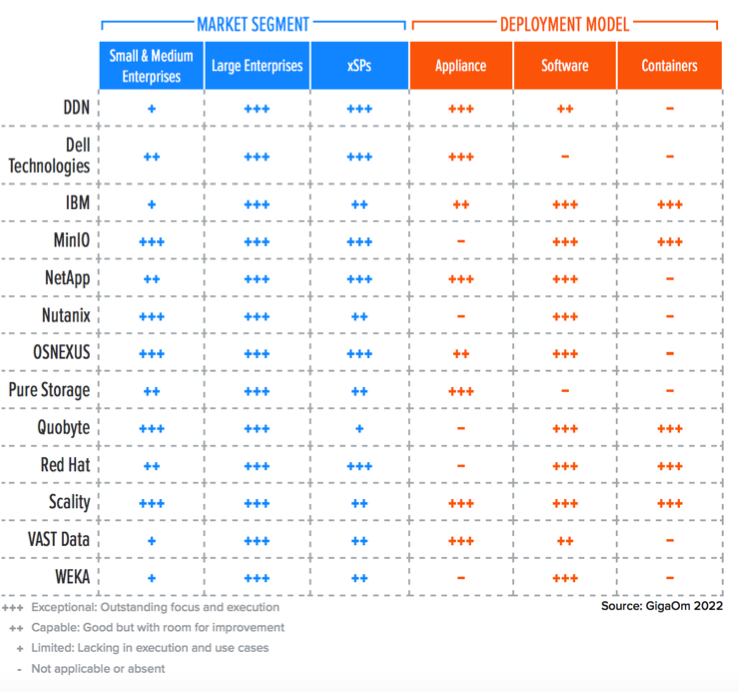

To better understand the market and vendor positioning (Table 1 below), we assess how well solutions for high-performance object storage are positioned to serve specific market segments.

• SMEs: In this category, we assess a solution’s ability to meet the needs of organizations ranging from small to medium-sized enterprises. Also assessed are departmental use cases in large enterprises, where ease of use and deployment are more important than extensive management functionality, data mobility, or feature set. However, in high-performance object storage, ease of management is considered important, while multitenancy is given less weight.

• Large enterprises: Here, offerings are assessed on their ability to support large and business-critical projects. Optimal solutions in this category will strongly focus on flexibility, performance, data services, and features to improve security and data protection. Scalability is another big differentiator, as is the ability to deploy the same service in different environments. Security and multitenancy are not to be underestimated either, since large systems are accessed concurrently by many users and applications.

• xSPs: Optimal solutions will be designed for multitenancy, security, OpEx-based purchasing models, attractive dollar/GB, and good overall flexibility. A strong API for automation and resource provisioning is mandatory in this case.

In addition, we recognize 3 deployment models for solutions in this report: appliance, software, and containers.

• Appliance: In this category, we include solutions that are sold as fully integrated hardware and software stacks, with a simplified deployment process and support.

• Software: This refers to software installed on top of third-party hardware and OS. This category includes solutions with hardware compatibility matrices and pre-certified stacks sold by resellers or directly by hardware vendors.

• Containers: This category has been added to reflect the latest industry trend in which object storage is installed on top of a Kubernetes cluster. This type of solution is usually favored by developers and other organizations that need extreme flexibility to address next-gen workloads and use cases.

Table 1: Vendor Positioning

3. Key Criteria Comparison

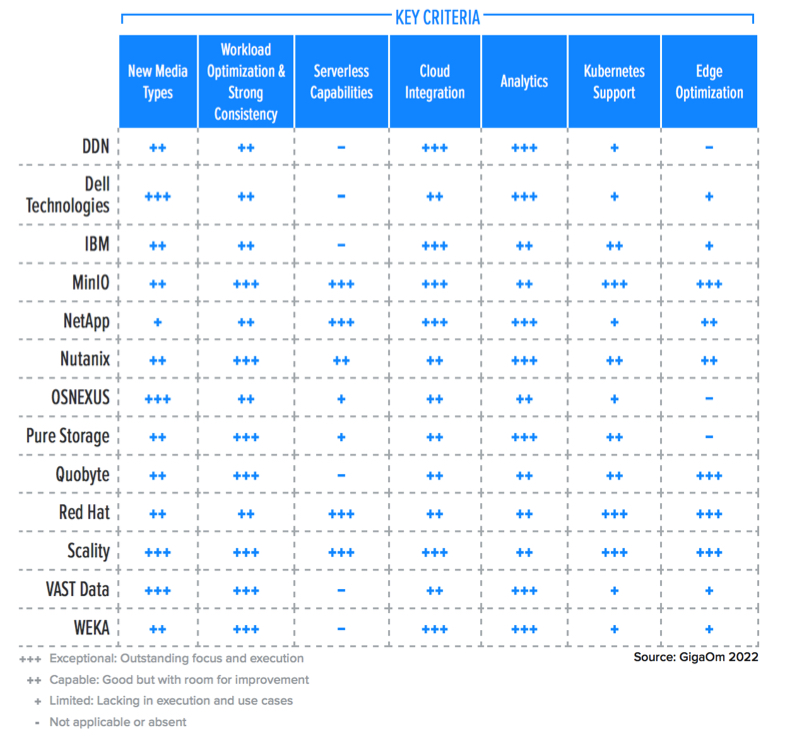

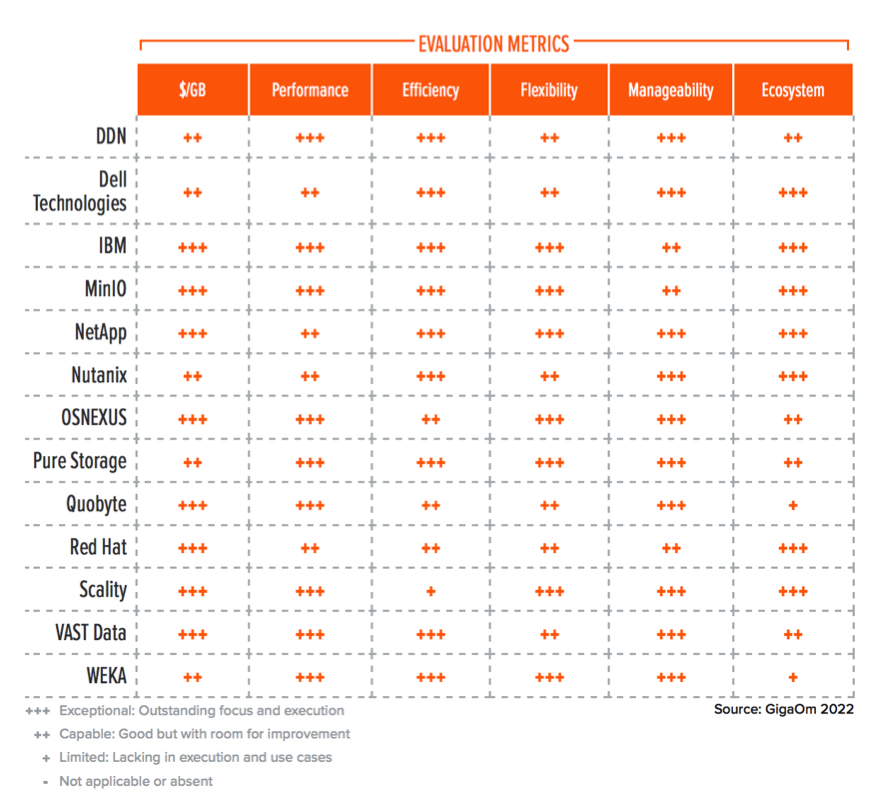

Building on the findings from the GigaOm report, Key Criteria for Evaluating Object Storage, Table 2 and Table 3 below summarize how each vendor included in this research performs in the areas that we consider differentiating and critical in this sector. The objective is to give the reader a snapshot of the technical capabilities of different solutions and define the perimeter of the market landscape.

For this specific Radar report about high-performance object storage, performance and other features that support demanding workloads are seen as more relevant for the user, and so are given greater weight. In the companion Radar report dedicated to enterprise object storage, we tend to give a more balanced view of the solutions.

Table 2: Key Criteria Comparison

Table 3: Evaluation Metrics Comparison

By combining the information provided in the tables above, the reader can understand the technical solutions available in the market.

Unlike the evaluation of traditional object stores (see the Radar Report for Enterprise Object Storage), the goal is to highlight features that make these platforms suitable for demanding workloads in enterprise environments, including big data analytics, AI, and HPC. For this reason, readers should consider the caveat that some products are less rich in functionality than traditional object stores.

At the same time, optimizations for high-performance workloads produced a series of features not always available in traditional scenarios. Readers should understand that we are comparing only products with specific characteristics and a direct comparison of traditional and high-performance object stores would be quite difficult. Furthermore, the products discussed in both of the GigaOm Radar reports have specific configurations or features to address demanding workloads.

4. GigaOm Radar

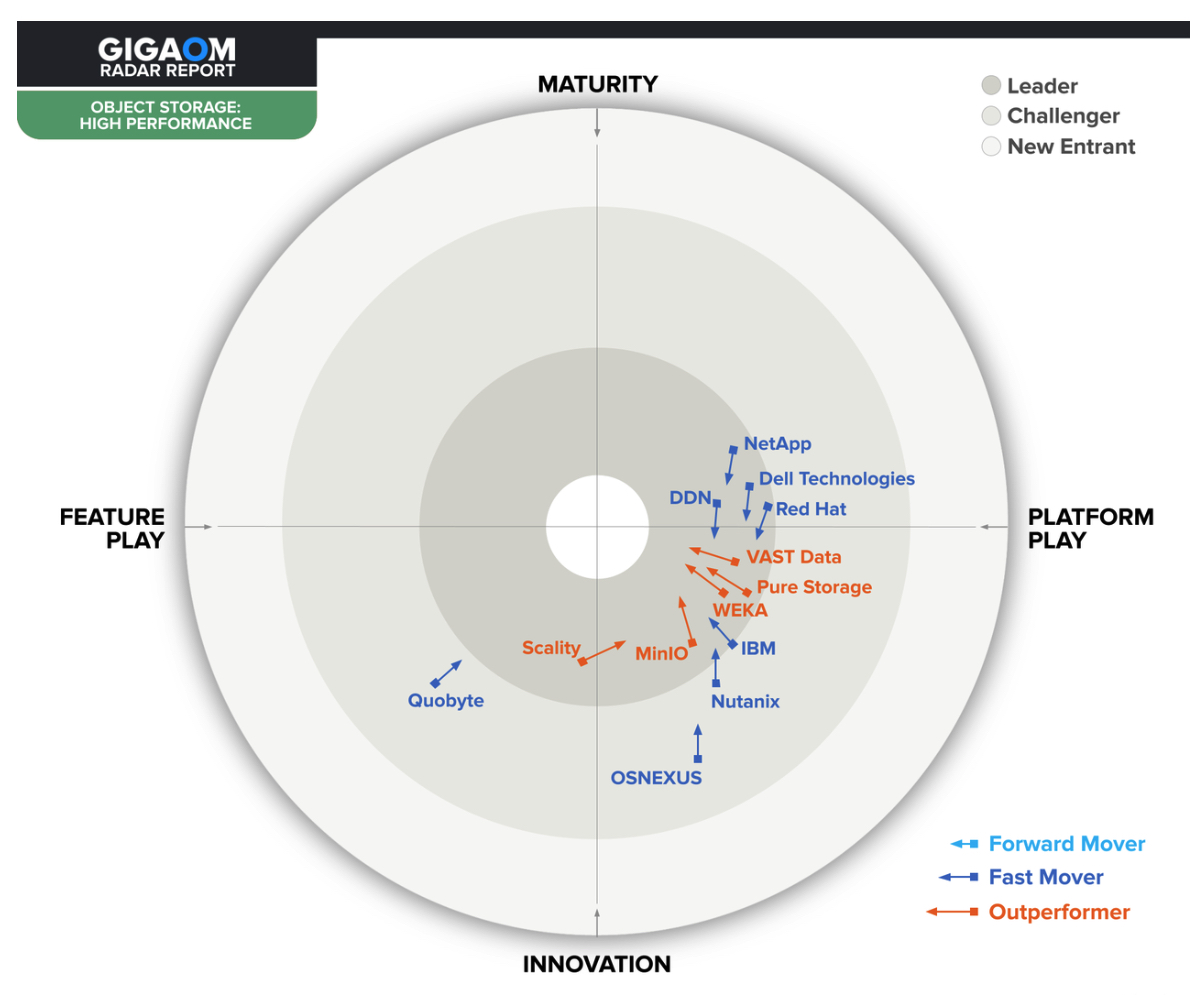

This report synthesizes the analysis of key criteria and their impact on evaluation metrics to inform the GigaOm Radar graphic in Figure 1. The resulting chart is a forward-looking perspective on all the vendors in this report, based on their products’ technical capabilities and feature sets.

The GigaOm Radar plots vendor solutions across a series of concentric rings, with those set closer to the center judged to be of higher overall value. The chart characterizes each vendor on 2 axes (Maturity vs. Innovation, and Feature Play vs. Platform Play). An arrow projects each solution’s evolution over the coming 12 to 18 months.

Figure 1: GigaOm Radar for High-Performance Object Storage

As Figure 1 illustrates, the high-performance object storage market unsurprisingly shows a concentration of vendors focused on innovation while also delivering platform-oriented data services stacks. This lucrative, performance-oriented market requires constant innovation, as reflected by the absence of any forward mover vendors in the radar.

The first group of leading solutions consists of Vast Data and Weka, side by side, closely followed by Pure Storage.

Vast Data proposes a solution built on the latest flash technologies (storage-class memory and QLC flash), delivering scalability, performance, and durability with QLC flash economics.

Weka takes a different approach by proposing a software-defined, high-performance file and object scale-out storage solution which is massively scalable and entirely optimized for a full-NVMe backend. The solution demonstrates consistent throughput for all workload types, is capable of running natively in the cloud, and offers tiering to cheaper storage.

Pure Storage offers FlashBlade, its UFFO (Unified Fast File and Object) platform. FlashBlade delivers equal performance with both small and large objects, as well as good scalability, advanced analytics, AIOps, Kubernetes support, and potential improvements for more cost-effective storage options.

In a similar position, MinIO also delivers great performance, frequent enhancements, and an impressive set of enterprise-grade features with best-in-class Kubernetes integration. Its comprehensive feature set and fast development pace drive staggering adoption and deployment rates among customers. MinIO will soon reach a critical point where it will need to scale its support to meet growing demand, particularly from paid customers.

It’s worth noting that Scality also offers a compelling value proposition with its new Artesca solution. Artesca supports NVMe, Intel Optane, and QLC optimizations, making it capable of outstanding performance at reasonable storage costs. Scality collaborates closely with HPE, offering optimized, integrated, and easy-to-deploy appliances. The solution is crossing from a feature play to a platform play thanks to a promising roadmap that will complete its feature set.

The second group of leading solutions covers vendors which are either already transitioning towards the innovation area or closing in. Some have new products with a progressively growing feature set or are improving existing platforms.

DDN offers an S3 gateway on ExaScaler, allowing object workloads to take advantage of its scale-out file system’s fast parallel architecture. The solution offers good multiprotocol support, enabling versatility in deployments and providing the ability to run natively in the cloud as well.

Red Hat has made major improvements to Red Hat Ceph Storage (RHCS) with serverless support, cloud integration, outstanding Kubernetes support (particularly with OpenShift), and a complete edge strategy. In addition, Red Hat is committing to further innovation with a compelling roadmap for RHCS, including NVMe-specific optimizations.

With its next-gen PowerScale appliances, Dell Technologies delivers object storage services through the OneFS scale-out file system, a flexible, performant, and scalable software-defined solution running on purpose-built appliances. PowerScale benefits from the Dell Technologies ecosystem (including CloudIQ and DataIQ). The solution has good potential for feature set expansion thanks to an ambitious roadmap.

NetApp provides object storage capabilities through its proven and feature-rich StorageGRID platform. The solution’s solid architecture allows it to deliver good performance. Additional flash optimizations, plus the eventual availability of NVMe drives, would let it deliver even more value.

The third group of solutions covers challengers, some of which are very close to the leaders’ circle or closing in on it.

Among these, IBM offers great value with Spectrum Scale, a proven high-performance parallel scale-out file system based on GPFS, with multiprotocol support (including object storage).

Nutanix also offers a flexible, performant, and scalable solution with Objects. Although Objects is less well known than more popular high-performance object storage solutions, it provides one of the most complete feature sets in this market segment in terms of data management and enterprise-grade features (including ransomware protection).

Close behind are Quobyte and OSNEXUS, 2 solutions capable of delivering very good performance but unfortunately lacking serverless support and Kubernetes support for object storage. Quobyte offers a flexible and highly performant multiprotocol scale-out file system that can be deployed through containers on Kubernetes. This makes the solution highly portable and simple to use, with support for multiple media types and a very good high-speed back-end architecture. OSNEXUS proposes a performance-oriented solution based on Ceph, with innovative and efficient use of NVMe-oF, ease of management in mind, and multiple improvements to Ceph such as multicluster management, strong multitenancy, and remote replication.

5. Vendor Insights

DDN

DDN’s EXAScaler adds a front-end S3 object storage implementation to its scale-out file system, providing concurrent access to data via multiple interfaces. Thanks to its fast parallel architecture, EXAScaler delivers scalability and performance. It supports low-latency workloads and high-bandwidth applications such as GPU-based workloads, AI frameworks, and Kubernetes-based applications.

EXAScaler systems can be deployed on all-NVMe flash nodes starting at 250TB for a 2RU appliance or on hybrid nodes (NVMe and HDD) that can provide up to 6.4PB capacity across 22RU. DDN is exploring QLC and other technologies but has no plans to leverage them in the immediate future.

EXAScaler can run natively on AWS, Azure, and Google Cloud Platform (GCP) via EXAScaler Cloud, a cloud-optimized implementation of EXAScaler available via each cloud platform’s marketplace. Cloud sync features allow EXAScaler to leverage public clouds for archive, data protection, and cloud bursting. EXAScaler can also store objects to any S3 backend via DataFlow, a data management platform that is tightly integrated with EXAScaler. Although it’s a separate product, a broad majority of DDN users rely on DataFlow for platform migration, archiving, data protection use cases, data movement across cloud, repatriation, and so on.

The solution comes with a comprehensive management and monitoring platform backed by AI-based analytics, providing real-time telemetry about the environment and historical data. It also includes advanced call-home features, including proactive and automated support actions.

DDN offers a native EXAScaler CSI driver to support Kubernetes environments. In addition, DDN has developed a special EXAScaler parallel client that can mount the filesystem within a container, enabling full parallel data paths for containerized applications. This functionality provides high-throughput, low-latency, and maximum concurrency data access while allowing secure deployments in multitenant environments.

Strengths: EXAScaler offers an interesting platform for high-performance workloads with converged file and object capabilities, allowing versatility in deployments and use cases. It is also integrated with the A3I converged system for AI, simplifying adoption of this type of infrastructure in enterprise environments.

Challenges: Its S3 gateway is still immature compared to other options in the market, as it lacks support for important APIs and features. However, DDN is working on adding features that will close the gap.

Dell Technologies

For high-performance workloads relying on object storage, Dell offers an all-flash version of the ECS platform, the EXF900 appliance. It also has a powerful solution in its portfolio with PowerScale, a scale-out solution that provides simultaneous multiprotocol access to the same data through file, object, or HDFS protocols and with an S3-compliant API. Although PowerScale is perfectly capable of handling objects, Dell doesn’t position it as object storage, but only as a scale-out file system that also provides object access.

The ECS EXF900 is based on the mature and proven ECS architecture. The appliance is highly optimized for all-flash and leverages NVMe-oF between nodes to accelerate internode communication. ECS offers a broad set of features, an easy-to-use GUI, and comprehensive API and CLI interfaces. Nevertheless, performance, especially with small files, is not the strongest aspect of ECS, and neither is flexibility.

On the other hand, PowerScale can cover a broad spectrum of use cases, including emerging and modern workloads such as AI/ML/DL, GPU-based processing, HPC, and analytics. It comes pre-installed on purpose-built appliances, available in all-flash (NVMe and SAS flash), hybrid, and archive-oriented models that cover a broad range of storage media. The solution can scale from 11TB to 92PB in a single namespace with fast node addition. It supports policy-based cloud tiering to AWS and ECS. A cloud offering is also available as an integrated native Google Cloud service operated by Dell Services.

Management-wise, PowerScale includes its own management interface and provides advanced data monitoring capabilities with CloudIQ (including AI-based analytics, automation, and AIOps), as well as advanced data management capabilities through DataIQ.

The PowerScale platform supports Kubernetes integration through Dell’s Container Storage Modules (CSM), a regularly updated open-source suite of modules developed for Dell EMC products. CSM covers storage support (through CSI drivers) and other capabilities such as authorization, resiliency, observability, snapshots, and replication. It also provides integration points with Prometheus and Grafana, as well as Ansible and Python; the OpenShift and Docker platforms are also supported. Other integration points include VMware Tanzu, which can take advantage of PowerScale volume snapshots through the PowerScale CSI driver.

Because PowerScale is appliance-based, it offers no particular optimizations for edge deployments; however, systems can start at 11TB, making it suitable for edge data center deployments.

Organizations seeking compact edge deployments within the Dell ecosystem may want to explore the upcoming ObjectScale solution, covered in the GigaOm Radar for Enterprise Object Storage solutions.

The Dell ecosystem is one of the most extensive on the market and includes integrations with other Dell products and third-party applications. This allows users to take full advantage of the offerings to consolidate more data and applications on a single system.

Strengths: PowerScale offers comprehensive capabilities coupled with performance optimizations, good scalability, and advanced monitoring/data management capabilities. ECS EXF900 offers an upgrade path to ECS customers looking for additional performance.

Challenges: Some capabilities are currently absent from both PowerScale and ECS, such as support for serverless workflows. The ECS architecture is not optimized for small files.

IBM

IBM offers high-performance object storage capabilities through Spectrum Scale, a software-defined solution that is very popular within the HPC and analytics community and capable of addressing AI use cases. Spectrum Scale combines a proven design with multiprotocol storage services (file and object, with SMB, NFS, POSIX, S3, and HDFS). Its architecture is flexible and scalable, delivers strong consistency, supports a broad range of node types (including all-NVMe nodes), and is well suited for mixed workload environments where file and object coexist. The solution offers a single 8YB (yottabyte) namespace and migration policies that enable transparent data movement across storage pools without impacting the user experience.

Besides on-premises deployments, Spectrum Scale can also run on AWS, where it is available on the AWS Marketplace for fast deployments. Furthermore, Spectrum Scale can run on Azure through a partnership between IBM and Syscomp. Regardless of the deployment model, the solution supports multiple replication topologies and policy-driven storage management capabilities. Thanks to a feature branded Transparent Cloud Tiering, organizations can tier data to the cloud with efficient data replication mechanisms.

Spectrum Scale comes with a simple and intuitive management interface, offering various monitoring capabilities that include data usage profiles and pattern tracking. In addition, organizations can connect Spectrum Scale with IBM Cloud Pak for Data to gain further insights.

The solution proposes a CSI driver and a cloud-native client with several advanced capabilities and provides an S3 integration to Red Hat OpenShift. Being software-based, Spectrum Scale can run at edge locations on compact deployments; however, no particular optimizations exist.

Strengths: Spectrum Scale provides a robust, efficient, and scalable solution built on GPFS and capable of supporting demanding workloads. It includes multiprotocol support, cloud integration options, and additional synergies within the IBM ecosystem, including IBM Cloud Object Storage.

Challenges: Spectrum Scale currently lacks serverless capabilities.

MinIO

MinIO offers a versatile object storage solution that targets a broad range of use cases. The company’s friendly open source strategy attracts a huge community of developers, technology partners, and users. They can take advantage of the product’s lightweight design to start testing with minimal infrastructure, or with a single container, and build from there. At the same time, enterprises can count on innovative and DevOps-friendly support services and the breadth of features in the large ecosystem that surrounds this solution.

The company supports mixed SSD and HDD deployments, allowing organizations to define server pools on the storage class that matches each workload’s performance needs. The only requirement is a consistent erasure coding SLA across pools. This allows for seamless tiering across media types and over long time horizons. Recently, the firm also showcased its ability to deliver high performance on all-NVMe flash nodes.

The MinIO object store is very efficient and can be configured to serve high-performance workloads, assuring strong consistency for the most demanding applications, including common data analytics and AI frameworks. MinIO also optimizes small objects by storing their metadata and data together. It also supports small objects packed in TAR and ZIP archives, with data extraction capabilities. These formats also improve small file storage (millions of IoT sensor logs in archives, for example) and reduce data upload times. Notably, the solution also supports S3 Select.
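Packing many small files into one archive turns thousands of tiny PUTs into a single upload. The sketch below uses only Python’s standard `zipfile` module to build such an archive in memory and read one member back. The sensor file names are hypothetical. The sketch does not show how a particular object store, MinIO included, exposes extraction of archive members server-side.

```python
import io
import zipfile


def pack_small_files(files: dict[str, bytes]) -> bytes:
    """Bundle many small payloads into a single in-memory ZIP archive."""
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, mode="w", compression=zipfile.ZIP_DEFLATED) as zf:
        for name, data in files.items():
            zf.writestr(name, data)
    return buf.getvalue()


def read_member(archive: bytes, name: str) -> bytes:
    """Extract one member from the archive without unpacking the others."""
    with zipfile.ZipFile(io.BytesIO(archive)) as zf:
        return zf.read(name)


# Hypothetical example: 1,000 tiny IoT sensor logs become one object to upload.
logs = {f"sensor-{i:04d}.log": f"temp={20 + i % 5}\n".encode() for i in range(1000)}
archive = pack_small_files(logs)
print(read_member(archive, "sensor-0007.log"))  # → b'temp=22\n'
```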

The solution supports serverless computing through bucket notifications, with a broad range of notification targets and support for bucket and object-level S3 events similar to the Amazon S3 event notifications. In addition, MinIO supports integrations with messaging services like AWS SNS/SQS, Apache Kafka, or RabbitMQ.
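Serverless triggers of this kind typically consume JSON records shaped like Amazon S3 event notifications. The Python sketch below assumes that record layout (`Records[].eventName`, `s3.bucket.name`, `s3.object.key`) and selects created-object events for a downstream function. The sample payload and the `created_objects` helper are illustrative and are not taken from MinIO’s documentation.

```python
import json
from urllib.parse import unquote_plus


def created_objects(event_json: str) -> list[tuple[str, str]]:
    """Return (bucket, key) pairs for every ObjectCreated record in an event."""
    event = json.loads(event_json)
    results = []
    for record in event.get("Records", []):
        # Depending on the server, eventName may read "s3:ObjectCreated:Put"
        # or "ObjectCreated:Put"; matching the substring covers both.
        if "ObjectCreated:" not in record.get("eventName", ""):
            continue
        bucket = record["s3"]["bucket"]["name"]
        # Keys are URL-encoded in S3-style notifications (spaces become '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        results.append((bucket, key))
    return results


sample = json.dumps({"Records": [
    {"eventName": "s3:ObjectCreated:Put",
     "s3": {"bucket": {"name": "ingest"}, "object": {"key": "raw/frame+001.jpg", "size": 2048}}},
    {"eventName": "s3:ObjectRemoved:Delete",
     "s3": {"bucket": {"name": "ingest"}, "object": {"key": "raw/old.jpg"}}},
]})
print(created_objects(sample))  # → [('ingest', 'raw/frame 001.jpg')]
```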

It is inherently cloud-native and, since its inception, adheres to the principles of containerization, orchestration, RESTful APIs, and microservices. It supports multiple cloud providers, Kubernetes distributions, and services (VMware Tanzu, Red Hat OpenShift, HPE Ezmeral, SUSE Rancher, as well as EKS, AKS, and GKE), and provides native tiering, first-class replication capabilities, and management services across clouds.

For monitoring, MinIO is well integrated with Prometheus and Grafana. The management GUI, SUBNET, is constantly being improved with a weekly release cadence, providing tremendous improvements over the past year.

The firm has a strong commitment to Kubernetes and next-gen workloads, including edge use cases. The solution is extremely lightweight: the MinIO binary is less than 100MB, half of which is the graphical console. The solution is small enough to fit on a sensor and powerful enough to run on data centers, providing complete coverage in terms of performance and footprint. Also worth mentioning, MinIO is optimized for Intel and ARM chips, with a very broad developer community involved in other ports.

Strengths: The company offers an impressive set of enterprise-grade features which are constantly evolving, with frequent enhancements. Its lightweight yet performant design offers flexible deployment options and broad workload support, including high-performance ones. MinIO also offers the most advanced integration with Kubernetes on the market.

Challenges: Some features, like multitenancy, are designed to be operated in a Kubernetes cluster. The new UI helps in this case, but this aspect could remain a challenge for some organizations.

NetApp

NetApp’s StorageGRID is a distributed scale-out object storage system that supports the S3 API and offers multiple deployment options, either on engineered appliances or through software-defined deployments.

StorageGRID appliances offer flexible performance, capacity, and scalability choices. StorageGRID features a dynamic policy engine that supports data management policies and ILM rules to meet regulatory compliance, privacy, security, and data management/lifecycle requirements. These rules allow objects to be handled with granular precision, including the ability to create storage pools with different erasure coding policies for efficient data placement.
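ILM rules combined with per-pool erasure coding can be illustrated with a small rule evaluator in Python. The rule fields, pool names, and EC schemes (4+2 and 8+2) are hypothetical and do not reproduce StorageGRID’s actual policy syntax. The first-match ordering is an assumption of this sketch. The overhead formula is the standard (k + m) / k ratio for k data fragments and m parity fragments.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IlmRule:
    """Hypothetical ILM rule: match on bucket and key prefix, place with an EC scheme."""
    name: str
    bucket: str          # "*" matches any bucket
    prefix: str
    pool: str
    data_frags: int      # k data fragments
    parity_frags: int    # m parity fragments

    def matches(self, bucket: str, key: str) -> bool:
        return self.bucket in ("*", bucket) and key.startswith(self.prefix)

    def raw_bytes_needed(self, logical_bytes: int) -> float:
        # A k+m erasure coding scheme stores (k + m) / k raw bytes per logical byte.
        return logical_bytes * (self.data_frags + self.parity_frags) / self.data_frags


def place(rules: list[IlmRule], bucket: str, key: str) -> IlmRule:
    """Evaluate rules in order; the first matching rule decides placement."""
    for rule in rules:
        if rule.matches(bucket, key):
            return rule
    raise LookupError(f"no ILM rule matches {bucket}/{key}")


rules = [
    IlmRule("hot-analytics", "analytics", "hot/", "flash-pool", 4, 2),
    IlmRule("default", "*", "", "capacity-pool", 8, 2),
]
rule = place(rules, "logs", "2022/app.log")
print(rule.pool, rule.raw_bytes_needed(1_000_000))  # → capacity-pool 1250000.0
```

Putting a catch-all rule last guarantees that every object gets a placement. Wider schemes such as 8+2 trade lower capacity overhead for more fragments per object.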

The S3 Object Lock API (for object immutability) and the new S3 Select API are supported. S3 Select enables customers to execute SQL-like queries on objects and directly return the results without having to retrieve the entire object or data set. Queries can also include computational operators. Currently, only CSV objects are supported, but support for additional formats is planned.
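The following Python sketch shows the shape of an S3 Select request. It assumes the `select_object_content` call of the AWS SDK for Python (boto3), which can target any S3-compatible endpoint that implements the API. The bucket, key, and column names are hypothetical. The client is passed in so that the request builder can be inspected without network access.

```python
def build_select_request(bucket: str, key: str, sql: str) -> dict:
    """Keyword arguments for a boto3 S3 client's select_object_content()."""
    return {
        "Bucket": bucket,
        "Key": key,
        "ExpressionType": "SQL",
        "Expression": sql,
        # The CSV's first line is a header, so columns can be referenced by name.
        "InputSerialization": {"CSV": {"FileHeaderInfo": "USE"}},
        "OutputSerialization": {"CSV": {}},
    }


def run_select(s3_client, bucket: str, key: str, sql: str) -> str:
    """Run the query server-side and join the streamed result records."""
    response = s3_client.select_object_content(**build_select_request(bucket, key, sql))
    chunks = []
    # The payload is an event stream; only "Records" events carry result bytes.
    for event in response["Payload"]:
        if "Records" in event:
            chunks.append(event["Records"]["Payload"].decode("utf-8"))
    return "".join(chunks)


# Hypothetical query: only matching rows leave the storage system.
SQL = "SELECT s.sensor_id, s.temp FROM S3Object s WHERE CAST(s.temp AS FLOAT) > 30"
```

With a real client, for example `boto3.client("s3", endpoint_url=...)`, a call such as `run_select(client, "telemetry", "2022/04/readings.csv", SQL)` returns only the filtered rows rather than the whole object.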

Additional capabilities include an integrated load balancer and QoS for objects.

The solution supports external serverless capabilities with AWS SNS, which can be used to trigger events and invoke functions in the public cloud. SNS and Lambda as well as SNS and Kafka are supported, and some customers use StorageGRID and SNS with Apache Pulsar.

StorageGRID supports hybrid cloud workflows, and Cloud Storage Pools enable targets at all major hyperscalers (AWS, Azure, or GCP) to be used as resources for policy-based data placement rules. In addition, Cloud Mirror enables mirroring of StorageGRID buckets to cloud-based S3 targets.

The UI is well designed, with monitoring and analytics providing a consistent experience across the board. Integration with external tools like Prometheus confers additional flexibility upon these features.

Kubernetes support exists as a public preview but is not yet generally available due to low customer demand. Each StorageGRID release is regularly tested on top of Kubernetes. NetApp has also performed a successful PoC implementation of the COSI standard (currently pre-alpha) with StorageGRID.

For small edge deployments, ONTAP with S3 combines a small-footprint, high-performance, lightweight object store with the ability to replicate data to a centralized StorageGRID deployment at core data centers. Other StorageGRID deployment options (appliance or software-defined) can also be used for edge data centers.

StorageGRID’s multitenant capabilities make this product appealing to large enterprise users, as well as to regional and local service providers. StorageGRID can be configured to support strong consistency for interactive workloads.

Strengths: Balanced and solid product that delivers its best when deployed with its integrated load balancer for added multitenancy and QoS features. Easy to use, with granular ILM policies, outstanding implementation of the S3 Select API, and support for serverless workloads.

Challenges: Stronger Kubernetes integration is not imminent. Even though performance is aligned with other object storage vendors, the product needs additional optimizations for all-flash configurations.

Nutanix

Nutanix provides object storage capabilities through Objects, which runs on top of Nutanix Cloud Infrastructure (NCI). The solution can be deployed in 3 ways: as a standalone instance, as distributed scale-out storage for larger scale and better cost efficiency, or alongside Nutanix HCI deployments. Objects scales linearly in both capacity and performance. It supports up to 48 physical nodes per cluster, delivering up to tens of petabytes of capacity. Because it is based on NCI, Objects supports all Nutanix-compatible servers and therefore media types ranging from NVMe flash to HDDs. The company also supports storage-class memory devices, and the Nutanix Objects solution inherits all these efficiencies.

The solution is optimized for high-performance active object access and delivers consistent performance for small and large objects, for GETs and PUTs, all while ensuring strict consistency for all written data. It uses the underlying AOS distributed storage layer, resulting in high sequential throughput for larger objects, but also block-like performance for small objects. Nutanix Objects also supports R/W access to objects using the NFS protocol to offer compatibility with older applications.

Serverless capabilities are delivered through a built-in notification system utilizing NATS streaming, but Kafka is also supported. The solution supports object tiering to any S3-compatible endpoint, including AWS S3, Azure Blob, and GCP. The process is transparent to end users and applications. Objects minimizes the number of PUTs when tiering lots of small files by coalescing small objects into larger chunks and utilizing range reads for GETs.
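Coalescing small objects into larger chunks, then serving GETs with range reads, can be sketched in Python as follows. This is a generic illustration that assumes a simple offset/length index. It is not Nutanix’s actual tiering format. The `Range` header string follows HTTP semantics, where the end offset is inclusive.

```python
def coalesce(objects: dict[str, bytes]) -> tuple[bytes, dict[str, tuple[int, int]]]:
    """Concatenate small objects into one chunk and record (offset, length) for each."""
    index: dict[str, tuple[int, int]] = {}
    parts = []
    offset = 0
    for key, data in objects.items():
        index[key] = (offset, len(data))
        parts.append(data)
        offset += len(data)
    return b"".join(parts), index


def range_header(index: dict[str, tuple[int, int]], key: str) -> str:
    """HTTP Range header value that fetches one small object out of the chunk."""
    offset, length = index[key]
    if length == 0:
        raise ValueError("empty objects need no range read")
    return f"bytes={offset}-{offset + length - 1}"  # end byte is inclusive


def read_range(chunk: bytes, index: dict[str, tuple[int, int]], key: str) -> bytes:
    """Local stand-in for a ranged GET against the tiered chunk."""
    offset, length = index[key]
    return chunk[offset:offset + length]


# Three tiny objects become one PUT to the capacity tier instead of three.
chunk, index = coalesce({"a.json": b'{"v":1}', "b.json": b'{"v":22}', "c.txt": b"hello"})
print(range_header(index, "b.json"))  # → bytes=7-14
```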

It is managed through Prism Central, Nutanix’s centralized management console built with simplicity and efficiency in mind. The firm also offers support for NATS that allows customers to build their own dashboard with the ELK stack.

The solution supports Kubernetes, Nutanix Karbon (Nutanix’s own Kubernetes solution), and Red Hat OpenShift. Objects is built on top of Kubernetes. The design of Nutanix’s NCI platform allows organizations to run these different container platforms side by side, with Objects providing storage services to each of them.

Edge deployments are supported with flexible and compact installations of 1 or 2 nodes, starting as small as a single physical server with 2TB capacity. Mixed deployments are also supported, with Objects coexisting alongside application workloads.

Finally, Nutanix Data Lens is an enterprise-grade SaaS-based data governance platform. It delivers actionable insights into access patterns, data age, types, and other contextual information. These insights enable efficient data lifecycle management, improve protection against ransomware attacks and insider threats, and help achieve compliance.

Strengths: The firm proposes a flexible and scalable solution that offers very good performance and support for big data, analytics, and ML workloads, including S3 Select. Objects offers a good balance across all key criteria and benefits from Nutanix Data Lens with outstanding data management and ransomware protection features. The company also has a compelling roadmap to deliver upon.

Challenges: Some minor improvements on serverless support are on the roadmap.

OSNEXUS

Its QuantaStor is an SDS solution based on the open-source Ceph project. Its biggest differentiator stems from its system management tools, which hide Ceph’s complexity while offering advanced control over the supported hardware. The solution offers unified block, file, and object storage capabilities on top of its storage grid technology, a globally distributed file system that can be managed as a single entity from anywhere.

The solution can be deployed on-premises or in the cloud through a virtual storage appliance. It supports all major media types, such as NVMe/SAS flash and HDDs; Intel Optane storage-class memory and QLC 3D NAND are also supported. Hybrid configurations keep costs down because OSNEXUS generally requires only around 3% of the raw capacity to be made of NVMe drives to boost write performance and metadata operations. The solution also supports the NVMe-oF protocol and integrates with Western Digital OpenFlex systems.
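
The ~3% guideline translates into simple sizing arithmetic. A minimal sketch, assuming the ratio applies to total raw capacity; the function name is hypothetical and the NVMe drive size is whatever the buyer plans to use (7.68TB below is purely illustrative):

```python
from __future__ import annotations

import math


def nvme_sizing(raw_capacity_tb: float, drive_tb: float,
                nvme_ratio: float = 0.03) -> tuple[float, int]:
    """Return (NVMe capacity in TB, NVMe drive count) for a hybrid cluster.

    nvme_ratio defaults to the ~3% rule of thumb quoted for QuantaStor;
    drive_tb is an assumed NVMe drive size, not a vendor requirement.
    """
    if raw_capacity_tb <= 0 or drive_tb <= 0 or not 0 < nvme_ratio < 1:
        raise ValueError("capacities must be positive and 0 < nvme_ratio < 1")
    nvme_tb = raw_capacity_tb * nvme_ratio
    # Round up: a partial drive still means buying a whole drive.
    return nvme_tb, math.ceil(nvme_tb / drive_tb)
```

For 1PB (1,000TB) of raw capacity, the rule gives about 30TB of NVMe, or four 7.68TB drives (30 / 7.68 is roughly 3.9, rounded up).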

QuantaStor benefits from the large Ceph ecosystem for integration with Elasticsearch and other tools for searching and event notifications, but the company itself doesn’t offer an integrated solution in this regard.

QuantaStor provides object storage and cloud integration through a NAS gateway that provides access to cloud-based S3 buckets through the SMB and NFS protocols. A feature called Backup Policies allows data replication and movement to cloud-based object storage. When files are moved, stubs are left behind, allowing access to the cloud-based object.

To manage QuantaStor deployments, organizations can take advantage of a globally distributed, easy-to-use management platform with good analytics, available on all nodes and accessible from anywhere. Although the management UI remains the preferred access method among OSNEXUS’ customer base, users can also leverage REST APIs, a CLI, and a Python client interface to automate operations. In addition, QuantaStor can integrate with Grafana.

The Ceph community is very active in containerizing the entire solution, and QuantaStor is expected to benefit from this community effort. At the moment, a CSI plug-in for Kubernetes is available. As with other Kubernetes-related topics, COSI support will be tied to Ceph’s implementation of the upcoming standard and may become available in the future.

The solution doesn’t include any particular optimizations for edge deployments, although OSNEXUS is monitoring the market. It’s worth noting that OSNEXUS made several additions to Ceph, including multicluster management, strong multitenant capabilities, and remote replication. Security has been improved, and the product is certified to comply with several demanding regulations.

Strengths: Extremely easy to deploy and manage. Innovative and efficient use of NVMe-oF in the backend while still supporting traditional hardware configurations and mixed vendor deployments.

Challenges: Some areas of interest for next-gen applications, including Kubernetes, serverless notifications, and advanced indexing and searching are available only via third-party solutions.

Pure Storage

It pioneered FlashBlade, a fast all-flash file and object storage solution, in addition to its all-flash storage line. FlashBlade is a mature and solid product; multiprotocol support, end-to-end control of the technology stack, a rich feature set, and an easy-to-use interface are just a few of its features. With the Pure portfolio growing in both hardware and software, the company delivers a compelling set of products for companies of all sizes.

The solution is built for massive scalability and uses proprietary flash modules (DirectFlash) whose Flash Translation Layer is managed by the OS, Purity/FB. Because Pure works with raw NAND and does not rely on SSDs from other manufacturers, it has better control over performance, availability, and resiliency, as well as a faster time to market as new media is introduced. This also allows the company to control data placement and implement multiple data efficiency techniques optimally. The solution provides strong consistency, handles both small and large objects, and excels with AI/ML/DL and analytics use cases.

With QLC and Intel Optane now being implemented in FlashArray, FlashBlade will probably follow soon, which would allow Pure Storage to offer a better dollar/GB for capacity-oriented object storage workloads. FlashBlade object storage is built for high-performance applications, including analytics.

The FlashBlade object store is built on top of a transactional key-value store. The software automatically distributes metadata and data across the blades, and, combined with a variable block size, this provides high throughput for both small and large objects.

FlashBlade has a native GUI and exposes capacity, performance, and replication metrics for the object store through a REST API, including per-bucket figures. Pure1 lets customers monitor their entire fleet of FlashBlade and FlashArray systems, providing end-to-end enterprise-grade monitoring enhanced by AI/ML, with AIOps/self-driving characteristics. The solution also offers excellent data management capabilities and ransomware protection through the SafeMode feature.

With Portworx, Pure provides the ability to provision and manage local volumes and shared objects residing on FlashBlade, along with the associated data services. Applications deployed in Kubernetes environments can use standard S3 APIs or FlashBlade’s rich set of management REST APIs.

FlashBlade will support COSI as soon as the standard is validated and certified. FlashBlade scales from about 65TB usable to multiple petabytes, making it suitable for everything from edge data centers to large data center deployments.

Strengths: FlashBlade is extremely easy to deploy and use, with a good balance among features, performance, and scalability. Entry-level configurations are quite small and accessible, making the product appealing to small and midsize companies. Users report very good support experiences.

Challenges: Lack of a tiering mechanism for cheaper storage is a limitation for customers who need additional capacity at a lower cost. No concurrent access to data via both object and file interfaces.

Quobyte

It offers a flexible and highly configurable parallel file system that supports multiple protocols. Its clusters support heterogeneous server configurations that can vary in specs, gen, capacity, and models.

Quobyte pools local media on servers into a single namespace and offers transparent migration and tiering on top of NVMe, SSDs, and HDDs. Sold as software with a capacity-based subscription license, the solution can be deployed on bare-metal servers and VMs, and clusters can be federated through data replication mechanisms to build a geo-distributed infrastructure.

It offers strong consistency inside a cluster with good small-file support; clusters can be stretched geographically for remarkable data availability and automatic fast failover for any application. The high-performance, low-latency file system layer underneath the product provides low-latency object storage (on flash) as well as high parallel throughput, and it handles small-file workloads well. The solution also includes data movers to migrate or synchronize data, supporting bidirectional synchronous replication across locations and clouds. Data can be accessed simultaneously from the S3 and file interfaces, expanding the number of use cases while adding flexibility for data ingestion and access.

Quobyte runs on all major cloud providers, and tiering to external object stores is planned for 2Q22 and will include Google and Azure. Serverless integrations are currently not supported and not on the roadmap either.

Although the solution follows an API-first approach, it can also be managed through an extensive web-based user interface, through command-line tools, and can integrate with Prometheus. Quobyte also provides real-time performance analytics.

Quobyte supports Kubernetes through a CSI plug-in that provides volumes with quotas, snapshots, an access key, and multitenancy support. Persistent volumes can be accessed concurrently via S3, and the S3 access/secret keys can also be used to authenticate to file systems. Currently, COSI is not supported.

The Quobyte solution can be deployed through containers on Kubernetes, and the company provides a Helm chart to simplify installation and updates of Quobyte in containerized environments, making it particularly handy for low-touch edge deployments.

The solution incorporates building blocks to enable ransomware protection with object immutability but lacks detection and remediation features. Roadmap items include federation support with policy-based data movement capabilities.

Strengths: The firm offers a scalable and flexible shared namespace multiprotocol architecture that combines multiple media types and is easy to manage. Very good back-end architecture that allows the file system to reach up to 100Gb/s per cluster node.

Challenges: Tiering to cloud is not yet available; serverless support is absent.

Red Hat

Red Hat Ceph Storage (RHCS) is an open-source object store with integrated file and block interfaces. The company is showing impressive progress with its core product and its ecosystem, which is also the foundation for Red Hat Container Storage. In fact, Ceph is well integrated with OpenShift and is a key component of the company’s strategy around Kubernetes.

Ceph supports all-flash, hybrid, and HDD-only nodes, as well as the ability to mix node types within a cluster. If a cluster consists of all-flash and mixed media nodes, Ceph will support tiering and automatically move cold data from flash to non-flash media through data management policies. Red Hat is working on further optimizations for NVMe media.

RHCS is strongly consistent and includes specific optimizations for building large-scale data lakes for AI/ML, with massive scalability and support for billions of objects. On the performance side, the company is working on optimizing the data path, with upcoming enhancements that will reduce CPU usage for I/O to flash and NVMe, while also improving performance alongside scalability. All-flash configurations are now supported, and new reference architectures for running high-performance workloads are available as well.

The solution offers a growing number of serverless capabilities, with support for SNS, SQS, and Lambda for both notification and function-as-a-service. In addition, Ceph integrates with OpenShift Serverless to create fully automated data ingest pipelines. Ceph also supports the S3 Select API (for CSV files) and Hadoop workloads through an S3A driver.

It offers compelling cloud integration with fully automated data federation, data distribution, and replication across on-premises deployments; AWS and Azure are supported with multicloud gateway integration. In the future, it will also support fully automated tiering and archiving to AWS based on bucket policies, as well as tiering and archiving to Azure and GCP.

The product offers native Kubernetes integration with Red Hat OpenShift, providing outstanding outcomes for organizations that leverage both solutions. In fact, OpenShift Data Foundation is based on Red Hat Ceph Storage. Red Hat has a compelling roadmap for Kubernetes support capabilities of RHCS, all related to OpenShift. These include regional DR, the ability to deploy single node edge deployments (for telco use cases, for example), and NVMe-specific optimizations.

Red Hat has also developed a complete edge strategy spanning device edge, network and end-user edge, and enterprise and provider edge. This includes moving objects from a device edge to an enterprise edge or even a core data center using Ceph bucket notifications, which spawn events consumed by other tools such as Kafka, Trino, and Knative. In addition, the Red Hat S3 multicloud object gateway can act as an edge gateway for S3 and replicate objects to a core Ceph object store.
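
Bucket notifications are the mechanism behind this edge-to-core flow: each new object produces an event that a downstream consumer can act on. Ceph documents its notification records as following the Amazon S3 event structure (a `Records` list with `eventName` plus `s3.bucket` and `s3.object` fields). The minimal sketch below parses such a payload and keeps only object-creation events; the function name is hypothetical, and message delivery (Kafka, HTTP, AMQP) and the replication step itself are left out.

```python
from __future__ import annotations

import json


def created_objects(message: str) -> list[tuple[str, str, int]]:
    """Return (bucket, key, size) for each object-creation record in an
    S3-style event notification payload; other events are ignored."""
    payload = json.loads(message)
    created = []
    for record in payload.get("Records", []):
        event_name = record.get("eventName", "")
        # Tolerate an optional "s3:" prefix on event names.
        if event_name.startswith("s3:"):
            event_name = event_name[len("s3:"):]
        if not event_name.startswith("ObjectCreated:"):
            continue
        s3_info = record["s3"]
        # Keys may arrive URL-encoded depending on the implementation;
        # they are returned here exactly as received.
        created.append((
            s3_info["bucket"]["name"],
            s3_info["object"]["key"],
            int(s3_info["object"].get("size", 0)),
        ))
    return created
```

A consumer at the enterprise edge could feed each returned tuple into whatever copy or processing job moves the object toward the core.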

Strengths: RHCS is a robust solution with massive scalability, strong integration with Kubernetes and Red Hat OpenShift, and a compelling edge and serverless feature set. Integration with OpenStack also makes RHCS appealing to MSPs and CSPs.

Challenges: The solution has improved small-object handling, but these optimizations still have to prove their impact in the field with multiple, mixed workloads.

Scality

It is well known for its Ring offering, which has been enhanced enormously over the last decade. Over the last year, it added the Artesca solution, developed together with HPE. Both offerings are a great fit for companies adding object storage to their environments, but they differ in use cases. Both can be managed and supported from one interface, so customers can mix and match them. Together they form a promising, broad offering for companies of all sizes, spanning multipetabyte environments (Ring) all the way to the edge (Artesca).

The Ring solution is a solid and mature object storage platform with plenty of multipetabyte installations around the globe and a growing number of workloads supported, with customers in all major market segments. Some of the most advanced products, like Zenko multicloud data controller, are now fully integrated into Ring, making the product even more appealing. Kubernetes support has been improved as well, with more components now fully containerized and an interesting roadmap that will soon bring additional flexibility to Ring and new products. Moreover, the scale-out file system can now be used in the cloud with Azure or GCP, further simplifying the creation of hybrid cloud infrastructures to manage unstructured data.

The Artesca system is designed as a set of distributed microservices deployed as containers on Kubernetes. The system provides API-based monitoring, management, and provisioning, including Prometheus (monitoring access) and COSI for automated provisioning. The architecture is portable to third-party on-premises Kubernetes distributions, including OpenShift, VMware Tanzu, and Google Anthos, and to public-cloud Kubernetes environments (AWS EKS, Azure AKS, Google GKE).

Both solutions offer NVMe support, but Artesca can also be optimized for QLC and Intel Optane, a configuration that Scality and Intel tested and validated together. This ensures that both systems can be offered for capacity as well as performance. Cloud integration with the big three providers is covered in both products, and both are supported and certified with many industry-leading solutions.

Although COSI is not yet supported for Ring, Artesca offers automated S3 service provisioning through a Scality-defined REST API. The company is represented on the CNCF storage steering committee and is tracking the COSI spec; once the spec is accepted, Scality will implement the COSI API.

Strengths: Global presence and mature products with a strong ecosystem. Ring and Artesca are evolving quickly. The company is leveraging its established engagement in the open-source community and its Kubernetes expertise to move from a traditional design to a next-gen object store able to target a broader range of workloads at scale.

Challenges: Migrating from a traditional object storage implementation to the next iteration of the technology is still a challenge. In this scenario, Ring customers can count on long-term support and a viable roadmap before transitioning.

Vast Data

Vast Data Universal Storage is based on an innovative architecture that combines the performance of Intel Optane storage-class memory with the economics of consumer-grade QLC flash memory. The former is used as a staging area to improve response times and to perform all metadata handling and data optimization operations, while the latter is where data is stored permanently. Front end and back end can scale separately, depending on user needs. This hardware architecture works thanks to a series of advanced data placement and optimization techniques, and it enables very high performance at a dollar/GB comparable to hybrid systems that combine flash memory and traditional HDDs.
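
The staging idea can be illustrated with a toy write buffer: writes are acknowledged once they land in the fast tier, and data moves to the capacity tier only in large batches, which suits QLC flash's preference for large sequential writes. This is a minimal Python sketch under stated assumptions (in-memory lists stand in for both tiers, and `stripe_bytes` is a hypothetical flush threshold); it does not reflect Vast's actual data layout or data reduction.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class StagedWriter:
    """Toy two-tier writer: a fast staging buffer in front of a capacity tier."""
    stripe_bytes: int  # hypothetical flush threshold for the capacity tier
    staging: list[tuple[str, bytes]] = field(default_factory=list)
    staged_bytes: int = 0
    # Each element is one large batch written to the capacity tier.
    capacity_tier: list[list[tuple[str, bytes]]] = field(default_factory=list)

    def write(self, key: str, data: bytes) -> None:
        """Stage the write (the point where it would be acknowledged)."""
        self.staging.append((key, data))
        self.staged_bytes += len(data)
        if self.staged_bytes >= self.stripe_bytes:
            self.flush()

    def flush(self) -> None:
        """Move everything staged to the capacity tier as one batch."""
        if self.staging:
            self.capacity_tier.append(self.staging)
            self.staging = []
            self.staged_bytes = 0
```

Because writes are acknowledged before they reach the capacity tier, the staging tier must be persistent media rather than RAM, and reads would have to check the staging buffer first; both concerns are omitted from this sketch.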

Universal Storage is strongly consistent and designed to support a wide range of applications, file/object sizes, and I/O patterns with sub-millisecond latency. It also provides simultaneous data access from S3, SMB, and NFS.

Remote replication is available to S3 repositories, making it easy to migrate data to secondary storage systems or the cloud. Hybrid offerings are under development, including Vast as a service in public clouds.

The solution collects thousands of health and performance metrics, which are presented in user-defined and prepackaged dashboards in the Vast management interface. The metrics are also consolidated into telemetry data for Vast’s central monitoring, and the solution integrates with Grafana. The firm also provides sophisticated visualizations that show user data flows through the system, space consumption by bucket/folder, and the impact of data reduction capabilities.

Kubernetes support is provided through a CSI driver. It is worth noting that Vast Universal Storage runs in standard Docker containers, which allows the firm to consider future deployment models where Vast software could be co-resident with user/application containers.

Although there is no particular optimization for small footprint edge deployments, new compact 1U enclosures can be deployed on edge data centers.

Strengths: The company provides a showcase implementation of new flash media devices to deliver outstanding performance at a reasonable cost. The solution is flexible and massively scalable, includes multiple data efficiency features and compelling monitoring features.

Challenges: There is currently no support for serverless use cases. Cloud integration is also an area for improvement.

Weka

It offers the Weka Data Platform, a massively scalable solution (up to 14EB) that provides a single data platform with mixed-workload capabilities and multiprotocol support (SMB, NFS, S3, POSIX, GPUDirect, and Kubernetes CSI). It is deployed as a set of containers and offers multiple deployment options, both on-premises (bare metal, containerized, virtual) and in the cloud. It can run fully on-premises, in hybrid mode, or solely in the cloud, and all deployments can be managed through a single management console.

The solution is built on an optimized full-NVMe backend, allowing Weka to support high-performance workloads. Weka shows impressive throughput in every scenario, even with very small files and even on the S3 interface, making the product capable of serving IoT applications and any other workload that requires low latency and high throughput or IO/s, including ML/AI applications. This solution also provides simultaneous object and file access and can handle different storage tiers (SSD/HDD), which can be scaled independently. Its auto-tiering functionality allows data to be moved across tiers bidirectionally, depending on whether it is cold or hot data.
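
Bidirectional, temperature-based tiering can be sketched as a periodic pass over object access times: idle data is demoted to HDD and recently accessed data is promoted back to SSD. The thresholds, tier labels, and function name below are assumptions for illustration, not Weka's policy engine. The gap between the two thresholds acts as hysteresis, so objects whose idle time falls between them stay where they are instead of bouncing between tiers.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class StoredObject:
    key: str
    last_access: float  # seconds since epoch
    tier: str           # "ssd" or "hdd" (hypothetical labels)


def retier(objects: list[StoredObject], now: float,
           cold_after_s: float, hot_within_s: float) -> list[tuple[str, str, str]]:
    """Demote SSD objects idle for at least cold_after_s; promote HDD objects
    accessed within hot_within_s. Returns (key, from_tier, to_tier) moves."""
    if not 0 <= hot_within_s < cold_after_s:
        raise ValueError("need 0 <= hot_within_s < cold_after_s")
    moves = []
    for obj in objects:
        idle = now - obj.last_access
        if obj.tier == "ssd" and idle >= cold_after_s:
            moves.append((obj.key, "ssd", "hdd"))
            obj.tier = "hdd"
        elif obj.tier == "hdd" and idle <= hot_within_s:
            moves.append((obj.key, "hdd", "ssd"))
            obj.tier = "ssd"
    return moves
```

Running this pass on a schedule, with the returned list driving the actual data movement, keeps the SSD tier sized for the working set while HDD capacity scales independently.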

The firm implements a Snap-To-Object feature that commits data and metadata to snapshots for backup, archive, and asynchronous mirroring. This feature can also be used for cloud-only use cases in AWS, such as pausing or restarting a cluster, protecting vs. single availability zone failure, or migrating file systems across regions. Organizations can deploy Weka directly on AWS from the marketplace and run a certified Weka deployment on AWS Outposts, thanks to Weka’s ISV Accelerate partnership with AWS.

The management interface is simple and easy to use, with a GUI as well as API and CLI access, and offers a Prometheus exporter and Grafana support. Weka also supplies a proactive cloud-based monitoring service called Weka Home that collects telemetry data (events and statistics) and provides proactive support when irregularities are detected.

The company supports a broad Kubernetes ecosystem and integrates with Rancher, HPE Ezmeral, and Red Hat OpenShift. The Kubernetes CSI plug-in supports manual and dynamic volume provisioning. Further, direct quota integration is supported on a per-pod or per-container level.

The solution offers no particular edge deployment optimizations, although small footprint configurations (six nodes minimum) can be deployed in edge data centers, where local data processing can happen.

Strengths: A compelling solution for high-performance use cases focused on HPC, AI, and other demanding workloads. Includes the ability to take advantage of external object stores to keep costs under control when scaling capacity.

Challenges: Weka doesn’t offer serverless capabilities yet.

6. Analyst’s Take

High-performance object storage momentum is continuing in the enterprise space. Many software vendors want to take advantage of it for interactive workloads, though the consolidation of these workloads requires good throughput, low latency, and high IO/s, even if the size of the system is relatively small.

Most of the innovation in this area comes from the correct use of flash memory associated with several optimization techniques to better handle small files and next-gen applications and, when necessary, offloading cold data to the cloud or HDDs. Dollar/GB is not the primary concern from this point of view.

This is a market that is moving quickly, so there are only fast movers and outperformers in this Radar. However, because of the changing business needs of many enterprises, vendors with traditional solutions are working to improve the performance of their products, in many cases, by completely overhauling their product architecture and deployment options. In fact, several of the vendors covered in the High-Performance Radar are also present in the Enterprise Radar.

However, some of the solutions evaluated for high-performance do not offer a general-purpose object storage solution or tiering to cheaper object storage solutions. This can become a challenge for organizations looking at deploying object storage at scale to cover mixed workloads or those looking at optimizing their dollar/GB ratio. In other cases, organizations may want to run the same high-performance object storage solution in the cloud because it is adjacent to large amounts of data already stored in the cloud. Unfortunately, not all solutions currently support these deployment models.

Part of the success of high-speed object stores is multiprotocol access to data and ease of deployment and use. These capabilities make the transition from file storage to object storage easier and improve overall system TCO by hiding the complexity of scale-out storage behind the scenes while delivering a familiar user experience.

The next step in the evolution of object storage will be around data management. Currently, traditional object stores are better positioned to create or improve data management solutions because they already include features like index and search, bucket notifications, and serverless functions for metadata tagging or other complex operations. These features are more prevalent in Enterprise solutions than in High-Performance solutions, whose core focus is performance. Serverless support and object storage support for Kubernetes could become differentiating features that set leaders apart from challengers in the future.

The demand for better management of the enormous amounts of data companies must handle nowadays will foster convergence in the development paths of different types of object stores.
