Ocient Hyperscale Data Warehouse V19 Available in Google Cloud Marketplace

Engineered for complex, continuous analysis of hyperscale data sets, unlocks queries on 5x–10x more data.

This is a Press Release edited by StorageNewsletter.com on May 3, 2022 at 2:03 pm

Ocient, a hyperscale data analytics solutions company serving organizations that derive value from analyzing trillions of data records in interactive time, announced the general availability of V19 of its Hyperscale Data Warehouse.

Earlier versions have been used for hyperscale solution deployments over the past year with a select group of enterprise customers.

Engineered to deliver price-performance for rapid complex and continuous analysis of massive structured and semi-structured datasets, the Hyperscale Data Warehouse enables organizations to execute previously infeasible workloads in interactive time. Organizations can tackle CPU-intensive workloads with ease, including large-scale joins and full-table scans with I/O performance, returning results in seconds or minutes vs. hours or days.

The Hyperscale Data Warehouse is designed to address the growing need for enterprises and government agencies to rapidly harness an ever-increasing volume of data from disparate sources to inform mission-critical decisions. In a recent survey of 500 IT leaders conducted by Propeller Insights on behalf of Ocient, 85% of C-level respondents indicated that increasing the amount of data analyzed by their organization over the next 1 to 3 years would be “very important” and implementing a faster approach to data analytics would grow their company’s bottom line.

“Organizations clearly want and need to be able to harness massive amounts of data to stay competitive and drive better decisions, but IT budgets aren’t as scalable as public cloud resources,” said Doug Henschen, VP and principal analyst, Constellation Research. “Constellation sees leading vendors applying cutting-edge infrastructure, breakthrough architecture, advanced optimization, and software intelligence to the problem rather than just relying on expensive computing resources and redundant copies of data.”

“The Hyperscale Data Warehouse started as a research project over six years ago and is now a full-fledged, enterprise-ready product designed from the ground up to maximize price performance at every layer. We also help customers realize the benefits of hyperscale data analytics as quickly as possible through a customer-centric go-to-market model that is rare in the industry. We’ve seen customers transform their businesses with the rapid, interactive, and virtually limitless insights possible with the Hyperscale Data Warehouse and look forward to continually helping them scale with their data growth and use of modern analytics to achieve impactful business results,” said Chris Gladwin, co-founder and CEO, Ocient.

The company enables mixed OLAP-style workloads for hundreds to thousands of concurrent users 10 to 50x faster than existing solutions.

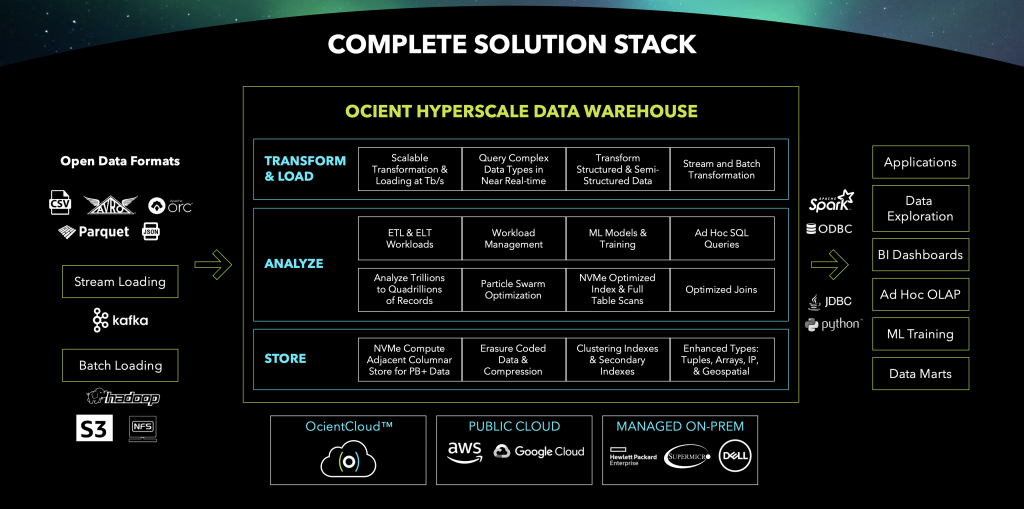

Optimized for data sets of hundreds of terabytes to petabytes and beyond, the Hyperscale Data Warehouse’s key features include:

- Compute Adjacent Storage Architecture (CASA), which places storage adjacent to compute on industry-standard NVMe SSDs, delivering hundreds of millions of 4KB random read IO/s and enabling massively parallelized processing across simultaneous loading, transformation, storage and data analysis of complex data types.

- Megalane, the firm’s high-throughput custom interface to NVMe SSDs that uses highly parallel reads with high queue depths to saturate drive hardware and maximize the benefits of leveraging performant standard hardware.

- Hyperscale Extract, Transform and Load (ETL) service that transforms and loads or streams data directly, making complex semi-structured, multidimensional, geospatial, and network data types ready for querying within seconds of ingest with no extra tools needed.

- Hyperscale SQL Optimizer, a lock-free, massively parallel cost optimizer that ensures each query plan is executed to the best of its ability within its service class and without impacting performance of other workloads or users.

- Zero Copy Reliability, delivering enterprise-grade reliability and availability without replication and enabling customers to decrease their storage footprint by up to 80% to lower Capex and operational overhead.

- Support for structured and semi-structured data types, including multidimensional and geospatial data, enabling customers to harness new and disparate data sources to better inform mission-critical decisions in interactive time.

- Rich analytics features such as workload management, intra-database ML, and intra-database ELT to enable data marts and data science modeling.

- Support for ANSI SQL and open standards, including ODBC, JDBC, Python, and Kafka, making the Hyperscale Data Warehouse easy to integrate into existing data ecosystems and quick to train new and existing users.

Now generally available in the OcientCloud, on-prem, and in Google Cloud Marketplace

The Hyperscale Data Warehouse V19 is generally available as a managed service hosted in OcientCloud, on-prem in the customer’s data center, and in Google Cloud Marketplace. Google Cloud customers looking to leverage Google’s core infrastructure and procurement channels can now integrate with Ocient’s data analytics solutions powered by the Hyperscale Data Warehouse for complex OLAP-style workloads on trillions or more records in interactive time.

Comments

Ocient is a new name for many of our readers, but if they stop for a few seconds and realize who is behind the project, they will recognize a pioneer of the storage industry, Chris Gladwin, who launched Cleversafe in 2005.

As a leader of the object storage market segment, Cleversafe made an exceptional exit with its acquisition by IBM for $1.3 billion in 2015. Several other object storage vendors and their leaders have dreamed about similar exits, even thinking of becoming unicorns, but reality caught up with them with an abrupt return to basics and the real world.

Since 2015, and as of today, only MinIO exists as a pure object storage vendor, and it recently reached unicorn status with its oversubscribed last round. Others have tried to extend their footprint beyond object storage with file access methods, which invites readers to question the future of companies that have existed for more than 12 or 13 years.


For this announcement of V19 of its Hyperscale Data Warehouse, we met Chris Gladwin at Ocient HQ in Chicago, where we recorded a new podcast for The French Storage Podcast. You can listen to it here.

Cleversafe and Ocient are 2 examples of extreme computing models. Cleversafe was about capacity at scale for secondary storage, with a dispersed approach coupled with a strong resiliency model. Ocient is more on the other side, still with high data capacity, but this time with extreme OLAP performance numbers.

After addressing the biggest challenge for unstructured data at large scale, Gladwin recognized around 2014 the need for super-fast analytics on trillions of records in very large structured data sets. He decided to investigate how to build a unique analytics platform, of a kind never designed before, relying on current technologies with a clear roadmap such as multi-core CPUs, PCIe, SSD, NVMe... In other words, without NVMe, this solution would not exist. It reminds us of a few other players that are strictly tied to specific storage and connectivity technologies... As the industry managed to drive SSD cost down with high layer counts and TLC then QLC models, together with the rise of the NVMe interface, these developments have radically changed several approaches and invite people to think about new disruptive architectures and designs.

It took 6 years to finally release the GA of V19. Previous versions were more controlled releases distributed to a selected list of customers. Hyperscale Data Warehouse is another example of SDS at scale.

The product relies on commodity hardware with Intel CPUs, today Ice Lake with PCIe Gen 4, and classic NVMe SSDs: nothing spectacular, and no storage class memory such as Optane at all.

We noticed several key innovations in this system, among others CASA (Compute Adjacent Storage Architecture), Megalane and Zero Copy Resiliency, and of course others related to the database and data warehouse landscape:

- CASA is a sort of DAS model where each NVMe SSD is attached to the server via PCIe to avoid any network traffic and latency. Each SSD uses 4 PCIe lanes and each 1U chassis is optimized to fit the right ratio. The idea is to maximize the number of random 4KB reads over trillions of records.

- Megalane is the Ocient custom interface to NVMe SSDs, running in user space to maximize parallelism and queue depths without any filesystem or kernel penalties. This is a critical component when you imagine the number of SSDs per U, 42U per rack, and a multi-rack configuration dealing with millions of requests per second.

- Zero Copy Resiliency is in fact a Reed-Solomon N+2 model deployed with 10 data segments out of 12 in total.
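To make the 10-of-12 Reed-Solomon layout concrete, here is a minimal arithmetic sketch in Python. It only computes raw-capacity overhead and the number of segments that can be lost. It is not Ocient's implementation, and the function names are hypothetical. The 2-copy and 3-copy replication baselines are illustrative assumptions chosen for comparison, not figures from Ocient.

```python
from fractions import Fraction


def erasure_overhead(data_segments: int, total_segments: int) -> Fraction:
    """Raw bytes stored per byte of user data for an erasure-coded layout."""
    return Fraction(total_segments, data_segments)


def tolerated_losses(data_segments: int, total_segments: int) -> int:
    """Number of segments that can be lost while data stays recoverable."""
    return total_segments - data_segments


def savings_vs_replication(data_segments: int, total_segments: int, copies: int) -> Fraction:
    """Fraction of raw capacity saved compared with keeping `copies` full replicas."""
    return 1 - erasure_overhead(data_segments, total_segments) / copies


# Layout described in the text: 10 data segments out of 12 in total (N+2).
DATA, TOTAL = 10, 12

print("overhead:", float(erasure_overhead(DATA, TOTAL)))   # raw bytes per user byte
print("tolerated losses:", tolerated_losses(DATA, TOTAL))

# Assumed replication baselines, for illustration only.
for copies in (2, 3):
    saved = savings_vs_replication(DATA, TOTAL, copies)
    print(f"saving vs {copies} copies:", float(saved))
```

Under these assumptions, the 10-of-12 layout stores 1.2 raw bytes per user byte and survives the loss of any 2 segments, whereas full replication stores one extra raw byte per user byte for every additional copy.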

Ocient engineering pays attention to L1, L2 and L3 caches to deliver maximum throughput.

Gladwin confirmed to us that his team only targets very large, complex projects, as Ocient's differentiators are obvious at scale. The product is priced per core and not per capacity or any other storage-related parameter, as Ocient is about throughput, IO/s, queries per second...

In terms of deployment, 3 models exist: on-premises, on OcientCloud as a managed service, or in the public cloud, today on AWS or GCP.

It's interesting to see a new player emerge with a truly innovative approach to tackling new analytics challenges at scale. It's a perfect companion to Snowflake, Firebolt, Yellowbrick Data, Apache Druid or even Rockset, among others, and the battle will be interesting to watch.
