Use AWS Systems Manager Automation to Automate Snowflake Storage Integrations With Amazon S3

Systems Manager Automation lets use predefined playbooks, or build, run, and share wiki-style automated playbooks to enable AWS resource management across multiple accounts and AWS Regions.

This is a Press Release edited by StorageNewsletter.com on February 16, 2022 at 2:02 pm By Kanishk Mahajan, ISV solutions architect lead, AWS (Amazon Web Services, Inc.)

By Kanishk Mahajan, ISV solutions architect lead, AWS (Amazon Web Services, Inc.)

AWS Systems Manager lets you safely automate common and repetitive IT operations and management tasks. Furthermore, Systems Manager Automation lets you use predefined playbooks, or you can build, run, and share wiki-style automated playbooks to enable AWS resource management across multiple accounts and AWS Regions.

Snowflake, the data cloud, is an APN Partner that provides enterprises with a cost-effective combination of the power of data warehousing, the flexibility of big data platforms, and the elasticity of the cloud.

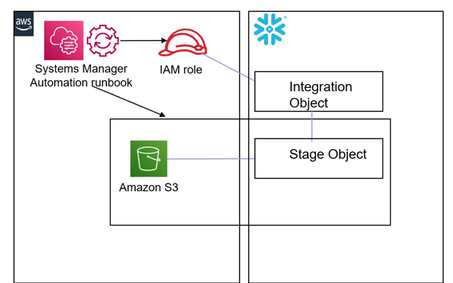

Snowflake storage integrations are Snowflake objects that enable Snowflake to read and write data to Amazon Simple Storage Service (S3). Snowflake storage integrations uses AWS Identity and Access Management (IAM) to access Amazon S3. The S3 bucket is referenced by the Snowflake integration from an external (in this case, Amazon S3) Snowflake stage object. In this post, I describe a AWS Systems Manager Automation runbook I built to automate all of the steps required by Snowflake to create a storage integration with Amazon S3 in an AWS account.

Solution architecture

Each time you launch the AWS Systems Manager Automation runbook in your account, it provisions a Snowflake storage integration object and attaches an IAM role to it. It also creates an external Snowflake stage object for Amazon S3 by using the integration object and your supplied S3 bucket as parameters. The runbook uses AWS Secrets Manager to store and retrieve Snowflake connection information. You can launch the runbook as many times as needed to create new integrations between Snowflake and additional S3 buckets in your account.

The AWS IAM role that is created by the runbook provides trusted access to Snowflake to reference the S3 bucket in your account. The Principal element and external ID in the role’s trust policy are extracted by the runbook from the Snowflake integration object.

The runbook deployment is fully automated using 1-click automation via AWS CloudFormation. First, the CloudFormation template takes your Snowflake connection information and stores it in AWS Secrets Manager. Then, it provisions an AWS Lambda Layer that wraps the Snowflake connector for Python and provisions a Lambda function that that uses the connector to create the Snowflake integration. It then provisions the Systems Manager runbook in your account that uses this Lambda.

Refer to the following architecture diagram that illustrates the interactions of the previously-mentioned components of Systems Manager, Snowflake, and Amazon S3 integration.

Prerequisites

Complete the following prerequisites before implementing the Snowflake and Amazon S3 storage integration automation using Systems Manager Automation runbook:

-

Subscribe to Snowflake via AWS Marketplace. In the upper right, choose Continue to Subscribe. After successfully subscribing to Snowflake, choose Accept Terms. To begin using the software, you will be redirected to the Snowflake website. To sign up with Snowflake and complete your registration, select the Set Up Your Account

-

Once you’re set up with your Snowflake account, and if you’re new to Snowflake, complete this Snowflake in 20 minutes At the end of this tutorial, you will know how to create the required Snowflake objects (warehouses, databases, and tables) for storing and querying data. You will also understand how to load a small amount of sample data from CSV files into a Snowflake table and query the table.

-

Note the Snowflake connection information for your account. You will need this in the solution setup step section later.

-

-

Create an S3 bucket: s3-snowflakeintegration-accountId-region. Replace accountId and Region with the AWS Account ID and Region of your AWS account.

-

-

Create a folder in your S3 bucket called SnowflakeIntegration_Lambda_SSM, and upload the SnowflakeIntegration_Lambda_SSM.zip file. This Lambda uses the Snowflake connector for Python to query and update Snowflake.

-

Upload the snowflakelayer.zip in the root folder of this S3 bucket. This zip file packages the Snowflake Python Connector as a Lambda layer.

-

-

Solution setup

The AWS CloudFormation templates and a detailed README for this solution are available on Snowflake Labs.

Solution is installed in single step:

-

Create a stack from the AWS CloudFormation console by launching the aws-snowflake-ssm.yml The template takes your Snowflake Connection information from Step 1 of the prerequisites section as well as the S3 bucket that you created in Step 2 of the prerequisites section as input parameters.

Test and run the Systems Manager automation for the Snowflake and Amazon S3 storage integration

-

Navigate to the AWS Systems Manager console in your AWS account. From the left panel, select Documents, and then on the console, select the Owned by me In the search filter, search for the ‘Custom-Snowflakestorageintegration’ document. Select this document, and then from the right corner of your console select Execute automation. On the Execute automation document screen, select Simple execution, provide the S3 bucket name in the Input parameters section, and select Execute.

-

Navigate back to the AWS Systems Manager console. In the left panel, select This is where you can track the execution of your automation runbook on the Automation executions screen. This will let you make sure that the status column displays Success.

-

Navigate to the AWS IAM console of your AWS account and check that a new IAM role has been provisioned that ends with ‘S3INTxxxxx’ suffix. This entire suffix that starts with ‘S3INT’ will also be the name of your new Snowflake integration object.

-

Log in to your Snowflake web interface and make sure that the URL contains your account identifier. Alternatively, you can use snowsql as outlined here.

- Validate that a new Snowflake integration object has been created using

DESC INTEGRATION 'integrationobjectname‘. -

Obtain the AWS_IAM_USER_ARN and AWS_EXTERNAL_ID parameters from the preceding step (4a). Navigate to the IAM console of your AWS account and check that the IAM role in step 3 uses those parameters in its trust policy as the principal and external ID parameters respectively.

- Validate that a new storage object has been created in Snowflake that references the S3 bucket and uses the integration object using

SHOW.

STAGES IN ACCOUNT

- Validate that a new Snowflake integration object has been created using

Cleanup

To clean up your account after deploying the solution outlined in this post, delete the CloudFormation stack for aws-snowflake-ssm.yml template.

Conclusion

Snowflake storage integrations are Snowflake objects that enable Snowflake to read and write data to Amazon S3. In this post, I’ve described an AWS Systems Manager Automation runbook that you can download and use to automate all of the steps required by Snowflake to create storage integrations with Amazon S3 in an AWS account.

Subscribe to our free daily newsletter

Subscribe to our free daily newsletter