From Qumulo, How NetApp File on AWS Makes Cloud Complex and Slow?

Qumulo builds petabyte-scale file environments that are price and performance optimized for specific use cases.

This is a Press Release edited by StorageNewsletter.com on September 14, 2021 at 1:31 pm

This blog was authored by David A. Chapa, head, competitive intelligence, technical evangelist and strategist, Qumulo, Inc. on September 2, 2021.

How NetApp File on AWS Makes the Cloud Complex and Slow

Editorial update:

“In the original blog (posted September 2, 2021) I had made some assumptions about AWS FSx for NetApp ONTAP and how it seemed the solution may be configured from a storage perspective and some of its features. Thanks to our partner, AWS, those assumptions have been corrected as part of this update. I also took time to add more thoughts to this blog to be absolutely clear about the points that I am making.”

File on AWS doesn’t get any better with NetApp

Today at AWS Storage Day the general availability of Amazon FSx for NetApp ONTAP was announced. AWS FSx is a managed service offered by AWS, and this makes the third such offering by AWS. Sounds like a big win, but it’s the same legacy complexity of on-prem, only now on AWS.

The main issue with this Storage Day announcement is that NetApp brings with it the same complexity everyone has come to associate with their filers and operating system, ONTAP, into the cloud. A managed service should be more ‘plug ‘n play,’ but in this case, the onus is on you, the customer, to determine how to dial in your data to this solution. First of all it says that it can scale to petabytes of storage. Let’s dissect all of this a bit.

Throughput gets lost when you scale up

The announcement claims this solution is ‘virtually unlimited’ in scale. However, based on what I read, there are two separate storage tiers. The primary volume is 211TB (rough conversion from its advertised 192TiB in the release and on the AWS product page), so scaling to petabytes means there has to be some tearing, I mean tiering. They also introduced an ‘intelligent tiering’ feature with the offering. Sounds simple, but if you aren’t satisfied with its ‘auto tiering’ default option, you have to configure the what, when, and how for your data to be ‘intelligently’ moved.
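The 211TB figure can be reproduced with a quick unit conversion. The sketch below assumes the advertised 192 is in binary tebibytes (TiB, 2^40 bytes) and converts it to decimal terabytes (TB, 10^12 bytes); the function name is illustrative only.

```python
# Rough unit conversion behind the "211TB" figure.
# Assumption: the advertised 192 is in binary tebibytes (TiB).
TIB_BYTES = 2**40   # bytes in one tebibyte
TB_BYTES = 10**12   # bytes in one decimal terabyte


def tib_to_tb(tib: float) -> float:
    """Convert tebibytes to decimal terabytes."""
    return tib * TIB_BYTES / TB_BYTES


primary_tb = tib_to_tb(192)
print(f"192 TiB is about {primary_tb:.0f} TB")
```

Anything beyond that primary capacity would have to sit in the second tier, which is the point the paragraph above is making.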

It also appears that the larger volume, the one that yields greater capacities, is an S3 bucket. Okay, first reality check: FSx for NetApp ONTAP does not scale to petabytes of storage as we all think about a filesystem scaling. It seems as though this solution is taking a page out of the tape manufacturers’ playbook and using S3 as a substitute for tape in order to ‘market’ petabyte scale. Technically you have petabytes of data, but the real question is how quickly the data can be accessed and put to use. Let’s be frank: petabyte scale, to me, means petabyte scale in a single namespace or volume. I mean, any ‘old-school’ HSM solution can claim even multiple zettabytes or “virtually unlimited” storage, but the data isn’t necessarily in a reasonable state where you can easily and quickly consume it. It is more of an archive than the single namespace intelligent storage solution Qumulo offers its customers. It feels like a throwback to the 1990s, minus the mullet.

The second reality check is data access. In the event you need that data ASAP, will you have any guarantees, besides uptime, on the performance of that data retrieval? If it is true that the capacity pool is S3, then I assume the SLA I found when I searched for ‘AWS S3 SLA’ is what applies to this solution for customers of FSx for NetApp ONTAP. When I read this, I don’t see anything around performance guarantees, only uptime. As far as I can tell, any performance guarantee from the capacity pool to the primary volume or namespace is unknown. So, what does a customer who has half a PB of fairly active data do to avoid hitting the capacity pool for that data? Well, if you have more than ~211TB of data, it would appear you are buying two instances of FSx for NetApp ONTAP. Why would you do that when you can get it all in a single namespace without any compromise from Qumulo?

It also seems to be on you, the customer, to determine what your file efficiency will be with deduplication, compression, and compaction. You’ll probably hear your NetApp or AWS FSx rep tell you, ‘your mileage may vary’ when you ask for help. That’s the reality with tech like that: it really is a guessing game, and if you’re off, then more than likely your pricing will go up. AWS FSx for NetApp ONTAP is complexity at its best.

Intelligent tiering? A copout for complexifying the cloud

The open question I still have about this solution, even given the ‘intelligent tiering,’ is whether NetApp will still strongly urge its customers not to exceed 80% of the primary storage. I mean, it would make sense since it is ONTAP, and ONTAP doesn’t like it when it gets too full. Will performance be gated? Will the tiering criteria be overridden, creating a mass data migration from the primary to the secondary to save space and avoid a performance nightmare? Unknown right now, but knowing what I know about ONTAP, it wouldn’t surprise me to see this happen.

We all know NetApp does have performance issues; that’s an inherent problem with all scale-up solutions. That’s why you want a scale-out, distributed file system like Qumulo. We are achieving up to 40GB/s performance compared to FSx for NetApp ONTAP’s 2GB/s of read performance and 1GB/s of write performance. So now I’m more curious. Does the 2GB/s read include the read from the capacity pool, or is it only from the primary storage volume on FSx for NetApp ONTAP? Unclear. I can’t find any reference other than what I mentioned above. You know I had to dig a little to find the actual performance numbers. They were buried in the FAQ. Typically you want to showcase your performance ‘hero’ numbers in the announcement, so it’s no wonder the announcement and feature section only say it is capable of multiple gigabytes of performance. I’d be embarrassed to state those numbers too. Hero to zero at a whopping 2GB/s.
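To put those throughput numbers in perspective, here is a back-of-the-envelope sketch of how long a full sequential read of 1PB would take at each quoted rate. It assumes decimal units (1PB = 1,000TB, 1TB = 1,000GB) and that the quoted peak rate is sustained for the whole read, which real workloads rarely manage; the function name is illustrative only.

```python
# Back-of-the-envelope read times at the throughput figures quoted above.
# Assumptions: decimal units and a sustained peak rate for the entire read.
def hours_to_read(capacity_tb: float, throughput_gb_per_s: float) -> float:
    """Hours needed to read capacity_tb terabytes at a sustained GB/s rate."""
    seconds = capacity_tb * 1000 / throughput_gb_per_s
    return seconds / 3600


quoted_rates = [
    ("FSx for NetApp ONTAP read (2GB/s)", 2.0),
    ("Qumulo (up to 40GB/s)", 40.0),
]

for label, rate in quoted_rates:
    print(f"{label}: {hours_to_read(1000, rate):.1f} hours to read 1PB")
```

Under those assumptions the gap is the difference between the better part of a week and a single working day.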

Adding up costs at petabyte-scale

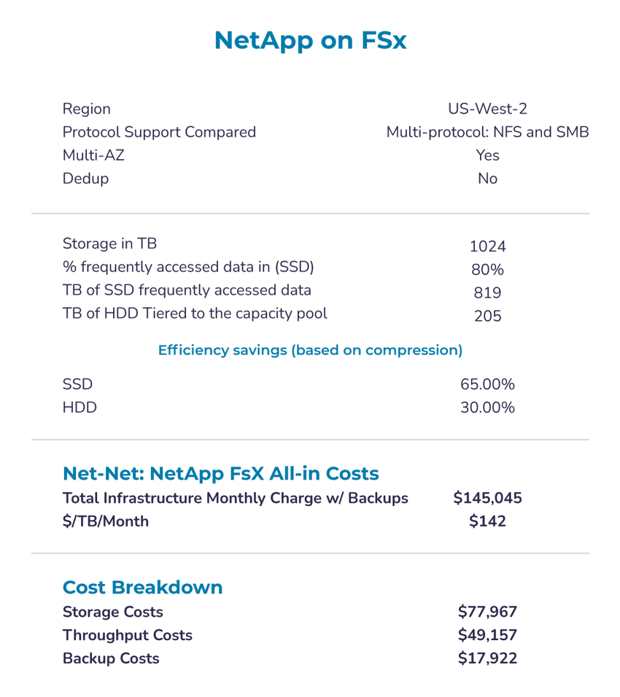

NetApp uses a 100TB example in their announcement, but when you look at a petabyte-scale use case with data that you actually need to use for workloads such as AI model training, genomic sequencing, or video production, the cost model breaks the bank.

Here we took the exact calculation NetApp published and scaled it to a petabyte (which you can’t actually do in NetApp unless you like managing multiple volumes). It runs you $72/TB per month, and if you need to frequently access that data (let’s assume 80% of the time), the price shoots up to $142/TB per month. That same use case on Qumulo on AWS can run you $52/TB per month.
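As a rough illustration, the per-terabyte figures above translate into the following monthly bills at one petabyte. This sketch assumes the quoted prices are dollars per TB per month, that 1PB = 1,000TB, and that the rate scales linearly with capacity; the labels and function name are illustrative, and the rates are taken directly from the text rather than from any pricing calculator.

```python
# Monthly cost at 1PB using the per-TB rates quoted in the text.
# Assumptions: rates are USD per TB per month and scale linearly.
TB_PER_PB = 1000

rates_usd_per_tb_month = {
    "FSx for NetApp ONTAP (published example, scaled)": 72,
    "FSx for NetApp ONTAP (80% frequently accessed)": 142,
    "Qumulo on AWS": 52,
}


def monthly_cost(rate_per_tb: float, capacity_tb: float = TB_PER_PB) -> float:
    """Total monthly cost in USD for the given capacity."""
    return rate_per_tb * capacity_tb


for label, rate in rates_usd_per_tb_month.items():
    print(f"{label}: ${monthly_cost(rate):,.0f} per month")
```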

If there’s any indication of complexity, just look at the number of ‘knobs and dials’ you have to configure, each racking up the cost of your storage like a lottery ticket!

- Start with your volume size.
- Next, create a tiering profile by guessing how much data will be in your primary tier (frequently accessed data). That’s a charge.
- Now you have to decide how big your ‘capacity pool’ is (infrequently accessed data). That’s another charge.
- Then you have to scope your throughput requirements. Yup! You guessed it…another charge.
- What about backups? That’s another dial you’ll have to hone in, and add an additional charge.

You shouldn’t need to make a complex set of decisions like you’re sending a person to the moon just to get your storage up and running.

With Qumulo you don’t need an abacus to decide any of that. Pick your capacity, your performance, and pump in as much data as you’d like. And it doesn’t matter how often or how little you use your data. Qumulo automatically tiers your data with intelligent caching, no more guessing games. And when we say ‘intelligent caching’ it doesn’t mean you have to turn dials and tweak configurations. It has it covered for you, intelligently, within a single namespace capable of scaling to several petabytes of storage. That’s it. It really is that simple. No knobs, dials, or management headaches with hidden costs. Qumulo is a software platform with all-inclusive pricing that won’t break the bank.

The net on NetApp

The need for file services in the cloud is growing exponentially, and many customers are seeking out solutions that will meet their needs and let them ‘get out of the data center business’ while focusing on the core of their own business. The problem is that legacy dinosaurs like NetApp are just bringing their outdated and complex architecture to the cloud and are not able to deliver on the golden trifecta of scale, performance, and cost. Instead, customers should consider cloud native platforms that have proven scalability and performance and can deliver cost-efficiency at scale. Yes, I’m talking about Qumulo.

Qumulo can help you build petabyte-scale file environments that are price and performance optimized for your specific use case.
