HPE Alletra 9000 Primera Available

High-end tier-0 array

This is a Press Release edited by StorageNewsletter.com on May 20, 2021 at 2:31 pm

By Dimitris Krekoukias, blogger on Recovery Monkey

The evolution of the HPE Primera (which was the evolution of 3PAR) is now available.

It’s called the HPE Alletra 9000 and is the mission-critical tier-0 complement to the tier-1 Alletra 6000 (which in turn is the evolution of Nimble).

Alletra 9000 4 Node storage base

It retains the rich feature set of Primera and the 100% uptime guarantee. The main enhancement vs. Primera is increased speed, and the fact that all of that performance is available in a 4U configuration, making it a performance-dense, full-feature tier-0 system. It is managed via the HPE Data Services Cloud Console.

A welcome enhancement (that is also coming to Primera) is Active Peer Persistence, which allows a LUN to be read from and written to simultaneously at two sites that replicate synchronously to each other. This means that each site can do local writes to a sync-replicated LUN without the hosts needing to cross the network to the other site.
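To make the idea concrete, here is a toy model of an active-active, synchronously mirrored LUN. It is only a sketch of the general technique, not HPE's implementation: the `Site` class, `pair_sites` helper and block layout are hypothetical, and it ignores everything a real array must handle (concurrent writes to the same block from both sites, link failures, quorum).

```python
from typing import Dict, Optional


class Site:
    """One side of a synchronously replicated LUN (toy model, hypothetical)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.blocks: Dict[int, bytes] = {}
        self.peer: Optional["Site"] = None

    def write(self, lba: int, data: bytes) -> str:
        """Accept a host write locally, mirror it to the peer, then acknowledge."""
        if self.peer is None:
            raise RuntimeError("no replication peer configured")
        self.blocks[lba] = data        # the host writes to its local site
        self.peer.blocks[lba] = data   # the array, not the host, crosses the inter-site link
        return f"ack from {self.name}"  # ack only after both copies are updated

    def read(self, lba: int) -> bytes:
        """Reads are always served from the local copy."""
        return self.blocks[lba]


def pair_sites(a: Site, b: Site) -> None:
    """Make two sites replication peers of each other."""
    a.peer, b.peer = b, a


site_a, site_b = Site("A"), Site("B")
pair_sites(site_a, site_b)
site_a.write(0, b"written at A")
site_b.write(1, b"written at B")
```

After these two writes, either site can serve both blocks from its local copy, which is the property the paragraph above describes.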

Optimized architecture

The Alletra 9000 builds on the Primera architecture. This means there are multiple parallelized ASICs per controller helping out the CPUs with various aspects of I/O handling.

The main difference is how the internal PCIe architecture is laid out, and how PCIe switches are used. In addition, all media is now using the NVMe protocol.

These optimizations have enabled a sizable performance increase in real-world workloads.

Predictable and consistent experience for mission critical workloads

The Alletra 9000 is a high-end, tier-0 array. This means that it needs to be fast, and also that the performance needs to be predictable and consistent, even when faced with unpredictable, conflicting workloads.

It’s not just about high IO/s and throughput in a single workload – latency is a key factor, especially with multiple mission-critical applications that simultaneously demand a great result.

The vast majority of IO happens well within 250μs latency, for instance.

Intelligence is the other important factor. Can the array automatically determine what workloads to auto-prioritize? Can it automatically deal with many conflicting workloads, each having wildly different IO characteristics?

You see, it’s easy to make a system that will work well at a fixed block size and simple operations like you’d see in a typical performance benchmark.

It’s a different matter altogether to make a system that can host hundreds of conflicting applications, each with extremely different IO characteristics.

Performance Improvements – Most Performance-Dense Tier-0 System

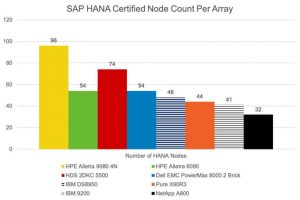

Just like with the Alletra 6000 blog, I will use the SAP HANA certification numbers to show real-world differences between different arrays.

I like it since all major vendors participate and it’s not easy to ‘game’ the benchmark. It’s also easy to see who’s faster: the more HANA nodes an array is certified for, the faster it is. No need to have a degree to interpret the results.

Before I show the results, some notable things to be aware of:

- All this performance is achieved within 4U of space, which means rack space savings for customers
- The Alletra 9000 is a tier-0 system for mission-critical workloads and offers a 100% uptime guarantee (most other vendors have no such SLA)
- The vast majority of IO happens within 250μs
- It has features meant for mission-critical workloads – things like Active Peer Persistence, complex replication topologies, Port Persistence and more
- It is assisted by both InfoSight and Data Services Cloud Console, making it both easy to consume and able to lower customer risk.

Anyway, on to the numbers! All current as of May 13, 2021.

Some of you may think I’m cherry-picking for marketing purposes (plus the systems above aren’t all in the same tier-0 class) so allow me to explain.

The point I’m trying to make is the sheer amount of performance doable in 4U. With drive densities being what they are these days, it’s easy to configure enough capacity for most customers in just the base 4U system, making it an attractive option for saving on rack space.

The physically smallest possible HDS 5500 shown for comparison would need 18U to achieve 74 nodes, so the Alletra 9000 delivers about 30% more speed in 4.5x less rack space (4U versus 18U). One could fit an Alletra 9000, 2 switches and 12 servers in exactly the same amount of rack space.
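The arithmetic behind that comparison can be checked with a few lines. This is a minimal sketch using only the figures quoted above; the 1U height for each switch and server is an assumption (the article does not state it), and the Alletra 9000 node count is merely implied by the "30% more" remark rather than taken from the certification listing.

```python
HDS_5500_RACK_UNITS = 18      # from the article
HDS_5500_HANA_NODES = 74      # from the article
ALLETRA_9000_RACK_UNITS = 4   # from the article
SPEED_ADVANTAGE = 0.30        # "30% more speed", from the article


def rack_space_ratio(larger_u: int, smaller_u: int) -> float:
    """How many times more rack space the larger system occupies."""
    return larger_u / smaller_u


def implied_nodes(baseline_nodes: int, advantage: float) -> int:
    """Node count implied by a relative speed advantage over a baseline."""
    return round(baseline_nodes * (1 + advantage))


def stack_height(array_u: int, switches: int, servers: int,
                 switch_u: int = 1, server_u: int = 1) -> int:
    """Rack units for an array plus switches and servers (1U each is an assumption)."""
    return array_u + switches * switch_u + servers * server_u


print(rack_space_ratio(HDS_5500_RACK_UNITS, ALLETRA_9000_RACK_UNITS))
print(implied_nodes(HDS_5500_HANA_NODES, SPEED_ADVANTAGE))
print(stack_height(ALLETRA_9000_RACK_UNITS, switches=2, servers=12))
```

With 1U switches and servers, the array plus 2 switches and 12 servers adds up to the same 18U that the smallest HDS 5500 configuration needs on its own.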

The HDS can go faster with a lot more drives and controllers, and you’d need more than a full rack to realize that speed: 222 HANA nodes possible with over a full rack of stuff. An Alletra 6000 4-way cluster – in 16U – would do 216 nodes, but arguably it’s not the same class of system when it comes to replication options.

A PowerMax 8000 2-Brick (4 controllers) needs 22U and only does 54 nodes. A 3-brick system (6 controllers) can do 80 nodes and takes almost a whole rack (32U). So even with more controllers, a PowerMax needs 8x more rack space to provide less performance than an Alletra 9000. A maxed-out PowerMax 8000 can do 210 nodes but needs 2 full racks and massive power consumption – to achieve about 2x the speed of a 4U Alletra 9000… (EMC HANA numbers in more detail here, page 19, and PowerMax hardware details here – page 49). So much for all the hero numbers we’ve seen for that system.

Something like an IBM DS8950 is also not 4U, but much bigger physically and can only do half the performance. An IBM 9200R is multiple 9200s and if you have a rack of the stuff it could do 164 nodes… but a single 9200 appliance won’t do more than 41.

The same goes for NetApp: you’d need 3x A800 to hit the same node count as the Alletra 9000, which means the A800 has 3x less performance density than an Alletra 9000 (plus the A800 is not truly active-active in the sense that a single volume can only be served by a single controller, whereas in the Alletra 9000 all 4 controllers serve all volumes simultaneously).

As you can see, the Alletra 9000 performance density is something quite special.
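One way to put a number on "performance density" is HANA nodes per rack unit. The sketch below does that for the configurations where the text gives both a node count and a rack-unit figure. The `Config` class and `rank_by_density` helper are illustrative names, and the Alletra 9000 node count of 96 is an assumption inferred from the "30% more than 74 nodes" remark, not a figure quoted from the certification listing.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Config:
    name: str
    hana_nodes: int
    rack_units: int

    @property
    def nodes_per_u(self) -> float:
        """Certified HANA nodes per rack unit of space."""
        return self.hana_nodes / self.rack_units


CONFIGS = [
    Config("Alletra 9000 (4U)", 96, 4),  # 96 is inferred, see lead-in
    Config("Alletra 6000 4-way cluster", 216, 16),
    Config("HDS 5500 (smallest)", 74, 18),
    Config("PowerMax 8000 2-Brick", 54, 22),
    Config("PowerMax 8000 3-Brick", 80, 32),
]


def rank_by_density(configs: List[Config]) -> List[Config]:
    """Sort configurations from most to least performance-dense."""
    return sorted(configs, key=lambda c: c.nodes_per_u, reverse=True)


for cfg in rank_by_density(CONFIGS):
    print(f"{cfg.name:28s} {cfg.nodes_per_u:5.1f} nodes/U")
```

Systems for which the text gives no rack-unit figure (IBM, NetApp, the maxed-out PowerMax) are left out rather than guessed.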

Links to the results: Alletra 9000, Alletra 6000, HDS 5000, IBM DS8000, IBM 9200, Pure, PowerMax, NetApp.
