Critical Capabilities for Object Storage

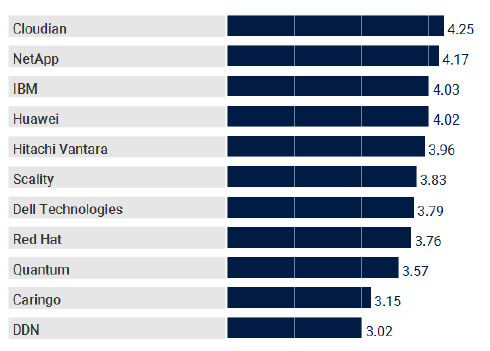

Cloudian leads in all categories.

This is a Press Release edited by StorageNewsletter.com on November 12, 2020 at 2:39 pm

This is an abstract of a 23-page report, published on October 21, 2020, and written by Chandra Mukhyala, Julia Palmer, Jerry Rozeman and Robert Preston, analysts at Gartner, Inc.

Critical Capabilities for Object Storage

Growing adoption of the Amazon S3 protocol by application vendors is expanding interest in on-premises object storage to store static data. In this research, we evaluate 11 object storage products against eight critical capabilities in use cases relevant to I&O leaders.

Overview

Key Findings

- Traditional enterprise applications that were dependent on NFS and SMB protocols are increasing their adoption of the Amazon S3 protocol to benefit from the scalability and geographical distribution available in object storage.

- Given the rise of the Amazon S3 protocol as a de facto access protocol for object storage, distributed file storage vendors are also offering S3 protocol access for objects stored in file systems.

- With increasing options for low-cost storage tiers and granular security, public cloud continues to increase its attractiveness as a viable alternative to on-premises object storage.

- Object storage vendors continue to add file protocol access, but the file access mostly remains a gateway and is not scalable and resilient like the underlying object storage.

Recommendations

I&O leaders responsible for unstructured data infrastructure should:

- Evaluate object storage systems for data that is mostly read-only once written, which avoids the performance issues involved in modifying objects.

- Deploy object storage for applications that support the Amazon S3 API, rather than file interfaces, to benefit fully from the scalability of the object store, unless the object storage system has scalable native file support.

- Continue using your existing distributed file system if it supports native Amazon S3 and can meet your scalability requirements to avoid addition of another storage silo. Object storage becomes a necessity when global collaboration and scaling are key requirements.

- Verify that you cannot get similar cost savings from your existing file-based storage (if cost is the primary driver) to avoid addition of another storage silo. Cost savings from object storage typically show up in large-scale deployments and not at entry-level configurations.

Strategic Planning Assumptions

By 2024, large enterprises will triple their unstructured data stored as file or object storage on-premises, at the edge or in the public cloud, compared with 2020.

By 2024, 40% of I&O leaders will implement at least one hybrid cloud storage capability, up from 15% in 2020.

By 2024, 50% of the global unstructured storage capacity will be deployed as SDS on-premises or in the public cloud, up from less than 20% in 2020.

What You Need to Know

Many of the storage requirements that object storage systems were originally created for are now also being met by distributed file systems on-premises, albeit at a smaller scale. In addition, public cloud continues to be an attractive alternative to on-premises object storage due to new lower-cost storage tiers and the overall agility of public cloud.

Key requirements that object storage systems were created for include:

- An HTTP-based protocol for accessing the underlying objects, not just locally but also from geographically distributed locations. Amazon Simple Storage Service (S3) has become the de facto standard for web-based access. Given S3’s popularity among application developers, many distributed file systems are also offering the same access protocol. Developers dealing with massive amounts of unstructured data like the simple means by which they can put and get objects using the S3 protocol. In 2019, many independent software vendor (ISV) applications started supporting S3 as an access protocol. Initially, backup and archive ISV applications started supporting S3 interfaces. But the same trend is now also seen in industry-specific applications, such as picture archiving and communication systems and media asset management applications, where NFS and/or Server Message Block (SMB) have been the usual access protocols. High-performance analytics applications are also now supporting the S3 protocol to write persistent data to object storage after processing the data in-memory.

- Low-cost storage from leveraging industry-standard x86 servers. Object storage systems typically adopt some form of software-based erasure coding to protect against drive, node or other hardware failures. This method of data protection makes the system more resilient to hardware failures and makes running on a standard x86 server acceptable, which leads to lower costs. However, erasure-coding techniques are not limited to object storage. The same capabilities are now available in many distributed file systems, making them an equally good choice if low-cost storage is a primary driver.
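The protection scheme described in the last bullet can be illustrated with a deliberately simplified sketch. It uses a single XOR parity fragment, the simplest form of erasure coding; production object stores and file systems generally use codes that tolerate several simultaneous failures, and nothing here reflects any evaluated vendor's implementation. The function names, the fragment count `k=4` and the sample payload are assumptions made for illustration.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int) -> List[bytes]:
    """Split data into k equal fragments (zero-padded) plus one XOR parity fragment."""
    frag_len = -(-len(data) // k)  # ceiling division
    padded = data.ljust(frag_len * k, b"\0")
    fragments = [padded[i * frag_len:(i + 1) * frag_len] for i in range(k)]
    parity = reduce(xor_bytes, fragments)
    return fragments + [parity]


def recover(fragments: List[Optional[bytes]]) -> List[bytes]:
    """Rebuild at most one missing fragment (marked None) from the survivors."""
    missing = [i for i, f in enumerate(fragments) if f is None]
    if len(missing) > 1:
        raise ValueError("single parity tolerates only one lost fragment")
    repaired = list(fragments)
    if missing:
        survivors = [f for f in fragments if f is not None]
        # XOR of all surviving fragments equals the lost one.
        repaired[missing[0]] = reduce(xor_bytes, survivors)
    return repaired


def decode(fragments: List[Optional[bytes]], original_len: int) -> bytes:
    """Reassemble the original bytes, dropping the parity fragment and padding."""
    repaired = recover(fragments)
    return b"".join(repaired[:-1])[:original_len]


payload = b"static data written once, read many times"
stored = encode(payload, k=4)      # 5 fragments, one per hypothetical node
fragment_count = len(stored)
stored[2] = None                   # simulate a failed drive or node
restored = decode(stored, len(payload))
overhead = fragment_count / 4      # raw capacity divided by usable capacity
```

With four data fragments and one parity fragment, raw capacity is 1.25 times usable capacity, compared with 3 times when keeping three full copies. That difference is the source of the cost advantage on commodity x86 servers.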

What remains unique to object storage, and difficult for file storage systems to emulate, is the small number of operations required to write or read an object. Objects are written in a flat namespace, which makes object stores extremely scalable without the overhead of managing file system trees or the file locking present in file systems. This scalability can easily expand across geographical locations, making object storage the ideal storage system for collaboration use cases. In addition, object storage is ideally suited for applications that can leverage metadata associated with an object. Object storage allows a variable amount of metadata to be attached to an object, both to identify it uniquely and to fetch it very quickly from massive unstructured data volumes.
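The flat namespace and per-object metadata described above can be sketched with a small in-memory model. This is an illustration only: `FlatObjectStore`, its `put`, `get` and `find` methods, and the sample keys and metadata fields are hypothetical names, not the S3 API or any vendor's interface.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class StoredObject:
    body: bytes
    metadata: Dict[str, str] = field(default_factory=dict)


class FlatObjectStore:
    """Hypothetical in-memory stand-in for a bucket: one flat key -> object map."""

    def __init__(self) -> None:
        self._objects: Dict[str, StoredObject] = {}

    def put(self, key: str, body: bytes, **metadata: str) -> None:
        # A single map insert: no directory tree to walk, no file lock to take.
        # Writing to an existing key replaces the whole object.
        self._objects[key] = StoredObject(body, dict(metadata))

    def get(self, key: str) -> StoredObject:
        return self._objects[key]

    def find(self, **wanted: str) -> List[str]:
        """Return keys whose metadata contains every wanted key/value pair."""
        # Linear scan for clarity; a real system would index its metadata.
        return sorted(
            key for key, obj in self._objects.items()
            if all(obj.metadata.get(k) == v for k, v in wanted.items())
        )


store = FlatObjectStore()
# The "/" in a key is just a character; it does not create directories.
store.put("scans/2020/ct-001.dcm", b"...", modality="CT", patient="p-17")
store.put("scans/2020/mr-002.dcm", b"...", modality="MR", patient="p-17")
store.put("video/promo.mov", b"...", codec="prores")

ct_keys = store.find(modality="CT", patient="p-17")
```

Because `put` replaces an object in full, the model also hints at why workloads that frequently modify data in place are a poor fit for object storage.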

The number of applications that require massive global scalability or leverage custom metadata remains small in the on-premises world. This in turn makes the need for object storage systems relatively small compared with distributed file systems. Distributed file systems now support the S3 protocol and software-based protection from disk and hardware failures using erasure coding. Consequently, distributed file systems address two of the four reasons that object storage products were originally developed for (S3-style HTTP access and low-cost, erasure-coded storage), leaving global scalability and custom metadata as the differentiators.

Infrastructure and operations (I&O) leaders should pay careful attention to the capabilities they need from a distributed storage system and carefully assess which type of storage, distributed file or object, is best suited to a given application.

They should evaluate:

- Distributed file systems, rather than object storage products, for workloads that frequently modify the underlying objects

- Object storage products for use cases that require massive scaling across geographical locations, and for applications leveraging custom metadata describing the objects

I&O leaders who need highly scalable, self-healing and cost-effective storage platforms for large amounts of unstructured data should evaluate the suitability of object storage platforms. They should use this research to identify appropriate products for their use cases.

Analysis

Critical Capabilities Use-Case Graphics

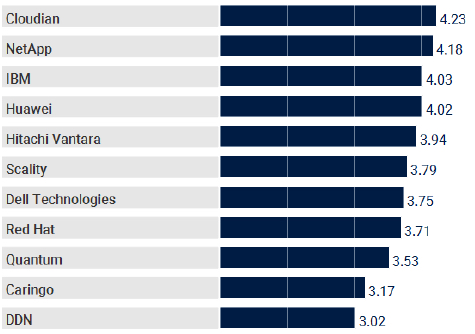

Vendors’ Product Scores for Analytics Use Case

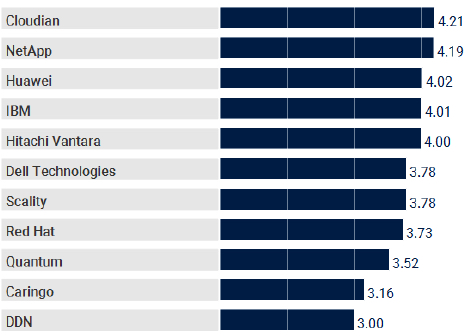

Vendors’ Product Scores for Archiving Use Case

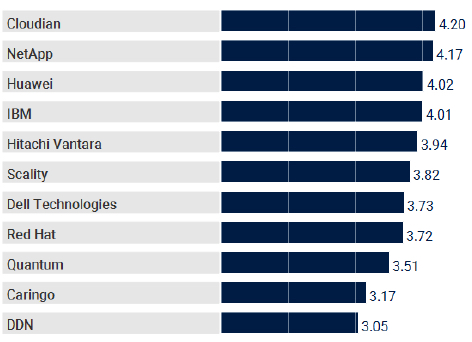

Vendors’ Product Scores for Backup Use Case

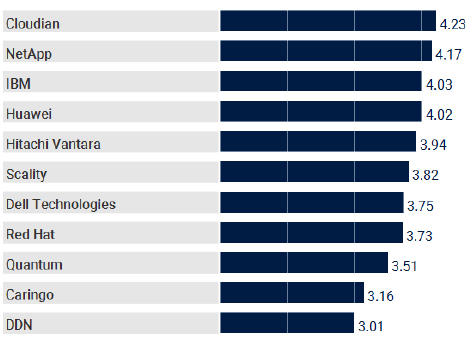

Vendors’ Product Scores for Cloud Storage Use Case

Vendors’ Product Scores for Hybrid Cloud Storage Use Case

