Vast Data LightSpeed Storage Platform To Power Next Decade of Machine Intelligence

Combines disaggregated, web-scale architecture with hardware, Nvidia GPU application acceleration and reference architectures to democratize flash for AI computing.

This is a Press Release edited by StorageNewsletter.com on September 21, 2020 at 2:18 pm

Vast Data Ltd. announced the availability of its next-gen storage architecture, designed to eliminate the compromises between storage scale, performance and efficiency and to help organizations harness the power of AI as they evolve their data agenda in the age of machine intelligence.

Dubbed LightSpeed, this concept combines the light touch of the company’s NAS appliance experience with performance for Nvidia Corp.’s GPU-based and AI processor-based computing, taking the guesswork and configuration out of scaling up AI infrastructure.

LightSpeed is both a product and a concept, designed to address all facets of storage deployment that system architects face when designing next-gen AI infrastructure, including:

- Flash at HDD economics to eliminate tiering: The firm’s Disaggregated, Shared Everything (DASE) storage architecture was invented to break down the barriers to performance, capacity and scale – where flash economics make it finally possible to afford flash for all of a customer’s training data. By eliminating storage tiering, the exabyte-scale DASE cluster architecture ensures that every I/O request is serviced in real-time, thereby eliminating the storage bottleneck for training processors.

- 2X faster AI storage HW platform: The LightSpeed NVMe storage enclosure delivers 2X the AI throughput of previous generations of hardware. This makes the system well suited to read-heavy I/O workloads like those found within deep learning frameworks, such as computer vision (PyTorch, TensorFlow, etc.), Natural Language Processing (BERT), big data (Apache Spark), Genomics (Nvidia Clara Parabricks) and more. LightSpeed enclosures help customers increase their computing power with less hardware.

-

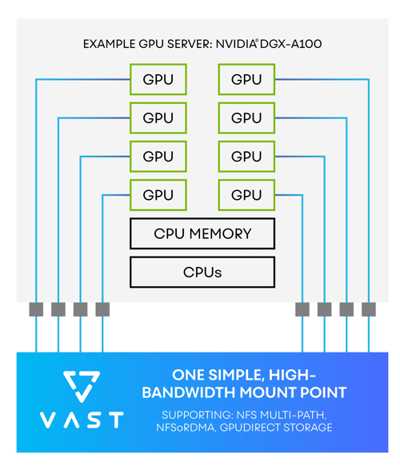

NFS support for Nvidia GPUDirect storage: The company supports performance with Nvidia GPUDirect Storage technology, currently in beta. GPUDirect enables customers running Nvidia GPUs to accelerate access to data and avoid extra data copies between storage and the GPU by avoiding the CPU and CPU memory altogether. In initial testing, the company demonstrated over 90GB/s of peak read throughput via a single Nvidia DGX-2 client, nearly 3x the performance of the firm’s NFS-over-RDMA and nearly 50x the performance of standard TCP-based NFS. By eliminating all bottlenecks, the 2 companies have paved the way for GPU-efficient computing using NFS file system.


In addition, the company also announced LightSpeed cluster configurations that make it simple for customers and partners to balance their GPU cluster scale with capacity and performance-rich storage:

- The Line: 2 x LightSpeed nodes pair 80GB/s of storage bandwidth with up to 32 GPUs

- The Pentagon: 5 x LightSpeed nodes pair 200GB/s of storage bandwidth with up to 80 GPUs

- The Decagon: 10 x LightSpeed nodes pair 400GB/s of storage bandwidth with up to 160 GPUs
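The three configurations scale linearly. As a rough illustration only, the short Python sketch below works out the ratios implied by the published figures. The dictionary layout and the helper names `per_unit` and `smallest_config_for` are hypothetical and not part of any Vast Data tooling; the bandwidth and GPU counts are the only values taken from the announcement.

```python
# Figures copied from the three announced LightSpeed cluster configurations.
# The data layout and helper names are illustrative assumptions.
CONFIGS = {
    "Line": {"nodes": 2, "bandwidth_gbs": 80, "max_gpus": 32},
    "Pentagon": {"nodes": 5, "bandwidth_gbs": 200, "max_gpus": 80},
    "Decagon": {"nodes": 10, "bandwidth_gbs": 400, "max_gpus": 160},
}


def per_unit(config):
    """Return (GB/s per node, GPUs per node, GB/s per GPU) for one configuration."""
    gbs_per_node = config["bandwidth_gbs"] / config["nodes"]
    gpus_per_node = config["max_gpus"] / config["nodes"]
    gbs_per_gpu = config["bandwidth_gbs"] / config["max_gpus"]
    return gbs_per_node, gpus_per_node, gbs_per_gpu


def smallest_config_for(gpu_count):
    """Return the name of the smallest listed configuration that covers
    gpu_count GPUs, or None if the count exceeds the largest one."""
    for name, config in sorted(CONFIGS.items(), key=lambda kv: kv[1]["max_gpus"]):
        if gpu_count <= config["max_gpus"]:
            return name
    return None


if __name__ == "__main__":
    for name, config in CONFIGS.items():
        node_bw, node_gpus, gpu_bw = per_unit(config)
        print(f"{name}: {node_bw} GB/s per node, "
              f"{node_gpus} GPUs per node, {gpu_bw} GB/s per GPU")
```

On these numbers, every configuration works out to the same ratios: 40GB/s and 16 GPUs per LightSpeed node, or 2.5GB/s of storage bandwidth per GPU when the cluster is fully populated.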

“Today, Vast is throwing down the gauntlet to declare that customers no longer need to choose between simplicity, speed and cost when shaping their AI infrastructure strategy,” said Jeff Denworth, co-founder and CMO. “Let the data scientists dance with their data by always feeding their AI systems with training and inference data in real-time. The LightSpeed concept is simple: no HPC storage muss, no budgetary fuss, and performance that is only limited by your ambition.”

“At AI scale, efficiency is essential to application performance, which is why it’s critical to avoid any unnecessary data copies between compute and storage systems,” said Charlie Boyle, VP and GM, DGX systems, Nvidia. “Vast Data’s integration of GPUDirect Storage in the new LightSpeed platform delivers a distributed NFS storage appliance that supports the intense demands of AI workloads.”

Customer adoption:

On the heels of its growing collaboration with Nvidia, the company is being selected by a variety of industry customers who are adopting AI to modernize their application environment, including LightSpeed customer wins at the Athinoula A. Martinos Center for Biomedical Imaging and with the US Department of Energy. With the firm’s flash economics, the Martinos Center has started a strategic shift to all-flash universal storage to help accelerate image analysis across massive troves of MRI and PET scan data, while simultaneously eliminating the uptime challenges previously faced with HDD-based systems.

“Vast delivered an all-flash solution at a cost that not only allowed us to upgrade to all-flash and eliminate our storage tiers, but also saved us enough to pay for more GPUs to accelerate our research. This combination has enabled us to explore new deep-learning techniques that have unlocked invaluable insights in image reconstruction, image analysis, and image parcellation both today and for years to come,” said Bruce Rosen, executive director, Martinos Center.

Resources:

LightSpeed e-Book

Blog: The Philosophy of LightSpeed

Blog: Mass General’s Martinos Center Adopts AI for COVID, Radiology Research
