81% of Users With More Than One Storage Vendor

DataCore 8th consecutive storage market survey

This is a Press Release edited by StorageNewsletter.com on May 26, 2020 at 2:21 pm

Storage Diversity Seen as Imperative to IT Modernization Efforts

Findings from DataCore Software Corp.'s 8th Consecutive Survey on the Storage Market

Summary of Findings

• 73% of respondents have more than one data center and 81% have more than one storage vendor. This heterogeneous storage infrastructure is a fact of life in most IT departments – and the problems that come with this type of diversity are numerous.

• The top 3 capabilities that respondents want from their storage infrastructure – but feel they are not currently receiving – are HA, BC / DR, and capacity expansion without disruption.

• Limited flexibility is the top reported technology disappointment or false start that respondents have encountered in their storage infrastructures.

• Block storage is a principal investment priority in terms of powering high-performance, mission-critical applications such as databases and other enterprise applications, as well as serving as primary storage.

• Roughly half of the market is looking to SDS or HCI to satisfy their primary and secondary storage requirements for the future – reflecting the increasing movement toward software-defined infrastructure to address many of the industry’s pain points.

• In terms of deployments, 64% of respondents fell within the range of “strongly considering” SDS to “standardizing on it.” The top business drivers for implementing SDS are to future-proof infrastructure, to simplify management of different types of storage and to extend the life of existing storage assets.

• The adoption rate of hyperconvergence is fairly similar to SDS, with 60% of respondents falling within the range of strongly considering it to standardizing on it. HCI has seen broad adoption in recent years, but the numbers show that SDS has caught up. IT increasingly realizes that HCI is primarily a subset of SDS – another way to implement it.

• Containers show strong growth, with 42% of respondents deploying containers in some manner, a high percentage for a relatively new technology. However, those who are deploying containers want better storage tools, because their top surprises include lack of sufficient storage tools or data management services.

• 86% of respondents agreed that predictive analytics is important in simplifying and automating storage management.

Introduction

The survey explored the impact of SDS on organizations across the globe.

The need for speed, agility, and efficiency is pushing demand for modern data center technologies (and architectures) that can lower costs while providing new levels of scale, quality, and operational efficiency. However, the storage industry is still in the midst of a cost and complexity crisis, primarily due to the pace of change and innovation in enterprise computing during the last decade – which has created enormous pressure on the underlying storage infrastructure.

IDC research found that businesses are grappling with 50% data growth each year. Organizations are managing an average of 9.7PB of data in 2018 vs. 1.45PB in 2016. This increased pressure on IT has amplified complexity, as well – 66% of IT decision makers surveyed by ESG say IT is more complex than it was just two years ago. To keep up with these escalating demands, IT teams have rapidly expanded storage capacity, added expensive new storage arrays, and deployed a range of disparate point solutions. The resulting chaotic storage layer continues to be the root of many IT challenges, including the inability to keep up with rapid data growth rates, vendor lock-in, lack of interoperability and increasing hardware costs.

In addition, with the Covid-19 pandemic causing hardware shortages and reduced budgets putting certain IT projects on hold, companies have no choice but to find ways to get more out of their existing storage resources.

As a result, IT managers are calling for the modernization of IT infrastructure – fueling an industry-wide shift toward software-defined infrastructure to help break silos and hardware dependencies, enabling storage to be smarter, more effective, and easier to manage.

This shift is also due in part to IT leaders’ realization that it’s the data and data services that matter, not the actual storage system: the value lies in the software that controls where data is placed, not in the type of hardware or the media. At the same time, many companies are seeing the power of consolidating storage under a single, unified, software-defined platform to simplify and optimize primary, secondary, and archive storage tiers, managed by modern technologies such as predictive analytics/AI.

The findings of the survey touch on these issues and explore how the industry views its current state and plans for the future.

Current State of Storage Industry

The survey sought to ascertain the storage industry’s needs, technology maturity, 2020 budget planning, current and future deployment plans and more. The survey polled 550 IT professionals across a range of markets to distill the expectations and experiences of those who are currently using or evaluating a variety of storage infrastructures, including SDS, HCI, object, file and cloud storage, to solve critical storage challenges.

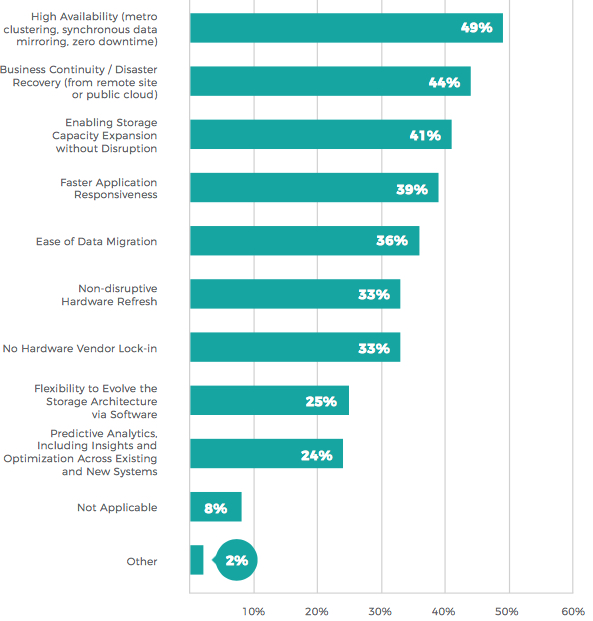

Respondents were asked: “What are the primary capabilities that you would like from your storage infrastructure (that you are not getting)?”

The answers were:

Let’s take a look at the breakdown of what the market wishes their storage infrastructure would provide.

The top 3 capabilities (HA, BC/DR and enabling storage capacity expansion without disruption) were the same as those chosen by respondents in our 7th consecutive market survey. Furthermore, the 6th market survey asked a similar question, “What are the primary capabilities that you would like from your storage infrastructure when virtualizing storage?” In that case as well, the majority of respondents identified BC through HA (metro clustering, synchronous mirroring) as their number one concern, enabling storage capacity expansion without disruption as second, and DR as a close third.

So, the overarching goals for storage infrastructure (minimizing disruption through HA, BC and DR, and enabling storage capacity expansion without disruption) remain the same year after year.

According to ESG, integrating and optimizing new infrastructure technologies while managing existing investments is a perpetual burden. “Organizations, therefore, have 2 choices: either increase their personnel and budgets enough to survive the evolution with just traditional tools, or redirect those people and budgets toward maximizing IT infrastructure flexibility, including abstracting from their applications any technology changes that they make to the infrastructure. Choosing that second option means IT can also choose the HA and DR architectures they want, the vendors they want, and the best storage according to price/performance … without the costs, complexity, and effort associated with data migrations.”

As IT architects and decision-makers look for ways to effectively address these challenges, software-defined storage is increasingly being recognized as a viable solution for both the short and long term.

HA with Zero Downtime

When problems prevent applications from reaching data at one location, capabilities such as synchronous data mirroring provided by DataCore SDS are critical. Failover to the mirrored copy occurs instantaneously and automatically without disruption, scripting or manual intervention. Similarly, built-in automation takes care of resynchronization and failback to normal operations after the original cause of the outage is resolved.

This software-defined methodology brings a common, integrated approach to continuous availability across the variety of applications, OSs, hypervisors and storage hardware in place today, and those to come in the future. Using existing and/or different storage devices on each side allows companies to avoid the expense of buying additional storage hardware. This ultimately delivers “zero downtime, zero touch” failover to maximize BC.
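To make the failover and failback sequence concrete, here is a minimal Python sketch assuming a toy in-memory model. `Node` and `MirroredVolume` are hypothetical names, not DataCore APIs, and real synchronous mirroring operates on block devices across a network. The sketch shows the three behaviors described above: a write is acknowledged only after every online copy holds it, reads fall through to the surviving copy when one side goes offline, and on recovery only the blocks the returning side missed are copied back.

```python
class Node:
    """One side of the mirror: an in-memory block store that can go offline."""

    def __init__(self, name):
        self.name = name
        self.blocks = {}
        self.online = True


class MirroredVolume:
    """Hypothetical two-way synchronous mirror with automatic failover."""

    def __init__(self, primary, secondary):
        self.nodes = [primary, secondary]
        # Blocks each node missed while offline, kept for resynchronization.
        self.missed = {primary.name: set(), secondary.name: set()}

    def write(self, block, data):
        # Synchronous: return only after every online copy has the data.
        if not any(n.online for n in self.nodes):
            raise IOError("no copy of the volume is available")
        for node in self.nodes:
            if node.online:
                node.blocks[block] = data
            else:
                self.missed[node.name].add(block)

    def read(self, block):
        # Failover: serve from whichever copy is still online, no scripting.
        for node in self.nodes:
            if node.online:
                return node.blocks.get(block)
        raise IOError("no copy of the volume is available")

    def fail(self, node):
        # Simulate an outage at one site.
        node.online = False

    def recover(self, node):
        # Failback: copy only the blocks this node missed, then rejoin.
        source = next(n for n in self.nodes if n is not node and n.online)
        for block in self.missed[node.name]:
            node.blocks[block] = source.blocks[block]
        self.missed[node.name].clear()
        node.online = True
```

Tracking missed blocks, rather than recopying the whole volume, is what keeps resynchronization short after an outage. A production system would also persist that tracking and handle both sides failing in turn, which this sketch does not attempt.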

BC and DR Planning in Today’s Complex Storage Environment

Previously, one would standardize on a single all-inclusive model of storage. But these days such singularity is neither practical nor affordable, resulting in multiple diverse systems that make up the storage infrastructure.

The most viable alternative separates BC/DR functions from the discrete storage systems. This is accomplished by up-leveling the services responsible for data replication, snapshots, CDP and rollbacks. Rather than embedding them inside each unit, the functions run outside in a device-independent layer. With a uniform control plane for BC/DR data services in place, the location, topology and type of storage become interchangeable. In other words, there’s very little change to BC/DR plans when any of these parameters change or expand.

The clear separation of data services from where the data is stored results in maximum flexibility to adapt proven downtime avoidance and recovery methods to new scenarios. This helps to reduce the cost and complexity of BC/DR plans, and achieve high levels of application availability while accelerating the pace of IT modernization.
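Running CDP and rollback as a device-independent service can be illustrated with a small write journal. The Python sketch below assumes that every write passes through one layer that timestamps it; `CdpJournal`, `record` and `image_at` are hypothetical names. The point it illustrates is that rebuilding the volume as of any earlier moment depends only on the journal, not on which array or media holds the live data.

```python
import bisect


class CdpJournal:
    """Hypothetical continuous data protection journal kept above the storage."""

    def __init__(self):
        self.times = []    # write timestamps, kept in non-decreasing order
        self.entries = []  # (block, data) for each write, same order as times

    def record(self, ts, block, data):
        if self.times and ts < self.times[-1]:
            raise ValueError("timestamps must not go backwards")
        self.times.append(ts)
        self.entries.append((block, data))

    def image_at(self, ts):
        """Rebuild the volume contents as they were at time ts (rollback)."""
        cutoff = bisect.bisect_right(self.times, ts)  # writes at or before ts
        image = {}
        for block, data in self.entries[:cutoff]:
            image[block] = data  # later writes to a block overwrite earlier ones
        return image
```

Because the journal is independent of the device, swapping the array underneath changes nothing about how a point-in-time recovery is performed.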

Enabling Storage Capacity Expansion Without Disruption

Many forward-looking IT initiatives stop dead in their tracks due to the potential for disruption that they bring. The technologies involved are often simply too different, with very diverse methods of operation.

This is especially true when introducing new options to the storage infrastructure. One would think that all of the standardization in network protocols and I/O interfaces would make the addition of next-gen technology plug-and-play, and to a degree it does, but only from the standpoint of physical connectivity. It’s another matter altogether when it comes to administrative functions, where the procedures for provisioning capacity, protecting data and monitoring behavior vary drastically from one model to another, even among those from the same manufacturer. Blame it on separate development teams, OEM deals, or the desire to innovate – the result is the same: broken processes and transition headaches.

However, these difficulties may be sidestepped with a universal control plane that treats new storage options as interchangeable components under a common set of administrative services. The user employs the same familiar operations to allocate disk space, safeguard data and track the overall health and performance of the storage resources – varied as they may be.

ESG states that SDS alleviates problems associated with perpetual migrations from one technology or vendor to the next. SDS is optimized to deliver infrastructure flexibility by abstracting the underlying storage technology from the application layer.
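A common way to build such a universal control plane is an adapter layer: each device type is wrapped behind the same small interface, and administrative operations are written once against that interface. The Python sketch below assumes two hypothetical backends, a flash array that allocates arbitrary sizes and a JBOD shelf that can only hand out whole disks. `StorageBackend`, `FlashArray`, `JbodShelf` and `ControlPlane` are illustrative names, not any vendor's API.

```python
from abc import ABC, abstractmethod


class StorageBackend(ABC):
    """The uniform operations the control plane relies on for every device."""

    @abstractmethod
    def provision(self, name: str, size_gb: int) -> None:
        ...

    @abstractmethod
    def free_gb(self) -> int:
        ...


class FlashArray(StorageBackend):
    """Hypothetical array that can allocate volumes of any size."""

    def __init__(self, capacity_gb: int):
        self.capacity_gb = capacity_gb
        self.volumes = {}

    def provision(self, name, size_gb):
        if size_gb > self.free_gb():
            raise ValueError("array is full")
        self.volumes[name] = size_gb

    def free_gb(self):
        return self.capacity_gb - sum(self.volumes.values())


class JbodShelf(StorageBackend):
    """Hypothetical JBOD shelf that can only hand out whole disks."""

    def __init__(self, disks: int, disk_gb: int):
        self.free_disks = disks
        self.disk_gb = disk_gb
        self.volumes = {}

    def provision(self, name, size_gb):
        needed = -(-size_gb // self.disk_gb)  # ceiling division
        if needed > self.free_disks:
            raise ValueError("not enough free disks")
        self.free_disks -= needed
        self.volumes[name] = needed

    def free_gb(self):
        return self.free_disks * self.disk_gb


class ControlPlane:
    """Pools heterogeneous backends behind one set of administrative calls."""

    def __init__(self, backends):
        self.backends = list(backends)
        self.placement = {}  # volume name -> backend holding it

    def allocate(self, name, size_gb):
        # Same call for every vendor: place on the backend with most free space.
        target = max(self.backends, key=lambda b: b.free_gb())
        if target.free_gb() < size_gb:
            raise ValueError("no single backend has enough free space")
        target.provision(name, size_gb)
        self.placement[name] = target
        return target

    def total_free_gb(self):
        return sum(b.free_gb() for b in self.backends)
```

Adding a third device type means writing one more adapter class; `ControlPlane.allocate` and every process built on it stay unchanged.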

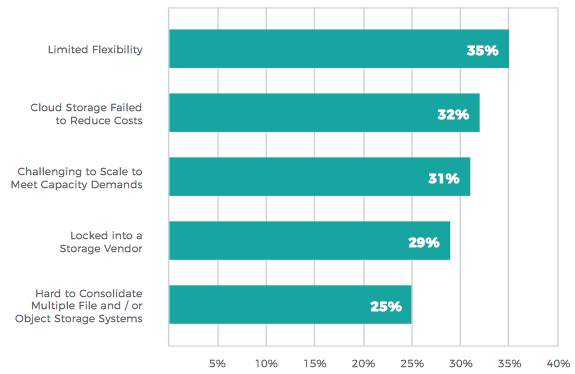

Along those lines, respondents were asked: “What technology disappointments or false starts have you encountered in your storage infrastructure?”

The top answers were:

IT teams have been rapidly expanding storage capacity and deploying a range of disparate point solutions to keep up with the pace of change. For example, they may have purchased a new storage solution to meet the needs of a specific project, but now find themselves locked in to a storage vendor as a result. This vendor lock-in results in limited flexibility, difficulty scaling to meet capacity demands, systems that are hard to consolidate, and more.

It has become clear that a more fundamental solution is required to address the cost and complexity issues of the storage infrastructure. Analysts again point to SDS as a potential answer to these disappointments.

IDC noted that businesses need to leverage newer capabilities enabled by SDS that help them eliminate management complexities, overcome data fragmentation and growth challenges, and become a data-driven organization to propel innovation.

Gartner also noted that “IT leaders are counting on SDS to resolve many upcoming challenges.”

Gartner made several additional notable points in that report, including:

• SDS supports an expanding number of use cases and protocols.

• The 3 main benefits of SDS are agility, reliability and scalability.

• SDS can deliver performant storage solutions and reduce TCO.

• Because SDS is compatible with most industry-standard hardware, infrastructure and operations (I&O) leaders can utilize the latest storage media and quickly adopt high-performance protocols.

• By decoupling the software from the industry-standard hardware, I&O leaders can maintain hardware for a longer period without being forced to upgrade and migrate, while avoiding hardware vendor lock-in and forklift upgrades.

To further explore some of the potential causes of this overwhelming dissatisfaction, let’s review the additional data below.

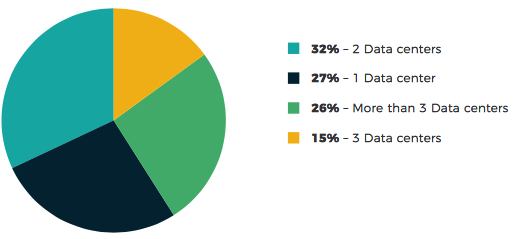

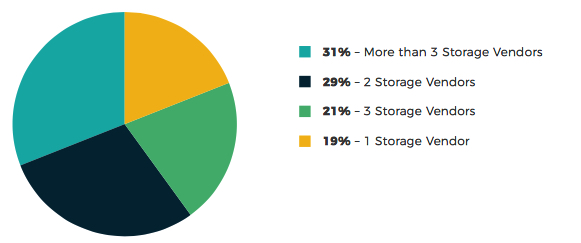

To begin with, respondents were asked, “What is your total number of data centers (not including HA/DR sites)?” and, “How many total storage vendors do you currently use to support your infrastructure, across all data centers, including block, file and object?”

How many data centers do you have?

How many storage vendors do you have?

In aggregate, 73% of respondents have more than one data center and 81% have more than one storage vendor. This heterogeneous storage infrastructure is a fact of life in most IT departments – and the problems that come with this type of diversity are numerous.

First, many of these devices are incompatible, even from the same vendor, creating silos of capacity that can’t be shared. In addition, they have varying levels of performance, availability and services, making it a challenge to consistently meet the needs of mission-critical applications. Lastly, all these differences mean that a necessarily heterogeneous storage environment takes more time to manage, inflates costs, and makes it more difficult to respond to the needs of the business.

Furthermore, these discrete storage systems are often used separately for frequently accessed data that requires high performance, and for infrequently used data that requires lower-cost storage. For most organizations, this results in higher management overhead, difficulties migrating data on a regular basis, significant inefficiencies, and more.

The separation is often a result of the storage industry’s ingrained hardware-centric view: AFAs, hybrid flash arrays, JBODs and other terms are used to describe the type of media used by the system. Instead, technologies such as SDS can abstract multiple types of storage, vendors, protocols, and network interfaces so storage is … just storage.

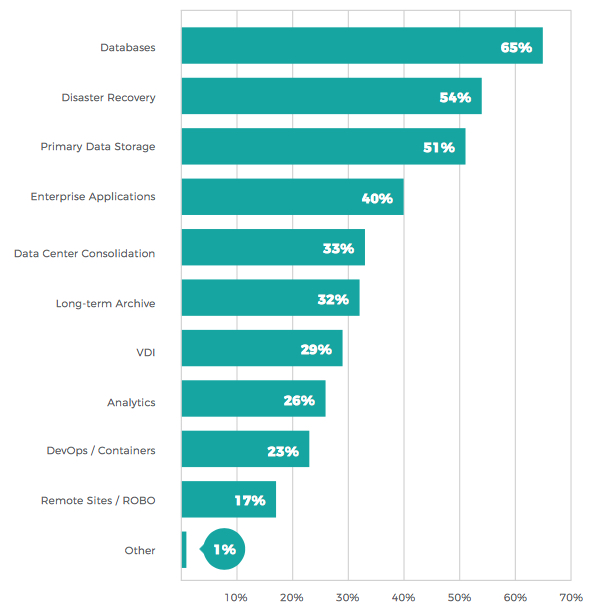

What use cases/applications are priorities for your investments in storage technologies?

The data illustrate that block storage is still a principal investment priority in terms of powering high-performance, mission-critical applications such as databases and other enterprise applications, as well as serving as primary storage. Elements such as DR, backup to the cloud, data center consolidation, long-term archive, etc., which often include archival systems such as file and object storage, are also a critical investment priority because of the sheer volume of data involved.

This diversity creates many of the challenges and disappointments introduced above and further highlights the need for unification and consolidation of storage under a universal control plane. Having a single solution to consolidate all of the storage resources, regardless of which of these different use cases is applied, will be necessary for implementing a modern software-defined infrastructure that will make the best use of existing resources while future-proofing the IT environment.

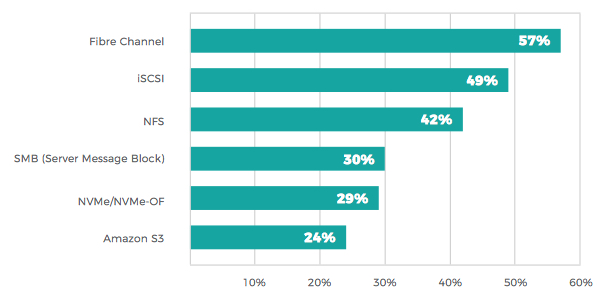

Which of the technologies listed below do your current storage solutions leverage?

Which of the technologies listed below will you leverage in your future storage solutions?

There has been a lot of talk in the market about FC vs. iSCSI. As this data shows, there is clearly still an overlap between them, although FC is the more dominant of the two. Because this strong overlap exists, IT departments need a technology solution that handles both.

The difference between NFS and SMB may be loosely correlated to the popularity of Linux compared to Microsoft Windows. The reliance on S3 shows how many are using the cloud for long-term archival. The NVMe/NVMe-oF numbers are also interesting in terms of the speed of adoption of new technologies. NVMe/NVMe-oF has now been on the market for some time and is available in most newer servers. However, it still accounts for less than a third of the technologies being used in respondents’ current storage solutions. The majority who expect to leverage it will face the same challenges of integrating new technologies and migrating data.

For many of the reasons outlined above, SDS has been proven in recent years to help IT teams adopt technology faster.

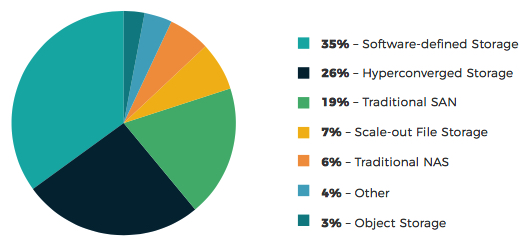

What technology will be your primary storage technology for the future?

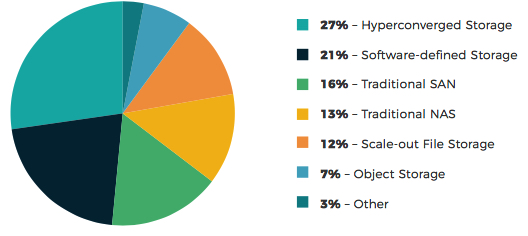

What technology will be your secondary storage technology for the future?

In aggregate, roughly half of the market is looking to SDS or hyperconverged storage as their primary and secondary storage technology of choice for the future. This strongly supports the conclusion that the industry is increasingly moving toward software-defined infrastructure to address many of its current pain points, and to continue to shape the modern data center.

IDC believes that organizations that do not leverage proven technologies such as SDS to evolve their data centers risk losing their competitive edge.

Evaluator Group notes: “Primary storage continues to evolve with improvements to performance, data management and protection. Over the next 12 months we expect significant changes with NVMe adoption, new telemetry for data management and adoption of technologies to integrate with the cloud.” Regarding secondary storage, it notes: “As data expands and is used in more places, the approaches and management need to change. NAS, object, scale-out file, along with SDS, are options to address this growth and scale.”

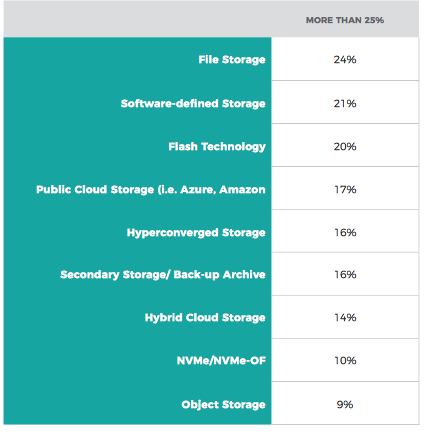

What percentage of the 2020 storage budget is allotted to each of the technologies listed?

File storage leads in the “more than 25%” category at 24%, likely due to the sheer volume needed to support unstructured data, with SDS right behind at 21%. This figure is in line with our previous survey showing that 22% of respondents were planning to allocate more than 25% of their budget to SDS. It’s important to note that file storage services also fit within the SDS umbrella.

Gartner states that end users have expressed increased interest in SDS solutions and vendors, especially in the areas of HCI, distributed file systems and object storage.

Moving forward, IDC notes that “the influx of new storage technologies will help broaden the scope of legacy workloads that software-defined storage will be able to handle at scale, resulting in very strong revenue growth for the sector as a whole …”

As this growth continues, the common belief that there is a need to migrate from one storage platform to another, such as file to object, will be dispelled. Users are starting to realize that these are just artificial constructs for how to interface with the data. As data becomes increasingly dynamic and the relative proportion of hot, warm, and cooler data changes over time, the temperatures/tier classifications will eventually become “shades of gray.”

Instead, SDS will unify the diverse storage environments and make intelligent decisions on each piece of data, placing it in the tier where it belongs given its performance and cost requirements, and optimizing performance, utilization, and cost dynamically.

The benefits of this approach include:

• Freedom: Abstraction from hardware, no lock-in

• Flexibility: Evolve the IT architecture via software

• Integration: No silos, maximum utilization

• Availability: Zero-downtime architectures

• Intelligence: Optimization across existing and new systems

SDS Observations

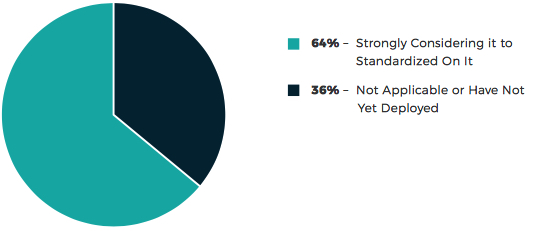

The report also explores the market’s receptivity to SDS by asking, “Where are you in your deployment of SDS?” 64% of respondents fell within the range of “strongly considering it” to “standardizing on it,” while only 36% were either not considering it or felt the question was not applicable to them.

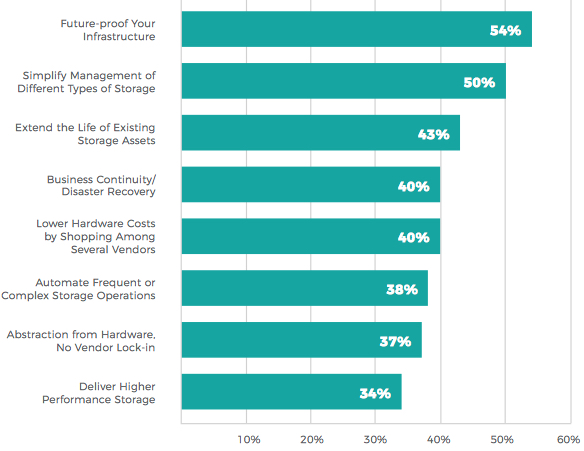

When asked “What are the primary business drivers for implementing SDS?”, respondents’ top answer was to future-proof infrastructure, selected by more than half (54%). Additionally, respondents felt that SDS was important for simplifying management of different types of storage (50%) and for extending the life of existing storage assets (43%).

Additional business drivers identified were:

In the 7th consecutive market survey, the top business drivers identified were to automate frequent or complex storage operations (60%), to simplify management of different types of storage (56%) and to extend the life of existing storage assets (56%). These business drivers share many similarities with this year’s survey findings, except that future-proofing has risen back to the top with the advent of new technologies.

In the survey conducted in 2017, SDS business drivers showed the same top results as this year’s report.

These results are similarly in line with the potential of SDS to address the multiple challenges the industry has experienced to date, and illustrate the market’s recognition of the economic advantages of SDS and its power to maximize IT infrastructure performance, availability, and utilization. IDC forecasts the SDS market to grow at 15% CAGR to $21.3 billion by 2022 globally.

As enterprises continue to adopt SDS, they also realize that they can use existing storage investments longer than expected, and if they invest in something new, there is no fear it won’t work. In short, there is no need to toss out what you have or dread the process of adding something new.

Hyperconverged Infrastructure in Transition

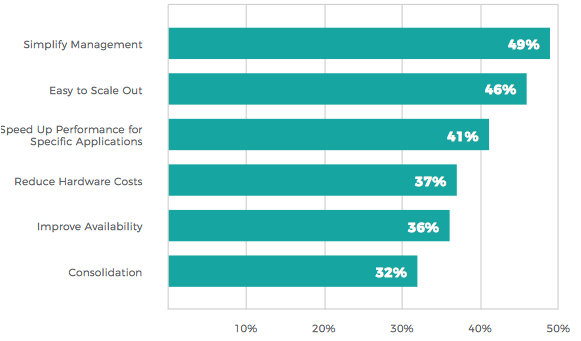

The study also explored where the market stands on HCI by asking, “Where are you in your deployment of hyperconverged storage?”

The adoption rate is fairly similar to SDS, with 60% of respondents falling within the range of strongly considering it to standardizing on it. HCI has seen broad adoption in recent years; however, the survey results show that IT has realized HCI is primarily a subset of SDS, or just another way of implementing it. As such, the true value in the technology lies in SDS. This is in line with the points expressed above by industry analysts that HCI is not always a separate decision from software-defined storage; it’s often akin to a packaging decision.

When asked “Why are you currently deploying hyperconverged storage?” the top responses were to simplify management (49%); easy to scale out (46%); and to speed up performance for specific applications (41%). In the 7th market survey, speed up performance for specific applications (41%) and simplify management (38%) were also top factors.

Simplicity is still a key reason to deploy HCI, year after year. This squares with analyst observations as well, including those of 451 Research, which stated: “…of organizations deploying HCI, 79% say its role is to simplify infrastructure acquisition, management and maintenance.”

Emerging Technology Trends

1. Containers

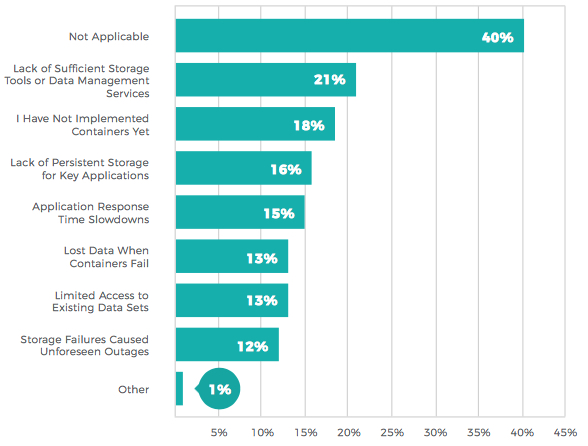

“If you are deploying containers, which of the following surprises/unforeseen actions did you encounter after implementing?”

It can be inferred from the data above that 42% of respondents are deploying containers in some manner, a high percentage for a relatively new technology (40% responded that it was not applicable and 18% stated that they have not implemented containers yet). However, those who are deploying containers want better storage tools, as the top surprises reported include lack of sufficient storage tools or data management services at 21%. This is clearly an unmet need, as 19% answered in the same manner in our previous market report. In addition, 16% answered that lack of persistent storage for key applications was a surprise/unforeseen action in their container deployments, nearly matching the 18% of respondents who noted this in the 7th consecutive market survey.

Containers are also applications that have their own unique needs. From a storage perspective, this technology should not be treated in isolation. As with many of the other technologies previously discussed, the best approach is to have a single way to address it as yet another important consumer of storage.

2. Predictive analytics

There has been growing excitement over the last few years about the application of ML and AI in predictive analytics.

We asked respondents, “How important do you feel predictive analytics is in simplifying and automating storage management?” An overwhelming 86% agreed that it was important, with only the remaining 14% feeling that this technology was not so important or not at all important.

Forrester agrees. Its recent 2020 Predictions Report noted that “2020 will be the year when companies become laser-focused on AI value, accelerate adoption, learn through mistakes, overcome data issues, and embrace new talent realities.”

AI will be increasingly used in the storage industry to make intelligent decisions about data placement based on information such as telemetry data and metadata. These predictive analytics will deliver actionable insights to avoid problems and provide guidance for proactive optimizations, and will be able to determine where data should be stored and how much performance to give it. This will also help offload the administrative and manual activities associated with data placement and result in better capacity planning.

For example, automated storage tiering techniques will migrate data in the background across arrays and even into the cloud, using access frequency to choose whether high-performance storage or lower-cost archival storage is appropriate, and using urgency, resiliency, aging and location to determine the right time to migrate between them. For enterprises, this will save both time and money.
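A simplified tiering policy of this kind can be sketched in Python, assuming only two of the factors named above: access frequency and aging. The `Extent` record, the two tier names and the threshold values are hypothetical placeholders; a real system would also weigh urgency, resiliency and location, and might learn its thresholds from telemetry rather than fixing them.

```python
from dataclasses import dataclass

# Hypothetical thresholds; a real policy would be tuned or learned.
PROMOTE_MIN_ACCESSES = 100  # accesses in the last day that justify fast storage
DEMOTE_IDLE_HOURS = 72      # idle time after which data moves to archive


@dataclass
class Extent:
    name: str
    tier: str                 # "hot" (high-performance) or "cold" (archive/cloud)
    accesses_last_day: int
    hours_since_access: float


def plan_moves(extents):
    """Return (name, from_tier, to_tier) moves for a background mover."""
    moves = []
    for e in extents:
        if e.tier == "cold" and e.accesses_last_day >= PROMOTE_MIN_ACCESSES:
            moves.append((e.name, "cold", "hot"))  # heating up: promote
        elif e.tier == "hot" and e.hours_since_access >= DEMOTE_IDLE_HOURS:
            moves.append((e.name, "hot", "cold"))  # aged out: demote
    return moves
```

Separating the decision (`plan_moves`) from the data mover lets the mover run in the background and throttle itself, while the policy can be swapped for a smarter one without touching the migration code.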

Furthermore, using AI and ML to analyze telemetry data will enable the detection of early warning signs of potential issues – assessing their relative severity and prescribing steps to prevent them or mitigate their impact.
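One basic form of telemetry-driven early warning is a capacity forecast. The Python sketch below is an illustration, not how any particular product works: it fits a least-squares line to daily used-capacity samples, extrapolates to the day the pool would fill, and maps the remaining days to a severity level. The function names and the 30-day and 7-day thresholds are hypothetical.

```python
def days_until_full(daily_used_tb, capacity_tb):
    """Fit a least-squares line to daily usage and extrapolate to capacity.

    Returns days remaining from the last sample, or None if usage is flat
    or shrinking (no projected exhaustion).
    """
    n = len(daily_used_tb)
    if n < 2:
        raise ValueError("need at least two samples")
    xs = range(n)
    mean_x = (n - 1) / 2
    mean_y = sum(daily_used_tb) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, daily_used_tb))
    slope = sxy / sxx  # growth in TB per day
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_x
    day_full = (capacity_tb - intercept) / slope  # day index where line hits capacity
    return max(0.0, day_full - (n - 1))


def severity(days_left, warn_days=30, critical_days=7):
    """Map a forecast to an alert level; None means no projected exhaustion."""
    if days_left is None or days_left > warn_days:
        return "ok"
    return "critical" if days_left <= critical_days else "warning"
```

For example, a 20 TB pool whose usage grew by 1 TB per day from 10 TB to 14 TB over five samples is projected to fill in 6 days, which this sketch classifies as critical. ML-based approaches replace the straight-line fit with models that capture seasonality and anomalies, but the alerting structure is similar.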

ESG also predicted that intelligent data management will become more pervasive in 2020. Its Data Protection Predictions for 2020 brief said to “look for ‘insights’ features, data classification, self-service data reuse, compliance modules, data analytics-friendly workflows, and better BC/DR.”

Conclusion

2020 is predicted to be an important year for data strategy initiatives. However, the industry is in the midst of a cost and complexity crisis that will need to be addressed.

The pace of change in enterprise computing during the past decade has created enormous pressure on the underlying storage infrastructure. To keep up with these demands, IT teams have rapidly expanded storage capacity, added new storage systems, and deployed a range of disparate point solutions.

Many of these storage devices are incompatible, creating silos of capacity that can’t be shared. This diversity has created many of the challenges and technology disappointments noted throughout the 8th consecutive market survey, including the inability to keep up with rapid data growth rates, vendor lock-in, lack of interoperability and increasing hardware costs.

It has become clear that a new approach is required to grow and thrive in 2020 and beyond, and IT managers are calling for the modernization of IT infrastructure through a uniform control plane capable of tapping the potential of diverse equipment. SDS is increasingly being recognized as the solution to accomplish this, helping to break silos and hardware dependencies and enabling smarter, more effective, and cost-effective use of storage.

Enterprises are already seeing the power of consolidating storage under a single, unified, software-defined platform to simplify and optimize primary, secondary, and archive storage tiers, managed by technologies such as AI/ML-based predictive analytics. This will become necessary in implementing a modern software-defined infrastructure that will make the best use of existing resources while future-proofing the IT environment for years to come.
