Latency Bottleneck and Impact on Enterprise Application Performance

Detailed look to improve application SLA

By Philippe Nicolas | April 9, 2020 at 2:28 pm Blog post published on CTERA Networks Ltd.’s web site, written by CTO Aron Brand

Blog post published on CTERA Networks Ltd.’s web site, written by CTO Aron Brand

Latency is the least understood but most important factor in application performance.

Network performance is measured by two independent parameters: bandwidth (measured in megabytes per second) and round trip latency (measured in milliseconds).

While the average user is more aware of how bandwidth impacts performance, in the world of broadband connectivity, it’s latency that is actually the bottleneck for the performance of most enterprise applications.

Latency as a Business-Critical Factor

Fast-responding applications lead to better productivity. As network performance improves on a global basis, users today are highly sensitive to even small latencies. A prime example is Amazon, which found that every 100ms increase in the load time of its website decreased their total sales by 1%.

Your organization likely also has seen increased bounce rates when the website hasn’t loaded at top speed. And if you personally have ever used a laggy application that hesitates when performing specific operations, you know how extremely disconcerting – and frustrating – it can be.

The latency problem is made worse by ‘chatty’ application protocols or naive programs that were designed by failing to take latency into account.

Real-World Example

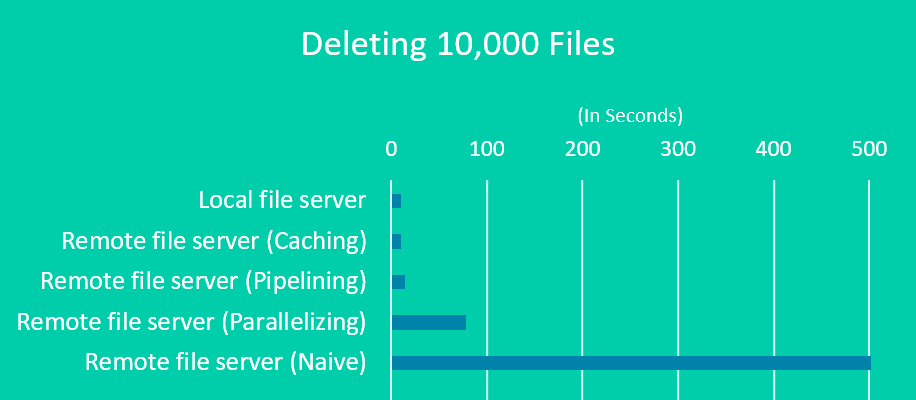

Consider a simple straightforward script that deletes a folder. The script recursively deletes all the files in the folder, and then deletes the folder. Deleting a file is a very quick exercise, around one millisecond (1 ms).

Now, let’s say we have a folder with 10,000 files.

The script running over a local file server would perform quite quickly, deleting the 10,000 files in 10s.

But the script would not perform as well if it were running over a file server located in a remote location with a round trip latency of 80ms (a typical latency for distributed organizations in the US reaching from coast to coast).

Now the operation takes 1ms but there is another 80ms round trip latency on top of it. Therefore, the same 10s deletion process would take… 13.5mn.

That’s 81x slower. Talk about a monster productivity killer.

Latency Solutions

What could an organization do to overcome the effects of latency on this deletion script? One solution would be to parallelize the script so that it deletes multiple files at once. Instead of a single thread, we could use 10 threads, which would result in a 10X performance gain and a decrease of the total time spent to 1.3mn. Still not great.

A better approach would be to send 10,000 filenames to the server without waiting for response for each one. This approach, often called pipelining, asynchronous processing, or bulk operations‘ would decrease the execution time to just over 10s, virtually eliminating the effect of latency.

However, this depends on the network protocol actually supporting such asynchronous processing operations, which often they do not. It also requires the client program (or script in our case) to be written in a specific way that allows the OS to properly queue the deletion operations and use the asynchronous facilities of the protocol (rather than a naive flow that instructs the file system to delete one by one). A better approach, but also much more complicated.

Caching Method

Another method to eliminate latency problems is through caching. For example, if I keep a local cache of the file system in a remote location, then accessing the files in that cache can be achieved with zero latency.

And if the cache is ‘write through’, i.e. allowing local writes, the user would not observe the latency problem because even write operations such as file deletion will be done locally, and then later propagated over the WAN.

(Caching has its own challenges, with different approaches to cache coherence, but this is a topic for another blog post.)

Latency, Proximity, and Speed of Light

Unlike bandwidth speeds of network links, which are growing at a fast pace, latency is not expected to significantly drop over the foreseeable future because it is governed by laws of physics. Typically, the speed of light in fiber optic cable measures approximately 200,000km/s. To put it another way, the signal will take 5ms to travel 1,000km in fiber.

Thus the round trip latency between Sydney and New York, a 16,000km distance, can never be less than 80ms. And in practice, it likely will be much longer because the fiber probably would travel a longer route, and there could be additional delays due to various network equipment in the path.

In reality the observed latency for the Sydney-New York link is a tad over 200ms.

Here are some commonly observed round trip latencies on the Internet:

| Barcelona | Tokyo | Toronto | Washington | |

| Amsterdam | 42 | 252 | 88 | 95 |

| Auckland | 259 | 185 | 230 | 218 |

| Copenhagen | 47 | 268 | 111 | 107 |

| Dallas | 134 | 134 | 44 | 45 |

| London | 30 | 216 | 92 | 76 |

| Los Angeles | 148 | 107 | 69 | 56 |

| Moscow | 74 | 300 | 128 | 126 |

| New York | 91 | 206 | 12 | 9 |

| Paris | 29 | 256 | 88 | 96 |

| Stockholm | 54 | 273 | 110 | 102 |

| Tokyo | 300 | 163 | 167 |

Latency is not going away; at least, not until we reach significant breakthroughs in quantum physics.

That’s why we believe that latency is today the most important factor in application performance, but one that’s least understood or appreciated by users.

To solve the latency problem software developers and IT engineers must be aware of software methods in order to optimize the communications – including multi-threading, caching, and pipelining – in order to reduce the amount of round trips that need to be done over the wire.

Subscribe to our free daily newsletter

Subscribe to our free daily newsletter