Comparing Relative Performance of Four Parallel File Systems – Panasas

BeeGFS, IBM Elastic Storage Server, Lustre ClusterStor L300, Panasas ActiveStor

This is a Press Release edited by StorageNewsletter.com on November 8, 2019 at 2:56 pm.

This report, sponsored by Panasas, Inc., was written by Robert Murphy, its director of product marketing, on October 27, 2019.


Comparing the Relative Performance of Different Parallel File Systems

Commercially available solutions that integrate parallel file systems come in different form factors, with different server-to-JBOD ratios and HDD counts, which makes it challenging to compare their relative performance and price/performance (this analysis is limited to HDD-based systems).

Instead of comparing performance per server or performance per rack, let’s look at throughput per HDD as a comparable figure of merit, since the number of disks has the primary impact on the system footprint, TCO, and performance efficiency. And isn’t that what a high-performance file system is supposed to do: read and write data to the storage media as fast as possible?
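Written as a formula, the figure of merit is the aggregate throughput divided by the number of disk drives, scaled to 100 drives. Here T (aggregate throughput in MB/s) and N_HDD (number of HDDs) are notation introduced for clarity, and decimal units are assumed (1GB = 1,000MB), which matches the arithmetic used in the examples that follow:

```latex
\mathrm{FoM} = \frac{T}{N_{\mathrm{HDD}}} \times 100 \quad [\mathrm{MB/s\ per\ 100\ HDD}],
\qquad
\mathrm{FoM}_{\mathrm{GB/s}} = \frac{\mathrm{FoM}}{1000} \quad [\mathrm{GB/s\ per\ 100\ HDD}]
```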

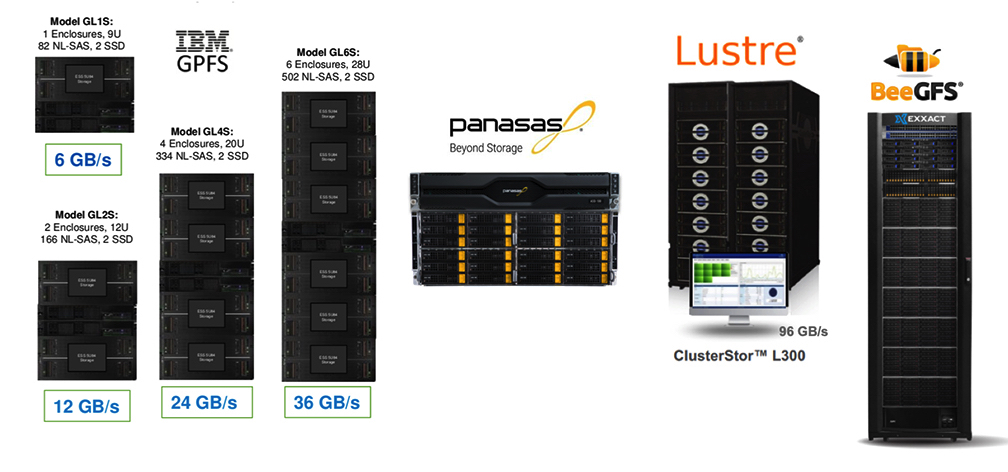

The diagram below shows examples of the solutions on offer, in their different form factors: PanFS, IBM Spectrum Scale (GPFS), Lustre and BeeGFS.

How to Compare Performance?

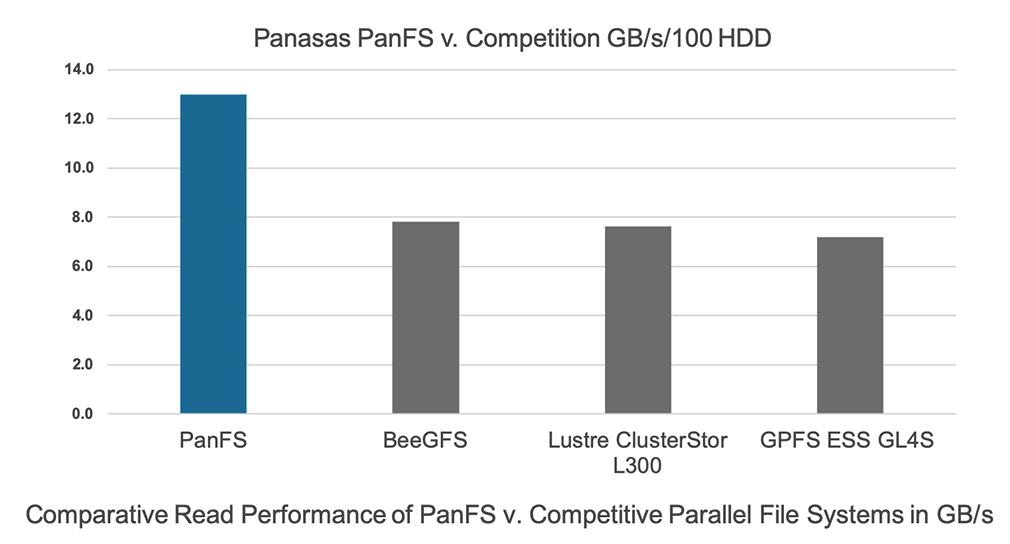

Let’s start with the published performance results of the IBM Elastic Storage Server (ESS) detailed here. The HDD-based Model GL4S is rated at 24GB/s with 334 disk drives, or 71.86MB/s per HDD; multiplying this per-HDD throughput by 100 gives 7.2GB/s/100 HDD.

For comparison, a 4 ASU Panasas ActiveStor Ultra with PanFS and 96 HDDs has a read throughput of 12,465MB/s, or 13.0GB/s/100 HDD.

For Lustre, let’s look at data from a large customer site (slides 67, 68), where the figure of merit works out to (50,000MB/s ÷ 656 HDD) × 100 = 7.6GB/s/100 HDD.

Lastly, let’s look at BeeGFS. The BeeGFS website (https://www.beegfs.io/content/documentation/#whitepapers) documents the performance of several example systems, ranging from fast but unprotected RAID-0 systems to slower ZFS-based systems. Since BeeGFS recommends servers with 24 to 72 disks each for clusters with a high number of clients, let’s look at this ThinkParQ performance whitepaper. The result there was (3,750MB/s ÷ 48 HDD) × 100 = 7.8GB/s/100 HDD.

The summary slide below compares the performance of the parallel file systems discussed above.
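To reproduce the numbers behind the summary, the short Python sketch below applies the same per-HDD normalization to the four published figures quoted in this article and ranks the results. The system labels are shorthand chosen for this sketch, and it assumes decimal units (1GB = 1,000MB), which is consistent with the article's arithmetic.

```python
"""Tabulate the per-HDD figure of merit for the four example systems.

Inputs are the aggregate throughputs (MB/s) and HDD counts quoted in the
article; the figure of merit is GB/s per 100 HDDs (1 GB = 1,000 MB).
"""

# (system label, aggregate throughput in MB/s, number of HDDs)
SYSTEMS = [
    ("IBM ESS GL4S", 24_000, 334),
    ("PanFS on ActiveStor Ultra (4 ASU)", 12_465, 96),
    ("Lustre (large customer site)", 50_000, 656),
    ("BeeGFS (ThinkParQ whitepaper)", 3_750, 48),
]


def gbps_per_100_hdd(throughput_mbps: float, hdd_count: int) -> float:
    """Normalize aggregate throughput to GB/s per 100 HDDs."""
    if hdd_count <= 0:
        raise ValueError("hdd_count must be positive")
    per_hdd_mbps = throughput_mbps / hdd_count  # MB/s delivered by each drive
    return per_hdd_mbps * 100 / 1_000           # scale to 100 drives, MB -> GB


def summarize(systems):
    """Return (label, figure of merit) pairs sorted from highest to lowest."""
    rows = [(name, gbps_per_100_hdd(t, n)) for name, t, n in systems]
    return sorted(rows, key=lambda row: row[1], reverse=True)


if __name__ == "__main__":
    rows = summarize(SYSTEMS)
    best = rows[0][1]
    for name, fom in rows:
        # Last column: each system's figure of merit as a fraction of the best one
        print(f"{name:<36} {fom:5.1f} GB/s/100 HDD  ({fom / best:4.0%} of best)")
```

Running it prints one line per system, from PanFS on ActiveStor Ultra at about 13.0GB/s/100 HDD down to the IBM ESS GL4S at about 7.2GB/s/100 HDD.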

Under the Hood: What Makes PanFS on ActiveStor Ultra So Fast?

PanFS features a multi-tier intelligent data placement architecture that matches the right type of storage for each type of data:

• Small files are stored on low-latency flash SSD

• Large files are stored on low-cost, high-capacity, high-bandwidth HDD

• Metadata is stored on low-latency NVMe SSD

• An NVDIMM intent log stages both data and metadata operations

• Unmodified data and metadata are cached in DRAM
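As a rough illustration of the placement rules in the list above, the hypothetical Python routing functions below assign each kind of I/O to a tier. The tier labels, the function names and the 1 MiB small-file cutoff are assumptions made for this sketch; the article does not say where PanFS draws the line between small and large files, and this is not Panasas code.

```python
from enum import Enum


class Tier(Enum):
    """Media tiers named in the list above (labels are illustrative)."""
    DRAM = "DRAM cache"
    NVDIMM = "NVDIMM intent log"
    NVME_SSD = "NVMe SSD"
    FLASH_SSD = "flash SSD"
    HDD = "HDD"


# HYPOTHETICAL threshold separating "small" from "large" files;
# not a published PanFS value.
SMALL_FILE_CUTOFF = 1 << 20  # 1 MiB


def home_tier(is_metadata: bool, size_bytes: int = 0) -> Tier:
    """Durable home for an object: metadata on NVMe, small files on flash, large files on HDD."""
    if is_metadata:
        return Tier.NVME_SSD
    if size_bytes < SMALL_FILE_CUTOFF:
        return Tier.FLASH_SSD
    return Tier.HDD


def write_path(is_metadata: bool, size_bytes: int = 0) -> list[Tier]:
    """A write is first staged in the NVDIMM intent log, then lands in its home tier."""
    return [Tier.NVDIMM, home_tier(is_metadata, size_bytes)]


def read_path(is_metadata: bool, size_bytes: int, cached: bool) -> Tier:
    """Unmodified data or metadata already cached in DRAM is served from there."""
    return Tier.DRAM if cached else home_tier(is_metadata, size_bytes)
```

For example, `write_path(False, 4096)` routes a 4 KiB file through the intent log to flash, while an uncached read of a 10 MiB file is served from HDD.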

Because PanFS protects newly written data in NVDIMM, the other drives can write data fully asynchronously, coalescing writes and placing data on the HDDs in an efficient pattern for performance. Accumulating newly written data in larger sequential regions reduces data fragmentation, so later reads of the data will also be sequential. In addition, ActiveStor Ultra features a balanced design that provisions just the right amount of CPU, memory, storage media, and networking, leaving no hardware bottlenecks, to deliver maximum PanFS performance and the best price/performance.
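The write-coalescing idea in the paragraph above can be illustrated with a toy model: small writes are acknowledged as soon as they are recorded in a simulated intent log, and are flushed later, sorted by offset and merged into contiguous extents, so the disks see fewer, larger sequential writes. This is a generic sketch under simplifying assumptions (a single file, a Python list standing in for NVDIMM, no failure handling); the `IntentLog` class is hypothetical and is not how PanFS implements its intent log.

```python
from dataclasses import dataclass, field


@dataclass
class IntentLog:
    """Toy stand-in for an NVDIMM intent log that buffers writes before flushing."""
    pending: list[tuple[int, bytes]] = field(default_factory=list)

    def write(self, offset: int, data: bytes) -> None:
        # The write counts as durable once it is in the log; no disk I/O yet.
        self.pending.append((offset, data))

    def flush(self) -> list[tuple[int, bytes]]:
        """Coalesce buffered writes into contiguous extents sorted by offset.

        Writes are applied in arrival order, so later writes win where they overlap.
        """
        image: dict[int, int] = {}
        for offset, data in self.pending:
            for i, b in enumerate(data):
                image[offset + i] = b
        self.pending.clear()

        extents: list[tuple[int, bytes]] = []
        start, buf = None, bytearray()
        for pos in sorted(image):
            if start is not None and pos == start + len(buf):
                buf.append(image[pos])  # extends the current sequential run
            else:
                if start is not None:
                    extents.append((start, bytes(buf)))
                start, buf = pos, bytearray([image[pos]])  # gap: start a new extent
        if start is not None:
            extents.append((start, bytes(buf)))
        return extents
```

In this model, five small out-of-order writes at offsets 8, 0, 4, 100 and 2 flush as just two extents: one 10-byte sequential run starting at offset 0 and a single byte at offset 100.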

Conclusion

Admittedly, this is just a simple, first-order method for assessing the relative performance of high-performance storage built on parallel file systems, using easily found public information. Every organization’s application mix and use cases are different, and targeted benchmarking against its actual workload is required to see how each system would perform. But the comparison does show that PanFS on ActiveStor Ultra is serious competition for these systems and should be on your short list for new high-performance storage deployments.
