Tech Alert From Quobyte: Reducing Operational Costs of AI/ML Architectures

Get the storage performance to take full advantage of the investment, and build a flexible environment that grows when needed without maintenance and management overhead

This is a Press Release edited by StorageNewsletter.com on August 2, 2019 at 2:31 pm

AI and ML applications are becoming ubiquitous in companies of all sizes, across all industries, and require significant up-front investment in hardware.

Along with initial costs, however, come ongoing operational costs of running these data-intensive workloads. Experts at Quobyte Inc. offer the following tips for reducing the operational expenses of AI/ML infrastructures.

Smart storage

AI/ML workloads have different performance profiles at different stages. Model training demands high throughput and low latency, while ingest and other stages can generate large-block sequential, small-block random, or mixed general workloads. The storage must be fast enough to keep up with these varying requirements. GPUs are among the most expensive assets in the whole system and should be kept busy, not left underutilized.
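To put a rough number on underutilization, here is a minimal sketch in Python. It assumes, purely for illustration, that storage is the only bottleneck, so GPU utilization is capped by the ratio of delivered to required throughput. The function names and all figures (GPU count, hourly cost, throughput values) are hypothetical and do not come from Quobyte.

```python
def gpu_utilization(storage_gbps: float, required_gbps: float) -> float:
    """Fraction of time GPUs stay busy if storage is the only bottleneck (assumption)."""
    if required_gbps <= 0:
        raise ValueError("required_gbps must be positive")
    return min(1.0, storage_gbps / required_gbps)


def idle_cost_per_day(num_gpus: int, cost_per_gpu_hour: float, utilization: float) -> float:
    """Daily spend on GPU hours spent waiting for data instead of computing."""
    return num_gpus * cost_per_gpu_hour * 24 * (1.0 - utilization)


if __name__ == "__main__":
    # Hypothetical cluster: 16 GPUs that need 8 GB/s in total but receive only 6 GB/s.
    util = gpu_utilization(storage_gbps=6.0, required_gbps=8.0)
    cost = idle_cost_per_day(num_gpus=16, cost_per_gpu_hour=2.5, utilization=util)
    print(f"utilization: {util:.0%}, idle cost per day: {cost:.2f}")
```

Real pipelines overlap I/O and compute, so measured utilization will differ from this simple bound; the sketch only shows how a storage shortfall translates into idle GPU spend.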

Avoid point solutions

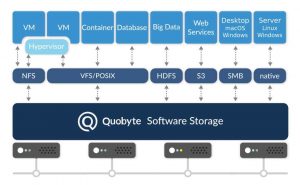

There should be no need to build and maintain a separate storage architecture for AI/ML. It’s tempting to keep production workloads on a different system, but cobbling together two or three products to handle AI/ML data requirements adds a layer of complexity, and incurs management and maintenance costs. Instead of an isolated infrastructure, use the performance and capacity resources you have.

No more tiers

Likewise, avoid cumbersome tiering. A unique characteristic of AI/ML workloads is that all data is conceivably hot data. Training data is used very frequently and should remain accessible. Typical tiering strategies introduce bottlenecks and can ultimately add to overall costs, when the intent was to save. Rather than using different storage based on whether data is hot or cold, mix HDD and SSD to get the best price/performance for your needs.

Eliminate migration

With one tier-free, unified storage infrastructure throughout the data center, you can eliminate the time-consuming and error-prone process of copying data from stage to stage and locality to locality. Data should never leave the file system at any point during the entire AI/ML lifecycle.

Consider cost of scale

AI/ML data sets easily grow to hundreds of petabytes. A high-capacity storage solution may be as easy to manage as a single box today, but it will require far more manual labor when you have 200 of them. Maintenance looks very different at scale, when drive failures, network issues, broken hardware, or updates become a daily occurrence.
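A back-of-the-envelope calculation shows why failures become routine at scale. The sketch below, in Python, assumes drives fail independently at a constant annualized failure rate. The drive count per box (60) and the 2% rate are hypothetical placeholders, not figures from the release; only the 200-box count comes from the text above.

```python
def expected_drive_failures_per_day(
    boxes: int, drives_per_box: int, annual_failure_rate: float
) -> float:
    """Expected drive failures per day, assuming independent failures at a constant rate."""
    if not 0.0 <= annual_failure_rate <= 1.0:
        raise ValueError("annual_failure_rate must be between 0 and 1")
    return boxes * drives_per_box * annual_failure_rate / 365.0


if __name__ == "__main__":
    # Hypothetical inputs: 60 drives per box, 2% annualized failure rate.
    for boxes in (1, 200):
        per_day = expected_drive_failures_per_day(boxes, 60, 0.02)
        print(f"{boxes:>3} boxes: one drive failure every {1 / per_day:.1f} days on average")
```

Under these assumptions the expected number of failures grows in proportion to the number of boxes, so an event that is rare on a single box turns into a recurring task for the operations team.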

Be ready for change

As project requirements change, sometimes more quickly than anticipated, the infrastructure must adapt. Downtime equals dollars wasted as GPUs sit idle. You should be able to add disks or servers when you need more performance, without any interruption to applications or services. Storage software can automatically detect and remove broken hardware; self-healing capabilities will use available resources elsewhere in the cluster until the failed component can be replaced at a convenient time.

“Hardware and GPUs are a big up-front investment, but there are running costs to consider and the way the AI/ML infrastructure is built can add to or mitigate it,” said Bjorn Kolbeck, CEO, Quobyte. “Containing ongoing costs starts with getting the storage performance to take full advantage of your investment, and building a flexible environment that grows when needed without a maintenance and management overhead.”
