Reliability and Endurance of HDDs for NAS

By Toshiba

This is a Press Release edited by StorageNewsletter.com on July 26, 2019 at 2:33 pm. The author of this blog is Rainer W. Kaese, senior manager business development storage products, working for the introduction of enterprise HDD products into datacenters, cloud computing and enterprise applications, Toshiba Electronics Europe GmbH.

Reliability and Endurance of Storage Products for NAS Systems

Abstract:

When developing NAS solutions, it is critical that the storage is carefully selected. Drives that are designed specifically for NAS applications provide the optimal combination of reliability and lifetime that users, whether home or business, rely on to keep their data safe. This paper reviews the various factors that impact the reliability and endurance of storage products and how dedicated NAS drives fulfil these demands.

Content:

1. Reliability Specifications

1.1 Operating Duty

1.2 Warranty Lifetime

1.3 Mean Time to Failure (MTTF)

1.4 AFR (Annualized Failure Rate)

2. Reliability Constraints/Operating Conditions

2.1 Operating Temperature

2.2 Rated Workload (HDD)

2.3 Load/Unload Cycle (HDD)

2.4 Start/Stop Cycle (HDD)

3. Reliability Specifications for Different HDD Classes

3.1 Reliability Specification for NAS Drives Compared to Alternative HDD Classes

4. Conclusion

1. Reliability Specifications

The reliability specifications define the extremes of operation for a drive and the statistical likelihood of failure if the drive is operated within them. These can be compared with the requirements of the target application, such as 24/7 operation, to determine whether the HDD is suitable. This chapter explains the main reliability specifications in detail and how to interpret them.

- 1.1 Operating Duty

A major reliability-related criterion for selecting storage components is the operating duty, which refers to how many hours per day a drive is designed to be active. Client drives for desktop or laptop computers are typically designed for an average of 8 hours of operation per day, reflecting the typical use of these machines. To provide always-on, ever-available backup and recovery, NAS drives are optimized for 24-hour-a-day operation in systems that are never powered down without good reason.

- 1.2 Warranty Lifetime

The manufacturer warrants the reliability of a product for a defined period of time. For enterprise components this is typically 5 years, whereas for NAS drives it is 3 years. Client drives offer a warranty of between 1 and 3 years. The warranty does, however, depend on correct usage and deployment, meaning that the specified environmental conditions, such as humidity and temperature, must be observed during operation (see chapter 2). Should the drive be used outside these limits, the manufacturer can no longer be expected to replace a failed drive under warranty. Likewise, failures that occur after the warranty period has expired are not covered.

- 1.3 Mean Time to Failure (MTTF)

Unfortunately, there is no such thing as a perfect device. To provide some measure of the risk of failure during the lifetime of a drive, manufacturers guarantee that, across a population of devices, a defined failure rate will not be exceeded. As mentioned before, this requires that the drives are operated within the defined constraints. The measure provided for this value is the MTTF, given in hours. It is a statistical value for a population of drives rather than a prediction of how long any individual drive will last: dividing the MTTF by the number of drives in operation gives the expected interval between failures across that population. If the MTTF is given as 1 million hours and the drives are operated within specification, one drive failure per hour can be expected in a population of 1 million drives. For the more realistic quantity of 1,000 drives, a managed service provider (MSP) should plan for a failure every 1,000 hours (almost 42 days). For a single drive, 1 million hours corresponds to 114 years of operation. However, it must be remembered that the MTTF specification is only valid for the warranty period of the drive. If the single drive were regularly replaced at the end of a 5-year warranty period, the first drive failure could statistically be expected after 114 years and 22 replacement drives.

- 1.4 AFR (Annualized Failure Rate)

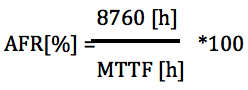

The expected statistical failure rate per year can be calculated from the MTTF using the following formula (1 year = 8,760 hours):

$$\mathrm{AFR} = 1 - e^{-8760\,\mathrm{h}/\mathrm{MTTF}[\mathrm{h}]}$$

The exponential term is required because drives that have already failed during this timeframe drop out of the population and must be accounted for in the statistics. However, for a small AFR percentage, accounting for drives that have already failed has a negligible impact, allowing the formula to be approximated as:

$$\mathrm{AFR} \approx \frac{8760\,\mathrm{h}}{\mathrm{MTTF}[\mathrm{h}]}$$

An MTTF of 1 million hours corresponds to an AFR of 0.876%, meaning that around 9 drives in a population of 1,000 drives in operation may fail within a year. MSPs have to budget for this number of repairs or replacement drives. Should the failure rate rise above 9 drives per year, it is necessary to check that the drives are being operated within the temperature, humidity, vibration, and other specification limits provided for the drive model. Some products specify the mean time between failures (MTBF) as a statistical measure instead of MTTF. However, MTBF indicates the time from one failure to the next after the first failure has been resolved through repair. Since storage devices typically cannot be repaired, only replaced, MTTF rather than MTBF is the correct term for drives.
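The MTTF and AFR arithmetic in this chapter can be reproduced with a few lines of Python. This is a minimal sketch assuming a constant failure rate, which is the exponential model behind the AFR formula; the function names are illustrative only, and the 1 million hour MTTF, the 1,000-drive population and the 5-year replacement interval are the example values used above.

```python
import math

HOURS_PER_YEAR = 8760  # 365 days x 24 hours, as used in the AFR formula


def afr_exact(mttf_hours: float) -> float:
    """AFR = 1 - exp(-8760 h / MTTF), assuming a constant failure rate."""
    return 1.0 - math.exp(-HOURS_PER_YEAR / mttf_hours)


def afr_approx(mttf_hours: float) -> float:
    """First-order approximation AFR ~ 8760 h / MTTF, valid for a small AFR."""
    return HOURS_PER_YEAR / mttf_hours


def hours_between_failures(mttf_hours: float, population: int) -> float:
    """Expected interval between failures anywhere in a population of drives."""
    return mttf_hours / population


def expected_failures_per_year(mttf_hours: float, population: int) -> float:
    """Expected number of failed drives per year, e.g. for budgeting spares."""
    return population * afr_exact(mttf_hours)


if __name__ == "__main__":
    mttf = 1_000_000  # hours, the example MTTF from the text
    fleet = 1_000     # drives in operation

    print(f"AFR (exact):  {afr_exact(mttf):.3%}")   # 0.872%
    print(f"AFR (approx): {afr_approx(mttf):.3%}")  # 0.876%
    interval = hours_between_failures(mttf, fleet)
    print(f"One failure every {interval:.0f} h ({interval / 24:.1f} days)")
    print(f"Expected failures per year: {expected_failures_per_year(mttf, fleet):.1f}")
    # A single drive: MTTF expressed in years, and how many 5-year replacements fit into it.
    years = mttf / HOURS_PER_YEAR
    print(f"MTTF in years: {years:.0f}, 5-year replacements: {int(years // 5)}")
```

The exact and approximate AFR differ only in the third significant digit at this MTTF, which is why the simpler ratio is usually good enough for planning spare drives.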

2. Reliability Constraints/Operating Conditions

The commitment to a specified MTTF for the warranty period is made on the understanding that the drive is operated within the limits of all the operating conditions specified for the product. Operating duty, covered in chapter 1.1, is just one of many elements that may be defined. In this chapter we review other constraints and conditions that must be considered.

- 2.1 Operating Temperature

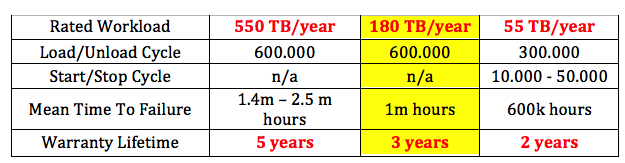

Heat, especially excessive heat, is highly detrimental to storage products. The spindle to which the platters are affixed relies on a fluid dynamic bearing to reduce friction. Over the long term, raised temperatures cause the bearing’s oil to leak, which ultimately increases the likelihood of failure. To provide the longest possible warranty period and highest MTTF, the operating temperature range is defined to match the target application. For MSPs running cooled data centers, enterprise drives are usually specified for use from 5°C to 55°C. Consumer or client drives are rated for 0°C to 60°C, reflecting the mix of indoor and outdoor use to which laptops can be subjected. NAS drives are specified to the same temperature range as enterprise drives since they are typically installed in a cooled and secured location. The temperature specification is typically defined as either the ambient temperature, Ta, or the case temperature, Tc. Ambient temperature is the temperature of the air in the immediate vicinity of the drive, whereas case temperature is measured on the surface of the drive itself. Operating drives outside the temperature specification increases component wear and reduces the MTTF, which in turn raises the AFR.

- 2.2 Rated Workload (HDD)

With their spinning platters and moving heads, HDDs have a number of components that can suffer wear. The workload, i.e. the amount of data written and read, therefore has an impact on reliability. Drive manufacturers typically define a maximum workload per year for which the MTTF and AFR values remain valid. NAS drives are typically rated for up to 180TB/year. This is less than enterprise drives (550TB/year) but more than client drives (55TB/year). The split between reads and writes makes no difference: both count towards the rated workload.

- 2.3 Load/Unload Cycle (HDD)

Energy saving is an important aspect of all electronic devices, especially in applications such as NAS, where many drives can be built into a single system. To support green initiatives, HDDs offer an idle mode. In this mode the R/W head is moved off the platters and parked on a mechanical ramp; in deeper power-saving states the platters are also brought to a standstill. When access to the drive is required again, the platters are spun back up if necessary and the head is moved out of its parked position. Each park and return of the head is known as a load/unload cycle. Because this feature places additional stress on the HDD’s mechanics, the number of load/unload cycles is limited. High-quality drives can support more than 100k load/unload cycles. Spread evenly over a 3-year warranty period of 24/7 operation, this is equivalent to around 91 load/unload cycles per day, or almost 4 per hour. The time taken to bring the platters back to the required rotational speed and the heads back into position (the load/unload cycle time) used to be a key factor during drive selection. However, with today’s drives offering load/unload cycle times of 20s or less, this has become essentially irrelevant with respect to HDD reliability.

- 2.4 Start/Stop Cycle (HDD)

For drives that are not specified for 24/7 operation, the manufacturer defines a maximum number of start/stop cycles for the spindle motor, normally around 50k cycles. For NAS drives, which are designed for 24/7 operation, this limit is not relevant.
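The operating conditions in this chapter translate into simple per-drive budgets. The sketch below assumes the NAS-class figures quoted above (5°C to 55°C, 180TB/year, 100k load/unload cycles, 3-year warranty); the `DriveLimits` type and the helper functions are hypothetical illustrations, and real limits must come from the datasheet of the specific drive model. It converts the annual workload rating into an average daily volume and data rate, spreads the load/unload rating over the warranty period, and lists any limits that observed usage exceeds.

```python
from dataclasses import dataclass
from typing import List

HOURS_PER_YEAR = 8760


@dataclass
class DriveLimits:
    """Hypothetical container for datasheet operating limits."""
    temp_min_c: float
    temp_max_c: float
    workload_tb_per_year: float
    load_unload_cycles: int
    warranty_years: int


# NAS-class figures quoted in this chapter (illustrative, not a specific model).
NAS_LIMITS = DriveLimits(5.0, 55.0, 180.0, 100_000, 3)


def workload_per_day_gb(limits: DriveLimits) -> float:
    """Average daily reads plus writes allowed by the annual workload rating."""
    return limits.workload_tb_per_year * 1000 / 365  # decimal TB -> GB


def average_rate_mb_s(limits: DriveLimits) -> float:
    """Average sustained transfer rate implied by the annual workload rating."""
    return limits.workload_tb_per_year * 1_000_000 / (HOURS_PER_YEAR * 3600)


def load_unload_per_day(limits: DriveLimits) -> float:
    """Load/unload cycles per day if the rating is spread over the warranty."""
    return limits.load_unload_cycles / (limits.warranty_years * 365)


def check_usage(limits: DriveLimits, temp_c: float, tb_last_year: float,
                load_cycles_so_far: int, years_in_service: float) -> List[str]:
    """Return the limits that the observed usage exceeds (empty list if none)."""
    problems = []
    if not limits.temp_min_c <= temp_c <= limits.temp_max_c:
        problems.append(f"temperature {temp_c} C outside "
                        f"{limits.temp_min_c}-{limits.temp_max_c} C")
    if tb_last_year > limits.workload_tb_per_year:
        problems.append(f"workload {tb_last_year} TB/year above "
                        f"{limits.workload_tb_per_year} TB/year")
    # Compare against a straight-line share of the cycle rating for the time in service.
    budget = limits.load_unload_cycles * years_in_service / limits.warranty_years
    if load_cycles_so_far > budget:
        problems.append(f"{load_cycles_so_far} load/unload cycles above "
                        f"pro-rata budget of {budget:.0f}")
    return problems


if __name__ == "__main__":
    print(f"{workload_per_day_gb(NAS_LIMITS):.0f} GB/day")        # 493 GB/day
    print(f"{average_rate_mb_s(NAS_LIMITS):.1f} MB/s average")    # 5.7 MB/s average
    print(f"{load_unload_per_day(NAS_LIMITS):.0f} cycles/day")    # 91 cycles/day
    print(check_usage(NAS_LIMITS, temp_c=58, tb_last_year=120,
                      load_cycles_so_far=20_000, years_in_service=1))
```

The pro-rata cycle budget is a deliberately simple design choice: it flags a drive whose power-saving settings park the heads far more often than the rating allows over its warranty period, without assuming anything about how the cycles are distributed in time.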

3. Reliability Specifications for Different HDD Classes

HDDs are designed with a specific application in mind. The demands of that application determine the warranty period offered, along with the temperature range, rated workload and the other aspects of the specification. A careful review of this information, along with a comparison with the specifications of other HDD classes, is highly recommended. Here, the reliability specifications of NAS drives are compared with those of enterprise and client drives.

- 3.1 Reliability Specification for NAS Drives Compared to Alternative HDD Classes

When selecting an HDD for a NAS, it can be tempting to let price unduly influence the choice of drive. In fact, client HDDs have often been chosen for NAS solutions, trading their reduced endurance against the redundancy provided by RAID array architectures. However, as can be seen in the following table, their operating duty and rated workload result in suboptimal endurance, putting the important data being stored at risk:

The HDDs developed for use in NAS systems provide an MTTF of 1 million hours over their 3-year warranty. Like enterprise drives, their operating temperature range is limited to 5°C to 55°C, as they are typically installed in a room with a controlled environment. Their rated workload reflects their primary purpose, namely backing up and restoring files. Compared with the alternatives shown, they lie between enterprise drives, which are over-specified for NAS, and client HDDs, which are clearly unsuited to the expected 24/7 operating and workload demands.

It should be noted that high-end 19″ rackmount NAS systems are increasingly taking the place of classic SAN storage systems. NAS systems for such business-critical applications should continue to be equipped with enterprise HDDs, because their operating environment and workload are comparable with those of traditional enterprise storage systems. Such rackmount NAS solutions are typically fitted with SAS backplane connectors. Even though typical NAS drives with SATA interfaces would function in such systems, nearline enterprise drives with SAS interfaces offer better performance and reliability.
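The class comparison in this chapter can be expressed as data plus a simple filter. The sketch below uses only figures quoted in this article: the enterprise and client MTTF values are not given here, so they are left as `None`, and the client warranty uses the upper end of the 1 to 3 year range. The `HddClass` type and the `suitable_classes` helper are hypothetical illustrations, not a vendor tool.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class HddClass:
    name: str
    hours_per_day: int             # operating duty
    warranty_years: int
    mttf_hours: Optional[int]      # None where the article gives no figure
    temp_range_c: Tuple[int, int]  # (min, max) operating temperature
    workload_tb_per_year: int


# Values as quoted in this article, ordered from lowest to highest specification.
CLASSES = [
    HddClass("client", 8, 3, None, (0, 60), 55),
    HddClass("NAS", 24, 3, 1_000_000, (5, 55), 180),
    HddClass("enterprise", 24, 5, None, (5, 55), 550),
]


def suitable_classes(hours_per_day: int, tb_per_year: float, ambient_c: float) -> List[str]:
    """Names of classes whose duty, workload and temperature ratings cover the need."""
    return [
        c.name
        for c in CLASSES
        if c.hours_per_day >= hours_per_day
        and c.workload_tb_per_year >= tb_per_year
        and c.temp_range_c[0] <= ambient_c <= c.temp_range_c[1]
    ]


if __name__ == "__main__":
    # An always-on NAS: 24 h/day, 100 TB/year, 30 C ambient.
    print(suitable_classes(24, 100, 30))  # ['NAS', 'enterprise']
    # A business-critical rackmount NAS with a heavier workload.
    print(suitable_classes(24, 400, 30))  # ['enterprise']
```

Because the list is ordered from lowest to highest specification, the first name returned is the least over-specified class that still covers the requirement, which mirrors the argument above: client drives drop out on operating duty, and enterprise drives only become necessary once the workload exceeds the NAS rating.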

4. Conclusion

The availability of HDDs targeting NAS applications underscores the importance of this application segment. In this paper we have listed and explained the key specifications that need to be considered when selecting a drive, to ensure that its endurance and reliability match those of the target use case. The demands on a storage drive built into a NAS are not as high as those of an enterprise application. However, the lower endurance and reliability of client drives, especially their 8 hour/day operating duty, are not satisfactory for a NAS, even with the redundancy provided by a RAID array. Dedicated NAS drives, offering an optimal price-performance balance, ensure that an organization’s critical data remains accessible and available whenever it is needed.
