Qnap Mustang-V100 and -F100 Accelerator Cards for AI Deep Learning Inference

For NAS or PC, also working with OpenVINO toolkit to optimize inference workloads for image classification and computer vision

This is a Press Release edited by StorageNewsletter.com on June 26, 2019 at 2:13 pm

Qnap Systems, Inc. introduced two computing accelerator cards designed for AI deep learning inference: the Mustang-V100 (VPU based) and the Mustang-F100 (FPGA based).

Users can install these PCIe-based accelerator cards into an Intel-based server or PC, or into the company’s NAS, to tackle the demanding workloads of computer vision and AI applications in manufacturing, healthcare, smart retail, video surveillance, and more.

“Computing speed is a major aspect of the efficiency of AI application deployment,” said Dan Lin, product manager, Qnap. “While the QNAP Mustang-V100 and Mustang-F100 accelerator cards are optimized for OpenVINO architecture and can extend workloads across Intel hardware with maximized performance, they can also be utilized with QNAP’s OpenVINO Workflow Consolidation Tool to fulfill computational acceleration for deep learning inference in the shortest time.”

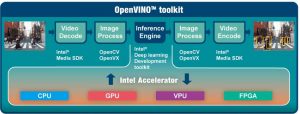

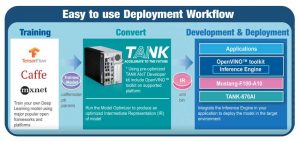

Both the Mustang-V100 and Mustang-F100 provide economical acceleration solutions for AI inference, and they can also work with the OpenVINO toolkit to optimize inference workloads for image classification and computer vision. The OpenVINO toolkit, developed by Intel Corp., helps to fast-track the development of high-performance computer vision and deep learning in vision applications. It includes the ‘Model Optimizer’ and the ‘Inference Engine’: the Model Optimizer converts pre-trained deep learning models (from frameworks such as Caffe and TensorFlow) into an intermediate representation (IR), and the Inference Engine then executes that IR across heterogeneous Intel hardware (such as CPU, GPU, FPGA and VPU).
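The two-step flow described above (convert a trained model to IR, then pick an Intel device to run it on) can be sketched roughly as follows. This is a minimal illustration and not part of Qnap's announcement: it only builds a Model Optimizer command line without running it, the helper names `model_optimizer_command` and `device_for` are hypothetical, and the mapping of each card to an Inference Engine device string (`HDDL` for the multi-VPU card, `HETERO:FPGA,CPU` for the FPGA card) and the `FP16` default are assumptions based on Intel's OpenVINO documentation of that period.

```python
from typing import List

# Assumed mapping from card family to an Inference Engine device string.
# Not stated in the press release; check the card vendor's documentation.
CARD_TO_DEVICE = {
    "Mustang-V100": "HDDL",             # plugin for multi-VPU (Myriad X) cards
    "Mustang-F100": "HETERO:FPGA,CPU",  # FPGA first, CPU fallback for unsupported layers
}


def model_optimizer_command(input_model: str, output_dir: str,
                            data_type: str = "FP16") -> List[str]:
    """Build (but do not run) a Model Optimizer command that emits IR files."""
    return [
        "python3", "mo.py",
        "--input_model", input_model,  # trained Caffe/TensorFlow model file
        "--output_dir", output_dir,    # where the IR .xml/.bin pair is written
        "--data_type", data_type,      # FP16 default is an assumption for accelerators
    ]


def device_for(card: str) -> str:
    """Return the assumed device string for a card, falling back to the CPU."""
    return CARD_TO_DEVICE.get(card, "CPU")


if __name__ == "__main__":
    print(" ".join(model_optimizer_command("model.caffemodel", "ir")))
    print(device_for("Mustang-V100"))
```

The device string returned by `device_for` is what an application would hand to the Inference Engine when loading the IR; the same IR files can be reused when the target device changes.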

As the company’s NAS evolves to support a wider range of applications (including surveillance, virtualization, and AI), its combination of large storage and PCIe expandability is an advantage for AI use. The firm has developed the OpenVINO Workflow Consolidation Tool (OWCT), which leverages OpenVINO toolkit technology. When used with the OWCT, the company’s Intel-based NAS serves as an inference server that helps organizations build inference systems. AI developers can deploy trained models on the company’s NAS for inference and install a Mustang-V100 or Mustang-F100 accelerator card to speed up inference.

The firm’s NAS supports the Mustang-V100 and Mustang-F100 with the latest version of the QTS 4.4.0 OS.

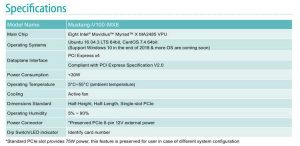

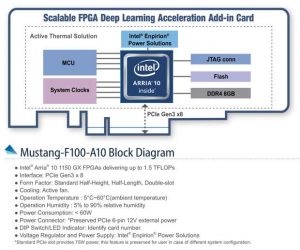

Key specs:

-

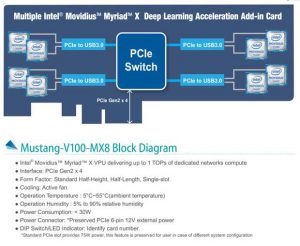

Mustang-V100-MX8-R10

Half-height, 8 Intel Movidius Myriad X MA2485 VPU, PCIe Gen2 x4 interface, power consumption lower than 30W

-

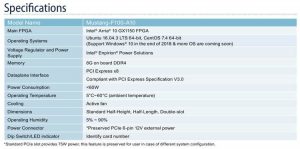

Mustang-F100-A10-R10

Half-height, Intel Arria 10 GX1150 FPGA, PCIe Gen3 x8 interface, power consumption lower than 60W

To view the company’s NAS models that support QTS 4.4.0, visit the firm’s website.

To download and install the OWCT app for Qnap NAS, visit the App Center.
