Object Storage More Than One Trick Pony – IDC

Article on blog of Hitachi Vantara

This is a Press Release edited by StorageNewsletter.com on May 6, 2019 at 2:38 pm. This article was written on a blog of Hitachi Vantara on April 30, 2019, by Scott Baker, senior director of intelligent data operations.

IDC: Object Storage More Than A One Trick Pony

Since its introduction in the early 2000s, object storage has been used predominantly for archival scenarios, where data is stored for extended periods of time, primarily for regulatory compliance reasons.

While archiving is still a compelling use case today, a recent IDC InfoBrief, sponsored by Hitachi Vantara, titled Object Storage: Foundational Technology for Top IT Initiatives (registration required), shows that object storage will take on a much more dynamic role in the years ahead – leveraging data analytics to unearth a slew of business insights.

“Object-based storage will certainly be playing an increased role in production-tier workloads,” says Amita Potnis, research manager, file and object-based storage systems, IDC. “As we continue to see adoption across all environments – both on- and off-premises – features like all-flash will help usher in new, more performant use cases beyond archiving that drive additional value for organizations.”

Moving Beyond Archiving

To better understand the landscape surrounding object storage, Hitachi Vantara commissioned IDC to survey executives across the globe about their use of, and intentions for, the technology. As it turns out, the transition from a mere archiving platform to a robust, integrated, intelligent data management solution that drives business value is already underway.

In fact, 80% of respondents believe object storage can support their top 3 IT initiatives related to storage: security, IoT and analytics for unstructured data.

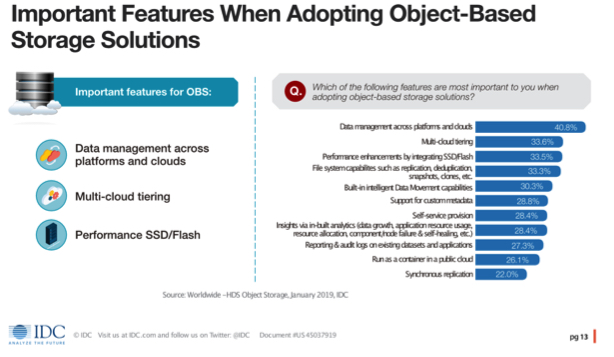

When asked which features are most important when adopting object-based storage solutions, respondents listed data management across platforms and clouds, multicloud tiering, and performance enhancements from integrating SSD/flash as the most crucial. This signals demand for flexible solutions that not only operate across platforms but do so with speed. It also signals a shift toward supporting higher-tier application workloads that demand more seamless scale and performance as well as higher-quality data.

Data Cleansing – or Lack Thereof

Given the scalability of object storage, it should come as no surprise that archiving data has been a primary use case for most organizations. Yet many enterprises are unable to reap the real value of archived data because they lack the tools needed to perform discovery and analytics operations. As a result, the untouched, or dark, data in the archive is forgotten and can become increasingly risky to the business over time. Data cleansing and classification operations can shine a light on the data you are retaining, making it easier to find when it’s needed and reducing the time spent on data preparation before it is analyzed.

Even as the amount of data being created continues to grow, only 18% of respondents have processes in place to classify and categorize relevant data and purge irrelevant data. Without such processes, more work is needed to cleanse and prepare data before it can add value in analytics activities. In addition, 55% of IT leaders surveyed indicated they retain their unstructured data for 5 years or more. Continued growth, combined with longer retention requirements, perpetuates the problem of data deluge, or, to put it simply, information overload.

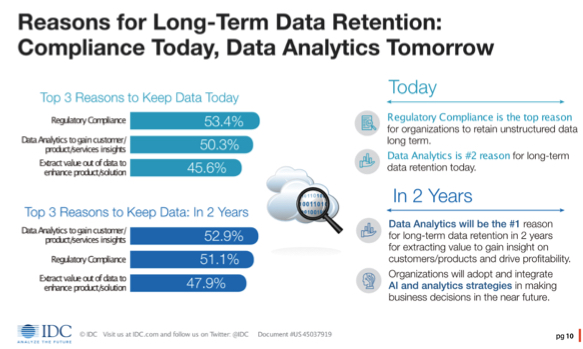

Data governance is the primary reason organizations retain these long-term data sets today, but in just 2 years’ time governance will give way to blending retained data sets into analytics use cases, so that organizations can draw customer, product, and services insights from a longer history of their own data. Therefore, the more an organization leverages the data intelligence capabilities of a modernized object storage platform like the [Hitachi Content Platform](https://www.hitachivantara.com/en-us/products/cloud-object-platform/content-platform-anywhere.html) (HCP), the less time it will spend making data usable and the less likely the data is to go dark or be forgotten.

Putting Object Storage to Work: Lessons from our Customers

One of the more cutting-edge object storage deployments we’ve seen to date is at CARFAX, creator of a comprehensive vehicle history database available in North America. CARFAX receives information from more than 100,000 data sources, including every U.S. and Canadian provincial motor vehicle agency, auto auctions, fire and police departments, collision repair facilities, fleet management and rental agencies, and more. Aggregating these data sources, blending and enriching the data, and tiering the data to the appropriate cloud platform in a performant and timely manner is critical to its multicloud strategy.

By combining the HCP with Pentaho Data Integration (PDI), CARFAX was able to aggregate thousands of data sources onto a centralized data hub. Once centralized, PDI is used to evaluate data quality, cleanse data sets to match defined quality standards, blend disparate data sources together, enrich metadata values, and direct the data to an appropriate tier based on their multicloud data strategy and downstream data analytics requirements.

As a result, CARFAX was able to achieve a 20-30% compute cost reduction and a 50-60% storage cost reduction while improving governance of both the structured and unstructured data delivered to its business and data science teams by managing it all in one place.

What You Should Know About HCP

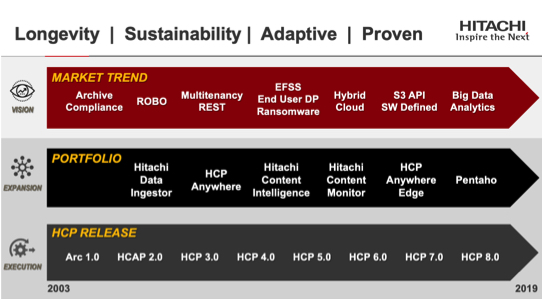

As the use cases for object storage are evolving, so too are the features and functionality we build into our products. With a keen eye on the market and customers, HCP has transitioned from an archival compliance tool to one fine-tuned for big data analytics.

As the market looks to the platform for new applications, we’re excited about the most recent updates to the HCP portfolio, which introduce new capabilities to support larger, more performant workloads, stringent security and more advanced data management activities:

- HCP All-flash G10 configuration: This high-performance configuration unlocks new uses for object storage and delivers increased performance for applications requiring low latency and higher IO/s or for applications that require faster metadata indexing and searching.

- Security Improvements: HCP regularly undergoes third-party security audits, whose results are continuously incorporated into incremental product updates so that customers can stay ahead of the latest threats. With version 8.1.2 of HCP, the following security enhancements were added:

  - New AWS user agent string: This feature provides greater security for data tiered to AWS S3 buckets. Through an AWS S3 bucket policy, access can be restricted based on the user-agent to prevent other applications from accidentally or maliciously accessing the HCP bucket.

  - Apache common vulnerabilities and exposures (CVEs): Kernel upgraded from version 4.9.99 to 4.9.130 to protect against vulnerabilities.

- HCP S11 and S31: The newly available S series nodes provide economical deep storage and protection in a small footprint to seamlessly extend private or hybrid cloud architectures. Additionally, users can safely and securely offload infrequently accessed content from valuable primary storage to this more cost-effective tier. The S series nodes deliver the scale and economics of the public cloud locally, with large-capacity drives, always-on self-optimization processes to maintain data integrity, availability and durability, and erasure coding to deliver long-term compliance and protection at the lowest cost. Key updates include:

  - Higher density in a smaller footprint: new enclosures and Intel Skylake processing technology pack 81% more disks into the same rack space than the current models, and 154% more capacity in the same rack space with 14TB HDDs.

  - Lower cost of ownership through reduced data center space requirements and up to 55% lower maintenance cost per terabyte.

- Hitachi Content Intelligence: This release delivers new enhancements to workflow designer, content search, and content monitor. In addition to new AI and ML features for improved performance monitoring of HCP, it includes data connectors to new data sources, processing stages to transform data in new ways, and a more streamlined user experience.
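
The user-agent restriction mentioned under Security Improvements can be illustrated with a short sketch of an S3 bucket policy. This is only an illustration under stated assumptions, not Hitachi's documented configuration: the bucket name `example-hcp-tier` and the pattern `*HCP*` are hypothetical placeholders, since the actual user agent string HCP sends is not given here. `aws:UserAgent` is the AWS global condition key for the request's User-Agent header; because that header is supplied by the caller, a rule like this is best at blocking accidental access by other applications.

```python
import json


def build_user_agent_policy(bucket: str, agent_pattern: str) -> dict:
    """Build an S3 bucket policy that denies any request whose
    User-Agent header does not match agent_pattern."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyUnexpectedUserAgents",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                # Cover both the bucket itself and every object in it.
                "Resource": [
                    f"arn:aws:s3:::{bucket}",
                    f"arn:aws:s3:::{bucket}/*",
                ],
                # Deny applies when the header does NOT match the pattern.
                "Condition": {
                    "StringNotLike": {"aws:UserAgent": agent_pattern}
                },
            }
        ],
    }


# Hypothetical bucket name and user-agent pattern, for illustration only.
policy = build_user_agent_policy("example-hcp-tier", "*HCP*")
policy_json = json.dumps(policy, indent=2)
print(policy_json)
```

The resulting JSON could be attached with the AWS console or with boto3's `put_bucket_policy(Bucket=..., Policy=policy_json)`. A blanket deny like this one also blocks administrators using other tools, so a real policy would likely need to be scoped more narrowly.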

Building Object Storage Into Your Strategy

It’s clear that object storage has reached a maturity tipping point. In addition to traditional archiving, it is now viewed as a platform for newer cloud, critical and next-generation workloads. However, to get the most out of data, organizations should think beyond managing a single workload at a time and instead look at a broader data operations strategy. As highlighted in the IDC findings, the reason for long-term data retention is shifting from compliance, data protection and risk mitigation to data search, analytics and insights. Having the right portfolio, capable of handling yesterday’s challenges in addition to delivering more compelling and value-added data management and analytics benefits, can lead to longer-term investment protection and operational success.

The HCP portfolio is integrated to address today’s challenges and adaptive to future needs. The portfolio includes HCP for object storage; HCP Anywhere for file synchronization and sharing and end-user data protection; HCP Anywhere edge as a cloud storage gateway to HCP; Hitachi Content Intelligence, where rapid insights are extracted from data; and Hitachi Content Monitor, an HCP reporting and analytics tool.

The HCP portfolio is a data services foundation addressing data needs from the edge of the business to the core data center and managed across a multicloud architecture. By consolidating and aggregating relevant data onto a performant and centralized data services platform, you’ll be better positioned to understand, govern and control your data, identify new insights, and extract more value from your most strategic asset, your data.
