WekaIO Matrix Software Saturates GPU Cluster With 5Gb/s Per Client

Running Nvidia TensorRT inference optimizer over Mellanox EDR 100Gb IB

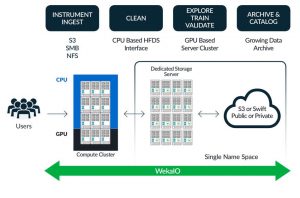

This is a Press Release edited by StorageNewsletter.com on April 6, 2018 at 2:11 pm

WekaIO, Inc. announced that its Matrix software outperforms legacy file systems and local-drive NVMe on GPUs, delivering a performance boost and cost savings for high-performance AI applications when coupled with Mellanox Technologies Ltd.'s IB intelligent interconnect solutions.

Matrix achieved 5Gb/s of throughput per client running Nvidia Corp.'s TensorRT inference optimizer over Mellanox EDR 100Gb/s IB on eight Nvidia Tesla V100 GPUs, meeting the performance that deep learning networks require. Customers with demanding AI workloads could expect to achieve 11Gb/s 256K read performance from a single client host, a benchmark result that was achieved on a six-host cluster with twelve Micron 9200 drives.

Additionally, benchmark results showed that customers will experience a boost in performance over local-drive NVMe even when link speeds are reduced to 25Gb/s. Together, WekaIO Matrix and Mellanox IB deliver better performance than local NVMe for customers who need higher loads from single DGX-1 servers.

Nvidia Tesla V100

The work with Mellanox provides a comprehensive view and understanding of Matrix software’s ability to distribute data across multiple GPU nodes to achieve higher performance, scalability, lower latency, and better cost savings for machine learning and technical compute workloads.

Mellanox SB7700 Series Switch-IB 1803 EDR 100Gb/s InfiniBand switch

Mellanox is a supplier of high-performance interconnect solutions for GPU clusters used for deep learning workloads. When customers couple ConnectX-series IB with Matrix software, they realize performance improvements for data-hungry workloads without making modifications to their existing network.

“We are very excited by the results we are achieving with WekaIO,” said Gilad Shainer, VP, marketing, Mellanox. “By taking full advantage of our smart IB acceleration engines, WekaIO Matrix delivers world-leading storage performance for AI applications.”

“In deep learning environments we see large compute nodes, almost universally augmented with GPUs, where customers need performance scaling so that they can train their large neural networks faster. Local file systems with NVMe fall short of the 5Gb/s of storage performance required to leverage the processing power of GPUs, leaving expensive GPU resources underutilized,” said Liran Zvibel, co-founder and CEO, WekaIO. “Our work with Mellanox demonstrates that WekaIO with IB provides the best infrastructure for environments with GPUs, delivering superior performance and economics for our customers.”

The solution with Matrix software was demonstrated at the Nvidia GPU Conference, March 27-29, 2018, in San Jose, CA.
