HPE Demonstrates Memory-Driven Computing Architecture

New concept that puts memory, not processing, at the center of computing platform

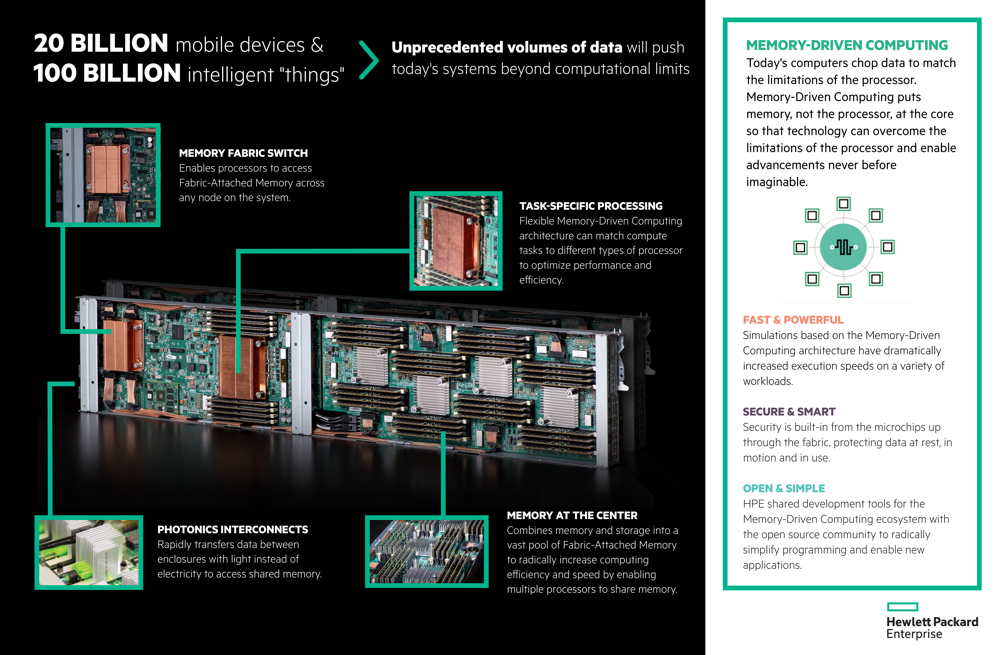

This is a Press Release edited by StorageNewsletter.com on December 8, 2016 at 2:59 pmHewlett Packard Enterprise Development LP (HPE) demonstrated Memory-Driven Computing, a concept that puts memory, not processing, at the center of the computing platform to realize performance and efficiency gains not possible today.

Click to enlarge

Developed as part of The Machine research program, the company’s proof-of-concept prototype represents a milestone in the company’s efforts to transform the fundamental architecture on which all computers have been built for the past 60 years.

Gartner predicts that by 2020, the number of connected devices will reach 20.8 billion and generate an unprecedented volume of data, which is growing at a faster rate than the ability to process, store, manage and secure it with existing computing architectures.

“We have achieved a major milestone with The Machine research project – one of the largest and most complex research projects in our company’s history,” said Antonio Neri, EVP and GM, enterprise group, HPE. “With this prototype, we have demonstrated the potential of Memory-Driven Computing and also opened the door to immediate innovation. Our customers and the industry as a whole can expect to benefit from these advancements as we continue our pursuit of game-changing technologies.“

The proof-of-concept prototype, which was brought online in October, shows the fundamental building blocks of the new architecture working together, just as they had been designed by researchers at the company and its research arm, Hewlett Packard Labs.

The firm has demonstrated:

-

Compute nodes accessing a shared pool of Fabric-Attached Memory;

-

An optimized Linux-based OS running on a customized SoC;

-

Photonics/optical communication links, including the X1 photonics module, are online and operational; and

-

New software programming tools designed to take advantage of abundant persistent memory.

During the design phase of the prototype, simulations predicted the speed of this architecture would improve current computing by multiple orders of magnitude. The company has run new software programming tools on existing products, illustrating improved execution speeds of up to 8,000 times on a variety of workloads. The company expects to achieve similar results as it expands the capacity of the prototype with more nodes and memory.

In addition to bringing added capacity online, The Machine research project will increase focus on exascale computing. Exascale is a developing area of HPC that aims to create computers several orders of magnitude more powerful than any system online today. Firm’s Memory-Driven Computing architecture is scalable, from tiny IoT devices to the exascale, making it a foundation for a range of emerging high-performance compute and data intensive workloads, including big data analytics.

Memory-Driven Computing and commercialization

The company is committed to rapidly commercializing the technologies developed under The Machine research project into new and existing products. These technologies currently fall into four categories: Non-volatile memory, fabric (including photonics), ecosystem enablement and security.

Persistent Memory

Non-Volatile Memory (NVM)

The firm continues its work to bring true, byte-addressable NVM to market and plans to introduce it as soon as 2018/2019. Using technologies from The Machine project, the company developed Persistent Memory – a step on the path to byte-addressable non-volatile memory, which aims to approach the performance of DRAM while offering the capacity and persistence of traditional storage. The company launched Persistent Memory in the ProLiant DL360 and DL380 Gen9 servers.

Fabric (including photonics)

Due to our photonics research, the company has taken steps to future-proof products, such as enabling Synergy systems that will be available next year to accept future photonics/optics technologies currently in advanced development. Looking beyond, the firm plans to integrate photonics into additional product lines, including its storage portfolio, in 2018/2019. The company also plans to bring to market fabric-attached memory, leveraging the high-performance interconnect protocol being developed under the recently announced Gen-Z Consortium, of which the firm recently joined.

Ecosystem enablement

Much work has already been completed to build software for future memory-driven systems. The company launched a Hortonworks/Spark collaboration this year to bring software built for Memory-Driven Computing to market. In June 2016, the company also began releasing code packages on Github to begin familiarizing developers with programming on the memory-driven architecture. The firm plans to put this code into existing systems within the next year and will develop next-generation analytics and applications into new systems in 2018/2019. As part of the Gen-Z Consortium the company plans to start integrating ecosystem technology and specifications from this industry collaboration into a range of products during the next few years.

Security

With this prototype, the firm demonstrated secure memory interconnects in line with its vision to embed security throughout the entire hardware and software stack. It plans to further this work with new hardware security features in the next year, followed by new software security features over the next three years. Beginning in 2020, the company plans to bring these solutions together with additional security technologies currently in the research phase.

More on the subject

Innovation at work:

How HPE is leading future of Memory-driven computing

By Meg Whitman, president and CEO, Hewlett Packard Enterprise

By Meg Whitman, president and CEO, Hewlett Packard Enterprise

Since the beginning of the computing age, we have come to expect more and more from technology and what it can deliver-faster, more powerful, more connected.

But today’s computing systems – from smartphones to supercomputers – are based on a fundamental platform that hasn’t changed in sixty years. That platform is now approaching a maturation phase that, left unchecked, may limit the possibilities we see for the future. Our ability to process, store, manage and secure information is being challenged at a rate and scale we’ve never seen before.

Computing in a time of ‘Everything Computes’

By 2020, 20 billion connected devices will generate record volumes of data. That number doesn’t even account for the information that we expect will be generated by the growing internet of industrial assets. Even if we could move all that data from the edge to the core, today’s supercomputers would struggle to keep up with our need to derive timely insights from that mass of information. In short, today’s computers can’t sustain the pace of innovation needed to serve a globally connected market.

To many, this feels like uncharted waters. But at HPE, we’ve invented the future over and over again. With a legacy of innovation that stretches over 75 years, from the audio oscillator to the first programmable scientific calculator to the first commercially available RISC chipset to composable infrastructure, we didn’t just seek to address problems – we sought to solve them by rethinking computing.

Today I’m proud to share that our team has successfully achieved a major breakthrough in our work to redefine computing as we know it. We have built the first proof-of-concept prototype that illustrates our Memory-Driven Computing architecture, a significant milestone in The Machine research program we announced just over two years ago. We believe this has the potential to change everything.

Introducing Memory-Driven Computing

Even the most advanced computing systems store information in many different layers and spend time shuffling data between them. Memory-Driven Computing enables a massive and essential leap in performance by putting memory, not the processor, at the center of the computing architecture – dramatically increasing the scale of computational power that we can achieve.

In this era of ‘everything computes’ – from smartphones to smart homes, sophisticated supercomputers to intelligent devices at the edge – Memory-Driven Computing has the potential to power innovations that aren’t possible today. And we’re working overtime to commercialize this technology throughout our product roadmap – from servers and software for enabling the data center of the future to providing advanced platforms for capturing value from the IoT.

We began research into Memory-Driven technologies to solve a fundamental and emerging challenge. We sought to take that challenge head-on by discovering new ways of increasing computational speed, efficiency and performance. That became The Machine research project. Since that time, we have invented new technologies and made discoveries that have resulted in breakthroughs in the fields of photonics communications, non-volatile memory and systems architecture. In October, we successfully integrated these breakthroughs into a unified Memory-Driven Computing architecture that allowed us to share memory across multiple computing nodes for the first time.

This milestone in The Machine research program demonstrates that Memory-Driven Computing is not just possible, but a reality. Working with this prototype, HPE will be able to evaluate, test and verify the key functional aspects of this new computer architecture and future system designs, setting the industry blueprint for the future of technology.

This is perhaps the largest and most complex research project in our company’s history, and I’m proud of what the team has been able to achieve.

And this is just the first of many milestones to come. As our founders might have said: we aren’t waiting to find out what’s next, we’re inventing what’s needed. We are confident that a Memory-Driven future is ahead and that HPE will continue to advance the technologies that will not only take the data deluge head-on but will also enable possibilities we have yet to imagine.

More on the subject

Behind-The-Scenes of The Machine Research project

Supersized-Science: on how Memory Driven Computing will change our understanding of the universe

Q&A with Sean Carroll, theoretical physicist, Theoretical Physics and Astrophysics, Walter Burke Institute for Theoretical Physics, Physics Department, Division of Physics, Mathematics, and Astronomy, California Institute of Technology, Pasadena, CA

Q&A with Sean Carroll, theoretical physicist, Theoretical Physics and Astrophysics, Walter Burke Institute for Theoretical Physics, Physics Department, Division of Physics, Mathematics, and Astronomy, California Institute of Technology, Pasadena, CA

Hewlett Packard Labs recently announced that it has successfully demonstrated Memory-Driven Computing for the first time. Enabling one of the most significant shifts in computing architecture in more than 60 years, this milestone for The Machine research program promises enormous potential in the realms of technology and beyond.

A handful of observers inside and outside the tech industry have had a preview of the technology, including Sean Carroll, a theoretical physicist at the California Institute of Technology. He is not only a deep thinker and prolific author but also a frequent contributor to television and film, translating science for the big screen, including serving as a consultant to the TV scientists of the CBS hit comedy The Big Bang Theory. Below he paints a picture of how science and research can change when no longer limited by today’s computing technology.

Question: Tell us about your background and the work that you’re doing at Caltech. What role, if any, does technology play in your field?

Answer: My research focuses on fundamental physics and cosmology, quantum gravity and spacetime, and the evolution of entropy and complexity. At Caltech, we often use supercomputers and sophisticated software to test theories and try simulations. A recent project from my colleagues involved simulating the formation of the Milky Way Galaxy.

What are your initial impressions of Memory-Driven Computing and The Machine research project? Can you liken it to any other breakthroughs you’ve seen over the years?

Every scientist knows that the amount of data we have to deal with has been exploding exponentially – and that computational speeds are no longer keeping up. We need to be imaginative in thinking of ways to extract meaningful information from mountains of data. In that sense, Memory-Driven Computing may end up being an advance much like parallel processing – not merely increasing speed, but also putting a whole class of problems within reach that had previously been intractable.

How does it spark your imagination? How do you think it changes the field of theoretical physics and related fields?

As soon as you hear about Memory-Driven Computing, you start thinking about two kinds of issues: data analysis and numerical simulations. Having too much data doesn’t sound like a problem, but it’s a real issue faced by modern scientists. We have experiments like the Large Hadron Collider, where we’re looking at colliding particles; the Laser Interferometric Gravitational-Wave Observatory, in which we study gravitational waves in spacetime; and the Large Synoptic Survey Telescope, in which we’re monitoring the sky in real time. Each of these projects produces far more data than we can thoroughly analyze.

On the simulation side, scientists are building better models of everything from galaxy formation to the Earth’s climate. In every case, the ability to manipulate large amounts of data directly in memory will allow us to boost our simulations to unprecedented levels of detail and accuracy. Taken together, these advances will help us understand the universe at a deeper level than ever before.

Take us forward 20 years. What do you expect you and your colleagues will be able to do when the computing paradigm shifts and exponential processing power is unleashed?

We might plausibly be discovering natural phenomena that would have completely escaped our notice. With modern, ultra-large data sets, there is a large cost associated with simply looking through them for new signals. If we know what we’re looking for, we do a pretty good job; but what you really want to do is probe the completely unknown. Memory-Driven Computing will help us find new surprises in how nature works.

What are the first problems you’d work to solve when having access to Memory-Driven Computing system?

As one of many possible examples: we know we live in a universe that has more invisible ‘dark matter’ than ordinary matter (the atoms and particles we examine in the lab). How does that dark matter interact, and how does it influence the structure of galaxies and the universe? To answer these questions we need to be able to simulate different theoretical models in extraordinary detail, examining how dark matter and ordinary matter work together to make stars and galaxies. By doing so, we might just discover entirely new laws of physics.

What are the long-term benefits and consequences of putting compute with orders of magnitude more power to work in the world? What do you expect it to mean for science, entertainment, everyday life?

Big data doesn’t just come from scientific instruments; it’s generated by ordinary human beings going through their day, using their phones and interacting online. I’m very excited by the prospects of artificial intelligence and brain/computer interfaces. Imagine a search engine that you can talk with like an ordinary person, one that understands what you’re really after. We’ll be connected not just through devices, but through virtual-reality environments and perhaps even direct interfaces with our brains. The idea of checking facts on Wikipedia is just the very beginning, and will look incredibly primitive in comparison with next-generation ways of connecting human intelligence to the world of information. To make that happen we need the kind of vast improvements in processing power that Memory-Driven Computation can enable.

Subscribe to our free daily newsletter

Subscribe to our free daily newsletter