Rising Deployment of Flash Tiers and Private/Hybrid Clouds Vs. Public for HPC – DDN

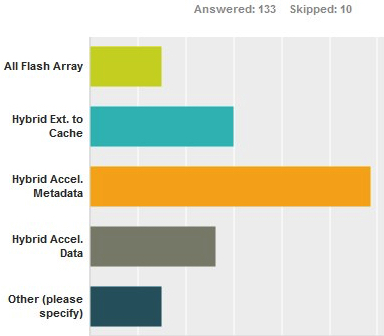

Only 10% of users use all-flash arrays; 80% use hybrid flash arrays

This is a Press Release edited by StorageNewsletter.com on November 7, 2016 at 4:00 pm.

DataDirect Networks, Inc. (DDN) announced the results of its annual HPC Trends survey, which showed that end users in the world’s most data-intense environments, like those in many general IT environments, are increasing their use of cloud.

However, unlike general IT environments, the HPC sector is overwhelmingly opting for private and hybrid clouds instead of public. HPC organizations are also increasingly choosing to upgrade specific parts of their environments with flash as they modernize their data centers. According to the survey, managing mixed I/O performance and rapid data growth remain the biggest challenges driving these infrastructure changes.

Conducted by DDN for the fourth consecutive year, the survey polled a cross-section of 143 end users managing data-intensive infrastructures worldwide and representing hundreds of petabytes of storage investment. Respondents included individuals responsible for HPC, networking and storage systems in financial services, government, higher education, life sciences, manufacturing, national labs, and oil and gas organizations. The volume of data under management in each of these organizations is staggering and increases steadily each year.

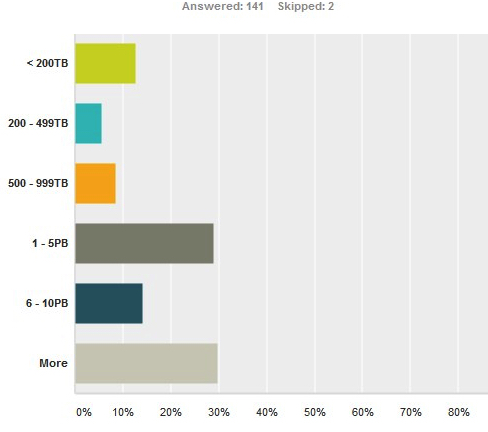

Of organizations surveyed:

- 73% manage or use more than 1PB of storage; and

- 30% manage or use more than 10PB of storage, up 5 percentage points year-over-year.

How much storage does your team manage or use?

Data and storage remain the most strategic part of the HPC data center, according to an overwhelming majority of survey respondents (77%), as end users seek to solve data access, workflow and analytics challenges to accelerate time to results.

Survey respondents revealed the rising use of private and hybrid clouds within HPC data centers. Respondents planning to leverage a cloud for at least part of their data in 2017 rose to 37%, up almost 10 percentage points year-over-year. Of those, more than 80% are choosing private or hybrid clouds over a public cloud option.

“These responses are consistent with the trends DDN observes in customer conversations, the most prevalent of which is organizations rebounding from public cloud due to cost, poor latency and sheer data immobility issues,” said Laura Shepard, senior director of marketing, DDN.

Use of flash in HPC data centers has intensified with more than 90% of respondents using flash storage at some level within their data centers today. Perhaps surprisingly, while all-flash arrays are perceived by many to be the fastest storage available in the market, only 10% of surveyed users from these most data-intense environments are using an all-flash array. The vast majority of respondents (80%) are using hybrid flash arrays either as an extension to storage-level cache, to accelerate metadata, or to accelerate data sets associated with key or problem I/O applications.

Which of the following best describes your current use of flash storage?

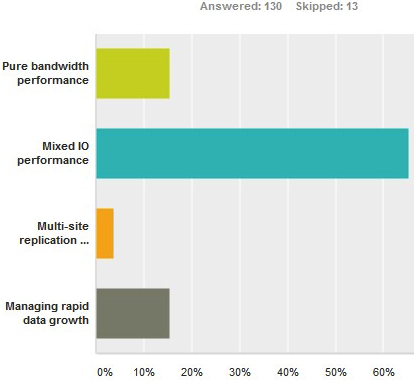

A diverse set of applications and an upsurge in site-wide file systems, data lakes and active archives are driving fast-paced data growth in large-scale environments and analytical workflows, placing rigorous demands on storage infrastructures and creating unique challenges for HPC users. Performance ranks as the number one storage and big data challenge for a strong majority (76%) of those polled, and mixed I/O performance was cited as the biggest concern by 61% of respondents, an 8-percentage-point increase over last year’s survey results.

What is your biggest storage/big data challenge?

An even higher portion of respondents (68%) identify storage I/O as the main bottleneck specifically in analytics workflows. As these responses demonstrate, performance issues have escalated as big data environments contend with a proliferation of diverse applications creating mixed I/O patterns and stifling the performance of their storage infrastructure.

Only a small and diminishing percentage of respondents believe today’s file systems and data management technologies will be able to scale to exascale levels, while almost 75% of respondents believe new innovation will be required.

This belief is illustrated in respondents’ views on addressing performance issues:

- A strong majority (60%) view burst buffers as the technology most likely to take storage to the next level, as users seek faster and more efficient ways to offload I/O from compute resources, to separate storage bandwidth acquisition from capacity acquisition, and to support parallel file systems in meeting exascale requirements.

As an increasing number of HPC sites move to real-world implementations of multi-site HPC collaboration, concerns about security, privacy and data sharing have intensified. A strong majority of those surveyed (70%) view security and data-sharing complexity as the biggest impediments to multi-site collaboration.

One HPC organization at the forefront of employing technologies to keep its research data secure and private is Weill Cornell Medicine, a large, multi-site organization managing massive volumes of patient data. In a video, Vanessa Borcherding, director of Weill Cornell Medicine’s scientific computing unit, discusses its approach to securing potentially sensitive genomic data that needs to be available for collaborative research.

With storage performance a critical requirement for today’s large-scale and petascale-level data centers, site-wide file systems continue to be a significant infrastructure trend in HPC environments, according to 72% of HPC customers polled. Site-wide file systems allow architects to consolidate storage for multiple compute clusters on the same platform, to upgrade storage and servers independently as needed, or both. Beyond some of the largest supercomputing sites, such as Oak Ridge National Laboratory (ORNL), the National Energy Research Scientific Computing Center (NERSC) and the Texas Advanced Computing Center (TACC), site-wide file systems are expanding into mid-sized data centers with fewer or smaller compute clusters.

“The results of DDN’s annual HPC Trends Survey reflect very accurately what HPC end users tell us and what we are seeing in their data center infrastructures. The use of private and hybrid clouds continues to grow, although most HPC organizations are not storing as large a percentage of their data in public clouds as they anticipated even a year ago. Performance remains the top challenge, especially when handling mixed I/O workloads and resolving I/O bottlenecks. Given this, it’s not surprising that 90% of those surveyed are using flash within their data centers, but what is notable is that the more storage experience a site has, the more likely they are to use flash to accelerate multiple tiers of storage rather than putting it all in one tier for one part of the workflow,” added Shepard. “Survey respondents also reaffirmed that storage is the most strategic part of the data center.”
