Key Storage Dimension: Latency Vs. Capacity Storage

Projections 2012-2026 by Wikibon CTO David Floyer

This is a Press Release edited by StorageNewsletter.com on September 2, 2015 at 2:54 pm.

This article, published on August 25, 2015, was written by David Floyer, Wikibon’s resident CTO. He spent more than twenty years at IBM holding positions in research, sales, marketing, systems analysis and running IT operations for IBM France. He worked directly with IBM’s largest European customers, including BMW, Crédit Suisse, Deutsche Bank and Lloyd’s Bank. He was a research VP at IDC.

Latency vs. Capacity Storage Projections 2012-2026

Premise

Wikibon believes latency storage vs. capacity storage is a key storage dimension, with different functional requirements and different cost profiles. Latency storage is found within the datacenter supporting more active applications, and in general has a high read bias. Latencies can vary from 1 millisecond down to a few microseconds; the lower (better) the latency, the closer to the processor resources the storage is likely to be. Latency storage is also used for the metadata layer of capacity data. The boundary for latency storage will fall to 500 microseconds over the next three years.

Capacity storage is found in archives, logs, time-series databases for the Internet of Things and many other similar applications. In general it is write-heavy. Latencies are generally above 1 millisecond; capacity storage does not have to be so close to the processor and is suitable for remote private, public and hybrid cloud storage. Some parts of the capacity marketplace will have latencies as low as 500 microseconds over the next three years.

Wikibon has added this dimension to the other storage dimensions it projects, which include HDD vs. Flash, Hyperscale Server SAN vs. Enterprise Server SAN vs. Traditional SAN/NAS storage, Physical Capacity vs. Logical Capacity, and SaaS Cloud vs. IaaS Cloud vs. PaaS Cloud. All these dimensions are projected for both revenue and terabytes. There is a strong correlation and interaction between the latency/capacity dimension and the flash/HDD dimension.

Latency/Capacity Functional Requirements

Wikibon believes there is an increasingly significant difference between latency-driven storage and capacity-driven storage. This is illustrated by internet service providers, who collect vast amounts of data but supply back only a very small fraction of it. On one side is latency-sensitive data, served by read-heavy, low-latency storage devices; on the other is capacity data, mainly WORN* or WORAN* data (Written Once Read Never, or Written Once Read Almost Never), generated by write-heavy processes.

Spinning disks currently provide a high percentage of write-heavy capacity storage. New disk products such as the HGST 10TB shingled helium drive are designed for cold storage. Shingled recording makes these drives suitable for writing, but reads are neither fast nor high-throughput. Multiple disks can be striped together to increase bandwidth, especially for sequential writes. However, investment in new types of capacity hard drives has almost completely dried up.
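Striping can be pictured as a round-robin mapping of logical blocks onto disks. The snippet below is a minimal, hypothetical RAID-0-style layout, not any vendor's implementation: the names `locate`, `stripe_unit` and `ideal_sequential_bandwidth` are illustrative assumptions. Consecutive stripe units land on successive disks, so a long sequential write keeps every drive busy and, in the ideal case, aggregate bandwidth scales with the number of disks. Parity, shingled-zone constraints and controller overheads are deliberately ignored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StripeLocation:
    disk: int        # which disk in the striped set holds the block
    disk_block: int  # block offset on that disk


def locate(logical_block: int, num_disks: int, stripe_unit: int) -> StripeLocation:
    """Map a logical block number to a (disk, block-on-disk) pair.

    stripe_unit (hypothetical parameter) is the number of consecutive
    blocks placed on one disk before the layout moves to the next disk.
    """
    if logical_block < 0 or num_disks < 1 or stripe_unit < 1:
        raise ValueError("invalid striping parameters")
    unit_index, offset = divmod(logical_block, stripe_unit)  # which stripe unit, and position inside it
    disk = unit_index % num_disks                            # round-robin across the disks
    row = unit_index // num_disks                            # complete stripes already laid down
    return StripeLocation(disk=disk, disk_block=row * stripe_unit + offset)


def ideal_sequential_bandwidth(per_disk_mb_s: float, num_disks: int) -> float:
    """Best case only: a long sequential write keeps every disk streaming at once."""
    return per_disk_mb_s * num_disks
```

For example, with four disks and a stripe unit of two blocks, `locate(9, num_disks=4, stripe_unit=2)` places logical block 9 on disk 0 at block offset 3, and any eight consecutive blocks touch all four disks. Real arrays rarely reach the ideal bandwidth figure, which is why it is labelled an upper bound.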

Wikibon expects flash to be increasingly adopted for latency storage, providing much faster reads and more consistent IO performance. Combined with low latencies, NVMe and capabilities such as atomic writes can significantly shorten IO path lengths and increase the amount of data available to applications by three orders of magnitude (roughly a thousandfold). This, together with PCIe switching across clusters of processors (e.g., EMC’s DSSD product), will revolutionize application development and speed the introduction of systems of intelligence.

In addition, Wikibon expects flash drives to take an increasing share of the capacity storage market over time, with flash products designed to meet the capacity requirement. The rapid introduction of volume 3D flash products for commercial use will drive enterprise IT flash costs down significantly faster than a -30% CAGR over the next two years. Wikibon also expects the metadata for capacity storage to be held increasingly in latency flash storage, similar to the Flape (combination of flash and tape) concept introduced by Wikibon. The introduction of NVMe and atomic writes will lower processor overheads and increase bandwidth for capacity storage. Wikibon expects new technologies and techniques using erasure coding to allow much lower costs for local data (e.g., Pivot3’s implementation) and for distributed data (e.g., Scality’s RING storage technology).
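As a worked illustration of what a -30% CAGR implies over the two-year horizon (simple compounding, not an additional Wikibon figure): let $C_0$ be today's flash cost per terabyte and $r = -0.30$ the annual rate of change.

```latex
C_n = C_0\,(1 + r)^n, \qquad C_2 = C_0\,(1 - 0.30)^2 = 0.49\,C_0
```

A decline of exactly 30% a year therefore roughly halves flash cost per terabyte in two years; a decline significantly steeper than that would take costs below half of today's level over the same period.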

Wikibon Projections 2012-2026

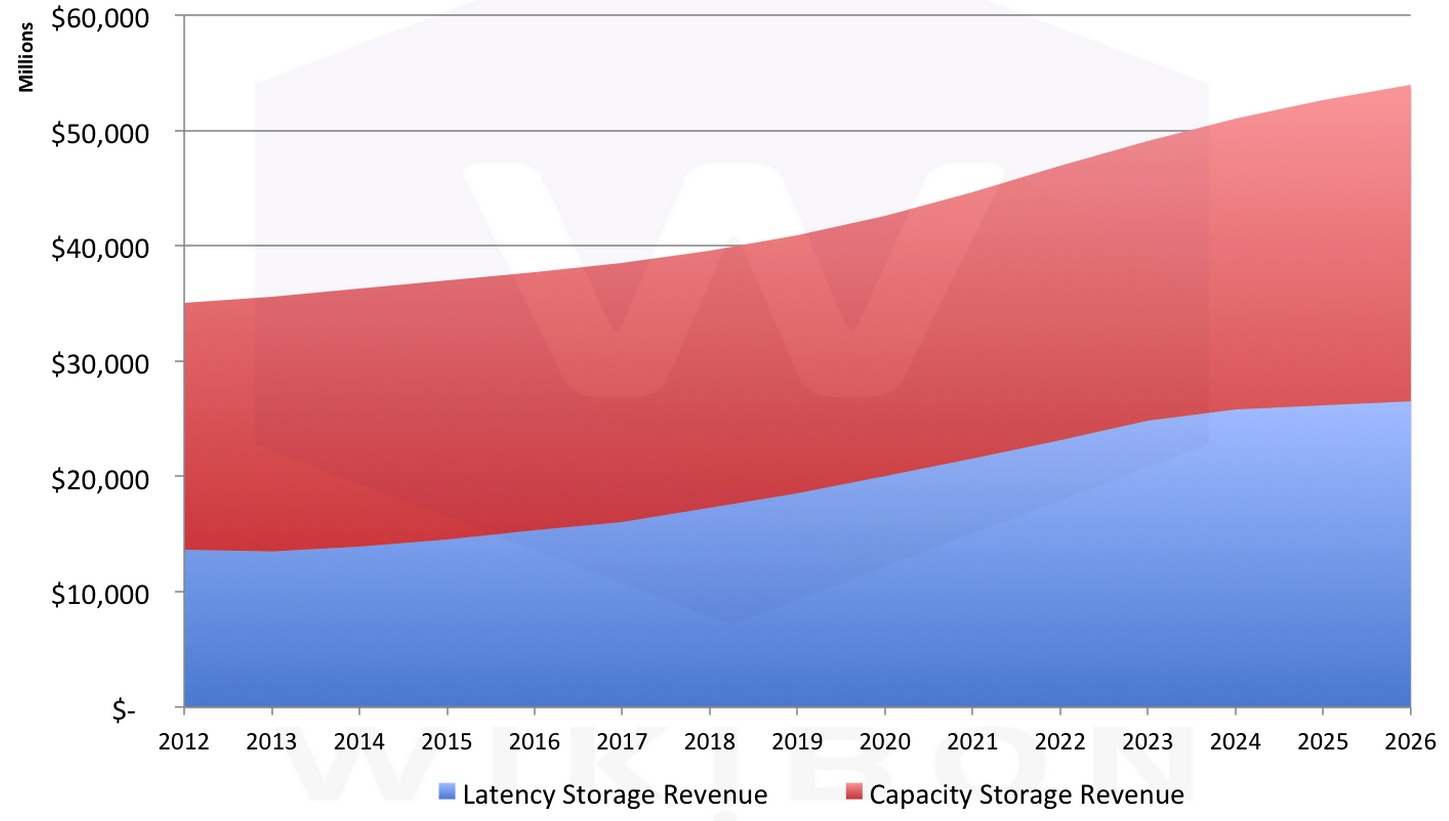

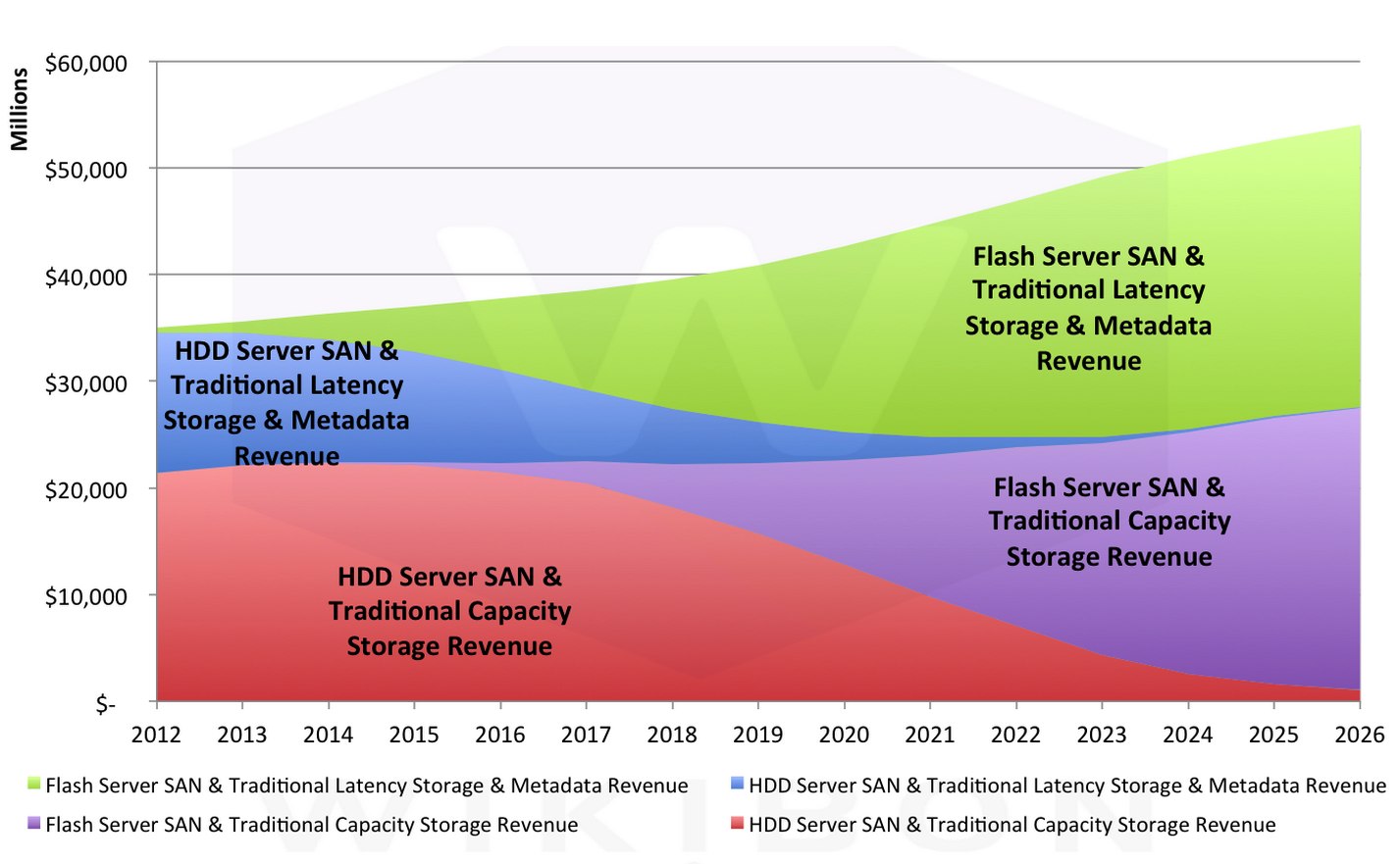

Figure 1 shows the 2012-2026 historical and projected storage revenues for latency vs. capacity storage. It shows that the capacity and latency markets are about equal in revenue, and are expected to remain in balance going forward. The lower the latency, the higher the cost/terabyte. Wikibon expects EMC to deliver its DSSD product with IO times of 50 microseconds within a PCIe server cluster network in 2015, and expects a range of similar products from multiple vendors to be introduced in 2016 and beyond.

Figure 1: Storage Projection by Latency vs. Capacity, 2012-2026

(Source: Wikibon Server SAN & Cloud Research Projects 2015)

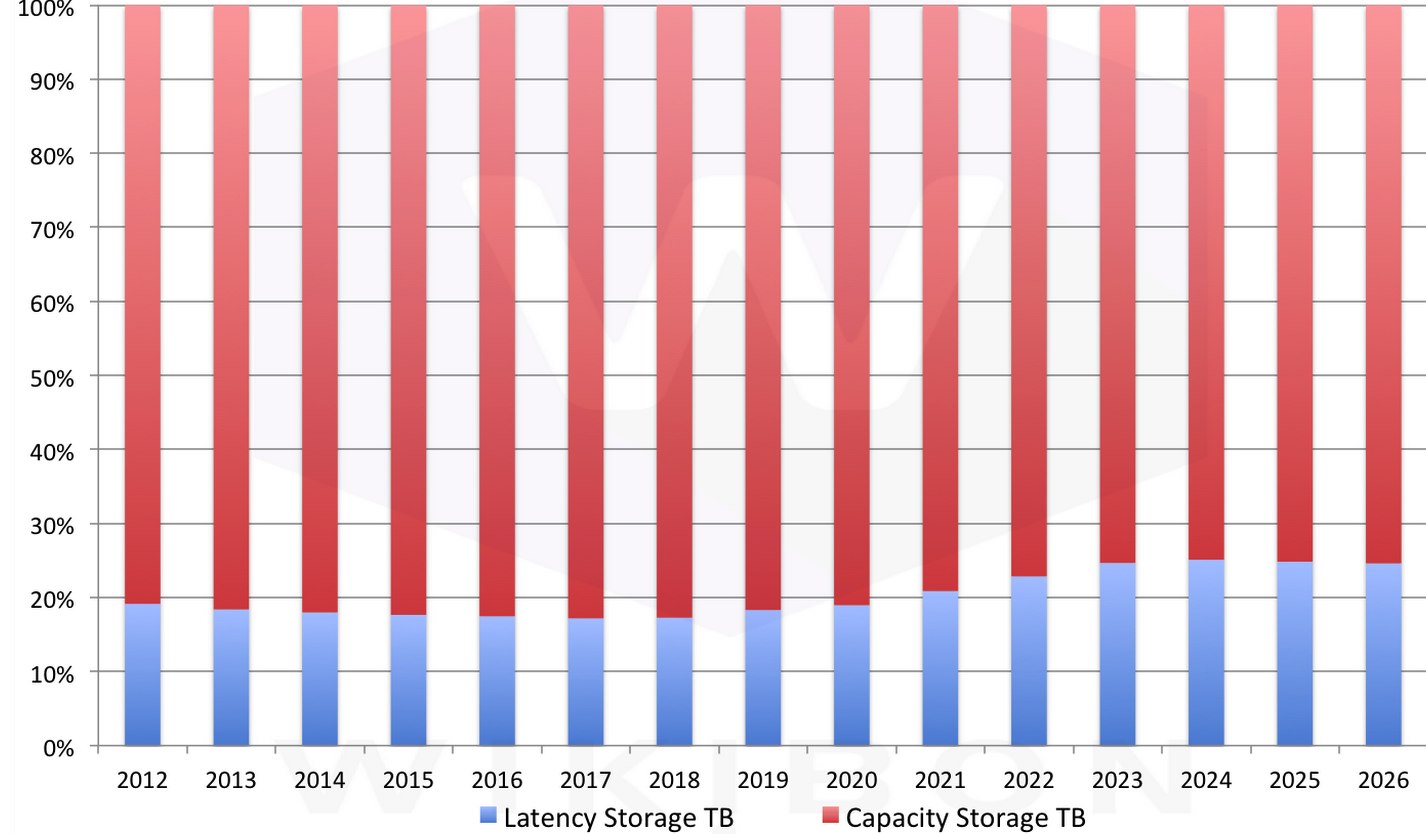

Figure 2 shows the terabyte projections for latency and capacity storage as a percentage of total capacity. It shows that the capacity market is about four times the volume of the latency market. The price of capacity flash is already under $1,000/TB without compression or deduplication (e.g., SanDisk’s OEM InfiniFlash, resold by Tegile following SanDisk’s investment in Tegile).
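Taken together, the revenue split (Figure 1) and the terabyte split (Figure 2) imply an average price ratio between the two markets. As a rough sketch, assuming the "about equal" revenue and "about four times" volume figures hold at the same point in time, let $R_L, R_C$ be latency and capacity revenue, $T_L, T_C$ the corresponding terabytes, and $P = R/T$ the average revenue per terabyte:

```latex
\frac{P_L}{P_C} = \frac{R_L / T_L}{R_C / T_C} = \frac{R_L}{R_C}\cdot\frac{T_C}{T_L} \approx 1 \times 4 = 4
```

On average, then, a terabyte of latency storage commands roughly four times the revenue of a terabyte of capacity storage, which is consistent with the observation that lower latency carries a higher cost per terabyte. This is a market-wide average, not a price for any particular product.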

Figure 2: Storage Terabyte Projection by Latency vs. Capacity, 2012-2026

(Source: Wikibon Server SAN & Cloud Research Projects 2015)

Figure 3 shows the split between HDD and flash within the latency/capacity split in Figure 1. Across the combined Hyperscale Server SAN, Enterprise Server SAN and traditional SAN/NAS markets, HDD revenue for latency storage is shown decreasing rapidly over the next few years. Within Hyperscale Server SAN, Wikibon projects a short-term increase in latency HDD revenue, followed by a sharp decline after 2018. In the capacity space, Wikibon projects an increase in capacity HDD revenue in 2015, followed by a slow decline of the HDD market until the early part of the next decade.

Figure 3: Storage Revenue Projection by HDD and Flash within Latency vs. Capacity from Figure 1, 2012-2026

(Source: Wikibon Server SAN & Cloud Research Projects 2015)

Action Item

Wikibon recommends that CIOs and senior IT executives plan for separate latency and capacity storage networks. It may be useful to conceptualize this as streams of data from many sources: from people, from IT system processes, from video and surveillance, from sensors in mobile devices and the Internet of Things, and from many other sources. A fraction of this data is diverted to active processing, and the remainder is written out and very rarely accessed again. After the active data is processed, the majority of it is again written out as WORN* or WORAN* data. What remains is metadata about the data, recording what is stored where.

*WORN (Write Once and Read Never) and WORAN (Write Once and Read Almost Never) data is initially written to capacity storage, and its metadata is created in latency storage. When the data is required, it is accessed first through the metadata. Capacity storage is a strong candidate for geographically distributed cloud storage services using techniques such as erasure coding.
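The WORN/WORAN write path can be sketched in a few lines. This is a minimal, in-memory illustration under stated assumptions: `CapacityTier`, `LatencyTier` and `TieredStore` are hypothetical names, plain dictionaries stand in for real capacity storage (disk, tape or cloud object storage) and latency storage (flash), and SHA-256 content addressing is just one possible way to record what is stored where. Bulk data is written once to the capacity tier, only a small metadata record goes to the latency tier, and a later read goes through the metadata first.

```python
from __future__ import annotations

import hashlib
from dataclasses import dataclass, field


@dataclass
class CapacityTier:
    """Stand-in for write-heavy, rarely read storage (hypothetical)."""
    objects: dict[str, bytes] = field(default_factory=dict)
    reads: int = 0  # counts how often the slow tier is actually touched

    def put(self, data: bytes) -> str:
        key = hashlib.sha256(data).hexdigest()  # content-addressed location (an assumption)
        self.objects[key] = data
        return key

    def get(self, key: str) -> bytes:
        self.reads += 1
        return self.objects[key]


@dataclass
class LatencyTier:
    """Stand-in for low-latency storage holding only the metadata index."""
    index: dict[str, dict] = field(default_factory=dict)


class TieredStore:
    def __init__(self) -> None:
        self.capacity = CapacityTier()
        self.latency = LatencyTier()

    def ingest(self, name: str, data: bytes, source: str) -> None:
        # Bulk data is written once to the capacity tier...
        location = self.capacity.put(data)
        # ...and only "what is stored where" is kept in the latency tier.
        self.latency.index[name] = {"location": location, "size": len(data), "source": source}

    def find(self, source: str) -> list[str]:
        # Searches are answered from metadata alone; the capacity tier is not read.
        return [n for n, meta in self.latency.index.items() if meta["source"] == source]

    def read(self, name: str) -> bytes:
        # Rare access path: consult the metadata first, then fetch from capacity storage.
        return self.capacity.get(self.latency.index[name]["location"])
```

In use, after `store.ingest("sensor-42", b"23.5C", source="iot")`, a call to `store.find("iot")` returns `["sensor-42"]` without touching the capacity tier; only `store.read("sensor-42")` increments `store.capacity.reads`. Keeping lookups on the metadata path is what lets the bulk data sit on slower, cheaper, possibly remote storage.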