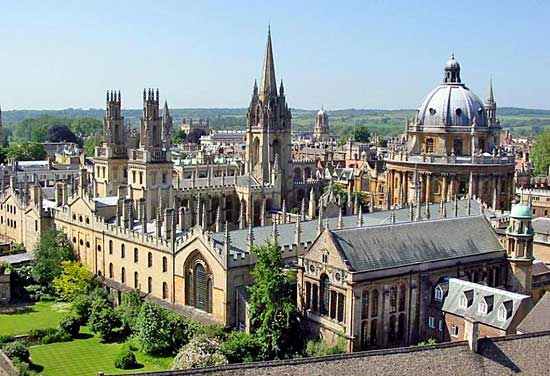

New HPC Cluster at University of Oxford Designed and Integrated by OCF

With Panasas storage upgraded providing 400TB

This is a Press Release edited by StorageNewsletter.com on April 21, 2015 at 2:48 pm

- 5,280-core cluster serves as central resource for entire University

- SLURM job scheduler manages workload across both GPUs and three generations of CPUs in Lenovo servers and existing Dell servers

- Panasas storage upgraded providing over 400TB of usable storage capacity

Researchers from across the University of Oxford will benefit from an HPC system designed and integrated by OCF PLC, a big data management, storage and analytics provider.

The Advanced Research Computing (ARC) facility supports research across four divisions: mathematical, physical and life sciences; medical sciences; social sciences; and humanities.

With around 120 active users per month, the new HPC resource will support a broad range of research projects across the university. As well as computational chemistry, engineering, financial modeling, and data mining of ancient documents, the new cluster will be used in collaborative projects such as the T2K experiment, which uses the J-PARC accelerator in Tokai, Japan. Other research will include the Square Kilometer Array (SKA) project and work by anthropologists using agent-based modeling to study religious groups.

The new service will also support the Networked Quantum Information Technologies Hub (NQIT), led by Oxford, which is envisaged to design new forms of computers that will accelerate discoveries in science, engineering and medicine.

The new HPC cluster built by OCF comprises Lenovo NeXtScale servers with Intel Haswell CPUs, connected by 40Gb/s InfiniBand to an existing Panasas storage system. OCF also upgraded the storage system, adding 166TB for a total of 400TB of capacity. Existing Intel Ivy Bridge and Sandy Bridge CPUs from the University of Oxford’s older machine are still running and will be merged with the new cluster.

Twenty NVIDIA Tesla K40 GPUs were added at the request of NQIT, which co-invested in the new machine. The GPUs will also benefit NVIDIA’s CUDA Centre of Excellence, which is based at the University.

“After seven years of use, our old SGI-based cluster really had come to end of life, it was very power hungry, so we were able to put together a good business case to invest in a new HPC cluster,” said Dr Andrew Richards, head of advanced research computing, University of Oxford. “We can operate the new 5,000 core machine for almost exactly the same power requirements as our old 1,200 core machine.

“The new cluster will not only support our researchers but will also be used in collaborative projects as well; we’re part of Science Engineering South, a consortium of five universities working on e-infrastructure particularly around HPC. We also work with commercial companies who can buy time on the machine so the new cluster is supporting a whole host of different research across the region.”

The Simple Linux Utility for Resource Management (SLURM) job scheduler manages the new HPC resource and supports both the GPUs and the three generations of Intel CPUs within the cluster.
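As a rough illustration of how a user might target one part of such a mixed cluster, the Python sketch below assembles an `sbatch` command line that requests a CPU generation and a number of GPUs. The `--time`, `--constraint` and `--gres` options are standard `sbatch` options, but the feature names (`haswell`, `ivybridge`, `sandybridge`), the helper `build_sbatch_command` and the script name `job.sh` are assumptions made for this example; the press release does not describe how the Oxford cluster is actually configured.

```python
import shlex

# Hypothetical node feature tags, one per CPU generation mentioned in the
# article. A real site defines its own feature names in its SLURM config.
CPU_FEATURES = {"haswell", "ivybridge", "sandybridge"}


def build_sbatch_command(script, cpu_feature=None, gpus=0, time_limit="01:00:00"):
    """Return an sbatch argument list for the given resource request."""
    if cpu_feature is not None and cpu_feature not in CPU_FEATURES:
        raise ValueError(f"unknown CPU feature: {cpu_feature}")
    if gpus < 0:
        raise ValueError("gpus must be zero or positive")

    args = ["sbatch", f"--time={time_limit}"]
    if cpu_feature is not None:
        # --constraint restricts the job to nodes that carry this feature tag.
        args.append(f"--constraint={cpu_feature}")
    if gpus:
        # --gres requests generic resources, here GPUs per node.
        args.append(f"--gres=gpu:{gpus}")
    args.append(script)
    return args


if __name__ == "__main__":
    # Build (but do not run) a request for two GPUs on a Haswell node.
    print(shlex.join(build_sbatch_command("job.sh", cpu_feature="haswell", gpus=2)))
```

The sketch only builds the command; passing the list to `subprocess.run` on a login node would submit the job, assuming the site exposes matching feature tags and GPU resources.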

Julian Fielden, MD, OCF comments: “With Oxford providing HPC not just to researchers within the University, but to local businesses and in collaborative projects, such as the T2K and NQIT projects, the SLURM scheduler really was the best option to ensure different SLAs can be supported. If you look at the Top500 list of the World’s fastest supercomputers, they’re now starting to move to SLURM. The scheduler was specifically requested by the University to support GPUs and the heterogeneous estate of different CPUs, which the previous TORQUE scheduler couldn’t, so this forms quite an important part of the overall HPC facility.”

The University of Oxford unveiled the new cluster, named Arcus Phase B, on 14th April.

Richards continues: “As a central resource for the entire University, we really see ourselves as the first stepping stone into HPC. From PhD students upwards i.e. people that haven’t used HPC before – are who we really want to engage with. I don’t see our facility as just running a big machine, we’re here to help people do their research. That’s our value proposition and one that OCF has really helped us to achieve.”

Additional comments from Richards:

- “We see around 300 new users per year signing up to use our HPC facility, but there is a natural churn rate so on average we’re seeing around 120 active users each month. Since our first cluster in 2006 we’ve supported over 4000 students and researchers.

- One of our remits is to help students build their knowledge of using HPC machines so we sit down with them and get involved with their research workflow, their datasets and how they need to be processed. We give them knowledge on how to use HPC machines so that they can go on to bid for time on national facilities like ARCHER. We won’t write their software for them, but will help them to understand how to think differently.

- One of the key requirements for our new cluster was the ability to grow the machine at any given time. This brings huge benefits to the entire University; Departments can come to us with funds and request specific upgrades to the central HPC, and we can do it. It benefits both that Department, who we’ll have SLAs in place with, as well as the University as a whole.

- HPC is just one part of the typical research data lifecycle. There are compliance requirements around the retention and storage of research data so ARC works with other parts of the University’s IT infrastructure to ensure that when research projects are finished, data can be migrated and stored for the long term.”
