Critical Capabilities for General-Purpose High-End Storage Arrays

Comparison of 12 high-end storage arrays

This is a Press Release edited by StorageNewsletter.com on February 23, 2015 at 2:53 pm.

Critical Capabilities for General-Purpose, High-End Storage Arrays (20 November 2014, ID: G00263130) is a report from analysts Valdis Filks, Stanley Zaffos and Roger W. Cox of Gartner, Inc.

Summary

Here, we assess 12 high-end storage arrays across high-impact use cases and quantify products against the critical capabilities of interest to infrastructure and operations (I&O) leaders. When choosing storage products, I&O leaders should look beyond technical attributes, incumbency and vendor/product reputation.

Overview

Key Findings

- With the inclusion of SSDs in arrays, performance is no longer a differentiator in its own right, but a scalability enabler that improves operational and financial efficiency by facilitating storage consolidation.

- Product differentiation is created primarily by differences in architecture, software functionality, data flow, support and microcode quality, rather than components and packaging.

- Clustered, scale-out, and federated storage architectures and products can achieve levels of scale, performance, reliability, serviceability and availability comparable to traditional, scale-up high-end arrays.

- The feature sets of high-end storage arrays adapt slowly, and the older systems are incapable of offering data reduction, virtualization and unified protocol support.

Recommendations

- Move beyond technical attributes to include vendor service and support capabilities, as well as acquisition and ownership costs, when making your high-end storage array buying decisions.

- Don’t default to the ingrained, dominant considerations of incumbency and vendor and product reputation when choosing high-end storage solutions.

- Vary the ratios of SSDs, SAS and SATA hard-disk drives in the storage array, and limit maximum configurations based on system performance to ensure that SLAs are met during the planned service life of the system.

- Select disk arrays based on the weighting and criteria created by your IT department to meet your organizational or business objectives, rather than choosing those with the most features or highest overall scores.

What You Need to Know

Superior nondisruptive serviceability and data protection characterize high-end arrays. They are the visible metrics that differentiate high-end array models from other arrays, although the gap is closing. The software architectures used in many high-end storage arrays can trace their lineage back 20 years or more.

Although this maturity delivers HA and broad ecosystem support, it is also becoming a hindrance: compared with newer designs, older software is less flexible and adaptable, and it delays the introduction of new features. Administrative and management interfaces are often more complicated on arrays with older software designs, no matter how much the internal structures are hidden or abstracted. The ability of older systems to provide unified storage protocols, data reduction and detailed performance instrumentation is also limited, because these capabilities were not design objectives of the original software.

Gartner expects that, within the next four years, arrays using legacy software will need major re-engineering to remain competitive against newer systems that achieve high-end status, as well as against hybrid storage solutions that use solid-state technologies to improve performance, storage efficiency and availability. In this research, the differences in aggregated scores among the arrays are minimal. Therefore, clients are advised to look at the individual capabilities that are important to them, rather than the overall score.

Because array differentiation has decreased, the real challenge of performing a successful storage infrastructure upgrade is not designing an infrastructure upgrade that works, but designing one that optimizes agility and minimizes TCO.

Another practical consideration is that choosing a suboptimal solution is likely to have only a moderate impact on deployment and TCO, for the following reasons:

- Product advantages are usually short-lived. Gartner refers to this phenomenon as the ‘compression of product differentiation.’

- Most clients report that differences in management and monitoring tools, as well as ecosystem support among various vendors’ offerings, are not enough to change staffing requirements.

- Storage TCO, although growing, still accounts for less than 10% (6.5% in 2013) of most IT budgets.

Analysis

Introduction

The arrays evaluated in this research include scale-up, scale-out, hybrid and unified storage architectures. Because these arrays have different availability characteristics, performance profiles, scalability, ecosystem support, pricing and warranties, they enable users to tailor solutions against operational needs, planned new application deployments, and forecast growth rates and asset management strategies.

Midrange arrays with scale-out characteristics can satisfy the HA criteria when configured with four or more controllers and multiple disk shelves. Whether the remaining differences in availability between these configurations and traditional high-end arrays are enough to affect infrastructure design and operational procedures will vary by user environment. It will also be influenced by other considerations, such as host system/capacity scaling, downtime costs, lost opportunity costs and the maturity of end-user change control procedures (e.g., hardware, software, procedures and scripting), which directly affect availability.

Critical Capabilities Use-Case Graphics

The weighted capabilities scores for all use cases are displayed as components of the overall score (see Figures 1 through 6).

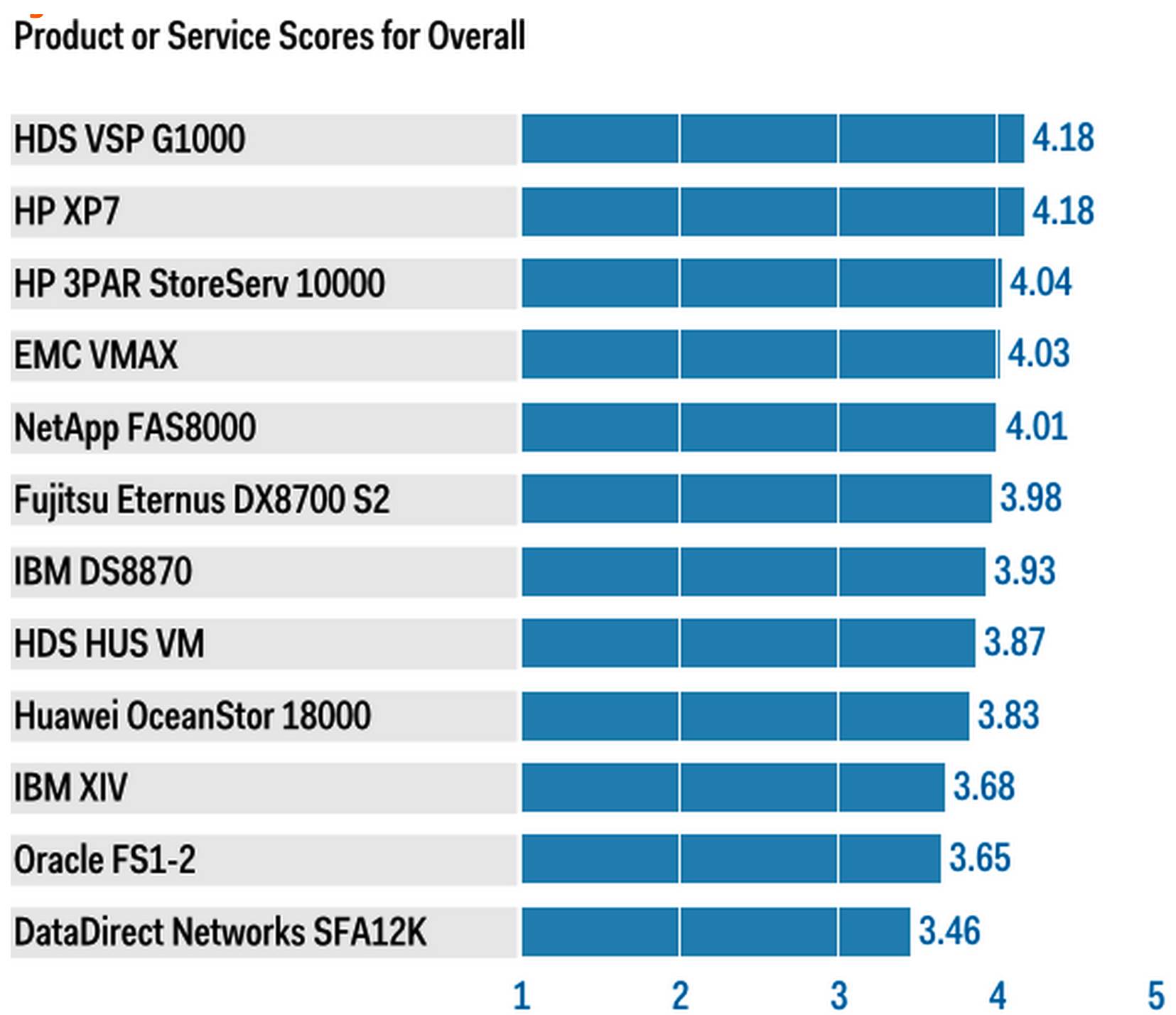

Figure 1. Vendors’ Product Scores for the Overall Use Case

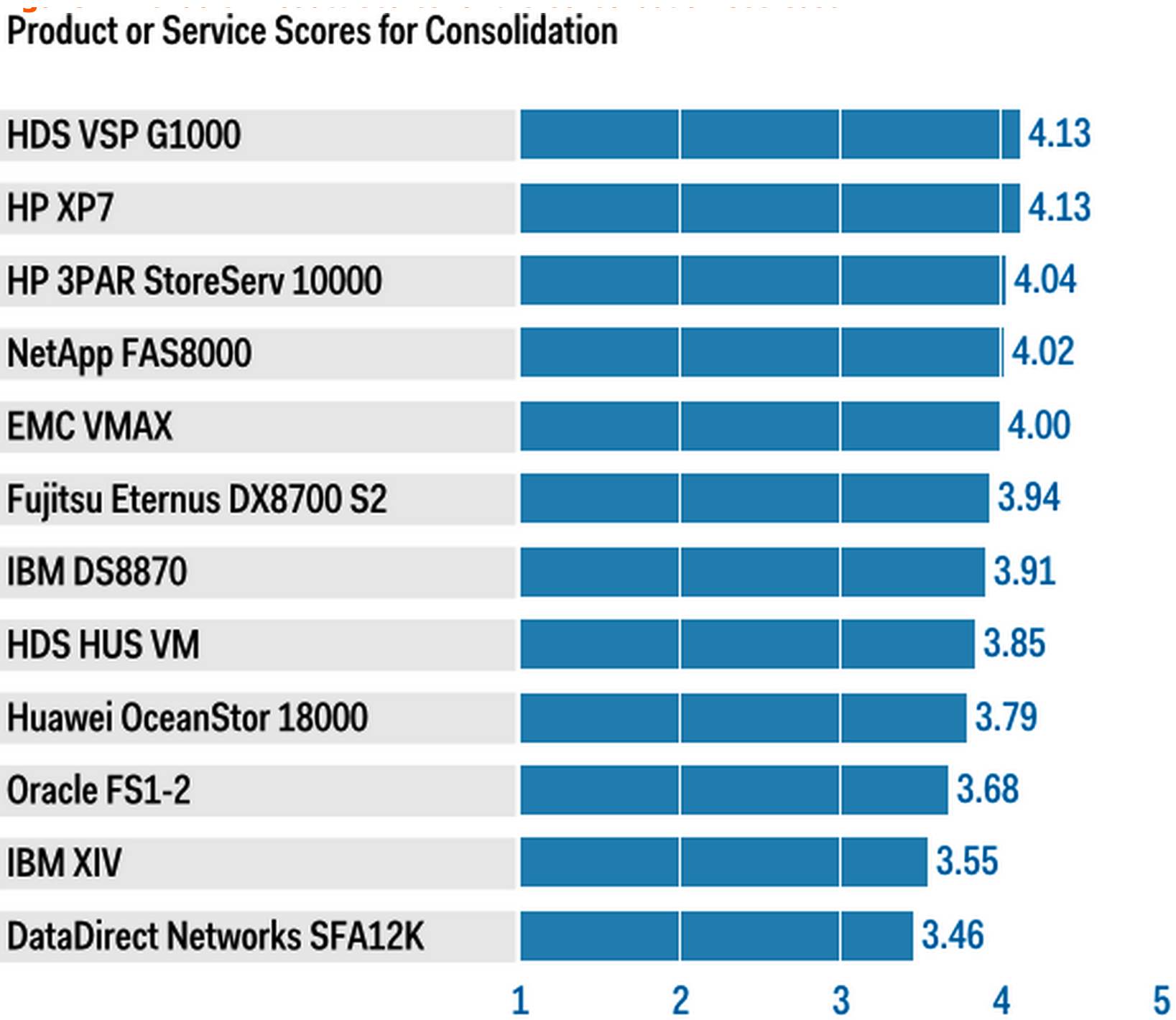

Figure 2. Vendors’ Product Scores for the Consolidation Use Case

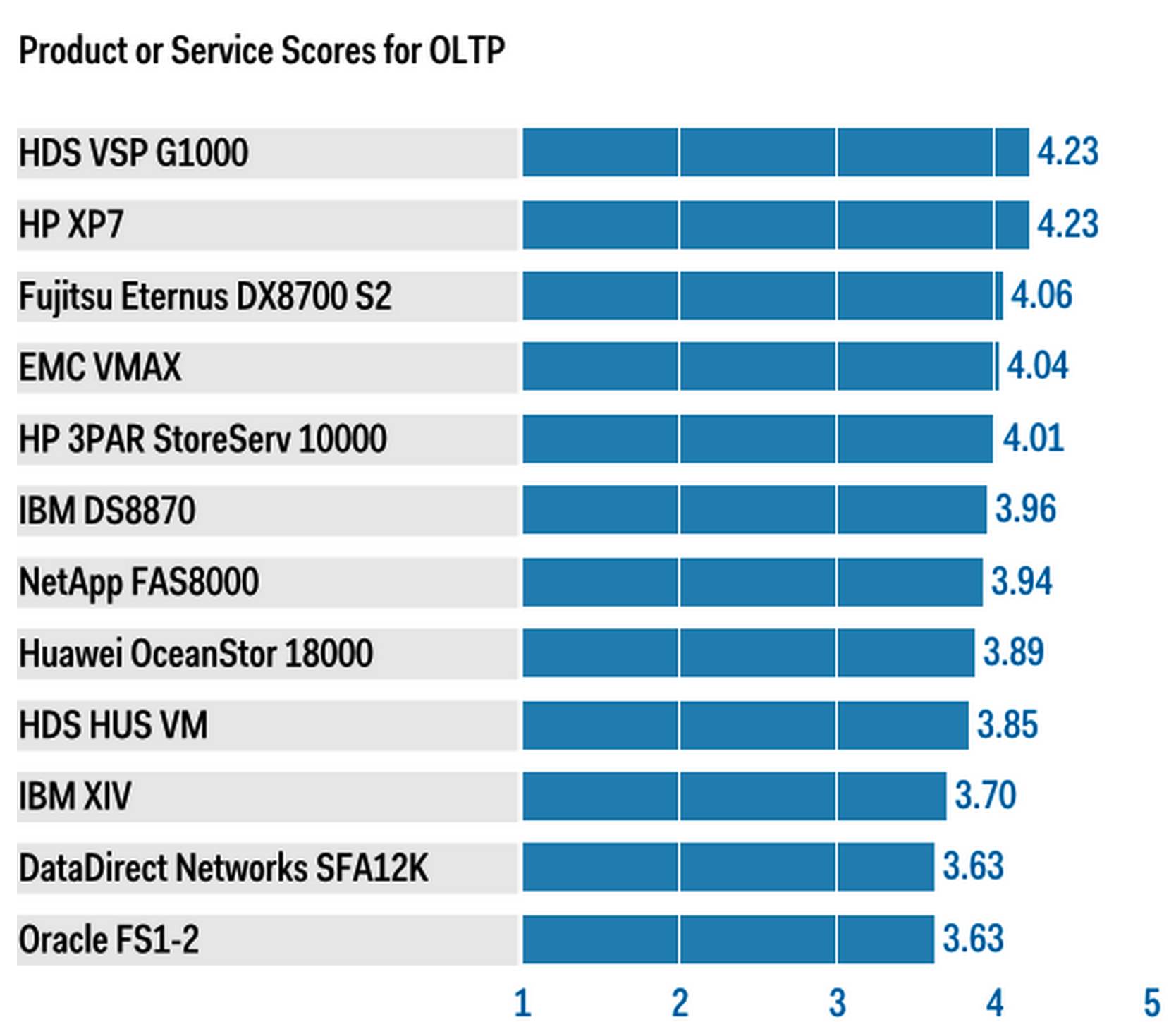

Figure 3. Vendors’ Product Scores for the OLTP Use Case

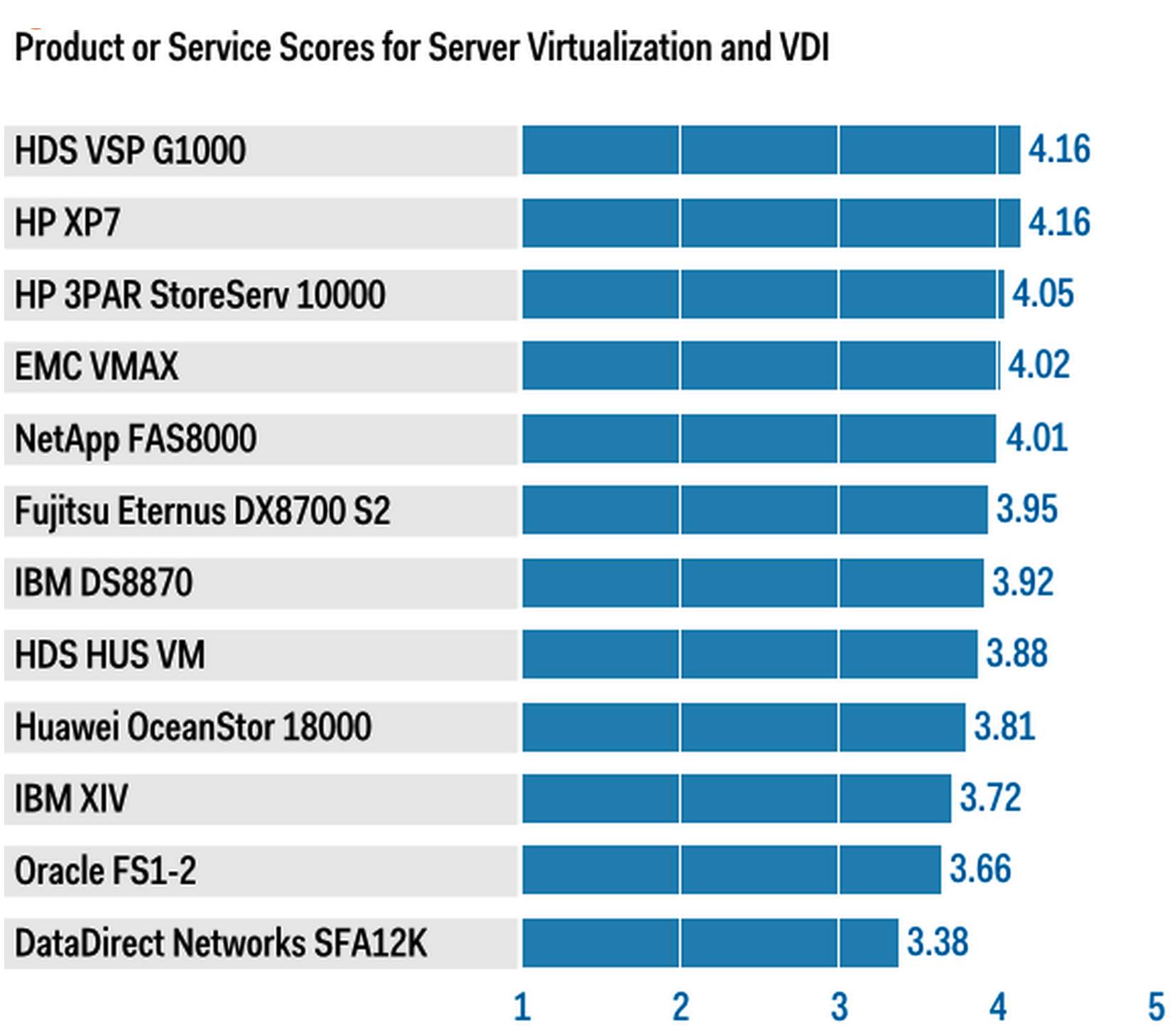

Figure 4. Vendors’ Product Scores for the Server Virtualization and VDI Use Case

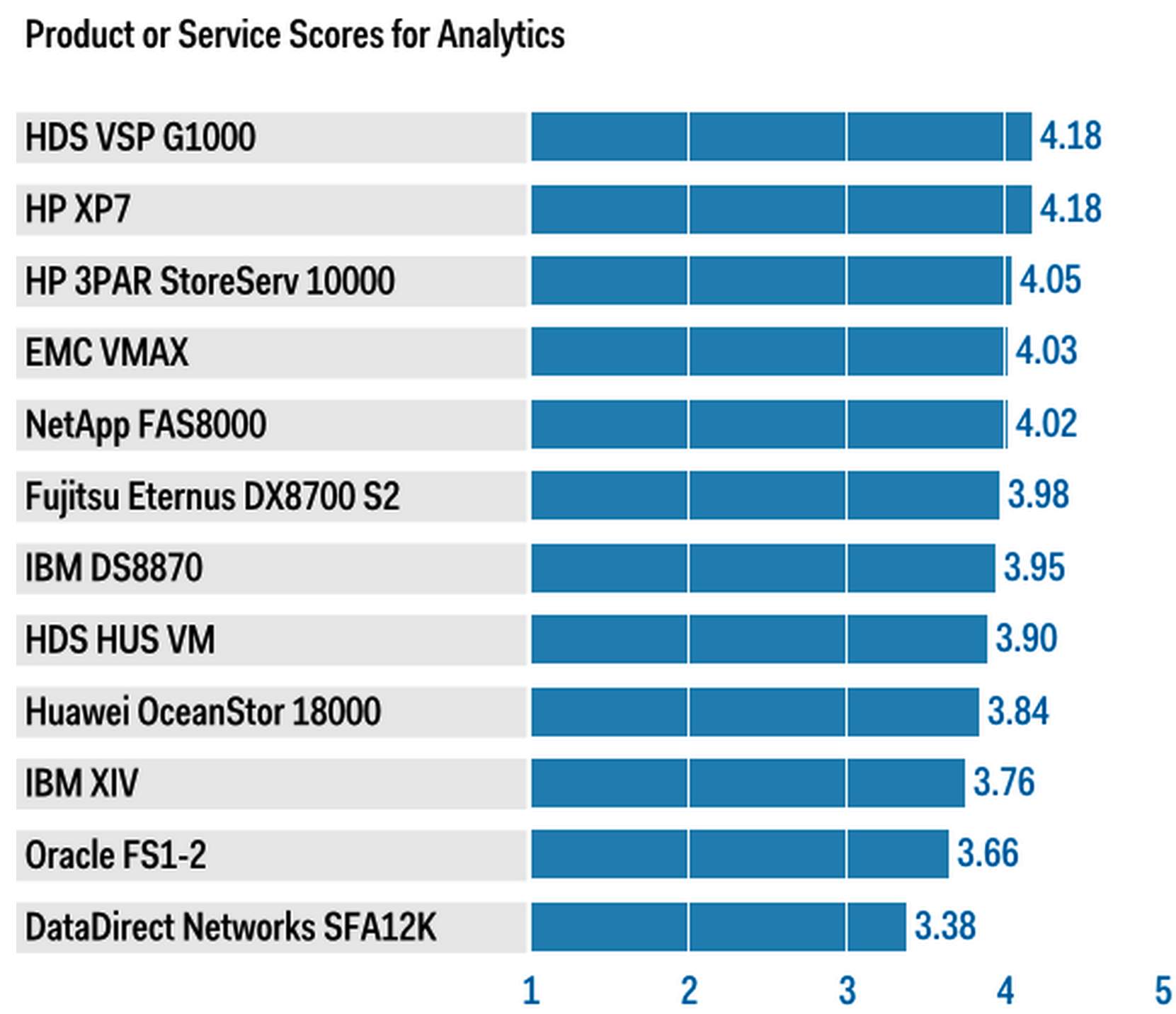

Figure 5. Vendors’ Product Scores for the Analytics Use Case

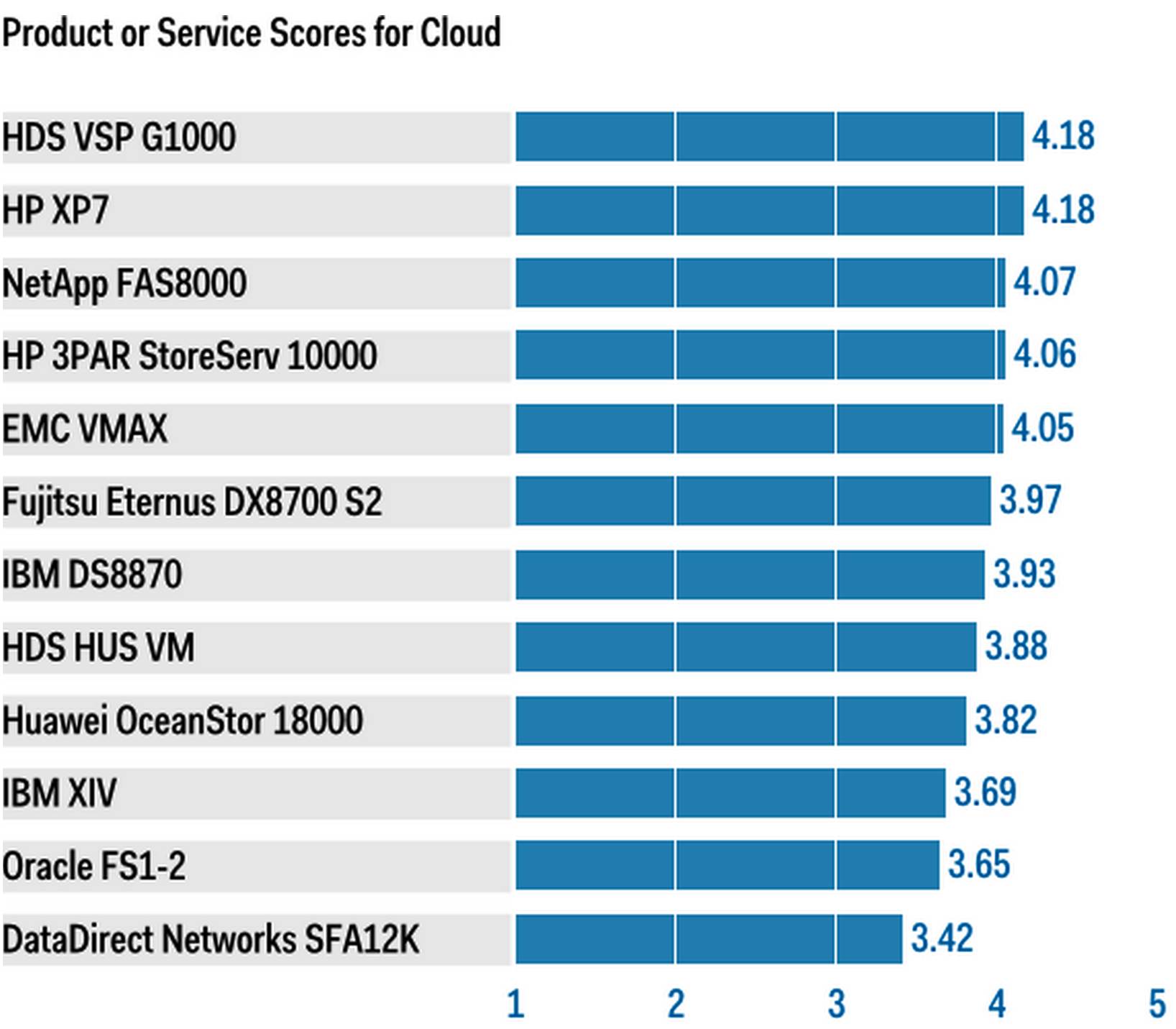

Figure 6. Vendors’ Product Scores for the Cloud Use Case

(Source: Gartner, November 2014)

Vendors

DataDirect Networks SFA12K

The SFA12KX, the newest member of the SFA12K family, increases SFA12K performance/throughput via a hardware refresh and software improvements. Like other members of the SFA12K family, it remains a dual-controller array that, with the exception of an in-storage processing capability, prioritizes scalability, performance/throughput and availability over value-added functionality, such as local and remote replication, thin provisioning and autotiering. These priorities align better with the needs of the high-end HPC market than with general-purpose IT environments. Further enhancing the appeal of the SFA12KX in large environments are its dense packaging (84 HDDs per 4U, or 5PB per rack) and its GridScaler and ExaScaler gateways, which support parallel file systems based on IBM’s GPFS or the open-source Lustre parallel file system.

The combination of high bandwidth and high areal density has made the SFA12K a popular array in the HPC, cloud, surveillance and media markets, which prioritize automatic block alignment and bandwidth over IO/s. The SFA12K’s high areal density also makes it an attractive repository for big data and inactive data, particularly as a target for backup solutions that perform their own compression and/or deduplication. Offsetting these strengths are limited ecosystem support beyond parallel file systems and backup/restore products; a lack of vSphere API for Array Integration (VAAI) support, which limits its appeal as VMware storage; a lack of zero bit detection, which limits its appeal for applications such as Exchange and Oracle Database; and QoS and security features that could limit its appeal in multitenant environments.

EMC VMAX

The maturity of the VMAX 10K, 20K and 40K hardware, combined with the Enginuity software and wide ecosystem support, provides proven reliability and stability. However, the need for backward compatibility has complicated the development of new functions, such as data reduction. The VMAX3 has not yet had time to be market-validated, because it only became available on 26 September 2014. Even with new controllers, promised Hypermax software updates and a new InfiniBand (IB) internal interconnect, mainframe support is not available, nor is support for the little-used FCoE protocol. Nevertheless, with new functions, such as built-in VPLEX, RecoverPoint replication, virtual thin provisioning and more processing power, customers should move quickly to the VMAX3, because it has the potential to develop further.

The new VMAX 100K, 200K and 400K arrays still lack independent benchmark results, which, in some cases, leads users to delay deploying a new feature into production environments until the feature’s performance has been fully profiled, and its impact on native performance is fully understood. The lack of independent benchmark results has also led to misunderstandings regarding the configuration of back-end SSDs and HDDs into RAID groups, which have required users to add capacity to enable the use of more-expensive 3D+1P RAID groups to achieve needed performance levels, rather than larger, more-economical 7D+1P RAID groups.
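The cost side of the RAID-group trade-off described above is simple arithmetic: a parity group with D data drives and P parity drives exposes D/(D+P) of its raw capacity, and capacity is added in whole groups. The sketch below compares 3D+1P and 7D+1P groups; the 4TB drive size and 168TB usable target are hypothetical, and it ignores hot spares, formatting overhead and any performance effect of group width.

```python
import math


def usable_fraction(data_drives: int, parity_drives: int) -> float:
    """Share of a parity RAID group's raw capacity that holds user data."""
    return data_drives / (data_drives + parity_drives)


def drives_for_usable(target_tb: float, drive_tb: float,
                      data_drives: int, parity_drives: int) -> int:
    """Drives needed to reach target_tb of usable capacity, in whole RAID groups.

    Ignores hot spares, formatting/metadata overhead and vendor-specific rules.
    """
    group_usable_tb = data_drives * drive_tb
    groups = math.ceil(target_tb / group_usable_tb)
    return groups * (data_drives + parity_drives)


DRIVE_TB = 4.0     # hypothetical drive size
TARGET_TB = 168.0  # hypothetical usable-capacity requirement

for d, p in [(3, 1), (7, 1)]:
    print(f"{d}D+{p}P: {usable_fraction(d, p):.1%} usable, "
          f"{drives_for_usable(TARGET_TB, DRIVE_TB, d, p)} drives")
```

Under these assumptions, 3D+1P groups are 75.0% usable and need 56 drives for the target, while 7D+1P groups are 87.5% usable and need 48, which is the extra capacity the paragraph says users had to add.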

EMC’s expansion into software-defined storage (SDS; aka ViPR), network-based replication (aka RecoverPoint) and network-based virtualization (aka VPLEX) suggests that new VMAX users should evaluate the use of these products, in addition to VMAX-based features, when creating their storage infrastructure and operational visions.

Fujitsu Eternus DX8700 S2

The DX8700 S2 series is a mature, high-end array with a reputation for robust engineering and reliability, with redundant RAID groups spanning enclosures and redundant controller failover features. Within the high-end segment, Fujitsu offers simple, unlimited software licensing on a per-controller basis; therefore, customers do not need to spend more on software as they increase the capacity of the arrays. The DX8700 S2 series was updated with a new level of software that improves performance and QoS. The QoS function not only manages latency and bandwidth, but also integrates with DX8700 Automated Storage Tiering to move data to the storage tier required to meet QoS targets. It is a scale-out array, providing up to eight controllers.

The DX8700 S2 has offered massive array of idle disks (MAID), or disk spin-down, functionality for years. Even though this feature has been implemented successfully without any reported problems, it has not been widely adopted or gained popular market acceptance. The same Eternus SF management software is used across the entire DX product line, from the entry level to the high end. This simplifies manageability, migration and replication among Fujitsu storage arrays. Customer feedback is positive concerning the performance, reliability, support and serviceability of the DX8700 S2, and Gartner clients report that DX8700 S2 RAID rebuild times are faster than those of comparable systems. The management interface is geared toward storage experts, but has been simplified in Eternus SF V16, thereby reducing training costs and improving storage administrator productivity. To enable workflow integration with SDS platforms, Fujitsu is working closely with the OpenStack project.

HDS HUS VM

The HDS Hitachi Unified Storage (HUS) VM is an entry-level version of the Virtual Storage Platform (VSP) series. Similar to its larger VSP siblings, it is built around Hitachi’s cross-bar switches, has the same functionality as the VSP, can replicate to HUS VM or VSP systems using TrueCopy or Hitachi Universal Replicator (HUR), and uses the same management tools as the VSP. Because it shares APIs with the VSP, it has the same ecosystem support; however, it does not scale to the same storage capacity levels as the HDS VSP G1000. Similarly, it does not provide data reduction features. Hardware reliability and microcode quality are good; this increases the appeal of its Universal Volume Manager (UVM), which enables the HUS VM to virtualize third-party storage systems.

HDS offers performance transparency with its arrays, with SPC-1 performance and throughput benchmark results available. Client feedback indicates that the use of thin provisioning generally improves performance and that autotiering has little to no impact on array performance. Snapshots have a measurably negative, but entirely acceptable, impact on performance and throughput. Offsetting these strengths are the lack of native iSCSI and 10GbE support, which is particularly useful for remote replication, as well as relatively slow integration with server virtualization, database, shareware and backup offerings. Integration with the Hitachi NAS platform adds iSCSI, CIFS and NFS protocol support for users that need more than just FC support.

HDS VSP G1000

The VSP has built its market appeal on reliability, quality microcode and solid performance, as well as its ability to virtualize third-party storage systems using UVM. The latest VSP G1000 was launched in April 2014, with more capacity and performance/throughput achieved via faster controllers and improved data flows. Configuration flexibility has been improved by a repackaging of hardware that enables controllers to be housed in a separate rack. VSP packaging also supports the addition of capacity-only nodes that can be separated from the controllers. It provides a larger variety of features, such as unified storage, heterogeneous storage virtualization and content management via integration with HCAP. Data compression and reduction are not supported. Each redundant node’s front- and back-end ports, cache and back-end capacity can be configured independently, as performance needs dictate, and accelerated flash can be used to boost performance in hybrid configurations. Additional feature highlights include thin provisioning, autotiering, volume cloning and space-efficient snapshots, synchronous and asynchronous replication, and three-site replication topologies.

The VSP asynchronous replication (aka HUR) is built around journal files stored on disk, which makes HUR tolerant of communication line failures, allows users to trade off bandwidth availability against RPOs and reduces the demands placed on cache. Its data flow also lets the remote VSP at the DR site pull writes to protected volumes, rather than having the production-side VSP push these writes to the DR site. Pulling writes, rather than pushing them, reduces the impact of HUR on the VSP systems and reduces bandwidth requirements, which lowers costs. Offsetting these strengths are the lack of native iSCSI and 10GbE support, as well as relatively slow integration with server virtualization, database, shareware and backup offerings.
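The journal-and-pull data flow described above can be illustrated with a toy model. This is a hypothetical sketch of the general technique, not Hitachi’s HUR implementation: the class and method names are invented, an in-memory deque stands in for the on-disk journal, and `max_entries` stands in for the bandwidth available per pull.

```python
import itertools
from collections import deque


class JournalSource:
    """Production side (hypothetical): records every write in a journal."""

    def __init__(self):
        self.journal = deque()  # stands in for journal volumes on disk
        self.next_seq = 0

    def write(self, volume, block, data):
        # Host writes complete locally; replication happens later.
        self.journal.append((self.next_seq, volume, block, data))
        self.next_seq += 1

    def read_batch(self, max_entries):
        """Return up to max_entries of the oldest entries without removing them."""
        return list(itertools.islice(self.journal, max_entries))

    def trim(self, up_to_seq):
        """Drop entries the remote side has already applied."""
        while self.journal and self.journal[0][0] <= up_to_seq:
            self.journal.popleft()


class JournalTarget:
    """DR side (hypothetical): pulls journal entries and applies them in order."""

    def __init__(self, source):
        self.source = source
        self.volumes = {}
        self.applied_seq = -1

    def pull(self, max_entries, link_up=True):
        if not link_up:
            # Link outage: nothing is lost, the backlog just grows on the source.
            return 0
        batch = self.source.read_batch(max_entries)
        for seq, volume, block, data in batch:
            self.volumes[(volume, block)] = data  # apply in sequence order
            self.applied_seq = seq
        self.source.trim(self.applied_seq)
        return len(batch)

    def backlog(self):
        """Writes not yet applied at the DR site, a rough proxy for RPO exposure."""
        return self.source.next_seq - 1 - self.applied_seq


src = JournalSource()
for i in range(5):
    src.write("vol1", i, f"data{i}")
dr = JournalTarget(src)
print(dr.pull(10, link_up=False), dr.backlog())  # 0 5
print(dr.pull(3), dr.backlog())                  # 3 2
print(dr.pull(10), dr.backlog())                 # 2 0
```

Because the DR side drives the transfer in this model, the production side only appends to its journal and serves reads; a smaller `max_entries` saves bandwidth at the cost of a larger backlog, which mirrors the bandwidth-versus-RPO trade-off described above.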

HP 3PAR StoreServ 10000

The 3PAR StoreServ 10000 is HP’s preferred, go-to, high-end storage system for open-system infrastructures that require the highest levels of performance and resiliency. Scalable from two to eight controller-nodes, the 3PAR StoreServ 10000 requires a minimum of four controller-nodes to satisfy Gartner’s high-end, general-purpose storage system definition. The 3PAR StoreServ 10000 is competitive with small and midsize traditional, frame-based, high-end storage arrays, particularly with regard to storage efficiency features and ease of use, and HP continues to make material R&D investments to enhance its availability, performance, capacity scalability and security capabilities. Configuring 3PAR StoreServ storage arrays with four or more nodes limits the effects of high-impact electronics failures to no more than 25% of the system’s performance and throughput. The impact of electronics failures is further reduced by 3PAR’s Persistent Cache and Persistent Port failover features, which enable the caches in surviving nodes to stay in write-back mode and active host connections to remain online.
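The 25% bound follows from spreading work across controller nodes: losing one of N equally loaded nodes removes roughly 1/N of controller resources. The sketch below assumes perfectly balanced load and linear scaling, which real arrays only approximate; it illustrates the arithmetic and is not a model of 3PAR internals.

```python
from fractions import Fraction


def worst_case_loss(nodes: int, failed: int = 1) -> Fraction:
    """Fraction of controller performance lost when `failed` of `nodes`
    equally loaded nodes go offline (idealized: perfect balance, linear scaling)."""
    if nodes < 1 or not 0 <= failed < nodes:
        raise ValueError("need nodes >= 1 and 0 <= failed < nodes")
    return Fraction(failed, nodes)


for n in (2, 4, 8):
    loss = worst_case_loss(n)
    print(f"{n} nodes: lose {float(loss):.1%}, keep {float(1 - loss):.1%}")
```

With these idealized assumptions, two nodes lose 50.0%, four lose 25.0% and eight lose 12.5%; the two-node case matches the up-to-50% reduction this report cites for dual-controller designs.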

Resiliency features include three-site replication topologies, as well as Peer Persistence, which enables transparent failover and failback between two StoreServ 10000 systems located within metropolitan distances. However, offsetting the benefit of these functions are the relatively long RPOs that result from 3PAR’s asynchronous remote copy sending the differences between two snapshots to faraway DR sites; microcode updates that can be time-consuming, because the time required is proportional to the number of nodes in the system; and a relatively large footprint caused by the use of four-disk magazines, instead of denser packaging schemes.

HP XP7

Sourced from Hitachi Ltd. under joint technology and OEM agreements, the HP XP7 is the next incremental evolution of the high-end, frame-based XP-Series that HP has been selling since 1999. Engineered to support applications that require the highest levels of resiliency and performance, the HP XP7 features increased capacity scalability and performance over its predecessor, the HP XP P9500, while leveraging the broad array of proven HP XP-Series data management software. Beyond the expected capacity and performance improvements, two notable enhancements are the new Active-Active HA and Active-Active data mobility functions, which elevate storage system and data center availability and provide nondisruptive, transparent application mobility among host servers at the same or different sites. The HP XP7 shares a common technology base with the Hitachi/HDS VSP G1000; HP differentiates the XP7 through broader integration and testing with the full HP portfolio ecosystem and the availability of Metro Cluster for HP Unix, as well as by restricting the ability to replicate between XP7 and HDS VSP systems.

Positioned in HP’s traditional storage portfolio, the XP7’s primary mission is to serve as an upgrade platform for the XP-Series installed base and to address opportunities involving IBM mainframes and storage for HP NonStop infrastructures. Since HP acquired 3PAR, XP-Series revenue has declined annually, as HP places more go-to-market weight behind the 3PAR StoreServ 10000 offering.

Huawei OceanStor 18000

The OceanStor 18000 storage array supports both scale-up and scale-out capabilities. Data flows are built around Huawei’s Smart Matrix switch, which interconnects as many as 16 controllers, each configured with its own host connections and cache, with back-end storage directly connected to each engine. Hardware build quality is good, and shows attention to detail in packaging and cabling. The feature set includes storage-efficiency features, such as thin provisioning and autotiering, snapshots, synchronous and asynchronous replication, QoS that nondisruptively rebalances workloads to optimize resource utilization, and the ability to virtualize a limited number of external storage arrays.

Software is grouped into four bundles and is priced on capacity, except for path failover and load-balancing software, which is priced by the number of attached hosts to encourage widespread usage. The compatibility support matrix includes Windows, various Unix and Linux implementations, VMware (including VAAI and vCenter Site Recovery Manager support) and Hyper-V. Offsetting these strengths are relatively limited integration with various backup/restore products, configuration and management tools that are more technology- than ease-of-use-oriented, a lack of documentation and storage administrators familiar with Huawei, and a support organization that is largely untested outside mainland China.

IBM DS8870

The DS8870 is a scale-up, two-node controller architecture that is based on, and dependent on, IBM’s Power server business. Because IBM owns the z/OS architecture, IBM has inherent cross-selling, product integration and time-to-market advantages in supporting new z/OS features, relative to its competitors. Snapshot and replication capabilities are robust, extensive and relatively efficient, as shown by features such as FlashCopy; synchronous, asynchronous and three-site replication; and consistency groups that can span arrays. The latest significant DS8870 updates include Easy Tier improvements, as well as a High Performance Flash Enclosure, which eliminates earlier SSD-related architectural inefficiencies and boosts array performance. Even with the addition of the Flash Enclosure, the DS8870 is no longer IBM’s high-performance system, and data reduction features are not available unless extra SVC devices are purchased in addition to the DS8870.

Overall, the DS8870 is a competitive offering. Ease-of-use improvements have been achieved by porting the XIV management GUI to the DS8870. However, customers report that the new GUI still requires a more detailed administrative approach and is not yet suited to high-level management, as provided by the XIV icon-based GUI. Due to the dual-controller design, major software updates can disable one of the controllers for as long as an hour. These updates need to be planned, because they can reduce the availability and performance of the system by as much as 50% during the upgrade process. With muted traction in VMware and Microsoft infrastructures, IBM positions the DS8870 as its primary enterprise storage platform to support z/OS and AIX infrastructures.

IBM XIV

The current XIV is in its third generation. Its freedom from legacy dependencies is apparent in its modern, easy-to-use, icon-based operational interface and its scale-out distributed processing and RAID protection scheme. Good performance and the XIV management interface are winning deals for IBM. This generation enhances performance with the introduction of SSD and a faster IB interconnect among the XIV nodes. The advantages of the XIV are simple administration and inclusive software licenses, which make buying and upgrading the XIV simple, without hidden or additional storage software license charges. The mirrored RAID implementation yields a raw-to-usable capacity ratio that is less efficient than traditional RAID 5/6 designs; therefore, scalability reaches only 325TB. However, combined with inclusive software licensing, XIV usable capacity is priced accordingly, so its price per TB is competitive in the market.
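The capacity penalty of mirroring relative to parity RAID can be shown by applying each scheme to the same raw capacity. The 640TB raw figure and the 7D+1P and 6D+2P group widths are hypothetical, not XIV specifications, and the sketch ignores spare capacity and metadata.

```python
def mirrored_usable(raw_tb: float) -> float:
    """Two-copy mirroring: every block is stored twice."""
    return raw_tb / 2


def parity_usable(raw_tb: float, data: int, parity: int) -> float:
    """Parity RAID with `data` data drives and `parity` parity drives per group."""
    return raw_tb * data / (data + parity)


RAW_TB = 640.0  # hypothetical raw capacity

print("mirroring    :", mirrored_usable(RAW_TB))      # 320.0
print("RAID 5, 7D+1P:", parity_usable(RAW_TB, 7, 1))  # 560.0
print("RAID 6, 6D+2P:", parity_usable(RAW_TB, 6, 2))  # 480.0
```

This is why comparing arrays on price per usable TB, rather than per raw TB, normalizes for the protection scheme.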

A new Hyper-Scale feature enables IBM to federate multiple XIV platforms into a petabyte-plus infrastructure, with the Hyper-Scale Manager administering several XIV systems as one. IBM positions the XIV as its primary high-end storage platform for VMware, Hyper-V and cloud infrastructure deployments, and has released several new and incremental XIV enhancements, foremost of which are three-site mirroring, multitenancy and VMware vCloud Suite integration.

NetApp FAS8000

The high-end FAS series model numbers were changed from FAS6000 to FAS8000. The upgrade included faster controllers and storage virtualization that is built into the system and enabled via a software license. Because each FAS8000 HA node pair is a scale-up, dual-controller array, inclusion in this Critical Capabilities research requires that the FAS8000 series be configured with at least four FAS8000 nodes managed by Clustered Data Ontap, which supports a maximum of eight nodes for deployment with SAN protocols and up to 24 nodes with NAS protocols. Depending on drive capacity, Clustered Data Ontap can support a maximum raw capacity of 2.6PB to 23.0PB in a SAN infrastructure, and 7.8PB to 69.1PB in a NAS infrastructure.

The FAS system is no longer the flagship high-performance, low-latency storage array for NetApp customers that value performance over all other criteria. They can now choose NetApp products such as the FlashRay. Seamless scalability, nondisruptive upgrades, robust data service software, storage-efficiency capabilities, flash-enhanced performance, unified block-and-file multiprotocol support, multitenant support, ease of use and validated integration with leading ISVs are key attributes of an FAS8000 configured with Clustered Data Ontap.

Oracle FS1-2

The hybrid FS1-2 series replaces the Oracle Pillar Axiom storage arrays and is the newest array family in this research. Even though the new system has fewer SSD and HDD slots, capacity scalability has increased by approximately 30%, to a total of 2.9PB, which includes up to 912TB of SSD. The design remains a scale-out architecture with the ability to cluster eight FS1-2 pairs together. The FS1 has an inclusive software licensing model, which makes upgrades simpler from a licensing perspective. The software features included in this model are QoS Plus, automated tiered storage, thin provisioning, support for up to 64 physical domains (multitenancy) and support for multiple block-and-file protocols. However, if replication is required, the Oracle MaxRep engine is a chargeable option.

The MaxRep product provides synchronous and asynchronous replication, consistency groups and multihop replication topologies. It can be used to replicate, and therefore migrate, older Axiom arrays to newer FS1-2 arrays. Positioned to provide best-of-breed performance in an Oracle infrastructure, the FS1-2 enables Hybrid Columnar Compression (HCC) to optimize Oracle Database performance, as well as engineered integration with Oracle VM and Oracle’s broad library of data management software. However, the FS1 has yet to fully embrace integration with competing hypervisors from VMware and Microsoft.

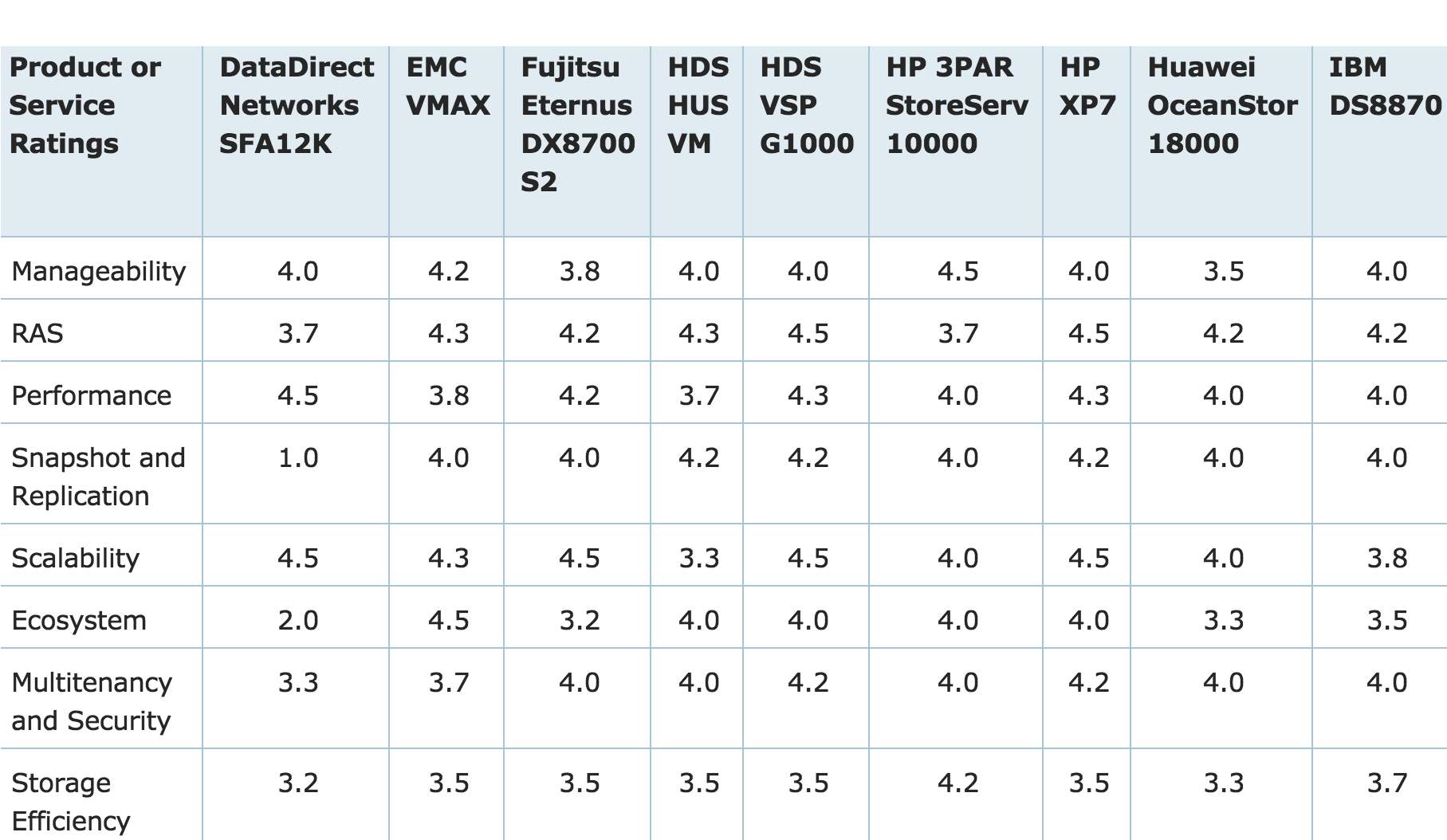

Critical Capabilities Rating

Each product or service that meets our inclusion criteria has been evaluated on several critical capabilities on a scale from 1.0 (lowest ranking) to 5.0 (highest ranking). Rankings (see Table 3) are not adjusted to account for differences in various target market segments. For example, a system targeting the SMB market is less costly and less scalable than a system targeting the enterprise market, and would rank lower on scalability than the larger array, despite the SMB prospect not needing the extra scalability.

Table 3. Product/Service Rating on Critical Capabilities

(Source: Gartner, November 2014)

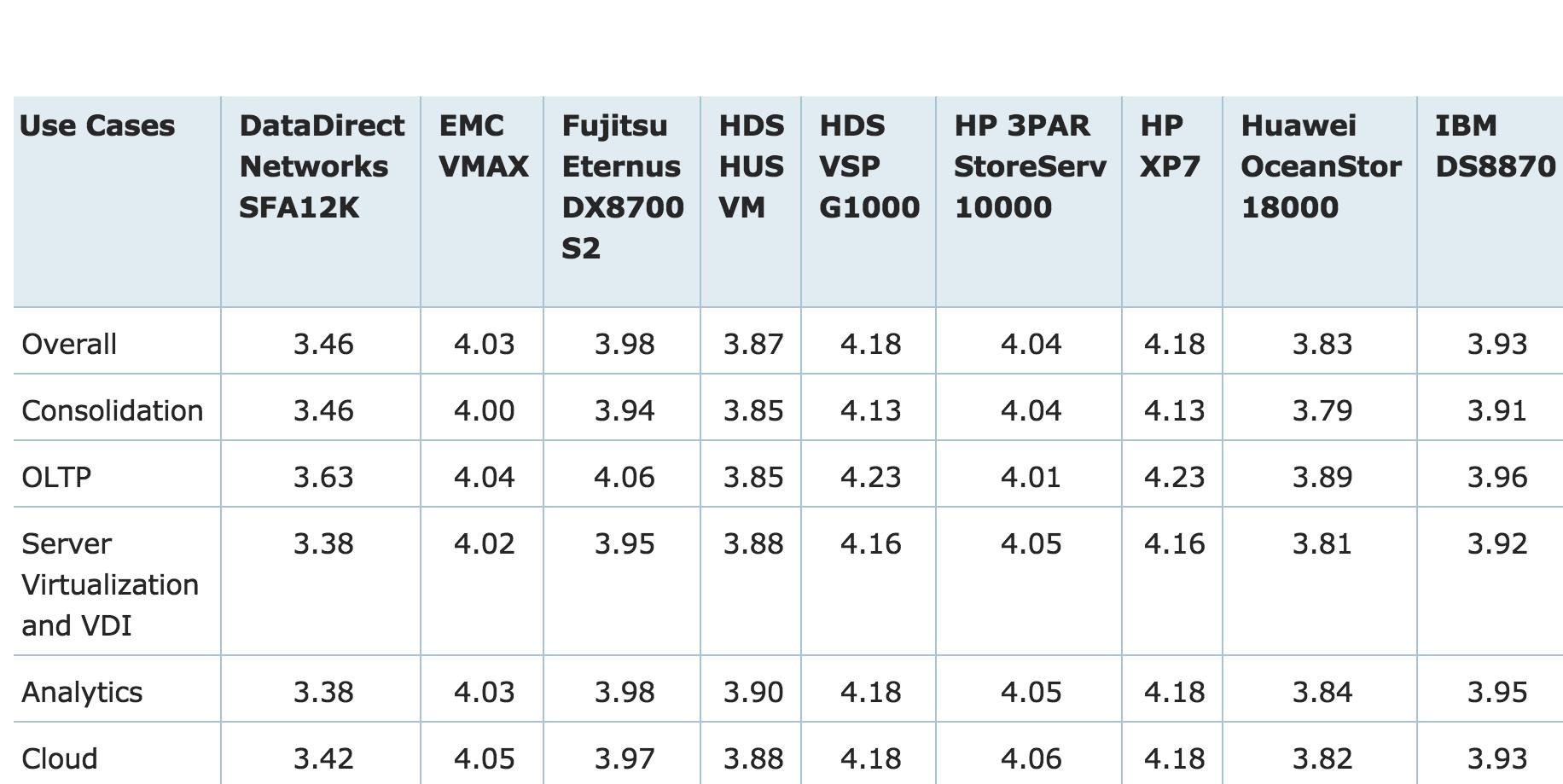

Table 4 shows the product/service scores for each use case. The scores, which are generated by multiplying the use case weightings by the product/service ratings, summarize how well the critical capabilities are met for each use case.

Table 4. Product Score on Use Cases

(Source: Gartner, November 2014)

To determine an overall score for each product/service in the use cases, multiply the ratings in Table 3 by the weightings shown in Table 2.
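The scoring described above is a weighted sum: for each use case, each capability rating (1.0 to 5.0) is multiplied by that capability’s weighting, and the products are added. The sketch below uses hypothetical arrays, capabilities, ratings and weights (not Gartner’s figures) to show how changing the weighting can change which product ranks first, which is why the report recommends applying your own criteria.

```python
# Hypothetical illustration of Critical Capabilities-style scoring.
# Capability names, weights and ratings are made-up placeholders,
# not figures from the Gartner report.

def use_case_score(ratings, weights):
    """Weighted sum of ratings; weights are fractions that must total 1.0."""
    total = sum(weights.values())
    if abs(total - 1.0) > 1e-9:
        raise ValueError(f"weights must sum to 1.0, got {total}")
    # A missing rating raises KeyError rather than silently counting as 0.
    return sum(ratings[cap] * w for cap, w in weights.items())


# Two hypothetical arrays rated on three hypothetical capabilities.
arrays = {
    "array_a": {"performance": 4.5, "availability": 3.0, "manageability": 3.0},
    "array_b": {"performance": 3.0, "availability": 4.0, "manageability": 4.0},
}

# Two hypothetical use-case weightings.
use_cases = {
    "oltp_like": {"performance": 0.6, "availability": 0.3, "manageability": 0.1},
    "consolidation_like": {"performance": 0.2, "availability": 0.4, "manageability": 0.4},
}

for uc, weights in use_cases.items():
    scores = {name: round(use_case_score(r, weights), 2) for name, r in arrays.items()}
    best = max(scores, key=scores.get)
    print(uc, scores, "->", best)
```

With these placeholder numbers, array_a ranks first under the OLTP-like weighting (3.9 vs 3.4) and array_b under the consolidation-like weighting (3.8 vs 3.3).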
