Long-Term Storage Solutions Heat Up to Manage Explosive Data Growth – IDC

Report sponsored by Storiant

This is a Press Release edited by StorageNewsletter.com on February 12, 2015 at 2:41 pm

This article, Long-Term Storage Solutions Heat Up to Manage Explosive Data Growth (January 2015), was adapted from IDC’s Worldwide Long-Term Storage Ecosystem Taxonomy, 2014 by Ashish Nadkarni, Laura DuBois, John Rydning, et al., IDC #246732, and sponsored by Storiant, Inc.

Today’s enterprise datacenters are dealing with new challenges that are far more demanding than ever before. Foremost is the exponential growth of data, most of it unstructured. Big data and analytics implementations are also quickly becoming a strategic priority in many enterprises, demanding online access to more data, which is retained for longer periods of time. Legacy storage solutions with fixed design characteristics and a cost structure that doesn’t scale are proving to be ill-suited for these new needs. This technology spotlight examines the issues that are driving organizations to replace older archive and backup-and-restore systems with business continuity (BC) and always-available solutions that can scale to handle extreme data growth while leveraging a cloud-based pricing model. The paper also looks at the role of Storiant and its long-term storage services solution in the strategically important long-term storage market.

Introduction

It’s no secret that the rampant proliferation of smartphones, camera phones, tablet devices, mobile devices, big data, and the Internet of Things has led to an ‘uber-connected’ world – for businesses and consumers alike. We live in a world in which social media is considered to be an existential strand of human-to-human interaction and in which humans are increasingly demanding information access, services, and entertainment delivered instantaneously via any and all of their devices.

We also live in a world where data is the new digital currency. We’re only scratching the surface of a potential ‘value’ gold mine derived from analyzing data from devices and sensors. In 2013, IDC forecast that the Digital Universe contains 4.4 zettabytes worth of data, but less than 1% of that data is currently being analyzed, creating opportunity and unrealized value extraction.

This explosive data growth has led to newer data access paradigms such as cloud. But more importantly, it has led to a situation where more and more data sets are short-lived, with shelf lives spanning hours, minutes, and even seconds in many cases. This fact is not lost on most cloud services and social media providers, which must grapple with maintaining an agile infrastructure.

As a result, long-term storage solutions are gaining traction as a new form of ultra-cheap storage with data accessibility that’s faster than tape, yet designed specifically for long-term data. Long-term data is becoming more prevalent, thanks to the changing nature of human interactions, devices, sensors, and applications. Coupled with this trend is the rise of hyperscale datacenters that strive to achieve near 100% uptime.

The Changing Storage Infrastructure Landscape

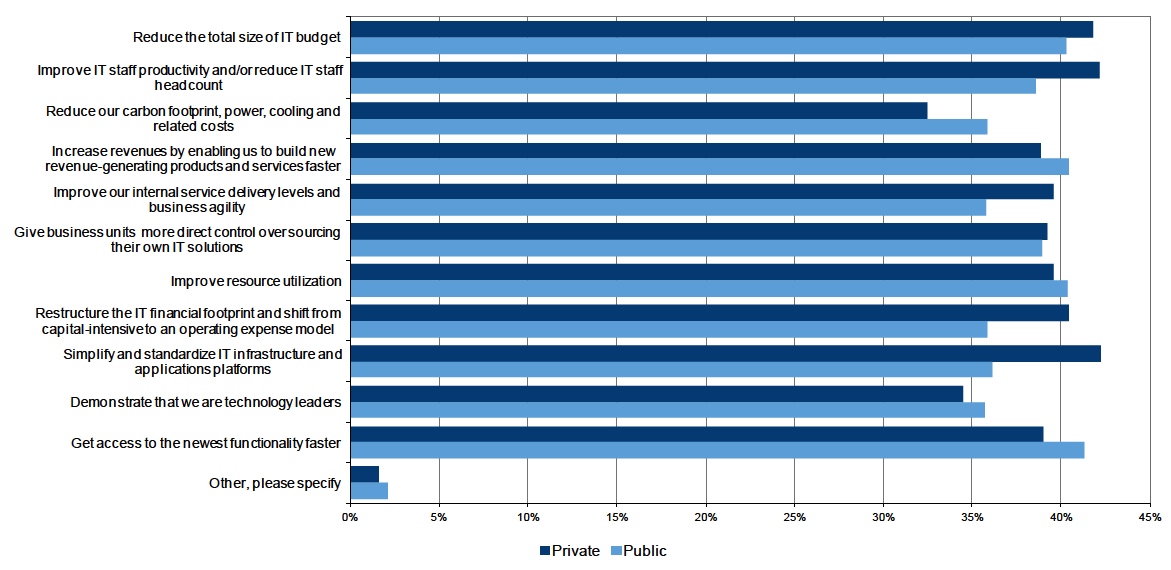

As humans, we take comfort in retaining physical exhibits of our memories forever – and our habits have quickly extended to the digital and social world. The same holds true with businesses. As businesses transform into data-driven entities, they are storing more data with the expectation that it has future value that can be extracted someday or, in many cases, storing data to comply with regulations or adhere to corporate policies. Storage infrastructures are requiring more capacity to store the increasing volume of data and retain the data for longer periods of time. However, for all of this additional data that businesses are generating, IT budgets are not keeping up. Furthermore, IT staff productivity, which results in indirect costs, is also impacted by this data deluge. As a recent IDC survey shows, these issues are top of mind for CIOs deploying private cloud or consuming public clouds.

Important Drivers for Moving to Cloud

Q. Of the following potential reasons for moving to cloud, which are considered important drivers that you expect to achieve when moving to cloud? (Choose all that apply.)

n = 1,109

(Source: IDC, 2015)

The data deluge trend can be observed in the demand for various storage infrastructures:

- Aggregate capacity shipped by storage system OEMs for capacity-optimized, performance-optimized, and I/O-intensive disk storage systems is expected to reach 107EB in 2016.

- Capacity shipped for building file- and object-based storage (FOBS) solutions is expected to reach 173EB in 2017. This includes FOBS solutions built on traditional storage system platforms as well as on self-built platforms, the approach more typical for hyperscale service provider datacenters.

- Capacity shipped in support of public cloud services and private cloud deployments is expected to reach 141EB in 2017. Similar to the estimate for FOBS, this estimate includes storage capacity shipped with traditional storage systems in support of cloud services as well as storage capacity acquired by service providers through non-OEM channels.

Note that these capacity-shipment expectations are not mutually exclusive.

The Birth of Long-Term Storage

Today’s data environment features two types of data:

- High-response-time data: This data is frequently accessed (not necessarily modified or transacted with) and can be and often is modified directly by applications that need high I/O capabilities. In most cases, access times for production data are in the sub-millisecond to single-digit millisecond range, whereas access times for nonproduction data can range from double-digit milliseconds to seconds, depending on protocol types. Long-term storage systems such as Storiant are now raising the bar in that they can consistently return any piece of data in seconds.

- Always-available data for archiving or BC: Always-available data stored in an archive is infrequently or never accessed after it’s written. Given the more relaxed SLAs around less active data and the use of offline media, data access/retrieval times can be anywhere from seconds or minutes to even hours. Amazon AWS Glacier, for example, has a stated access time of four hours. In many cases, suppliers make fast metadata query/access available in lieu of the actual data fetch. For the BC use case that is becoming increasingly important for long-term capacity-optimized data, businesses need this data to be available as well, but they don’t need to perform any transactions with it.

Several factors can influence a storage decision.

IDC has identified the following top pain points that govern the management of data:

- Identification: The challenges and difficulties in discovering which data is ‘high response’ and which data is ‘always available’ for archiving (Businesses almost always overestimate the percentage of data that they actually need and use. The reality is that the vast majority of data is generally suitable for ‘always-available archiving,’ particularly if one assumes a wait time of 30 seconds or less versus minutes or hours.)

- Placement: The ability to move or transition data to the appropriate solution to meet the cost objective once the data type is identified

- Change in data type over time: Managing the transition period from high-response data to always-available archived data while ensuring that there is no impact on the production application in terms of response time
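The identification and placement steps above can be illustrated with a minimal sketch. It assumes that idle time since last access is a usable signal for separating ‘high response’ from ‘always available’ data; the `DataSet` type, the `classify` function, and the 90-day threshold are hypothetical choices for illustration and do not come from IDC or any vendor.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class DataSet:
    """Hypothetical record describing one data set and when it was last read."""
    name: str
    last_accessed: datetime


def classify(ds: DataSet, now: datetime,
             idle_threshold: timedelta = timedelta(days=90)) -> str:
    """Label a data set by how long it has been idle.

    The 90-day default is an assumed policy value, not a figure from the
    source; each organization would pick its own threshold.
    """
    idle_for = now - ds.last_accessed
    if idle_for >= idle_threshold:
        return "always-available"   # candidate for the long-term tier
    return "high-response"          # stays on production storage


if __name__ == "__main__":
    now = datetime(2015, 2, 12)
    for ds in (DataSet("clickstream-2013", datetime(2014, 1, 1)),
               DataSet("orders-current", datetime(2015, 2, 10))):
        print(ds.name, "->", classify(ds, now))
```

In practice, a classifier like this would run periodically so that data whose type changes over time is picked up and moved without touching the production application.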

In the storage market, balancing response time and cost is critical because most applications are generally programmed to have response times of less than 50 milliseconds from online storage (under normal operations). An examination of storage response time at a high level shows that the overriding key performance indicator is cost. For example, storing data on tape offsite is less expensive than storing data on always-on spinning disk. However, the response/retrieval time for tape in this solution can be up to 24 hours. Furthermore, even if the tape media is cheaper, the infrastructure may be a different story, depending on how many tapes are needed for reading and writing at the same time. Essentially, there is a response time chasm between online applications, at milliseconds, and offsite tape, at up to 24 hours.

Purpose-built backup appliances (PBBAs) provide a solution for lower storage capacity cost with acceptable application response time through the ability to transition data from inactive to active quickly (i.e., applications don’t have to be rewritten to use a PBBA). With the PBBA, the response time chasm is now seconds to 24 hours, and at the end of the day, PBBAs are still using spinning disk.

Dramatically reducing storage cost requires trade-offs to be made between service and risk, usually affecting response time. Long-term storage is a running mode or method of operation of a storage device or system for always-available archived data where an explicit trade-off is made: to achieve significant capital and operational savings, it accepts response times that, historically, would be considered unacceptable for online or production applications. Examples of deployment could result in underlying media such as tape or disk that would not be spinning but that would be readily available for access. Defining an arbitrary number to define response time for long-term storage is difficult because what is acceptable varies per organization or application or even transaction.

Given the pace at which the amount of inactive data is growing vis-à-vis the pace of overall data growth, many service providers, infrastructure suppliers, and ISVs are offering or planning to offer specialized infrastructure for long-term data retention.

Considering Storiant

One such vendor is Storiant, a Boston, MA-based firm focused on solving the problem of rising storage costs driven by exponential data growth. The inspiration for Storiant’s long-term storage service came from companies such as Facebook, Yahoo!, and Amazon, which operate datacenters optimized for ultra-low cost and infinite scalability. Storiant’s mission is to deliver an economically viable way for organizations to achieve Facebook- and Amazon-like scale and cost efficiencies inside private datacenters belonging to enterprises and specialized service providers.

Storiant delivers a long-term storage solution that scales to exabytes, with a TCO less than $0.01 per gigabyte per month. With Storiant’s cloud-based long-term storage, datacenter professionals can reap the benefits of storage on disk at the price points of storage on tape. According to the company, this scale and low TCO will open the door to the next wave of big data solutions while solving the cost-control challenges facing private datacenters today.
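To put the stated TCO figure in perspective, the short calculation below treats $0.01 per gigabyte per month as a ceiling (the claim is "less than" that amount) and assumes decimal units, with 1PB equal to 1,000,000GB. The function names are illustrative only.

```python
from decimal import Decimal

# Ceiling taken from the stated TCO claim; the actual figure is "less than" this.
PRICE_CEILING_PER_GB_MONTH = Decimal("0.01")
GB_PER_PB = 1_000_000  # decimal units assumed


def monthly_cost_ceiling(capacity_gb: int) -> Decimal:
    """Upper bound on monthly TCO in dollars for the given capacity."""
    return PRICE_CEILING_PER_GB_MONTH * capacity_gb


def annual_cost_ceiling(capacity_gb: int) -> Decimal:
    """Upper bound on yearly TCO in dollars (12 months)."""
    return monthly_cost_ceiling(capacity_gb) * 12


if __name__ == "__main__":
    print("1PB per month, at most: $", monthly_cost_ceiling(GB_PER_PB))
    print("1PB per year, at most:  $", annual_cost_ceiling(GB_PER_PB))
```

Under these assumptions, one petabyte would cost at most $10,000 per month, or $120,000 per year.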

Storiant is an intelligent storage software solution that runs on open standard hardware and economical cloud or desktop-class drives. The combination enables enterprises to achieve a long-term storage TCO rivaling public cloud economics. It’s designed for enterprises interested in performance, compliance, and control of petabyte-scale backup, archive, and big storage.

Storiant’s storage system includes the following components:

- Granular power control that operates to reduce power consumption by up to 80% while still offering a maximum time to first byte of 15 seconds (Active power management will aggressively power down drives that are not servicing active read requests.)

- Scalable and flexible metastorage based on Cassandra (Storing object metadata in a database rather than as file attributes allows the Storiant solution to easily set and enforce policies such as retention periods and optional automated deletion based on object metadata.)

- 18-9s of data durability that are achieved through the use of OpenZFS and replication (Storiant includes 5% active spare drives, a fully configured monitoring and alerting system, and drive diagnostics that result in an easy-to-operate solution with a mean time to repair of up to one year.)

- High throughput for very high ingest and read rates as well as data migration (wire speed on 10GbE)

- SEC 17a-4(f) compliance with immutability

- Multitenant (for infrastructure-as-a-service providers)
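The metadata-driven policy idea in the list above (retention periods and optional automated deletion) can be sketched as follows. This is not Storiant’s implementation or schema: `ObjectMeta`, `may_delete`, and `due_for_auto_delete` are hypothetical names, and an in-memory list stands in for the metadata database.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass
class ObjectMeta:
    """Hypothetical per-object metadata record kept apart from the object data."""
    key: str
    created: datetime
    retention: timedelta       # object is immutable until created + retention
    auto_delete: bool = False  # opt in to deletion once retention has lapsed


def may_delete(meta: ObjectMeta, now: datetime) -> bool:
    """True only once the retention period has fully elapsed."""
    return now >= meta.created + meta.retention


def due_for_auto_delete(objects: List[ObjectMeta], now: datetime) -> List[str]:
    """Keys of objects that opted in to auto-delete and are past retention."""
    return [m.key for m in objects if m.auto_delete and may_delete(m, now)]


if __name__ == "__main__":
    now = datetime(2015, 2, 12)
    catalog = [
        ObjectMeta("trade-log-2010", datetime(2010, 1, 1), timedelta(days=365 * 3), True),
        ObjectMeta("trade-log-2014", datetime(2014, 6, 1), timedelta(days=365 * 3), True),
        ObjectMeta("contract-scan", datetime(2009, 1, 1), timedelta(days=365), False),
    ]
    print(due_for_auto_delete(catalog, now))
```

Because the policy fields live in queryable metadata rather than in file attributes, a policy engine can evaluate them without reading, or even powering up the drives that hold, the objects themselves.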

Storiant offers a choice of the following application interfaces:

- REST (Swift, S3)

- Client library – Java/Python/.NET

- Hadoop – MapReduce

- NFS/CIFS via Storiant Link gateway

- Native integration with backup products from Symantec and CommVault

Challenges

A crucial measure of a vendor’s success in the long-term storage market is how well the vendor helps its customers and prospects achieve a dramatic reduction in their storage TCO. As much as infrastructure innovations such as those being championed by Storiant help customers embark on this TCO reduction journey, ultimately customers cannot be successful unless they bring about a change in how their application developers access this storage infrastructure. While traditional file and block access mechanisms will survive in the short term, the future lies in object-based API access. As part of its mission to gain traction, Storiant needs to motivate changes in developer behavior, which in turn will help infrastructure owners realize greater savings.

Recommendations and Conclusion

Organizations evaluating long-term storage solutions should look for the following features and functionality:

- Open standard access: The solution must offer open or standards-based API access such as AWS S3 and OpenStack Swift and/or programmable metadata access for command/control to query the structural integrity of the data. It should also offer the ability to tag data as inactive and facilitate the movement of data to the long-term storage tier. However, prudent vendors such as Storiant will also offer file-access gateways that allow customers to bridge the time gap, while they develop application-to-storage access via APIs. Significant time and money will be invested by organizations to develop applications to take advantage of long-term storage solutions. Therefore, to protect their investment, organizations do not want to be bound to vendor-controlled or proprietary standards. Finally, the solution must offer programmatic service levels and quality attributes for data availability, resiliency, retrieval, and access.

- Media characteristics: Media used in long-term storage will need to support characteristics such as sustained (per device) power-off states and unrecoverable bit error protection (or bit rot protection). Simply providing the ability to spin down a drive or shelf of drives when idle will not be enough. Long-term storage solutions must manage incoming and outgoing traffic intelligently to ensure a minimal amount of drive spin-up and sustained periods of disk drive shutdown to maximize operational savings. Similarly, unrecoverable bit errors on the underlying media will be a condition that long-term storage solution providers cannot ignore. The solution provider should have mechanisms in place to search for bit rot and recover proactively with minimal overall impact to performance, power, and cost.

- Data security, compliance, and durability: Regulatory issues will be a key driver for the long-term storage market. Therefore, providing write once, read many access would be a standard requirement for most enterprise implementations for all or a portion of their long-term storage usage. From a durability perspective, single-site solutions will be sufficient for initial deployments; however, as the capacity of the solution grows, the data will need to be replicated for site resiliency. Service providers will have various preferences on whether the replication will be synchronous or asynchronous. However, it will be important to offer both methods at a very granular policy-based level. Similarly, ‘hyperscale’ Web properties and service providers may limit the general-purpose nature of a long-term storage device to achieve maximum efficiency at scale. Therefore, granular policy-based management will be a key feature in the private cloud market. Encrypting data at rest will be an important consideration – though not a requirement. In the short term, long-term storage solutions will likely leverage software-based encryption solutions, but over the longer term, encryption at the device level may be a necessity, via self-encrypting devices (SEDs).

- Private cloud access: Service and cloud providers, as well as hyperscale Web properties, have been the primary adopters of long-term storage technologies. For various reasons, such as security policies, compliance, and scale, traditional enterprises will not be able to adopt an external strategy for long-term storage. Therefore, IDC believes there will be significant demand for enterprises to incorporate long-term storage solutions into their existing private cloud offerings. The private cloud opportunity for long-term storage will require the ability to customize long-term storage for various applications and use cases within their environment.
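The bit rot requirement under media characteristics above (search for silent corruption and recover proactively) is commonly met by recording a checksum at write time and re-verifying it during a periodic scrub. The sketch below assumes SHA-256 checksums and a single replica to repair from; the in-memory dictionaries stand in for real media, and the function names are hypothetical rather than any product’s API.

```python
import hashlib
from typing import Dict, List


def checksum(data: bytes) -> str:
    """SHA-256 digest recorded when the object is first written."""
    return hashlib.sha256(data).hexdigest()


def scrub(primary: Dict[str, bytes],
          replica: Dict[str, bytes],
          checksums: Dict[str, str]) -> List[str]:
    """Verify every object on the primary copy and repair it from the replica.

    Returns the keys that were found corrupted and successfully repaired.
    An object whose replica is also corrupted is left untouched here; a real
    system would raise an alert for it.
    """
    repaired = []
    for key, expected in checksums.items():
        if checksum(primary[key]) == expected:
            continue  # primary copy is intact
        if checksum(replica[key]) == expected:
            primary[key] = replica[key]  # overwrite the rotted copy
            repaired.append(key)
    return repaired


if __name__ == "__main__":
    good = b"archive payload"
    primary = {"obj1": b"archive pay1oad", "obj2": good}  # obj1 has rotted
    replica = {"obj1": good, "obj2": good}
    sums = {"obj1": checksum(good), "obj2": checksum(good)}
    print(scrub(primary, replica, sums))
```

In a power-managed system, a scrub like this would need to be scheduled around drive spin-up so that verification does not erode the operational savings described above.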

As the perceived value of data increases, more data will be kept for longer periods of time, placing tremendous burden on IT infrastructures and budgets but also creating new opportunities for data analytics and business advantage. The willingness to sacrifice online storage response times to a range of a few seconds (however, substantially less than offsite tape retrieval) to reduce storage capacity cost dramatically has created a new and emerging long-term storage market. IDC believes the long-term storage market is still developing and taking shape, but the time has come for organizations to consider these solutions. IDC believes that Storiant is well positioned to monetize the current capabilities of its innovative storage platform as it builds a leadership position in the long-term storage market.
