Why Businesses Ought to Cache, Tier and Tune Storage Automatically

By Joe Disher, Overland

This is a press release edited by StorageNewsletter.com on December 24, 2013 at 3:01 pm.

This article, ‘Why growing businesses ought to cache, tier and tune their storage automatically’, was written by Joe Disher, senior director of product marketing, Overland Storage, Inc.

With business growth comes the inevitable challenge of handling an increased demand for data.

The need to improve application and information system responsiveness becomes more apparent as an organisation grows, because growth increases the data appetite of mission-critical applications. Storage caching, tiering and tuning techniques extend and enhance the capabilities of SAS, nearline SAS and SSD storage devices.

These improvements allow administrators to maximise system performance by intelligently and autonomously combining multiple storage device types and leveraging their storage management features. Each of these functions (storage caching, tiering and tuning) has a unique role to play. The combination is powerful, but each capability is valuable in its own right. As they grow, businesses should rely on these technologies to optimise price/performance, improve data availability and keep data safe.

Caching for Performance

Caching allows storage system controllers to use SSD or flash technology to extend their on-board cache with a secondary Level 2 (L2) cache. I/O requests are usually serviced from the primary Level 1 (L1) cache, but if the request rate is too high, the primary cache may be fully consumed. Caching’s goal is to increase I/O performance by raising the cache hit ratio. The storage system identifies frequently accessed data, sometimes called ‘hot data’, using MFU/MRU (Most Frequently Used/Most Recently Used) algorithms. As I/O patterns change over time, the storage system monitors and records them so that it can automatically align storage resources with workflow requirements.
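
The MFU/MRU ranking described above can be sketched in a few lines of Python. This is a minimal illustration under our own assumptions, not the algorithm any particular controller uses: the `HotDataTracker` class and its method names are hypothetical, blocks are identified by arbitrary keys, and the `decay()` step, which halves every count so that stale patterns fade, is just one simple way to model I/O patterns changing over time.

```python
from collections import defaultdict


class HotDataTracker:
    """Hypothetical hot-data tracker: ranks blocks by access count (MFU),
    breaking ties in favour of the most recently used block (MRU)."""

    def __init__(self):
        self.counts = defaultdict(int)  # block id -> number of accesses
        self.last_seen = {}             # block id -> logical time of last access
        self.clock = 0                  # logical clock, advanced on every I/O

    def record_access(self, block):
        self.clock += 1
        self.counts[block] += 1
        self.last_seen[block] = self.clock

    def hottest(self, n):
        """Return up to n block ids, most frequently used first."""
        ranked = sorted(
            self.counts,
            key=lambda b: (self.counts[b], self.last_seen[b]),
            reverse=True,
        )
        return ranked[:n]

    def decay(self):
        """Assumed ageing step: halve all counts and forget blocks that reach zero."""
        for block in list(self.counts):
            self.counts[block] //= 2
            if self.counts[block] == 0:
                del self.counts[block]
                del self.last_seen[block]
```

Calling `decay()` periodically lets blocks that were hot yesterday drop out of the ranking if they are no longer being accessed.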

Hot data may be small (e.g. 3-5% of total system storage capacity) yet account for as much as 50-60% of all I/O activity. This concentration of I/O is why L2 cache is so valuable: with a secondary cache, a very large proportion of I/O can be serviced from SSD or flash, greatly reducing access time compared with traditional spinning HDDs. If the storage system determines that using the extended cache would maximise I/O performance, it promotes data into L2 cache without administrator intervention, and it keeps its view of I/O demand up to date by continuously monitoring I/O patterns.
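
The payoff from serving hot data out of L2 cache can be estimated with the standard weighted-average formula t_avg = f × t_L2 + (1 - f) × t_disk, where f is the fraction of I/O served from cache. The sketch below uses illustrative latencies of 0.2 ms for SSD and 8 ms for a spinning HDD; these figures are assumptions, not measurements, and the model ignores L1 hits and queuing effects.

```python
def mean_access_time(cached_fraction, cache_latency_ms, disk_latency_ms):
    """Average latency when cached_fraction of I/Os hit the L2 cache and the
    rest go to spinning disk (L1 hits and queuing are ignored for simplicity)."""
    if not 0.0 <= cached_fraction <= 1.0:
        raise ValueError("cached_fraction must be between 0 and 1")
    return cached_fraction * cache_latency_ms + (1.0 - cached_fraction) * disk_latency_ms


# Hypothetical latencies: 0.2 ms for SSD/flash, 8 ms for HDD.
all_disk = mean_access_time(0.0, 0.2, 8.0)
with_l2 = mean_access_time(0.55, 0.2, 8.0)
print(f"{all_disk:.2f} ms -> {with_l2:.2f} ms")
```

With f = 0.55, the midpoint of the 50-60% range quoted above, mean access time falls from 8.00 ms to about 3.71 ms under these assumed latencies, even though only a few percent of capacity sits on flash.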

Advanced caching functions bring significant benefits to dynamic environments, such as virtualised servers, and to applications that require low latency, because their I/O patterns can change dramatically even within a single workday. Depending on the application, caching has been shown to increase I/O performance by up to 800%.

Caching Is Not Easy

Effective caching requires evaluating multiple, intersecting factors. Consider, for example, I/O operations per second (IO/s). To work effectively, a caching feature should automatically measure every transaction and the IO/s it generates. Every business knows that maintaining performance matters for customer satisfaction: in today’s ‘always on’ connected world, providing reliable service and meeting expected service levels is everything. Keeping performance high, even in the face of congestion or a hardware failure, is key.
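
Continuous IO/s measurement is commonly done over a sliding time window. The following is a minimal sketch, assuming a one-second window and caller-supplied timestamps in seconds (in practice these might come from `time.monotonic()`); the `IopsMeter` name is hypothetical and says nothing about how any particular product measures transactions.

```python
from collections import deque


class IopsMeter:
    """Hypothetical sliding-window counter of I/O operations per second."""

    def __init__(self, window_seconds=1.0):
        self.window = window_seconds
        self.timestamps = deque()  # completion times of recent I/Os, oldest first

    def record(self, now):
        """Register one completed I/O at time `now` (seconds)."""
        self.timestamps.append(now)
        self._expire(now)

    def rate(self, now):
        """IO/s observed over the window ending at `now`."""
        self._expire(now)
        return len(self.timestamps) / self.window

    def _expire(self, now):
        # Drop I/Os that completed before the start of the current window.
        while self.timestamps and self.timestamps[0] <= now - self.window:
            self.timestamps.popleft()
```

A deque keeps both appending new I/Os and expiring old ones cheap, so the meter can run on every transaction as the paragraph above suggests.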

Automatic caching can also help maintain good performance during hardware failures. Usually an enterprise storage system includes two controllers in active/active mode. If one controller fails, the other takes over. The surviving controller must cope with twice the traffic until the failed hardware is replaced, and in the absence of some form of automatic caching, users will likely experience performance degradation. In a storage solution containing a secondary L2 cache, performance degradation is minimised and up to 80% of I/O performance can be maintained.

How Caching Works: Whenever the host application requests a read, the controller first attempts to retrieve the data from its primary cache memory (L1 cache). If the requested data is there, the request is serviced from L1 cache. If not, the controller checks the L2 cache map table to see whether the requested data has already been promoted to the L2 cache on SSD or flash, and if so serves it from there. If the data has not been promoted, the controller services the read request directly from disk storage. Thereafter, the data remains cached in case further read requests are made.
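
The read path just described can be modelled as below. This is a simplified sketch with hypothetical names: plain dictionaries stand in for controller memory, the SSD/flash L2 cache and disk. It assumes that data read from L2 or disk is placed in L1, that L1 evicts the least recently used block first, and that promotion to L2 is a separate call, since the article describes promotion as driven by hot-data analysis rather than by individual reads.

```python
from collections import OrderedDict


class TwoLevelReadCache:
    """Hypothetical model of the controller read path: L1, then L2 map table, then disk."""

    def __init__(self, disk, l1_capacity):
        self.disk = disk                # dict: block id -> data (stands in for HDDs)
        self.l1 = OrderedDict()         # controller memory, kept in LRU order (assumed)
        self.l1_capacity = l1_capacity
        self.l2_map = {}                # L2 map table: block id -> data on SSD/flash

    def promote_to_l2(self, block):
        # Promotion is triggered by hot-data analysis, not by the read path.
        self.l2_map[block] = self.disk[block]

    def read(self, block):
        if block in self.l1:                     # 1. L1 hit
            self.l1.move_to_end(block)
            return self.l1[block], "L1"
        if block in self.l2_map:                 # 2. L2 hit via the map table
            data, source = self.l2_map[block], "L2"
        else:                                    # 3. miss: read from disk
            data, source = self.disk[block], "disk"
        self._fill_l1(block, data)               # keep it cached for later reads
        return data, source

    def _fill_l1(self, block, data):
        self.l1[block] = data
        if len(self.l1) > self.l1_capacity:
            self.l1.popitem(last=False)          # evict the least recently used block
```

For example, with an L1 capacity of one block and block 2 promoted, reading block 1 twice reports "disk" then "L1"; reading block 2 reports "L2" and evicts block 1, so the next read of block 1 falls through to disk again.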

Tiering for Efficiency

In today’s enterprise it is critical for data to be properly provisioned to maximise performance and optimise price/performance. Tiered storage enables data assets to be allocated optimally, which requires simultaneously accounting for efficiency, I/O performance, data protection and uptime. Advanced storage systems currently on the market support simultaneous use of high-performance SAS, nearline SAS and SSDs. They can automatically provision storage and ensure the provisioning is consistent with corporate policies. Storage tiering complements caching: unlike caching, which dynamically redirects read and write requests, tiering uses solid-state memory as permanent storage.

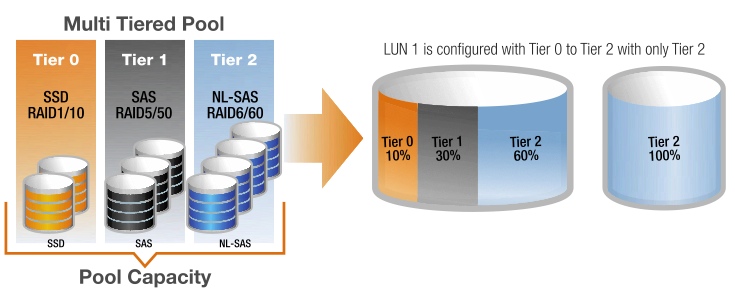

The following diagram demonstrates how a pool of storage can be configured with multiple storage types or with just a single storage device type.

In this diagram, tier 0 is synonymous with SSD, tier 1 with SAS HDDs and tier 2 with nearline SAS HDDs:

Automatic tiering allows storage systems to analyse traffic in real time and relocate data using policy-based performance thresholds, without any downtime. Data is migrated autonomously to the devices that best maximise access performance while maintaining the efficiency of the entire storage system.
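
Policy-based tiering can be expressed as a mapping from each unit of data’s observed IO/s to a target tier, using the tier numbering from the diagram (0 = SSD, 1 = SAS, 2 = nearline SAS). The sketch below is illustrative only: the threshold values, the notion of an ‘extent’ as the unit that moves, and the function names are all assumptions, and a real system would also respect tier capacity and carry out the moves in the background so that no downtime is needed.

```python
TIERS = {0: "SSD", 1: "SAS HDD", 2: "nearline SAS HDD"}

# Hypothetical policy: minimum IO/s an extent must sustain to qualify for each tier.
DEFAULT_THRESHOLDS = {0: 500.0, 1: 50.0}


def target_tier(iops, thresholds=DEFAULT_THRESHOLDS):
    """Pick the fastest tier whose threshold the extent's IO/s meets."""
    for tier in sorted(thresholds):
        if iops >= thresholds[tier]:
            return tier
    return max(TIERS)  # everything else lands on the capacity tier


def plan_migrations(extent_iops, current_tier, thresholds=DEFAULT_THRESHOLDS):
    """Return (extent, from_tier, to_tier) moves needed to match the policy."""
    moves = []
    for extent, iops in extent_iops.items():
        wanted = target_tier(iops, thresholds)
        if wanted != current_tier[extent]:
            moves.append((extent, current_tier[extent], wanted))
    return moves
```

Keeping the thresholds in a single policy table is what lets placement stay consistent with corporate policy: changing the policy changes the plan, not the code.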

Tuning for Total Optimisation

Adding an automatic tuning feature to the mix completes the picture. No administrator wants to tune a storage system manually if it can be avoided, and this is especially true in a growing business, where demands on the system increase rapidly. Automation has made tuning basic storage systems nearly foolproof. Sophisticated storage management software ensures that a business’s devices get the IO/s they require while maintaining system performance, even in the event of a hardware failure. Automatic tuning frees staff to focus on higher-level challenges such as system design and evaluating future requirements.

Automatic storage tuning is based on dynamic performance analysis, which monitors I/O trends and detects bottlenecks. It identifies trouble areas and lets administrators automatically optimise all data resources by leveraging different storage tiers, each with its own performance and availability characteristics. Tuning enables the storage system to resolve bottlenecks while remaining online, improving access performance and optimising data location.
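
Bottleneck detection, the first step of automatic tuning, can be as simple as comparing each resource’s recent average latency against a limit. This minimal sketch assumes per-pool latency samples in milliseconds and a single fixed limit; the function name, the pool names in the example and the choice of average latency as the signal are hypothetical, and a production tuner would likely weigh longer-term trends as well.

```python
from statistics import mean


def find_bottlenecks(latency_samples_ms, limit_ms):
    """Return resources whose average latency exceeds limit_ms, worst first.

    latency_samples_ms maps a resource name (e.g. a storage pool) to a list
    of recent latency samples; resources with no samples are skipped."""
    averages = {
        name: mean(samples)
        for name, samples in latency_samples_ms.items()
        if samples
    }
    slow = [name for name, avg in averages.items() if avg > limit_ms]
    return sorted(slow, key=lambda name: averages[name], reverse=True)


samples = {
    "pool-ssd": [0.3, 0.4],
    "pool-sas": [9.0, 11.0, 13.0],
    "pool-nl": [25.0, 35.0],
}
print(find_bottlenecks(samples, limit_ms=10.0))
```

Here the nearline pool (30 ms average) is reported ahead of the SAS pool (11 ms). A tuner could then act on the list worst first, for example by relocating data away from the most overloaded pool while the system stays online.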

Conclusion

Optimising price/performance, ensuring data system responsiveness, optimising access to hot data and reducing performance degradation in the event of hardware failures are mission-critical tasks for storage managers in any organisation. Storage caching, tiering and tuning are three interrelated storage management functions that administrators can use to achieve the best results for their business operations. Tiering allows more efficient use of storage resources, while caching relieves I/O bottlenecks, thereby improving performance. Combined with automated tuning, caching and tiering let the system monitor data utilisation and optimise storage tiers without manual intervention. The application demands of databases, virtualised servers and VDI continue to drive the need for adaptable, dynamic storage architecture.
