HGST Research Showcased Persistent Memory Fabric With Mellanox

Low-power DRAM alternative with greater scalability for in-memory compute applications

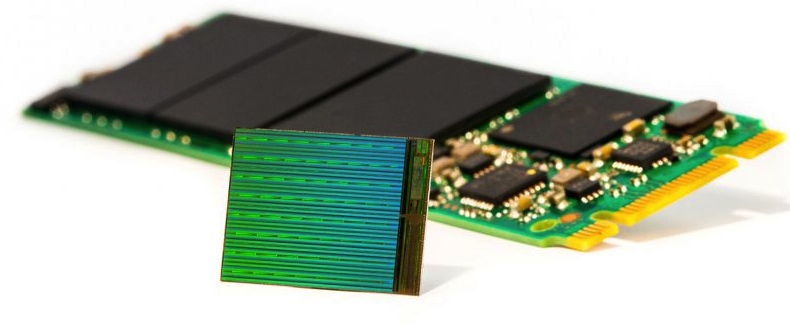

This is a Press Release edited by StorageNewsletter.com on August 17, 2015 at 2:54 pm

Building upon last year’s record-breaking three million I/O per second Phase Change Memory (PCM) demonstration, HGST Inc., a Western Digital company, in collaboration with Mellanox Technologies Ltd., showcased a PCM-based, RDMA-enabled in-memory compute cluster architecture that delivers DRAM-like performance at a lower cost of ownership with greater scalability.

In-memory computing is one of today’s hottest data center trends. Gartner Group projects that software revenue alone for this market will exceed $9 billion by the end of 2018. In-memory computing enables organizations to gain business value from real-time insights by offering faster performance and greater scalability than legacy architectures.

While modern data center applications can benefit from more main memory, today’s DRAM approaches are expensive to scale because of that memory’s volatility: DRAM stores data in leaky capacitors, and thus needs to be rewritten many times per second to stave off data loss. This refresh power consumption can be as much as 20-30% of the total server energy. Emerging non-volatile memory technologies, such as PCM, do not have this refresh power demand, thereby enabling far greater scalability of main memory than DRAM.

HGST’s persistent memory fabric technology delivers reliable, scalable, low-power memory with DRAM-like performance, and requires neither BIOS modification nor rewriting of applications. Memory mapping of remote PCM using the Remote Direct Memory Access (RDMA) protocol over networking infrastructures, such as Ethernet or InfiniBand (IB), enables wide-scale deployment of in-memory computing. This network-based approach allows applications to harness the non-volatile PCM across multiple computers to scale out as needed.

The HGST/Mellanox demonstration achieved random access latency of less than 2μs for 512B reads, and throughput exceeding 3.5GB/s for 2KB block sizes using RDMA over IB.
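
To put the quoted figures in perspective, the following back-of-envelope sketch converts them into operations per second. It is illustrative arithmetic only, not a result from the demonstration. The unit conventions (decimal GB, binary KB), the assumption of a single outstanding request for the latency case, and the function names are all assumptions that do not appear in the press release.

```python
# Back-of-envelope arithmetic on the quoted figures.
# Assumptions (not stated in the press release):
#   - 1 GB = 10**9 bytes, 1 KB = 1024 bytes
#   - the latency case issues one request at a time (queue depth 1)

def ops_per_second(throughput_bytes_per_s: float, block_bytes: int) -> float:
    """Transfers per second needed to sustain a throughput at a block size."""
    return throughput_bytes_per_s / block_bytes

def serial_ops_per_second(latency_s: float) -> float:
    """Requests per second when each request waits for the previous one."""
    return 1.0 / latency_s

# "throughput exceeding 3.5GB/s for 2KB block sizes" (lower bound)
large_block_ops = ops_per_second(3.5e9, 2 * 1024)

# "latency of less than 2 microseconds for 512B reads" (lower bound on rate)
small_read_ops = serial_ops_per_second(2e-6)
small_read_bandwidth = small_read_ops * 512  # bytes per second

print(f"2KB transfers per second:   {large_block_ops:,.0f}")
print(f"Serial 512B reads per second: {small_read_ops:,.0f}")
print(f"Serial 512B read bandwidth:   {small_read_bandwidth / 1e6:.0f} MB/s")
```

Under these assumptions, 3.5GB/s at 2KB corresponds to roughly 1.7 million transfers per second, while a single stream of 512B reads at 2μs each yields about 500,000 reads per second. Because the release states the latency as an upper bound and the throughput as a lower bound, both derived rates are floors rather than measured values.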

“DRAM is expensive and consumes significant power, but today’s alternatives lack sufficient density and are too slow to be a viable replacement,” said Steve Campbell, CTO, HGST. “Last year our Research arm demonstrated PCM as a viable DRAM performance alternative at a new price and capacity tier bridging main memory and persistent storage. To scale out this level of performance across the data center requires further innovation. Our work with Mellanox proves that non-volatile main memory can be mapped across a network with latencies that fit inside the performance envelope of in-memory compute applications.”

“Mellanox is excited to be working with HGST to drive persistent memory fabrics,” said Kevin Deierling, VP, marketing, Mellanox. “To truly shake up the economics of the in-memory compute ecosystem will require a combination of networking and storage working together transparently to minimize latency and maximize scalability. With this demonstration, we were able to leverage RDMA over IB to achieve record-breaking round-trip latencies under two microseconds. In the future, our goal is to support PCM access using both IB and RDMA over Converged Ethernet (RoCE) to increase the scalability and lower the cost of in-memory applications.”

“Taking full advantage of the extremely low latency of PCM across a network has been a grand challenge, seemingly requiring entirely new processor and network architectures and rewriting of the application software,” said Dr. Zvonimir Bandic, manager, storage architecture, HGST research. “Our big breakthrough came when we applied the PCIe Peer-to-Peer technology, inspired by supercomputers using general purpose GPUs, to create this low latency storage fabric using commodity server hardware. This demonstration is another key step enabling seamless adoption of emerging non-volatile memories into the data center.”
