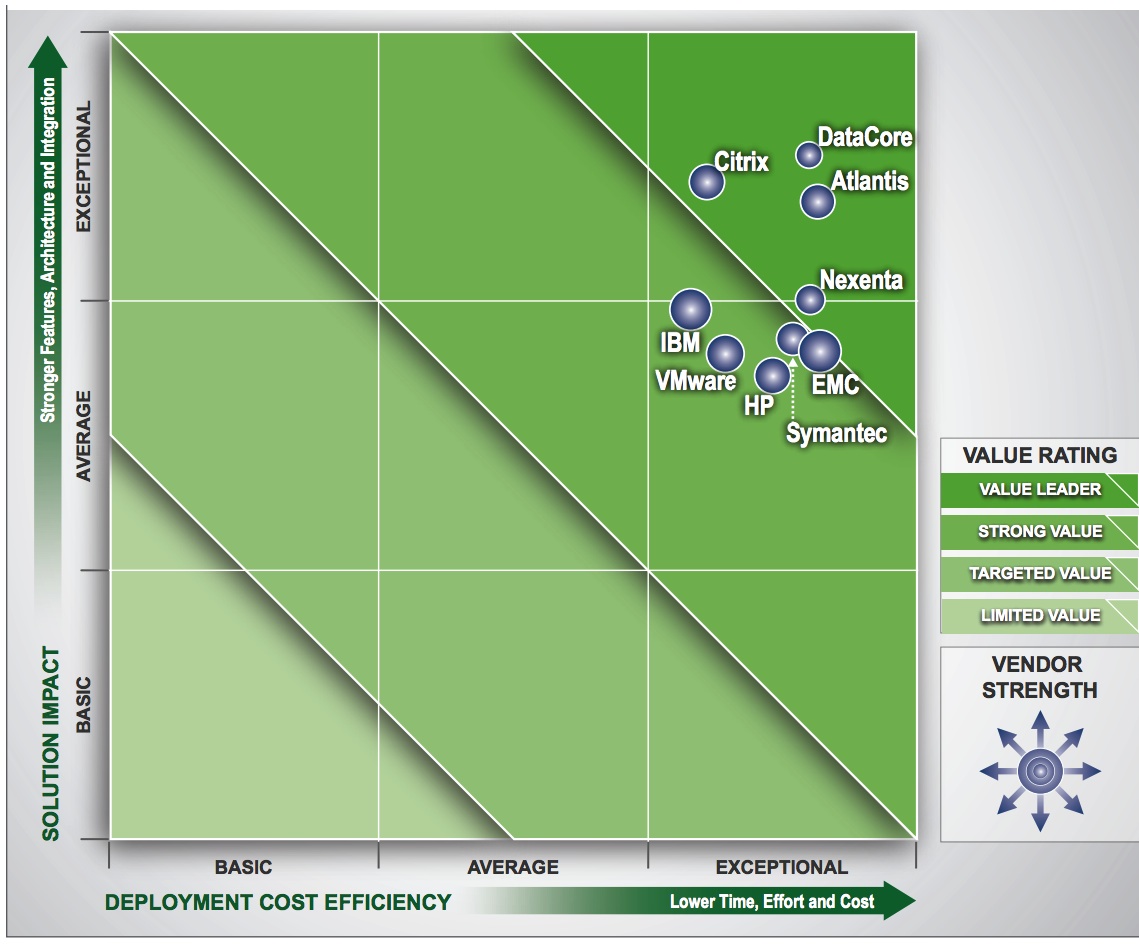

DataCore and Then Atlantis, Citrix and Nexenta Winners in Software-Defined Storage

2Q15 Radar report by Enterprise Management Associates

This is a Press Release edited by StorageNewsletter.com on November 4, 2015 at 4:32 pm

This Enterprise Management Associates, Inc. Radar report provides a holistic view of products in a particular market from the perspective of an IT user.

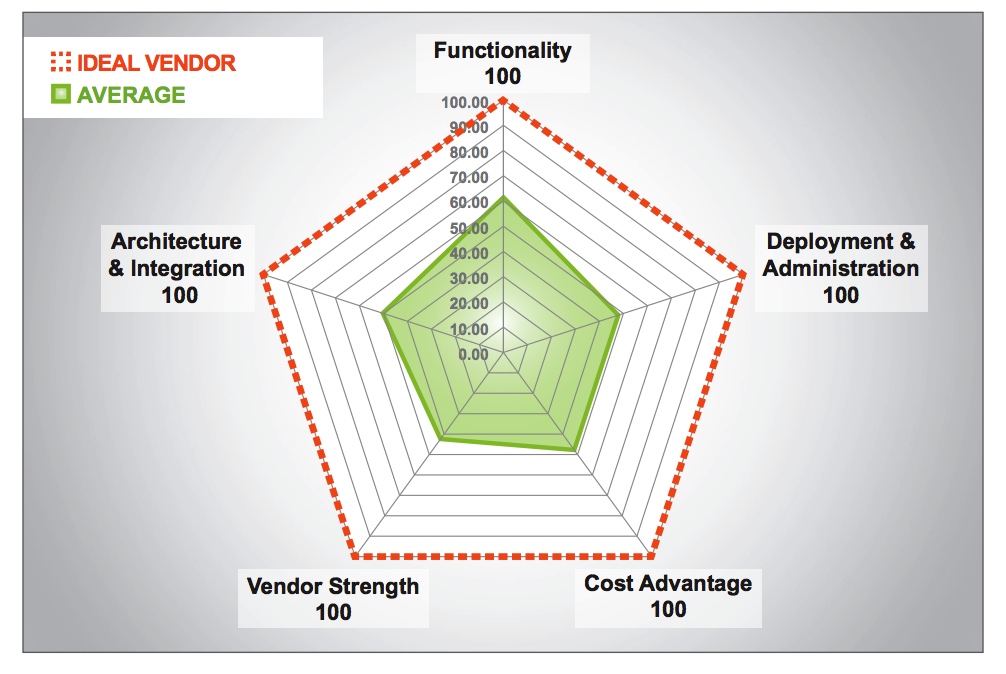

The report utilizes key product indicators (KPIs) to quantify a product in five focus areas. These areas are Functionality, Architecture & Integration, Deployment & Administration, Cost Advantage, and Vendor Strength. These areas are then contrasted with solutions from other vendors to provide relative comparisons.

Executive Summary

Data creation is growing at unprecedented rates with the amount of information stored doubling every year. Data is now more active throughout the lifecycle, with analytics driving competitive advantage in sales and marketing and compliance requirements needing to be met to satisfy legal obligations as well as governance. As business needs change, driven by analytics and real time transactional trends, there is the need to provision storage capacity and services more quickly. The diversity and volume of data are placing extreme operational and capital demands on an already overburdened IT. Software-defined storage (SDS) is a new technology that targets meeting these new requirements while controlling costs.

SDS aims at making storage application-aware, enabling server administrators, application managers, and developers to provision storage in a policy-driven, automated self-service manner. Like server and network virtualization, storage virtualization abstracts the physical infrastructure into logical resources by utilizing intelligent software. Where SDS extends beyond basic storage virtualization is its ability to deliver a consistent set of services across all storage by utilizing the underlying storage infrastructure. This results in a homogeneous storage infrastructure that can be quickly and flexibly provisioned and more easily managed.

The landscape is changing for how data is being stored, and a preferred SDS solution should span the entire enterprise, enabling IT organizations to exploit new capabilities and technologies as well as continue to leverage legacy network-based storage devices. This EMA Radar report focuses on the core capabilities needed to deliver SDS throughout the entire enterprise. There is much diversity in storage solutions throughout the enterprise. Storage solutions, even those from the same vendor, can have unique hardware, operating systems, connectivity, services, application program interfaces, and data types (block, file, and object). An enterprise-wide approach is preferred because one cannot get the full benefit of software-defined storage unless it is implemented on all storage in the enterprise.

SDS Landscape

A majority of the data being stored still consists of traditional structured data created by contemporary applications such as CRM and ERP; however, there is significant growth in unstructured data created by applications that are driven by big data, social media, and mobile devices. Data is now more active throughout the lifecycle, with analytics driving competitive advantage in sales and marketing and compliance requirements needing to be met to satisfy legal obligations as well as governance. As business needs change, driven by analytics and real time transactional trends, there is the need to provision storage capacity more quickly. Finally, service level requirements have increased, driven by the user’s need to access data quickly and reliably wherever it is located, 24 hours a day, 365 days a year. The diversity and volume of data are placing extreme operational and capital demands on an already overburdened IT infrastructure.

With the growth of the cloud, and the self-service and elastic growth capabilities it provides, internal business organizations, departments, and users are now expecting similar capabilities when it comes to internal IT consumption. Virtualization has successfully transformed servers and networks into on-demand and customizable IT resources. In addition to faster and more flexible provisioning, server and network virtualization have reduced capital and operational expense. The adoption rate of storage virtualization has been slower, due largely to the inherent heterogeneity of storage. SDS is a relatively new technology that targets meeting these new requirements while controlling escalating storage costs. It aims at making storage application-aware, enabling server administrators, application managers, and developers to provision storage in a policy-driven, automated self-service manner.

Like server and network virtualization, storage virtualization abstracts the physical infrastructure into logical resources by utilizing intelligent software. Where SDS extends beyond basic storage virtualization is its ability to deliver a consistent set of services across all storage by utilizing the underlying storage infrastructure. SDS can deliver a homogeneous storage infrastructure that can be quickly and more efficiently provisioned and more easily managed. This EMA Radar report focuses on the core capabilities needed to deliver SDS throughout the entire enterprise. There is much diversity in storage solutions within the enterprise. Storage solutions, even those from the same vendor, can have unique hardware, operating systems, connectivity, services, application program interfaces, and data types (block, file, and object). Additional contributors to storage diversity include cloud storage, flash technology, and ‘shared nothing’ architectures. Without addressing the entire range of storage solutions, individual silos of storage will still exist, limiting utilization and complicating administration.

An enterprise-wide approach is preferred because one cannot get the full benefit of software-defined storage unless it is implemented on all storage in the enterprise. The advantage of an enterprise-wide approach is the ability to leverage existing storage, which is too costly to replace and would require a substantial operational effort to migrate all of the data. There are a number of solutions that market themselves as SDS, although they fall short of enterprise-wide coverage and/or do not provide a full set of device-independent services. Some of these solutions do indeed use SDS principles, but are limited in the scope of storage they address. As an example, a pure hyper-converged solution does indeed abstract storage and can deliver a set of consistent and hardware-independent services. But in most cases these solutions cannot affect storage outside of the hyper-converged solution. This, in effect, creates another island of storage – the very thing that an enterprise-wide SDS solution is architected to correct.

This is not to say that targeted appliances do not provide significant customer value. Solutions provided by vendors within this report such as the Citrix WorkSpacePod for VDI, or the HP Converged System 200-HC hyper-converged solution enable IT organizations to quickly deploy solutions for applications. It is just that the proper expectation should be realized when identifying the benefit to the entire storage infrastructure.

Assessing the SDS Market

Model Buyer

The typical large enterprise utilizes a number of storage technologies to meet the needs of specific application or workload requirements. A large increase in network-based storage coincided with the growth of server virtualization, in large part because of the benefit and flexibility of using shared storage. However, as workloads diversified, multiple storage systems were deployed for unique applications, such as SQL or web-based engines, which benefited from the specialized hardware and software capabilities. The net result of using specialized storage technologies is that a plethora of heterogeneous storage types were deployed, typically across multiple vendors. These systems have a great deal of intelligence within the storage system itself. This made for high performing systems that are able to meet the needs of individual applications. The problem is that these storage systems each have their own proprietary access methods, services, and management tools, making it difficult to deploy, provision, and manage them and to execute storage services. Sharing resources or management across the different storage types is not possible, so islands of orphaned storage proliferated across the enterprise. Additionally, all the proprietary specialization makes these systems very expensive.

Prospective users of SDS have a number of issues they are trying to solve.

- First, there is the issue of operational complexity. As mentioned earlier, each storage system, even different storage systems from the same vendor, deploys unique operating systems, functional capabilities, and advanced services. Each system requires a number of tools to master and utilize, with no ability to overlap the skill set across the multiple heterogeneous solutions. By using a single pane of glass to manage and provision storage, there is a large reduction in the complexity of managing enterprise-wide storage infrastructure.

- Second, there is the desire to continue to utilize legacy network-based hardware. Although expensive and proprietary, this hardware must continue to be leveraged, chiefly because of the need to meet specific application requirements. The IT organization may have plans to replace legacy applications, or to port the older applications to less expensive hardware or to solutions that offer greater functional capability, but for now either option has taken a back seat to more pressing matters. The legacy hardware must continue to be used, yet IT organizations are looking for ways to reduce the administration and provisioning effort while increasing overall utilization.

Although legacy equipment must continue to be utilized, newer technologies such as flash are solving performance problems, especially for high transactional applications and applications such as VDI. Flash is still an expensive solution when compared with capacity spinning disk, so its use for now must be judicious. Whether it is flash or some other new storage technology, it will require the movement of data across networks from and to unique systems.

The use of commodity storage can also reduce costs. Commodity storage can be directly attached to servers without proprietary software and can be procured at a fraction of the cost of network-based storage arrays. These direct-attached solutions avoid the high cost of network switches, infrastructure, and proprietary storage controllers.

Scope of the Research

In all EMA Radar reports, EMA evaluates solutions based on five key axes: Deployment and Administration, Cost Advantage, Functionality, Architecture and Integration, and Vendor Strength. The last category, perhaps the only one that is not self-explanatory, is focused on the market and industry presence, vision, and financial stability of the vendor. For the 2015 EMA Radar for Software-defined Storage, particular emphasis has been placed on deep and thorough analysis of Functionality, Cost Advantage, and Deployment and Administration. Architecture and Integration and Vendor Strength were also fully addressed, but on a less rigorous basis. EMA Radar reports will generate profiles for individual vendors based on numerical scores along five axes. An ‘ideal’ score would look like a pentagon, as in the figure below. Actual profile scores are contrasted against the average across all vendors for each of the five categories.

Beneath each of the sub-categories were multiple questions and points of consideration. At the lowest level, scoring variables were collected and evaluated for each vendor solution reviewed. The three major axes for final presentation of vendor relative results are resource efficiency, solution impact, and vendor strength.

Characteristics of a Preferred Solution

SDS is a new technology that targets meeting these new requirements while controlling costs. SDS aims at making storage application-aware, enabling server administrators, application managers, and developers to provision storage in a policy-driven, automated self-service manner. Like server and network virtualization, storage virtualization abstracts the physical infrastructure into logical resources by utilizing intelligent software. Where SDS extends beyond basic storage virtualization is its ability to deliver a consistent set of services across all storage by utilizing the underlying storage infrastructure. This results in a homogeneous storage infrastructure that can be quickly and flexibly provisioned and more easily managed. The landscape is changing for how the enterprise is storing data, and a preferred solution should span the entire landscape, enabling IT organizations to exploit new capabilities and technologies as well as continue to leverage legacy network-based storage devices.

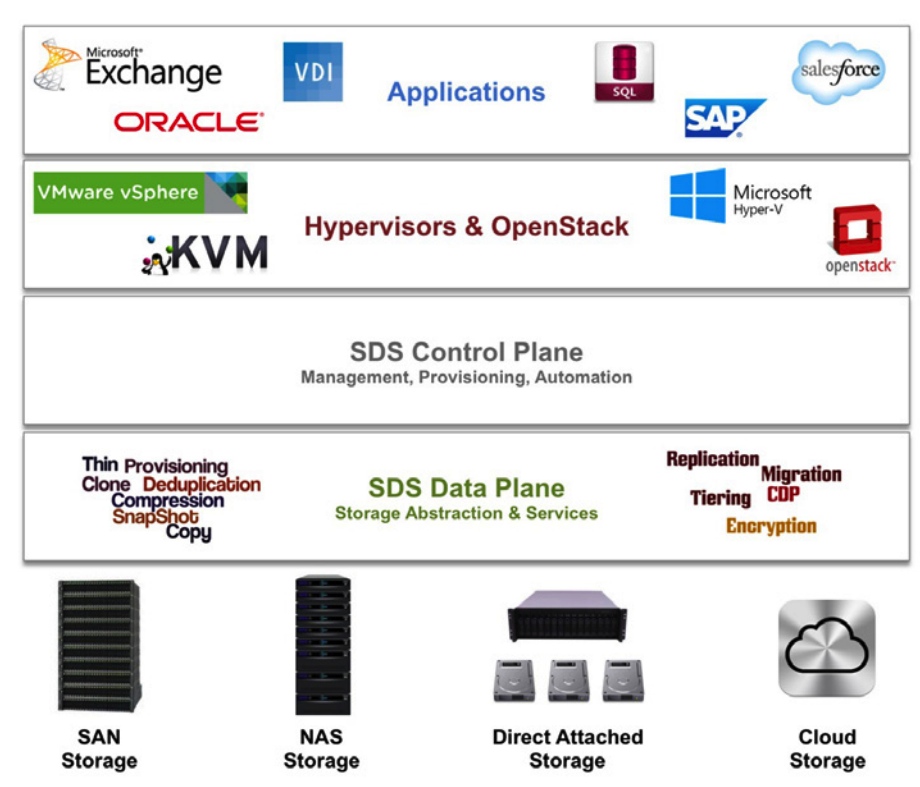

SDS utilizes software to deliver policy-based provisioning of capacity and services along with the management of storage independent of the hardware. There are two unique elements to a software-defined storage solution: the data plane and control plane. The data plane is the storage infrastructure that is abstracted and aggregated together to be utilized as a single storage infrastructure. The storage can be put into tiers depending on capabilities, such as performance or availability. As storage and services are provisioned, these tiers are then consumed based on the policies of the data or applications. Storage services include capabilities such as deduplication, thin provisioning, snapshot copies, replication, encryption, and continuous data protection. Before software-defined storage, the data plane existed on the individual storage solutions, each with its own hardware, operating systems, connectivity, services, application program interfaces, and data types (block, file, and object). This created the situation of islands of storage that could not be shared and needed different management interfaces.

The control plane acts as the link between the data plane and applications, providing provisioning, automation, and storage management to the abstracted storage resources within the data plane. It provides the homogeneous management of all storage, greatly simplifying the administrative effort for managing storage. Automation of tasks such as provisioning of capacity or QOS further reduces administrative effort and increases the quality of service by responding more quickly to application requirements. As software-defined storage further matures, this automation within the control plane provides the greatest opportunity for improvement and benefit.
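The split between the two planes can be illustrated with a minimal sketch. It is not taken from the EMA report or from any vendor's product: the `Tier`, `Policy`, and `ControlPlane` names, the tier names, and all latency, capacity, and cost figures are hypothetical values chosen only to show how a control plane might match a policy to a tier of abstracted storage.

```python
from dataclasses import dataclass, field


@dataclass
class Tier:
    """A pool of abstracted capacity in the data plane (hypothetical model)."""
    name: str
    max_latency_ms: float   # worst-case latency this tier is assumed to deliver
    free_gb: int            # capacity still available for provisioning
    cost_per_gb: float      # illustrative relative cost, not a real price


@dataclass
class Policy:
    """Requirements an application attaches to a provisioning request."""
    max_latency_ms: float
    capacity_gb: int


@dataclass
class ControlPlane:
    tiers: list[Tier] = field(default_factory=list)

    def provision(self, policy: Policy) -> str:
        """Pick the cheapest tier that satisfies the policy and reserve capacity."""
        candidates = [
            t for t in self.tiers
            if t.max_latency_ms <= policy.max_latency_ms
            and t.free_gb >= policy.capacity_gb
        ]
        if not candidates:
            raise RuntimeError("no tier satisfies the policy")
        chosen = min(candidates, key=lambda t: t.cost_per_gb)
        chosen.free_gb -= policy.capacity_gb
        return chosen.name


# Hypothetical data plane: flash, a legacy network array, and commodity disk.
cp = ControlPlane([
    Tier("flash", max_latency_ms=1.0, free_gb=500, cost_per_gb=1.00),
    Tier("array", max_latency_ms=10.0, free_gb=5000, cost_per_gb=0.30),
    Tier("commodity", max_latency_ms=30.0, free_gb=20000, cost_per_gb=0.05),
])

vdi_tier = cp.provision(Policy(max_latency_ms=2.0, capacity_gb=200))
archive_tier = cp.provision(Policy(max_latency_ms=50.0, capacity_gb=4000))
print(vdi_tier, archive_tier)
```

In this sketch the latency-sensitive request lands on the flash tier, while the large, latency-tolerant request is placed on the cheapest tier with enough free capacity. The requester states only what it needs and never names a device.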

There are three primary benefits that software-defined storage provides to the enterprise:

- Reduce Storage Management – Using a single pane of glass within the control plane greatly simplifies the administrative effort. No longer must vendor-specific storage provisioning, resource management, and services delivery be mastered and executed. In addition to less effort, the risk for errors is greatly reduced.

- Lower Costs – The first item that often comes to mind relative to cost reduction with software-defined storage is the ability to use commodity hardware. This is indeed true as commodity-based systems do not need the proprietary hardware and software that a traditional storage array has. In addition, costly storage services can be avoided since these services will be delivered out of the data plane of the SDS solution. Another cost factor is the ability to leverage legacy network-based storage systems, eliminating the need to purchase all new equipment to take advantage of new capabilities, such as advanced services. The abstraction of different types of storage also can increase the overall storage utilization rate.

- Flexibility – SDS enables IT to take advantage of the latest technical innovations while continuing to utilize existing hardware. A best-of-breed storage solution can be selected regardless of existing or future hardware choices. Solutions can even be built from off-the-shelf commodity components. Flash can be added and exploited throughout the infrastructure by taking advantage of the tiering available in software-defined storage. The separation of hardware from software in storage creates scalable and flexible environments that can quickly adapt to changing business or technical needs.

Evaluation Criteria

Storage Services

The primary difference between storage virtualization and SDS is the ability to abstract services as well as capacity and performance. Services have traditionally been proprietary in nature, with each storage solution having its own unique delivery and process for executing the services. Through abstraction, a common set of services are available and executed across all storage, regardless of its type or vendor. This approach leads to a consistent methodology and results, increasing the value and dependability of the service.

Services that are considered when quantifying the value of this section include the following.

- Deduplication – This data reduction capability got its start in persistent data, but is now used in more mainstream applications with good results. Deduplication takes advantage of the fact that there is redundancy in data across a block or file. Deduplication stores only one copy of the redundant data and uses software to reassemble that data when it is being read. Not only does deduplication reduce the amount of capacity required for a given amount of data, it also reduces the network bandwidth consumed for local, campus, or geographical storage. Results are data dependent, with applications such as VDI and backup often experiencing a reduction rate of ten to one or more.

- Compression – Another form of data reduction, compression reduces bits by identifying and eliminating statistical redundancy. Compression can be used alone or in combination with deduplication to achieve greater data reduction.

- Snapshot Copy – This is the process of copying only the data that has changed since the last full backup or snapshot copy. Snapshot technology enables more frequent point-in-time copies since only the changed data is stored. One metric that varies from solution to solution is the number of snapshots that can be taken on a given volume.

- Thin Provisioning – This is the ability to overprovision, allocating to an application or host more storage than is physically available. Although it does not reduce the amount of data that is stored, it consumes only the capacity that has actually had data written to it.

- QoS – This is the ability to reassign resources, typically performance related, to ensure a given defined performance policy is met.

- Auto-tiering – This is the process of moving data from one storage type to another based on data access rate, age of data, or other user-identified policies.

- Replication – This is the process of making a copy of data, typically offsite, to ensure business continuance in the event data can no longer be accessed at the primary site. This can be due to the loss of equipment or infrastructure at the primary site. Synchronous replication does not signal to the host that the operation is completed until the redundant copy is verified as stored. Asynchronous replication sends a copy of the data without waiting for it to be stored onto the replicated target before signaling to the host that the write has completed. Because of this, there is always some exposure to data not being confirmed to the secondary site should an event preclude data from being accessible from the primary site.

- Data Encryption – This is the process of protecting data by making it unreadable without the use of a secret key or password. There are two forms of encryption and vendors may use one or both methods. Encryption in flight is the process of encrypting data when it leaves a storage system and is transferred to another storage system or server over a network. The data is then decrypted when stored. Data at rest encryption refers to the process of requiring a key or password to read data that is stored within a particular system. For instance, most tape libraries and their associated drives will require an encryption key in order to read data from a tape. This reduces the risk of data falling into the wrong hands should the tape be misplaced or stolen.

- Load Balancing – This is the process of shifting the resources within a storage system to more equally meet the performance requirements of the entire system.
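As an illustration of the first service in the list, block-level deduplication can be sketched in a few lines. This is a simplified example under stated assumptions and does not represent how any vendor in the report implements it: the `DedupStore` class, the fixed 4,096-byte block size, the use of SHA-256 digests as block keys, and the ten identical "desktop images" are all hypothetical choices made for clarity.

```python
import hashlib


class DedupStore:
    """Stores each unique fixed-size block once, keyed by its SHA-256 digest."""

    def __init__(self, block_size: int = 4096):
        self.block_size = block_size
        self.blocks: dict[str, bytes] = {}   # digest -> block contents

    def write(self, data: bytes) -> list[str]:
        """Split data into blocks, store unseen blocks, return the recipe."""
        recipe = []
        for i in range(0, len(data), self.block_size):
            block = data[i:i + self.block_size]
            digest = hashlib.sha256(block).hexdigest()
            self.blocks.setdefault(digest, block)   # keep only the first copy
            recipe.append(digest)
        return recipe

    def read(self, recipe: list[str]) -> bytes:
        """Reassemble the original data from its list of block digests."""
        return b"".join(self.blocks[d] for d in recipe)

    def physical_bytes(self) -> int:
        """Capacity actually consumed by unique blocks."""
        return sum(len(b) for b in self.blocks.values())


store = DedupStore(block_size=4096)

# Ten hypothetical desktop images with identical content (two distinct blocks).
image = b"A" * 4096 + b"B" * 4096
recipes = [store.write(image) for _ in range(10)]

logical = 10 * len(image)          # bytes the hosts believe they wrote
physical = store.physical_bytes()  # bytes actually stored
ratio = logical / physical
print(f"logical={logical} physical={physical} ratio={ratio:.0f}:1")
```

Because every image in this contrived input is identical, the reduction is exactly ten to one. Real results depend on the data, as noted above.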

The Cloud

The cloud has become a target for the storing of some enterprise data. There are three primary approaches to cloud storage: private, public, and hybrid. A private cloud often uses internal storage resources within the enterprise, or dedicated resources from a cloud service provider. Public cloud storage is delivered by service providers such as Amazon, Google, and Microsoft and uses a multi-tenant approach that delivers a lower cost solution, but with potentially greater security risk. Public cloud offerings within the enterprise are primarily used for the storing of persistent data, such as backups and archives. A hybrid cloud is an approach that uses both private and public cloud resources, usually storing mission critical data or data that requires higher security on the private cloud, and using the public cloud for data that has less stringent requirements. A storage solution that employs a RESTful API facilitates connectivity to the public cloud with support for Amazon Web Services S3 or other public cloud service providers.

On the EMA RADAR for SDS

The participating vendors in the EMA Radar for Software-defined Storage report fall into two distinct categories. Four of the nine vendors scored in the Value Leader category. These four solutions are capable of abstracting a wide range of storage while delivering a complete set of storage services. The other five vendors fall into the Strong Value category.
