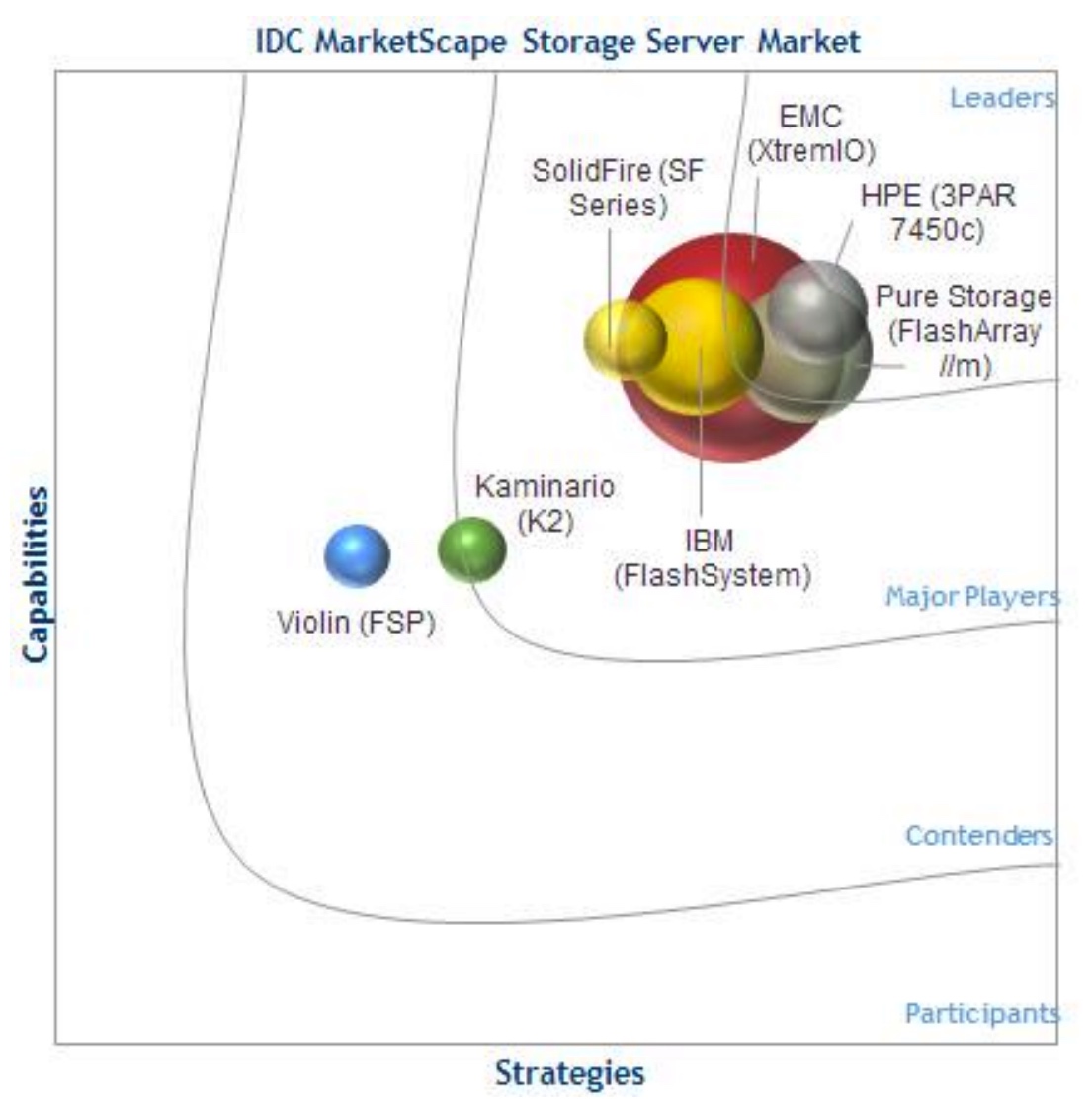

Three Leaders in All-Flash Arrays for IDC

HPE 3PAR 7450c, Pure Storage FlashArray//m and EMC XtremIO

This is a Press Release edited by StorageNewsletter.com on January 20, 2016 at 3:20 pm. This is an abstract of IDC MarketScape, Worldwide All-Flash Array, 2015-2016 Vendor Assessment (26 pages, $15,000), a report from International Data Corporation, written by analysts Eric Burgener, Ashish Nadkarni and Eric Sheppard

IDC MarketScape Worldwide All-Flash Array Vendor Assessment

(Source: IDC, 2015)

IDC Opinion

The all-flash array (AFA) market is beginning to transition from an emerging market to a more mature one. Among the relevant vendors evaluated in this study, a relatively comprehensive set of enterprise-class capabilities is broadly available, and it is clear that customers are thinking more and more about these platforms’ ability to serve as general-purpose primary storage platforms hosting a variety of mixed workloads.

All participating vendors offer solutions that easily support hundreds of thousands of IO/s, consistently deliver sub-millisecond latencies, and can support at least half a petabyte of effective storage capacity.

That said, certain design decisions and characteristics differentiate vendors, particularly in the areas of system architecture (scale-up, scale-out, or some combination of the two), media packaging (custom flash modules, or CFMs, versus SSDs), multitenancy, support for replicated configurations, and the ability to integrate well into existing datacenter environments.

There is less of a spread between vendors' existing platform capabilities today than there is between their road maps and their visions of how they will succeed over the next several years.

Regardless of how they position their products externally, in IDC's view, each vendor has uniquely distinguished itself in a certain area. All of the AFAs from the vendors in this study can confidently be deployed today as general-purpose primary storage arrays to deliver flash performance and enterprise availability/reliability, giving customers the opportunity to transform both their IT infrastructure and their business.

Prospective customers should look to the following second-order considerations to select the solution that best meets their requirements and make sure that they evaluate the consonance of vendors’ road maps with their own vision of future enterprise storage requirements:

- The scalability and consistent high performance of EMC XtremIO's flash-optimized snapshot implementation for copy data management, combined with its unprecedented rate of growth, which has propelled it to the leading market share position by revenue.

- The data services comprehensiveness and maturity of HPE's 3PAR StoreServ 7450c, combined with its best-in-class ability to integrate seamlessly with other platforms for virtualization, data movement, backup, DR, and archive under a single consistent management environment.

- The low latency, storage density, and power efficiency of the IBM FlashSystem based on its use of a CFM-based design (called IBM MicroLatency modules).

- The best-in-class combination of scale-up and scale-out storage architecture technology to deliver consistent performance and flexibility in the Kaminario K2.

- The comprehensive ability to cost-effectively and nondisruptively perform in-place upgrades across technology generations of the Pure Storage FlashArray//m, along with its unparalleled ability (from a quantitative point of view) to please its customers, as demonstrated by its net promoter score (NPS).

- The QoS capabilities (implemented within a scale-out storage architecture), the ability to support multitenancy, and the top-end scalability of the SolidFire SF Series.

- The industry-leading single-node performance, storage density, power efficiency, and native replication portfolio of Violin Memory's Flash Storage Platform (FSP).
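The net promoter score cited for Pure Storage is a standard customer-loyalty metric: respondents rate on a 0 to 10 scale how likely they are to recommend a vendor, ratings of 9 or 10 count as promoters, 0 through 6 as detractors, and NPS is the percentage of promoters minus the percentage of detractors. The minimal sketch below computes it; the survey ratings are made-up illustrative values, not Pure Storage or IDC data.

```python
from typing import Sequence


def net_promoter_score(ratings: Sequence[int]) -> float:
    """Return NPS (from -100 to +100) for 0-10 likelihood-to-recommend ratings."""
    if not ratings:
        raise ValueError("need at least one rating")
    if any(r < 0 or r > 10 for r in ratings):
        raise ValueError("ratings must be on the 0-10 scale")
    promoters = sum(1 for r in ratings if r >= 9)   # ratings of 9 or 10
    detractors = sum(1 for r in ratings if r <= 6)  # ratings of 0 through 6
    # Passives (7 or 8) count toward the total but neither add nor subtract.
    return 100.0 * (promoters - detractors) / len(ratings)


# Hypothetical survey: 7 promoters, 2 passives, 1 detractor -> 70% - 10%
sample = [10, 10, 9, 9, 9, 10, 9, 8, 7, 5]
print(net_promoter_score(sample))  # 60.0
```

Because passives dilute both percentages, a vendor can raise its NPS either by converting passives into promoters or by eliminating detractors.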

IDC MarketScape Vendor Inclusion Criteria

To be included in this IDC MarketScape analysis, an AFA vendor must meet the following criteria:

- Have revenue generated from an AFA product line in 1H15 (prior to July 1, 2015)

- Use a multi-controller architecture or a scale-out design with nondisruptive failover (systems that require HA node pairs are excluded)

- Meet IDC's definition of an AFA: the system must have hardware that is unique to the all-flash configuration within the vendor's product line (other than just the choice of populating the array with all flash media), and the array's inability to support HDDs must be more than just a marketing limitation; note that all-flash configurations of hybrid flash arrays (HFAs), referred to as HFA/As, do not qualify

- Use only CFMs or only SSDs to meet both performance and capacity requirements within the array

- Provide at least two customer references to whom IDC can speak

Note that a number of vendors market their HFA/A products as AFAs, but because those products do not meet IDC's definition of an AFA, they have not been included. IDC's review of unpublished performance testing data, using more realistic workloads (not 'hero' tests) combined with simultaneous data services, indicates a noticeable performance difference between AFAs and HFAs, particularly with respect to latency consistency as a system is scaled up toward its maximum throughput. This area is evolving, with several key vendors starting to narrow the performance gap between their HFA/A offerings and what IDC defines as true AFA products, and IDC will continue to keep a close eye on developments in this area.

Essential Buyer Guidance

The AFA market has undergone a significant evolution in the past year, moving away from dedicated application deployment models and toward mixed workload consolidation. During that time, the major players in this space have all enhanced their offerings to provide the set of enterprise storage management features necessary for these arrays to be used as general-purpose primary storage arrays hosting many applications. A few vendors are still missing a feature here or there (such as deduplication or a native replication capability), but these features are on their near-term road maps. Vendors successfully selling AFAs to small and medium-sized enterprises have already seen their systems commonly used as general-purpose primary storage arrays, while more and more large enterprises are evaluating AFAs' multitenant capabilities prior to initial purchase.

Table stakes in the AFA market include the following functionalities, which are either already available or on near-term road maps:

- Stellar flash performance, easily providing hundreds of thousands or more IO/s at consistent sub-millisecond latencies, combined with effective flash capacity of at least half a petabyte

- Wide striped data layout for improved performance consistency, better reliability, and faster recovery in the event of storage device failures

- Use of a variety of storage efficiency technologies that do not preclude systems from still delivering consistent sub-millisecond performance across their entire throughput range, including inline data reduction, thin provisioning, delta differentials for snapshot-based replication, and broad support for VAAI-based unmap functionality, among others

- Flash-optimized RAID-6 implementations for write minimization and capacity maximization

- All or most data services included with the array base price (except for, in some cases, encryption and replication)

- Effective price per gigabyte for most vendors ranging from $1/GB to $2/GB (assuming data reduction ratios of 4:1), supported by the increasing use of MLC flash media and a move toward 3D NAND TLC in the near term

- Broad API support to aid in enterprise infrastructure integration – including VAAI, VADP, SRM, VSS, RMAN, SNMP, and RESTful APIs and/or scriptable CLIs for management – with VVOLs and Hyper-V ODX generally being a near-term road map item (if not already available)

- Field-proven availability of five nines and better, closing in on six nines, based on real-time monitoring of system uptime and usage statistics, data that will increasingly be used not only by vendors but also directly by customers
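Two of the figures in the list above lend themselves to quick sanity checks. An effective price per gigabyte is the price per usable gigabyte divided by the data reduction ratio, so $1/GB to $2/GB effective at 4:1 implies roughly $4/GB to $8/GB before reduction; likewise, availability expressed in "nines" converts directly into allowed downtime per year. The sketch below assumes a uniform reduction ratio and a 365-day year; the 125 TB usable figure is hypothetical, and real reduction ratios vary by workload.

```python
# Back-of-the-envelope checks for figures quoted in the table-stakes list.
# Assumptions: a uniform data reduction ratio and a 365-day year.
MINUTES_PER_YEAR = 365 * 24 * 60


def raw_price_per_gb(effective_price: float, reduction_ratio: float) -> float:
    """Price per usable GB before data reduction, given the effective $/GB."""
    return effective_price * reduction_ratio


def effective_capacity_tb(usable_tb: float, reduction_ratio: float) -> float:
    """Effective (post-reduction) capacity presented to hosts."""
    return usable_tb * reduction_ratio


def downtime_minutes_per_year(nines: int) -> float:
    """Maximum yearly downtime at an availability of `nines` nines (5 -> 99.999%)."""
    return MINUTES_PER_YEAR * 10 ** (-nines)


for eff in (1.0, 2.0):
    print(f"${eff:.2f}/GB effective at 4:1 -> ${raw_price_per_gb(eff, 4.0):.2f}/GB before reduction")
# A hypothetical 125 TB of usable flash at 4:1 reaches the half-petabyte mark.
print(f"125 TB usable -> {effective_capacity_tb(125, 4.0):.0f} TB effective")
for n in (5, 6):
    print(f"{n} nines -> {downtime_minutes_per_year(n):.2f} minutes of downtime per year")
```

Five nines works out to about 5.3 minutes of downtime per year and six nines to roughly half a minute, which is why the step from one to the other is meaningful for mission-critical workloads.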

Despite the broad commonality in enterprise storage management capabilities, there are still a few areas where vendor offerings show significant differentiation. Storage architecture is one of those areas. There are classic dual-controller architecture systems (such as Pure Storage FlashArray//m and Violin Memory Flash Storage Platform), and there are systems built around classic scale-out designs (EMC XtremIO and SolidFire SF Series). As noted in Evaluating Scale-Out and Scale-Up Architectural Differences for Primary Storage Environments (IDC #256932, June 2015), more and more storage systems across all areas of enterprise storage are blending aspects of both storage architectures to improve scalability and flexibility. IBM (FlashSystem) can cluster up to four dual-controller nodes, allowing workloads to be spread across all nodes in the cluster (although workload redistribution when nodes are added requires manual intervention). Kaminario (K2) combines elements of both scale-up and scale-out, allowing up to eight dual-controller nodes (each of which supports internal capacity expansion) to be clustered together under a true single-system image, complete with fully distributed data and metadata. HPE can scale a single system, such as a 3PAR StoreServ 7450c, in terms of both controller nodes (up to four) and capacity within a single-system image, and can then further cluster nodes together under a single management (but not system) image.

Other architectural design decisions in which systems differ include the use of CFMs instead of SSDs and whether a system uses active/active or active/passive controllers. Vendors using CFMs argue that increased visibility at the individual flash cell level enables more efficient and less impactful garbage collection, but with most production systems in the field still running at under 30% utilization, it is difficult to prove this from real-world customer experience. IDC notes that AFAs that use CFMs tend to lead the market in storage density (TB/U) and power efficiency. Higher storage density can make for more compact systems (less floor or rack space required), but all evaluated systems easily support at least half a petabyte of effective capacity, with near-term growth paths into the petabyte range and beyond. Active/active and active/passive controller implementations will have different performance impacts in post-failure scenarios.
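Why the two controller designs diverge after a failure can be illustrated with a deliberately simplified capacity model. The sketch assumes two identical controllers with a hypothetical per-controller IO/s ceiling, ignores cache mirroring, path failover time, and every vendor-specific detail, and is not a description of any evaluated product. In an active/active pair, both controllers serve IO, so a healthy system can deliver up to twice one controller's throughput; after a failure, the survivor is capped at its own ceiling. In an active/passive pair, only one controller ever serves IO, so a failover changes nothing about the deliverable rate.

```python
from dataclasses import dataclass


@dataclass
class ControllerPair:
    per_controller_iops: float  # hypothetical ceiling of ONE controller
    active_active: bool

    def max_normal_iops(self) -> float:
        # Active/active: both controllers serve IO; active/passive: only one does.
        return self.per_controller_iops * (2 if self.active_active else 1)

    def served_before_failure(self, demand_iops: float) -> float:
        return min(demand_iops, self.max_normal_iops())

    def served_after_failure(self, demand_iops: float) -> float:
        """IO/s still served once one controller fails (simplified model)."""
        # Either way, the single surviving controller carries all the load.
        return min(self.served_before_failure(demand_iops), self.per_controller_iops)


aa = ControllerPair(per_controller_iops=200_000, active_active=True)
ap = ControllerPair(per_controller_iops=200_000, active_active=False)
demand = 300_000  # hypothetical workload
for name, pair in (("active/active", aa), ("active/passive", ap)):
    before = pair.served_before_failure(demand)
    after = pair.served_after_failure(demand)
    print(f"{name}: {before:,.0f} IO/s before failure, {after:,.0f} after")
```

Under these assumptions, the active/active pair serves 300,000 IO/s when healthy but drops to 200,000 after a failure, while the active/passive pair delivers 200,000 in both states. Keeping an active/active system below half of its combined ceiling is one way to avoid post-failure degradation in this model.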

Another area where significant differences exist today between vendors is in QoS controls. A premier vendor in this space, SolidFire, sets the bar by providing a complete set of controls on the array itself (ability to set maximum, minimum, and burst limits for IO/s, QoS evenly balanced across all available system resources, and admission control) as well as extensive integration with QoS controls on third-party platforms, like vSphere. Most AFAs in use today run at relatively low levels of utilization and with few applications, minimizing ‘noisy neighbor’ concerns.

However, as customers start to use these systems more and more as general-purpose primary storage arrays, contention for system resources will become a real concern. As a group, service providers using AFAs tend to run them at the highest levels of utilization, and SolidFire’s strong position in QoS is due in large part to its initial focus on the service provider market (which has since been expanded to more broadly target enterprise users as well). Other vendors’ road maps include QoS features as part of a general set of enhancements to improve these arrays’ ability to support dense multitenancy while continuing to meet performance and security requirements. Note that the ability to apply data services selectively at the volume level (instead of just at the system level) is another important multitenancy requirement.
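The QoS controls described above (minimum, maximum, and burst IO/s limits, plus admission control) can be sketched with per-volume credit accounting. This is not SolidFire's implementation: the class names, the one-second accounting interval, the credit cap, and the rule that unused headroom below the maximum is banked for later bursts are all assumptions made for illustration. Admission control here simply refuses a new volume whose minimum guarantee would push the sum of committed minimums past the system's capacity; enforcing those minimums under contention and balancing QoS across system resources are not modeled.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class VolumeQoS:
    name: str
    min_iops: int    # guaranteed floor (used only by admission control here)
    max_iops: int    # sustained ceiling
    burst_iops: int  # short-term ceiling, paid for with banked credits
    credit_cap: int  # hypothetical limit on banked burst credits (IOs)
    credits: int = 0

    def allowed_this_second(self) -> int:
        # Above max_iops only while banked credits last, never above burst_iops.
        return min(self.burst_iops, self.max_iops + self.credits)

    def account(self, requested: int) -> int:
        """Serve up to the allowed rate for one second and update credits."""
        served = min(requested, self.allowed_this_second())
        if served < self.max_iops:
            # Unused headroom below max_iops is banked for later bursts.
            self.credits = min(self.credit_cap, self.credits + self.max_iops - served)
        else:
            # Bursting above max_iops spends banked credits.
            self.credits -= served - self.max_iops
        return served


@dataclass
class Cluster:
    capacity_iops: int
    volumes: List[VolumeQoS] = field(default_factory=list)

    def admit(self, vol: VolumeQoS) -> bool:
        """Admission control: refuse a volume whose minimum cannot be guaranteed."""
        committed = sum(v.min_iops for v in self.volumes)
        if committed + vol.min_iops > self.capacity_iops:
            return False
        self.volumes.append(vol)
        return True


cluster = Cluster(capacity_iops=100_000)
db = VolumeQoS("db", min_iops=50_000, max_iops=60_000, burst_iops=80_000, credit_cap=100_000)
print(cluster.admit(db))  # True
print(cluster.admit(VolumeQoS("vdi", 60_000, 70_000, 90_000, 50_000)))  # False
print([db.account(r) for r in (20_000, 80_000, 80_000, 80_000)])  # [20000, 80000, 80000, 60000]
```

In the usage above, a quiet first second banks 40,000 credits, which let the volume burst to 80,000 IO/s for two seconds before it falls back to its 60,000 IO/s ceiling; the second volume is refused because its minimum would overcommit the cluster.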

Over the past three to four years, most AFAs in the enterprise were initially purchased specifically for a single application – most often a database or a VDI environment. Customers love the performance and ease of use that AFAs bring to the table (storage performance tuning time immediately drops to zero), and 100% of the customers interviewed by IDC about their AFA experiences expressed an interest in moving more workloads to flash over time.

This has driven interest in array-based data services, and replication is key. AFAs are in widespread use with mission-critical application environments, and customers need DR solutions for these configurations. The replication technology portfolio is a strong differentiator among vendors today, with some supporting replication only through the purchase of separate products, some offering just basic snapshot and/or asynchronous replication, and others offering a full replication portfolio, including snapshot, continuous asynchronous, and synchronous replication as well as stretched cluster support, data migration between disparate systems, and other features. A vendor's portfolio strategy is an important consideration, and vendors that can replicate between their AFAs (at a primary site) and less-performant but potentially less-expensive HFAs (at secondary sites) have an important differentiator.

When making buying decisions, it is important to understand the systems’ future intended use. All systems can deliver extremely high performance and low latency for a single application, but a system’s ability to support key multitenant features should be considered for customers that plan to host multiple workloads, thereby maximizing the ROI that derives from the secondary economic benefits of flash deployment at scale. IDC believes that mixed workload consolidation is the future of AFAs and, by 2019, they will dominate primary storage spend in the enterprise. We also expect those systems that best support the features and flexibility that multitenancy requires to be the near-term winners.

Closing Guidance Comments

It is important to take note of how the AFA vendors got to where they are now. Six of the seven (EMC, IBM, Kaminario, Pure Storage, SolidFire, and Violin) started with a blank sheet of paper to create systems that were specifically optimized for flash and, in the process, they were able to create some interesting differentiators: EMC's flash-optimized snapshot implementation for copy data management, IBM's excellent power efficiency, Kaminario's combination of scale-up and scale-out in a single system, Pure's comprehensive ability to cost-effectively and nondisruptively perform in-place upgrades across technology generations, SolidFire's QoS, and Violin's excellent single-node performance.

HPE came at this problem from a different point of view, taking the more mature 3PAR operating environment (which, interestingly, had originally been designed around the same wide data striping that the blank-sheet vendors also adopted) and flash-optimizing it over time. HPE's strength is in the comprehensiveness and maturity of its data services offerings, allowing customers to take advantage of flash performance while continuing to work within the familiar 3PAR operating environment for management. For some enterprises, particularly those that care about data services maturity and the ability to integrate with and move data between AFAs and other non-AFA storage platforms, these could be important features.
