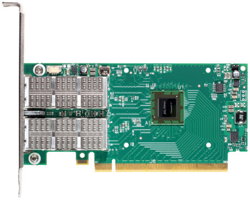

Mellanox ConnectX-4 Adapter, 100Gb IB Interconnect Solution

Doubling throughput of previous generation

This is a Press Release edited by StorageNewsletter.com on November 21, 2014 at 2:42 pm

Mellanox Technologies, Ltd. announced the ConnectX-4 single/dual-port 100Gb/s Virtual Protocol Interconnect (VPI) adapter, the final piece to a complete end-to-end 100Gb IB interconnect solution.

Doubling the throughput of the previous generation, the adapter delivers the consistent high performance and low latency required for HPC, cloud, Web 2.0 and enterprise applications to process and fulfill requests in real time.

The ConnectX-4 VPI adapter delivers 10, 20, 25, 40, 50, 56 and 100Gb/s throughput, supporting both the IB and Ethernet standard protocols, and offers the flexibility to connect any CPU architecture – x86, GPU, POWER, ARM, FPGA and more. With performance at 150 million messages per second, latency of 0.7µs, and smart acceleration engines such as RDMA, GPUDirect and SR-IOV, ConnectX-4 will enable efficient compute and storage platforms.

“Large-scale clusters have incredibly high demands and require extremely low latency and high bandwidth,” said Jorge Vinals, director, MN Supercomputing Institute of the University of Minnesota. “Mellanox’s ConnectX-4 will provide us with the node-to-node communication and real-time data retrieval capabilities we needed to make our EDR IB cluster the first of its kind in the U.S. With 100Gb/s capabilities, the EDR IB large-scale cluster will become a critical contribution to research at the University of MN.”

“IDC expects the use of 100Gb/s interconnects to begin ramping up in 2015,” said Steve Conway, research VP for high-performance computing, IDC. “Most HPC data centers need high bandwidth, low latency and strong overall interconnect performance to remain competitive in today’s increasingly data-driven world. The introduction of 100Gb/s interconnects will help organizations keep up with the escalating demands for data retrieval and processing, and will enable unprecedented performance on mission-critical applications.”

“Cloud infrastructures are becoming a more mainstream way of building compute and storage networks. More corporations and applications target the vast technological and financial improvements that utilization of the cloud offers,” said Eyal Waldman, president and CEO, Mellanox. “With the exponential growth of data, the need for increased bandwidth and lower latency becomes a necessity to stay competitive. The same applies to the high-performance computing and the Web 2.0 markets. We have experienced the pull for 100Gb/s interconnects for over a year, and now with ConnectX-4, we will have a full end-to-end 100Gb/s interconnect that will provide the lowest latency, highest bandwidth and return-on-investment in the market.”

ConnectX-4 adapters provide enterprises with a scalable and fast solution for cloud, Web 2.0, HPC and storage applications. They support the RoCE v2 (RDMA) specification; a variety of overlay network technologies, including NVGRE (Network Virtualization using GRE), VXLAN (Virtual Extensible LAN), GENEVE (Generic Network Virtualization Encapsulation) and MPLS (Multiprotocol Label Switching); and storage offloads such as T10-DIF and RAID offload, and more.

ConnectX-4 adapters will begin sampling with select customers in Q1 2015. With ConnectX-4, Mellanox will offer an end-to-end 100Gb IB solution, including the EDR 100Gb Switch-IB IB switch and LinkX 100Gb copper and fiber cables. For Ethernet-based data centers, ConnectX-4 provides link speed options of 10, 25, 40, 50 and 100Gb/s, with copper and fiber cable options supporting these speeds. Leveraging Mellanox network adapters, cables and switches, users can ensure reliability, application performance and high ROI.

“For HPC and for big data, latency is a key contributor to application efficiency, and users pay more and more attention to time-to-solution,” said Pascal Barbolosi, VP extreme computing, Bull SAS. “Bull is ready to incorporate Mellanox’s new end-to-end 100Gb/s interconnect solution in the bullx server ranges, to deliver ever more performance and continue to reduce latency.”

“A basic requirement for big data applications in business and scientific computing today is to scale with the highest performance and efficiency,” said Mike Vildibill, VP product management and emerging technologies, DDN. “Mellanox’s ConnectX-4 100Gb/s adapter gives our customers the power to scale mission critical applications in order to reduce application runtimes.”

“A huge need for today’s HPC compute-intensive applications is being able to scale with the highest performance and efficiency,” said Ingolf Staerk, senior director global HPC office, Fujitsu Ltd. “Mellanox’s ConnectX-4 adapter will give our customers the power to scale at the speed of 100Gb/s and reduce their application runtime with Primergy HPC solutions.”

“Through the OpenPower Foundation, IBM and Mellanox have been working closely together to drive much-needed innovation forward, particularly for the HPC space,” said Brad McCredie, VP, power development, IBM, and president, OpenPower. “Data demands have created an unbelievable amount of bottlenecks because networks have not been able to keep up. Mellanox’s ConnectX-4 100Gb adapter combined with IBM Power Systems and all OpenPower compatible systems will meet the needs of today’s and tomorrow’s data demands.”

“Mellanox’s ConnectX-4 will make an immediate impact for our customers and for our business with the 100Gb/s interconnect performance,” said Leijun Hu, VP, Inspur Group. “Integrating the adapter into our next generation blade server system will allow us to have the flexibility and scalability we didn’t have before to make our customer’s infrastructure run as efficient and productive as possible.”

“Interconnect performance capabilities are critical to compute-intensive applications which require ultra-low latency and a high rate of message communication in order to deliver faster results,” said Bob Galush, VP, Lenovo. “Our systems today have been designed with Mellanox’s ConnectX-4 100Gb/s capable adapters in mind and will easily and quickly integrate into our solution designs.”

“Distributed memory systems have seen considerable growth over the past few years, increasing in both scale and complexity to serve a user base that prioritizes performance and efficiency,” said Jorge Titinger, president and CEO, SGI. “Mellanox’s ConnectX-4 will allow our customers to scale their systems at the speed of 100Gb/s, providing customers with a low latency network that drastically reduces application runtime and accelerates time to insight.”

“Data demands have created an unbelievable amount of bottlenecks because networks have not been able to keep up,” said Chaoqun Sha, VP of technology, Sugon. “Mellanox’s ConnectX-4 100Gb/s adapter will allow our new system TC5600 to meet the needs of today’s and tomorrow’s data demands.”

“Supermicro’s end-to-end Green Computing server, storage and networking solutions provide highly scalable, high performance, energy efficient server building blocks to support the most compute and data intensive supercomputing applications,” said Tau Leng, VP of HPC, Super Micro Computer, Inc. “Our collaborative efforts with Mellanox to integrate ConnectX-4 100Gb/s adapters across our extensive range of solutions advances HPC to the next level for scientific and research communities with increased flexibility, scalability, lower latency and higher bandwidth interconnectivity.”
